Ongoing activities

- Looper tagging & pad-parallel tagging

- Retrain NNs to exclude loopers

- pT & η differential studies

1. Looper tagging

- Both looper tagging and pad-parallel tagging work

- Same algorithm, different "cuts" -> optimization in progress (smallest possible rejected volume for the largest possible fake reduction)

- Total rejected TPC volume ~15-16%

- Weakness for larger loopers -> needs to be fixed with the applied cuts
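
The cut optimization described above can be pictured as a scan: for each cut value, compute the rejected volume fraction and the fake reduction, then keep the cut with the largest fake reduction inside a volume budget. The following is a minimal sketch on made-up toy numbers, not the actual tagger. The per-region `score`, `volume` and `fakes` fields, the helper names and the 16% budget are all assumptions for illustration.

```python
# Hypothetical toy input: each candidate region has a tagger score, the
# fraction of the TPC volume it covers and the number of fakes inside it.
regions = [
    {"score": 0.9, "volume": 0.05, "fakes": 40},
    {"score": 0.7, "volume": 0.06, "fakes": 25},
    {"score": 0.4, "volume": 0.10, "fakes": 10},
    {"score": 0.2, "volume": 0.20, "fakes": 5},
]
TOTAL_FAKES = 100  # assumed total number of fakes in the toy sample


def scan_cut(regions, total_fakes, cut):
    """Return (rejected volume fraction, fake reduction) for one cut value."""
    rejected = [r for r in regions if r["score"] >= cut]
    volume = sum(r["volume"] for r in rejected)
    fakes_removed = sum(r["fakes"] for r in rejected)
    return volume, fakes_removed / total_fakes


def best_cut(regions, total_fakes, cuts, max_volume):
    """Pick the cut with the largest fake reduction within the volume budget."""
    best = None
    for cut in cuts:
        volume, reduction = scan_cut(regions, total_fakes, cut)
        if volume <= max_volume and (best is None or reduction > best[2]):
            best = (cut, volume, reduction)
    return best


# Scan a few cut values with an assumed budget of 16% rejected volume.
result = best_cut(regions, TOTAL_FAKES, [0.1, 0.3, 0.5, 0.8], max_volume=0.16)
print(result)
```

Turning the volume budget into a parameter makes the trade-off explicit: loosening it admits lower cuts that remove more fakes at the cost of more rejected volume.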

2. pT & η differential studies

- Checked performance with and without looper tagging

- Results make sense and show that the network gets more "confused" when loopers are present
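
A differential comparison of this kind amounts to computing the efficiency per bin twice: once on all entries and once with the tagged entries dropped. The sketch below is a toy illustration only. The bin edges, the `tagged` flags and the helper `binned_efficiency` are assumptions and do not come from the QA task; the same function can be applied to η by passing η values and edges.

```python
from bisect import bisect_right


def binned_efficiency(values, matched, edges):
    """Efficiency per bin (matched / total); None for empty bins."""
    n_bins = len(edges) - 1
    total = [0] * n_bins
    passed = [0] * n_bins
    for v, m in zip(values, matched):
        i = bisect_right(edges, v) - 1  # index of the bin containing v
        if 0 <= i < n_bins:  # skip under- and overflow
            total[i] += 1
            passed[i] += int(m)
    return [p / t if t else None for p, t in zip(passed, total)]


# Hypothetical toy sample: pT values, whether each entry was matched, and
# whether the looper tagger flagged it.
pt = [0.10, 0.15, 0.30, 0.40, 1.20, 1.50]
matched = [False, True, True, True, True, True]
tagged = [True, False, False, False, False, False]
edges = [0.0, 0.2, 0.5, 2.0]  # assumed pT bin edges

# Without looper tagging: use every entry.
eff_all = binned_efficiency(pt, matched, edges)

# With looper tagging: drop tagged entries before computing the efficiency.
keep = [i for i, t in enumerate(tagged) if not t]
eff_untagged = binned_efficiency(
    [pt[i] for i in keep], [matched[i] for i in keep], edges
)
print(eff_all, eff_untagged)
```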

3. Retraining NNs

- Next step in the pipeline

- Need to check that training data is read out correctly (some changes were necessary in the QA task)

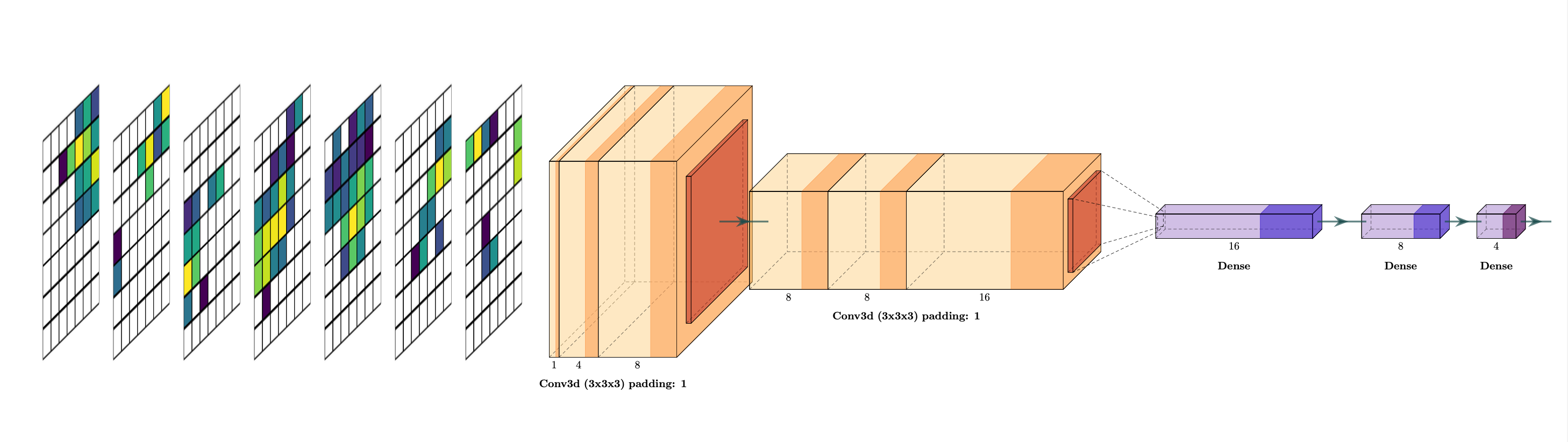

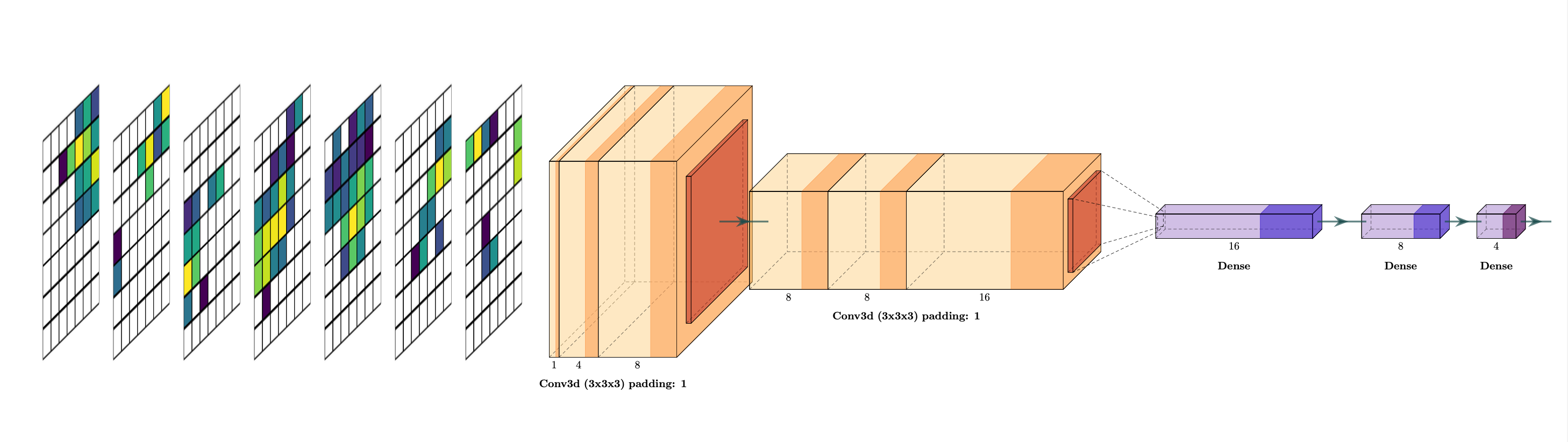

4. Visualization & PyTorch

- Found a nice GitHub repo with some LaTeX code for plotting neural networks

Minutes from private discussion

1. Looper tagger

- Only use pad-parallel tagging

- Only mask regions around individual clusters, not full regions of investigation

- Re-branding of looper tagger -> "Exclusion of identified regions from efficiency calculation"

- Re-branding of pad-parallel tracks -> tracks with high inclination angle
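
The agreed approach, masking only a small window around each identified cluster and excluding those cells from the efficiency calculation, can be sketched as follows. This is an illustrative toy, not the actual implementation. The `(row, pad, time)` integer coordinates, the window half-widths, the restriction of the mask to the cluster's own row and both helper names are assumptions.

```python
def build_mask(tagged_clusters, half_width):
    """Set of (row, pad, time) cells within half_width of any tagged cluster.

    Assumption: the window extends in pad and time only, within the same row.
    """
    d_pad, d_time = half_width
    masked = set()
    for row, pad, time in tagged_clusters:
        for p in range(pad - d_pad, pad + d_pad + 1):
            for t in range(time - d_time, time + d_time + 1):
                masked.add((row, p, t))
    return masked


def efficiency_outside_mask(truth, reco, masked):
    """Fraction of truth clusters outside the mask that were reconstructed."""
    kept = [c for c in truth if c not in masked]
    if not kept:
        return None
    found = sum(1 for c in kept if c in reco)
    return found / len(kept)


# Hypothetical toy event: one tagged cluster, four truth clusters, one of
# which was reconstructed.
tagged = [(10, 50, 200)]
truth = [(10, 50, 200), (10, 51, 201), (10, 60, 200), (11, 50, 200)]
reco = {(10, 60, 200)}

mask = build_mask(tagged, half_width=(1, 2))
eff_masked = efficiency_outside_mask(truth, reco, mask)
eff_unmasked = efficiency_outside_mask(truth, reco, set())
print(len(mask), eff_masked, eff_unmasked)
```

Because the mask is built per cluster, the excluded volume scales with the number of tagged clusters rather than with the size of the full region of investigation.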

2. Cluster writing

- Create our own tpc-native-clusters.root to run the tracking, then produce the tracking QA once that is running

3. Neural network

- Try with only fully connected layers