GPU parameter optimisation

Setup

Measurements were taken on AlmaLinux 9.4 with ROCm 6.3.1 on an AMD Instinct MI50 GPU.

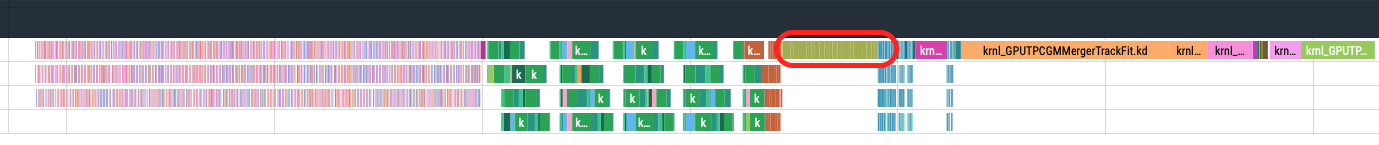

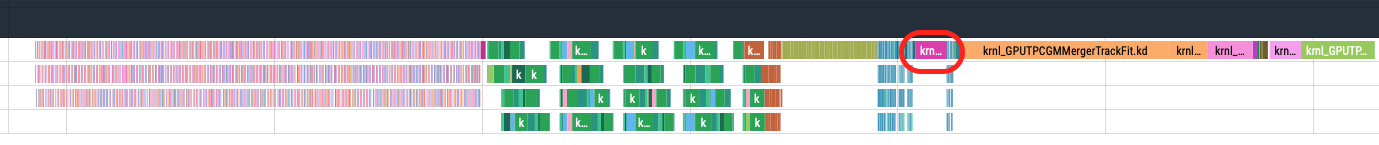

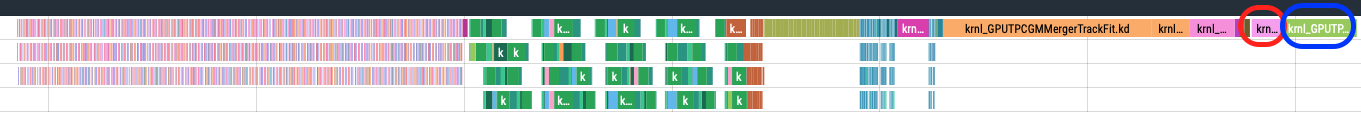

A grid search was executed for the following kernels:

These are the longest-running single-stream kernels. Because they are not executed concurrently, their parameters are independent, which makes them easier to optimise. Each kernel has its own custom search space, since some kernels cannot use large block sizes.
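A per-kernel search space with a block-size cap could be built roughly as follows. This is a minimal sketch under assumptions that are not stated in these notes: block sizes are multiples of the 64-lane GCN wavefront, grid sizes are multiples of the MI50's 60 compute units (consistent with grid sizes such as 180, 240, 900 and 960 quoted below, but not confirmed as the actual construction), and the kernel names and caps are hypothetical.

```python
# Hypothetical sketch: build a (block_size, grid_size) search space per kernel.
# Assumptions: block sizes step by the 64-lane wavefront, grid sizes step by
# the 60 compute units of an MI50, and the per-kernel caps are made up.
from itertools import product

WAVEFRONT_SIZE = 64   # GCN wavefront width on the MI50
N_COMPUTE_UNITS = 60  # compute units on an MI50


def search_space(max_block_size, max_cu_multiple):
    """Return all (block_size, grid_size) pairs up to the given limits."""
    block_sizes = range(WAVEFRONT_SIZE, max_block_size + 1, WAVEFRONT_SIZE)
    grid_sizes = [N_COMPUTE_UNITS * k for k in range(1, max_cu_multiple + 1)]
    return list(product(block_sizes, grid_sizes))


# Hypothetical kernels: one limited to 256 threads per block, one to 1024.
spaces = {
    "small_block_kernel": search_space(max_block_size=256, max_cu_multiple=16),
    "large_block_kernel": search_space(max_block_size=1024, max_cu_multiple=16),
}
```

The cap is the only per-kernel knob here; a real search space may also restrict grid sizes or skip combinations known to fail.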

Each mean time is normalised to the mean time of the current (block_size, grid_size) configuration: a value < 1 means a better configuration, > 1 means a worse one, and = 1 means the same performance as the current configuration.
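The normalisation can be sketched as below. The timing values are made-up placeholders, not measurements, and the optional tolerance in `classify` is an addition of this sketch (measured ratios are rarely exactly 1), not something the notes specify.

```python
# Minimal sketch: normalise mean kernel times to the current configuration.
# All timing values are placeholders, not measured data.
from statistics import mean


def normalise(timings, current):
    """Map each (block_size, grid_size) to mean_time / mean_time(current)."""
    baseline = mean(timings[current])
    return {cfg: mean(ts) / baseline for cfg, ts in timings.items()}


def classify(ratio, tol=0.0):
    """< 1: better than current, > 1: worse, otherwise equal (within tol)."""
    if ratio < 1 - tol:
        return "better"
    if ratio > 1 + tol:
        return "worse"
    return "equal"


# Placeholder per-run times (ms) for three configurations.
timings = {
    (128, 120): [2.0, 2.0],  # hypothetical current configuration
    (64, 240): [1.5, 1.5],
    (256, 60): [2.5, 2.5],
}
norm = normalise(timings, current=(128, 120))
for cfg, ratio in sorted(norm.items()):
    print(cfg, round(ratio, 2), classify(ratio))
```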

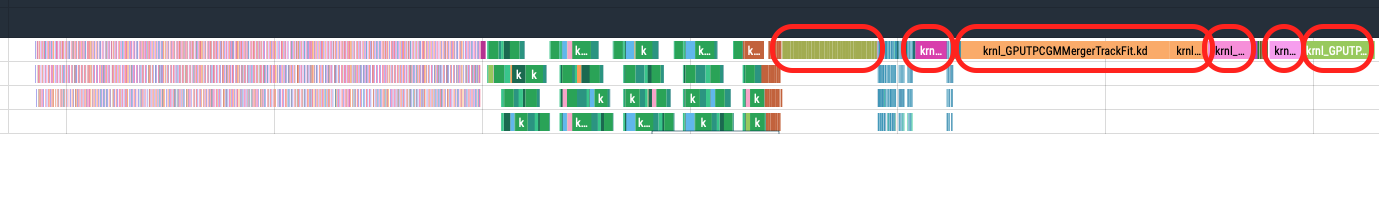

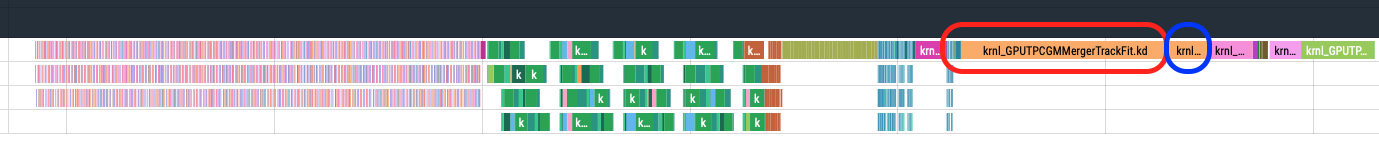

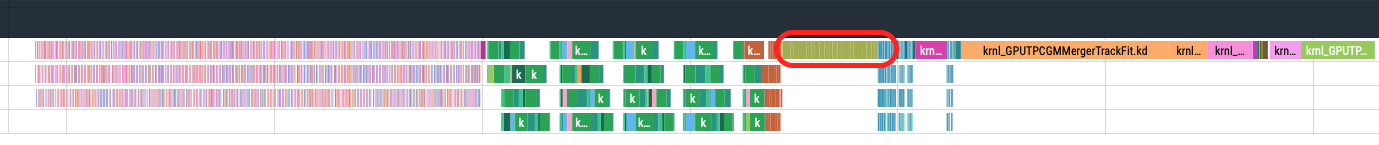

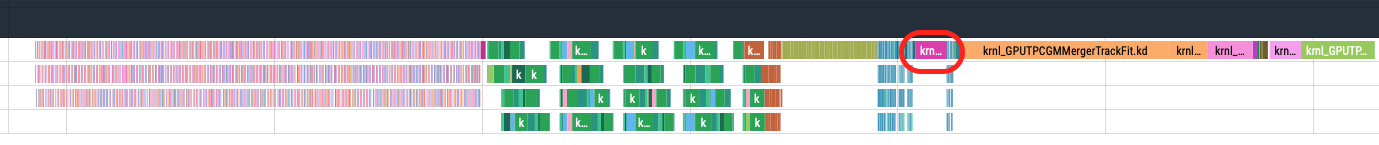

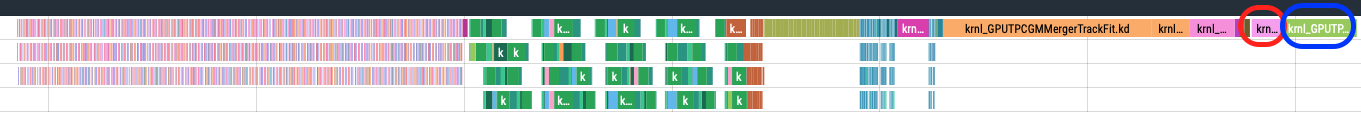

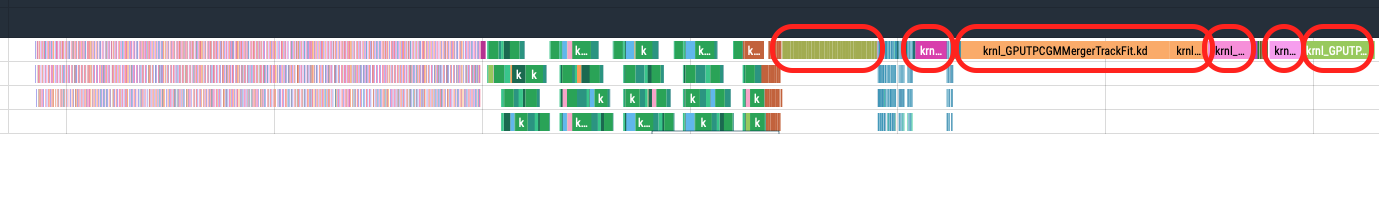

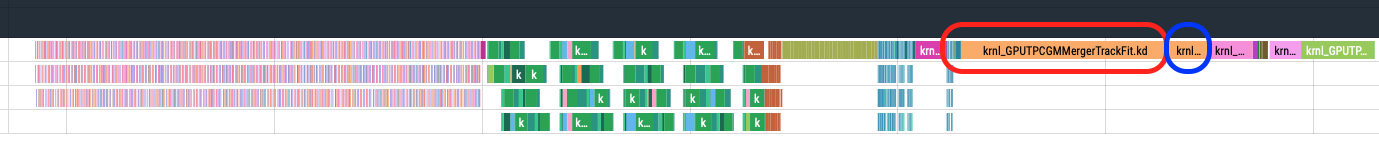

MergerTrackFit

The kernel is executed twice (Merger 1 and Merger 2).

pp

Merger 1

- Low IR: same performance as the current configuration (whose grid size depends on the number of tracks)

- High IR: same as low IR, except for (64,240), which also matches the performance of the current configuration

Merger 2

- Low and high IR (sync): both benefit from larger grid sizes

- High IR (async): 34% faster with larger grid sizes than with the current async configuration

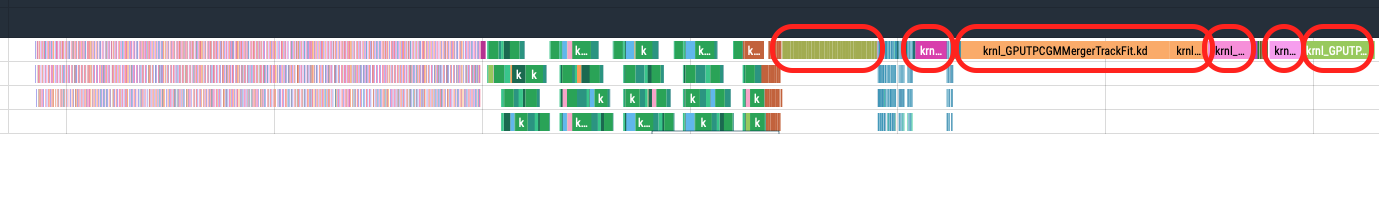

PbPb

Merger 1

- Larger grid sizes almost reach the performance of the current configuration, which uses grid_size * block_size >= n_tracks
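The condition grid_size * block_size >= n_tracks amounts to a ceiling division. The sketch below assumes one thread per track and, for fixed grids that are too small, a grid-stride loop; both are assumptions about the kernel, and the track count is hypothetical.

```python
# Hypothetical sketch: track-dependent grid size vs a fixed grid size.
# Assumes one thread per track and a grid-stride loop when the grid is too small.


def min_grid_size(n_tracks, block_size):
    """Smallest grid_size with grid_size * block_size >= n_tracks."""
    return -(-n_tracks // block_size)  # integer ceiling division


def tracks_per_thread(n_tracks, block_size, grid_size):
    """Loop iterations per thread when a fixed grid strides over all tracks."""
    total_threads = grid_size * block_size
    return -(-n_tracks // total_threads)


n_tracks = 100_000  # hypothetical number of tracks in a time frame
print(min_grid_size(n_tracks, 256))          # track-dependent grid
print(tracks_per_thread(n_tracks, 256, 60))  # fixed grid of 60 blocks
```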

Merger 2

- Low IR: can be 10% faster with larger grid sizes

- High IR: 40% faster with larger grid sizes
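The notes do not say how percentages such as "40% faster" are derived from the normalised mean time r. The two usual readings give different numbers, as this small sketch with a hypothetical r shows: a time reduction of 1 - r, or a throughput gain of 1/r - 1.

```python
# Two readings of "X% faster" for a normalised mean time r = t_new / t_current.


def time_reduction(ratio):
    """Fraction of mean time saved: 1 - t_new / t_current."""
    return 1.0 - ratio


def throughput_gain(ratio):
    """Relative speedup: t_current / t_new - 1."""
    return 1.0 / ratio - 1.0


r = 0.6  # hypothetical normalised mean time
print(f"{time_reduction(r):.0%} less time, {throughput_gain(r):.0%} higher throughput")
```

With r = 0.6 the first reading gives 40% and the second about 67%, so stating which one is meant avoids ambiguity.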

MergerSliceRefit

The kernel is executed 36 times (once per TPC sector).

- pp, low IR: benefits from smaller block sizes

- pp, high IR: benefits from larger grid and block sizes

- PbPb, low IR: better with smaller block sizes

- PbPb, high IR: better with larger grid and block sizes

MergerCollect

pp

Some measurements must be retaken due to unknown problems. Overall, the best performance is given by (64,960), while the current configuration is (512,60).

PbPb

Roughly the same as pp.

MergerFollowLoopers

The best configurations use a grid size of 900 or 960. The current configuration is (256,200).

Compression kernels

Step 0 attached clusters

No significant improvements when changing grid and block sizes.

Step 1 unattached clusters

For high IR, (192,180) performs better than the current configuration (512,120).

Grid search script

Since these kernels are not executed concurrently, their parameters are independent. Hence, a Python script was written to perform multiple grid searches at once:

- A custom grid search space is defined for each kernel

- At each iteration, take a new point, i.e. (block_size, grid_size), from each search space

- Automatically modify the O2 code, plugging each new configuration into the corresponding kernel call

- Compile

- Execute and measure the kernel timings

- Iterate until the largest search space is exhausted. For a kernel whose search space has already been completely explored, no new point is sampled.
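The loop above can be sketched as follows. This is not the actual script: `apply_config`, `build` and `run_and_time` are hypothetical callbacks standing in for patching the O2 sources, recompiling and running the reconstruction. In this sketch a kernel whose space is exhausted is simply left out of later iterations, so it keeps whatever configuration was last written into the code and no new timing is recorded for it.

```python
# Hypothetical sketch of the multi-kernel grid-search loop.
# apply_config, build and run_and_time are placeholder callbacks.


def joint_iterations(spaces):
    """Yield one {kernel: (block_size, grid_size)} dict per iteration.

    Stops when the largest search space is exhausted; kernels whose space
    is already fully explored get no new point in later iterations.
    """
    n_iter = max(len(points) for points in spaces.values())
    for i in range(n_iter):
        yield {k: pts[i] for k, pts in spaces.items() if i < len(pts)}


def grid_search(spaces, apply_config, build, run_and_time):
    """Return {kernel: {config: mean_time}} collected over all iterations."""
    results = {k: {} for k in spaces}
    for configs in joint_iterations(spaces):
        for kernel, cfg in configs.items():
            apply_config(kernel, cfg)  # plug the config into the kernel call
        build()                        # recompile once per iteration
        timings = run_and_time()       # expected shape: {kernel: mean_time}
        for kernel, cfg in configs.items():
            results[kernel][cfg] = timings[kernel]
    return results


# Usage with dummy callbacks (no O2 build involved):
spaces = {"kernel_a": [(64, 60), (128, 60), (256, 60)], "kernel_b": [(64, 120)]}
patched = []
results = grid_search(
    spaces,
    apply_config=lambda kernel, cfg: patched.append((kernel, cfg)),
    build=lambda: None,
    run_and_time=lambda: {"kernel_a": 1.0, "kernel_b": 2.0},
)
```

Three compile-and-run cycles cover both kernels here, instead of four if each search were run separately.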

Pros: multiple grid searches in a single run

Cons: only works effectively with non-concurrent kernels

Next things to do

- Perform grid searches on long kernels that run concurrently with other kernels

- Determine a way to assess which configuration is best after a grid search (instead of just inspecting the heatmaps)

- Create a set of optimal parameters based on beam type and IR

- Explore the best parameters at other IRs

- Explore whether the best parameters change for different datasets with the same beam type and IR
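One possible starting point for the first two items is sketched below: for each (beam type, IR) scenario, pick the configuration with the lowest normalised mean time, but keep the current configuration unless the winner improves on it by a minimum margin. The margin and all values are hypothetical placeholders, not results.

```python
# Hypothetical sketch: best configuration per (beam type, IR) scenario.
# All normalised times below are placeholders, not measurements.


def best_configs(norm_results, current, min_gain=0.05):
    """Return {scenario: (block_size, grid_size)}.

    norm_results: {(beam_type, ir): {(block_size, grid_size): normalised_time}}
    The current configuration is kept unless the best candidate has a
    normalised time of at most 1 - min_gain.
    """
    table = {}
    for scenario, norm in norm_results.items():
        cfg, ratio = min(norm.items(), key=lambda item: item[1])
        table[scenario] = cfg if ratio <= 1.0 - min_gain else current
    return table


norm_results = {
    ("pp", "low"): {(128, 480): 0.98, (256, 240): 1.10},
    ("PbPb", "high"): {(128, 480): 0.60, (256, 240): 0.85},
}
table = best_configs(norm_results, current=(256, 120))
print(table)
```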