Main efforts

- Improve network regression fit

- Improve QA:

- Evaluation on real data

- Created a fully parallelizable version of the clusterization code

1. Improve the network fits.

- Noticed that the GPU CF distribution has many entries at 0.

- Reason 1: Many clusters are small, so the GPU CF sees "everything", especially in the inner 3x3 window.

- Reason 2: Especially for clusters with a true sigma_pad = 0, CoG_pad == Max_pad -> the NN smears the distribution slightly (the same holds for time).

- Fix: The network receives as input the CoG and sigma computed in the conventional way from the inner 3x3 window (no exclusion) and applies a multiplicative correction to them -> improves the network fit.
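A minimal sketch of the conventional inner-3x3 CoG and sigma, followed by a multiplicative correction step. Assumptions, not taken from the notes: the charge map is a plain 2D list indexed `[pad][time]`, the network output is stood in for by a hypothetical tuple `factors`, and the CoG factors scale the offset from the maximum rather than the absolute position (the notes do not say which). The function names are illustrative only.

```python
import math
from typing import List, Tuple


def cog_sigma_3x3(charge: List[List[float]], pad_max: int,
                  time_max: int) -> Tuple[float, float, float, float]:
    """Charge-weighted CoG and sigma (RMS) over the 3x3 window centred on the
    local maximum, with no exclusion. `charge` is indexed [pad][time]
    (assumed layout). Returns (cog_pad, cog_time, sigma_pad, sigma_time)."""
    q_sum = s_p = s_t = s_pp = s_tt = 0.0
    for dp in (-1, 0, 1):
        for dt in (-1, 0, 1):
            p, t = pad_max + dp, time_max + dt
            # Skip neighbours that fall outside the charge map
            if not (0 <= p < len(charge) and 0 <= t < len(charge[p])):
                continue
            q = charge[p][t]
            q_sum += q
            # Accumulate moments relative to the maximum for numerical stability
            s_p += q * dp
            s_t += q * dt
            s_pp += q * dp * dp
            s_tt += q * dt * dt
    if q_sum <= 0.0:
        raise ValueError("no charge in the 3x3 window")
    mean_p, mean_t = s_p / q_sum, s_t / q_sum
    # Variance = <x^2> - <x>^2, clamped at 0 to absorb rounding errors
    sigma_p = math.sqrt(max(s_pp / q_sum - mean_p ** 2, 0.0))
    sigma_t = math.sqrt(max(s_tt / q_sum - mean_t ** 2, 0.0))
    return pad_max + mean_p, time_max + mean_t, sigma_p, sigma_t


def apply_correction(inputs: Tuple[float, float, float, float], pad_max: int,
                     time_max: int,
                     factors: Tuple[float, float, float, float]
                     ) -> Tuple[float, float, float, float]:
    """Apply hypothetical multiplicative factors (standing in for the network
    output). Assumption: CoG factors scale the offset from the maximum,
    sigma factors scale sigma directly."""
    cog_p, cog_t, sig_p, sig_t = inputs
    f_cp, f_ct, f_sp, f_st = factors
    return (pad_max + f_cp * (cog_p - pad_max),
            time_max + f_ct * (cog_t - time_max),
            f_sp * sig_p,
            f_st * sig_t)


# Toy 5x5 map with all charge in the maximum: CoG == Max and sigma == 0
single = [[0.0] * 5 for _ in range(5)]
single[2][2] = 10.0
print(cog_sigma_3x3(single, 2, 2))  # (2.0, 2.0, 0.0, 0.0)
```

Under the offset-scaling assumption, a cluster whose CoG already sits on the maximum (the Reason 2 case) stays there for any factor, so a correction of this form cannot smear it.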

Left: Purely fully connected network; Right: Fully connected network with inner 3x3 CoG and σ input

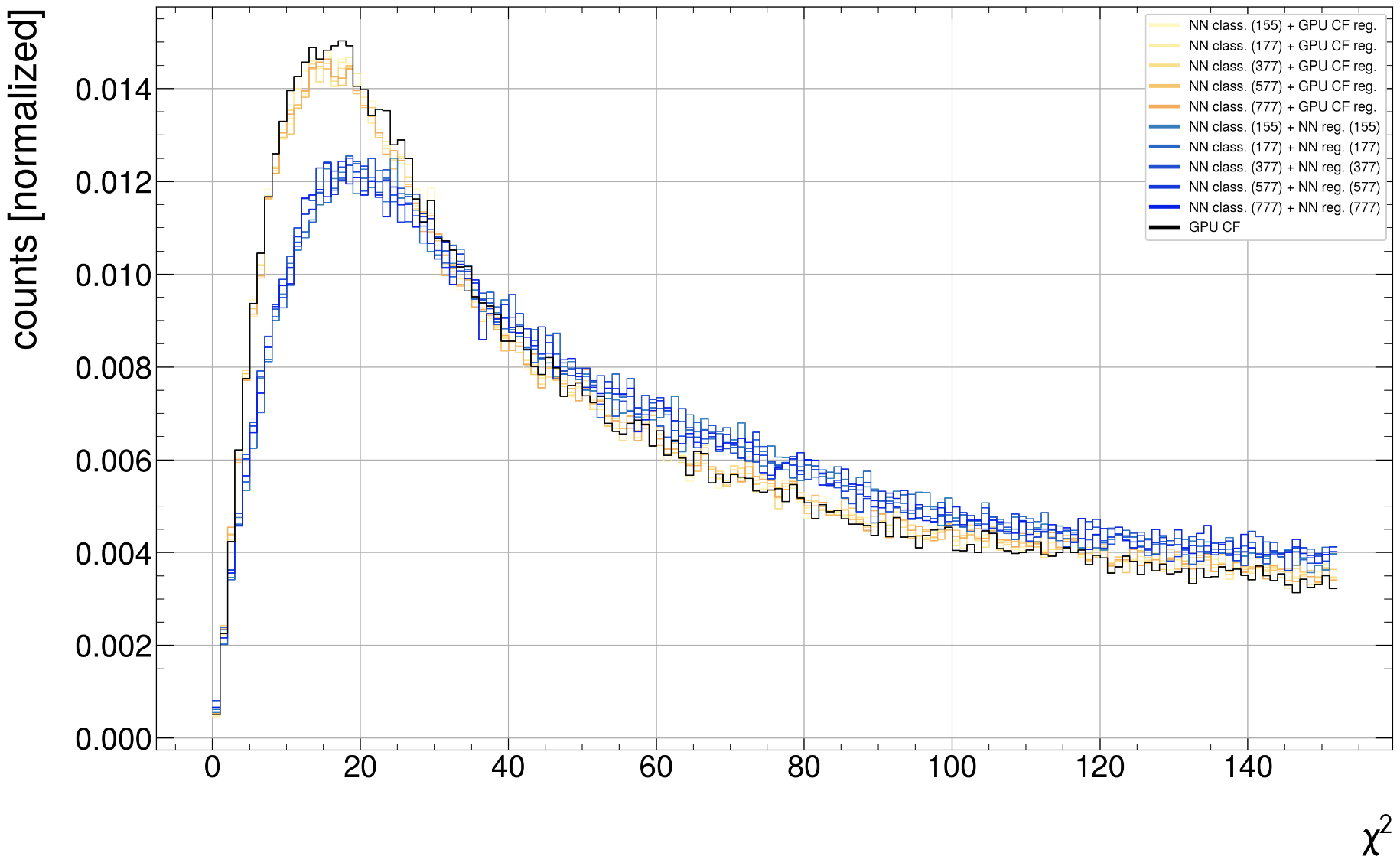

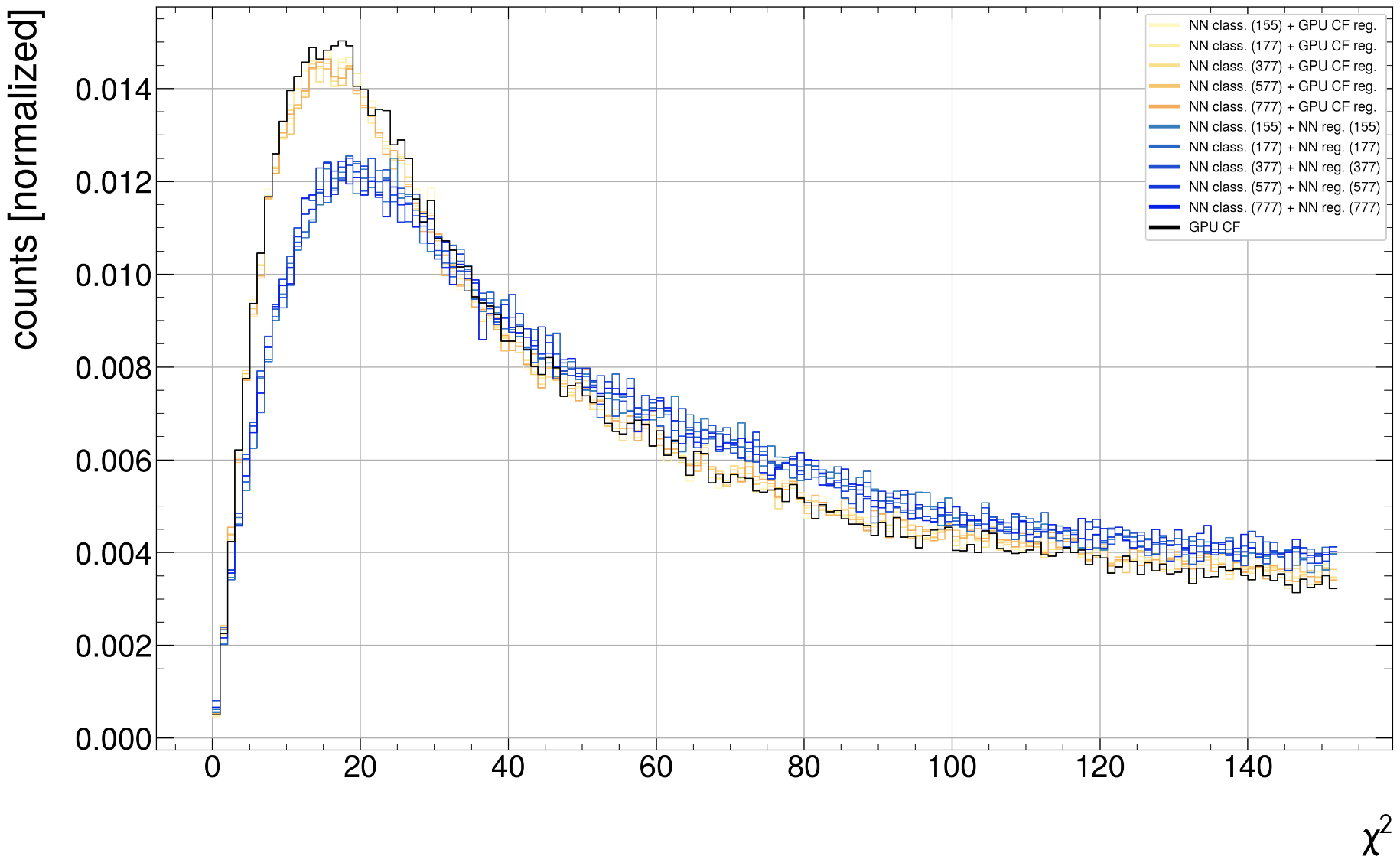

The improvement is also noticeable in the track chi2 distribution

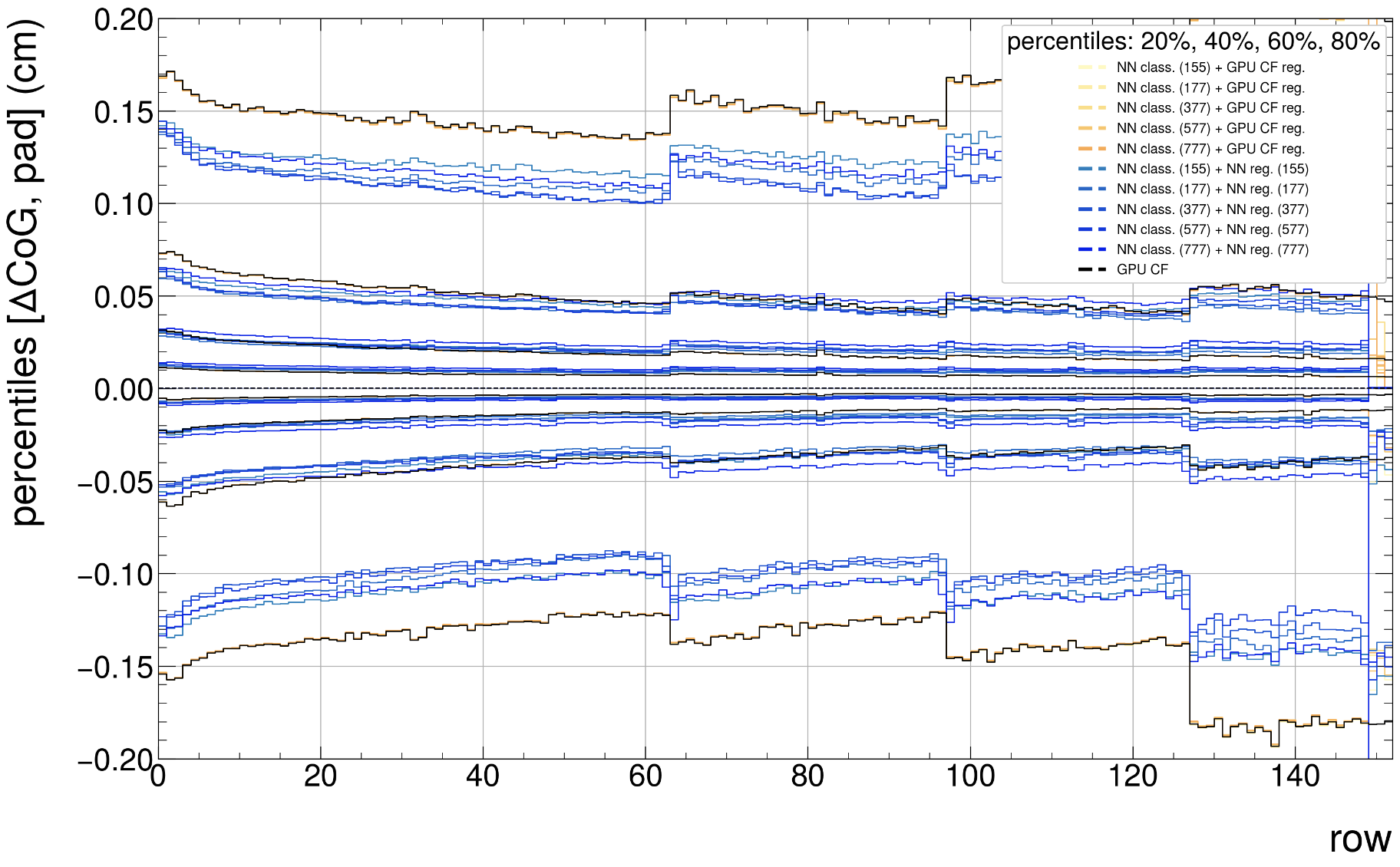

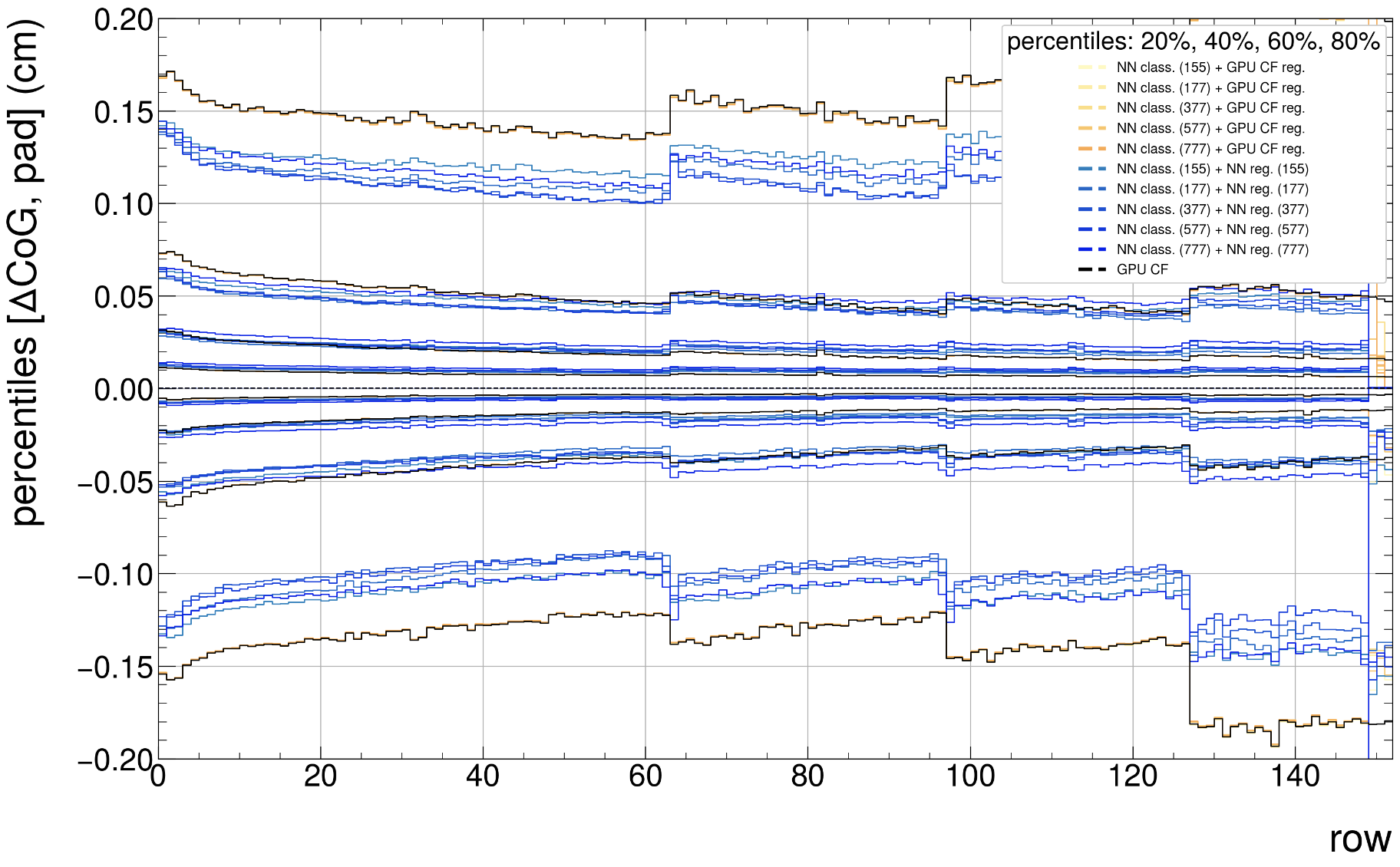

2. Percentile comparison

Noticeable differences:

- The distributions are slightly asymmetric and non-Gaussian.

- The GPU CF distribution is slightly more sharply peaked at the center (delta cog_pad = 0).

- The NN distribution is narrower in the tails -> at the 60% and 80% percentiles, the NN curves are closer to 0.
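The notes do not spell out how the percentile curves are defined. This sketch assumes "the X% percentile" means the central X% interval of delta cog_pad, so interval edges closer to 0 indicate a narrower distribution. The percentile uses linear interpolation between sorted samples (NumPy's default convention); the residual lists are toy values, not results, and the binning used in the plots is ignored here.

```python
from typing import Sequence, Tuple


def percentile(values: Sequence[float], q: float) -> float:
    """q-th percentile (q in [0, 100]) with linear interpolation between
    sorted samples, the same convention as NumPy's default method."""
    xs = sorted(values)
    if not xs:
        raise ValueError("empty input")
    pos = (len(xs) - 1) * q / 100.0
    lo = int(pos)                 # pos >= 0, so int() acts as floor
    hi = min(lo + 1, len(xs) - 1)
    return xs[lo] + (xs[hi] - xs[lo]) * (pos - lo)


def central_interval(values: Sequence[float],
                     coverage: float) -> Tuple[float, float]:
    """Edges of the central `coverage`% interval, e.g. 60 -> 20th and 80th percentile."""
    half = coverage / 2.0
    return percentile(values, 50.0 - half), percentile(values, 50.0 + half)


# Toy residuals (delta cog_pad), NOT real data
gpu_cf = [-3.0, -2.0, -1.0, 0.0, 0.0, 0.0, 0.0, 1.0, 2.0, 3.0]
nn = [-2.0, -1.5, -1.0, -0.5, 0.0, 0.1, 0.5, 1.0, 1.5, 2.0]
for cov in (60, 80):
    print(cov, central_interval(gpu_cf, cov), central_interval(nn, cov))
```

Comparing interval edges rather than a fitted Gaussian width avoids assuming a Gaussian shape, which matters here since the distributions are asymmetric and non-Gaussian.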

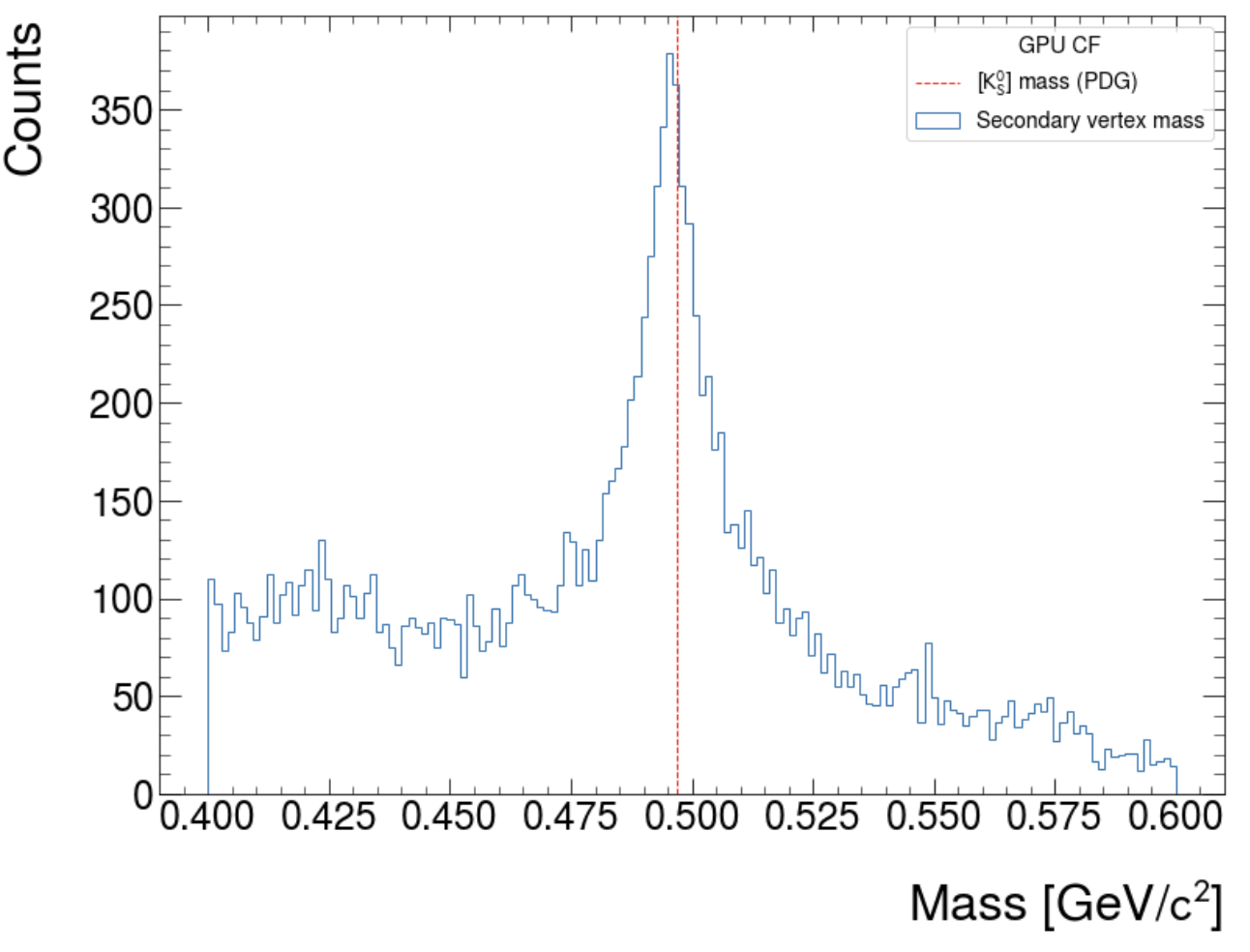

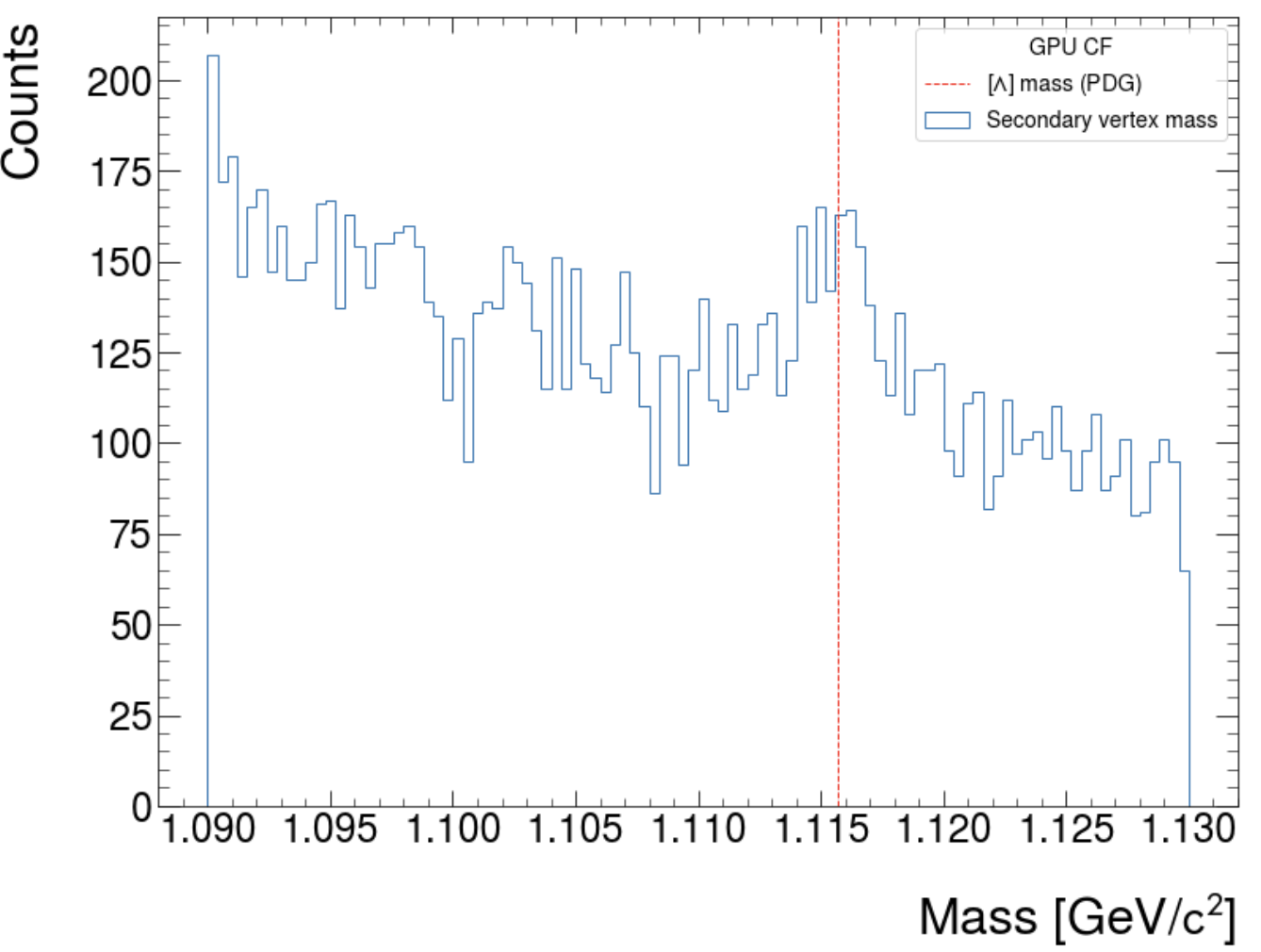

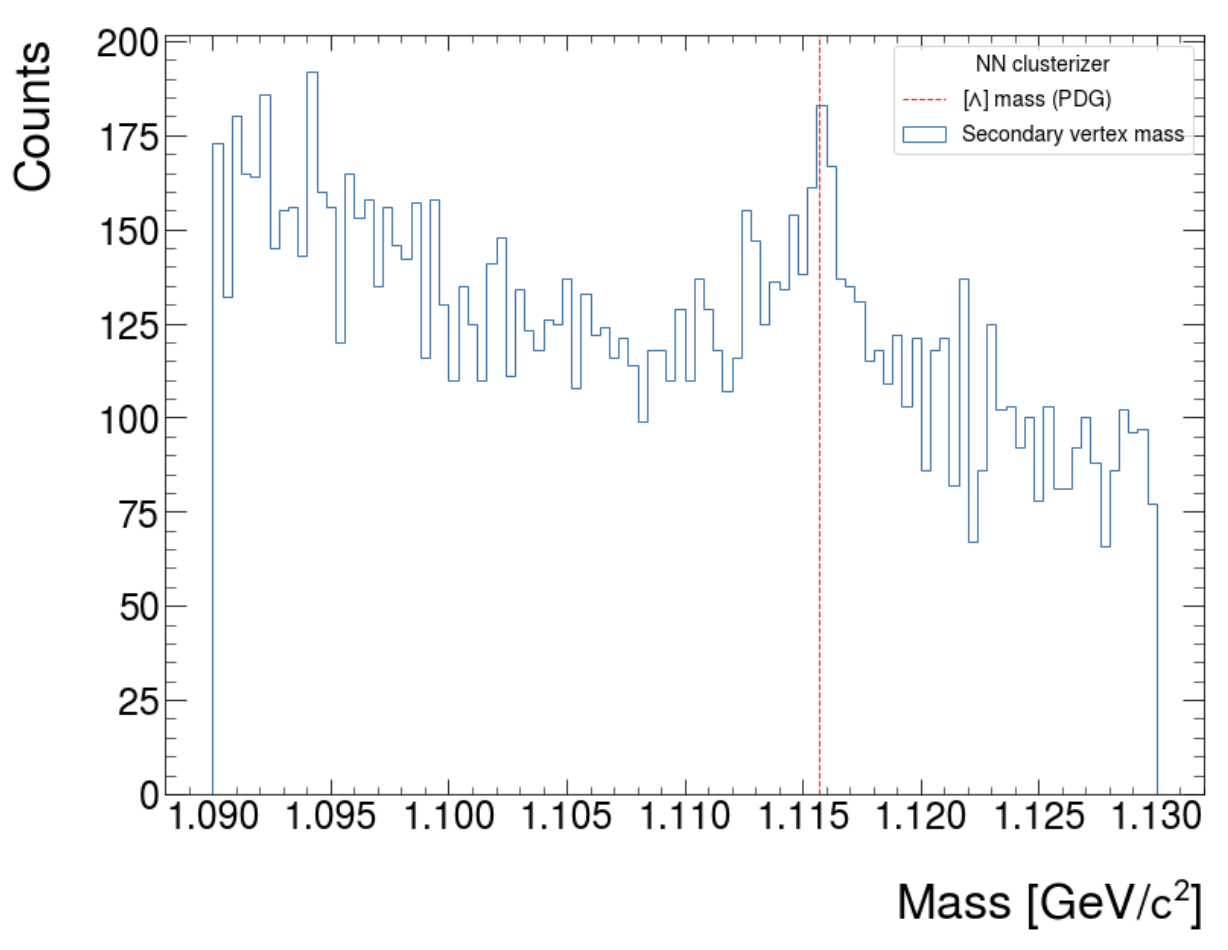

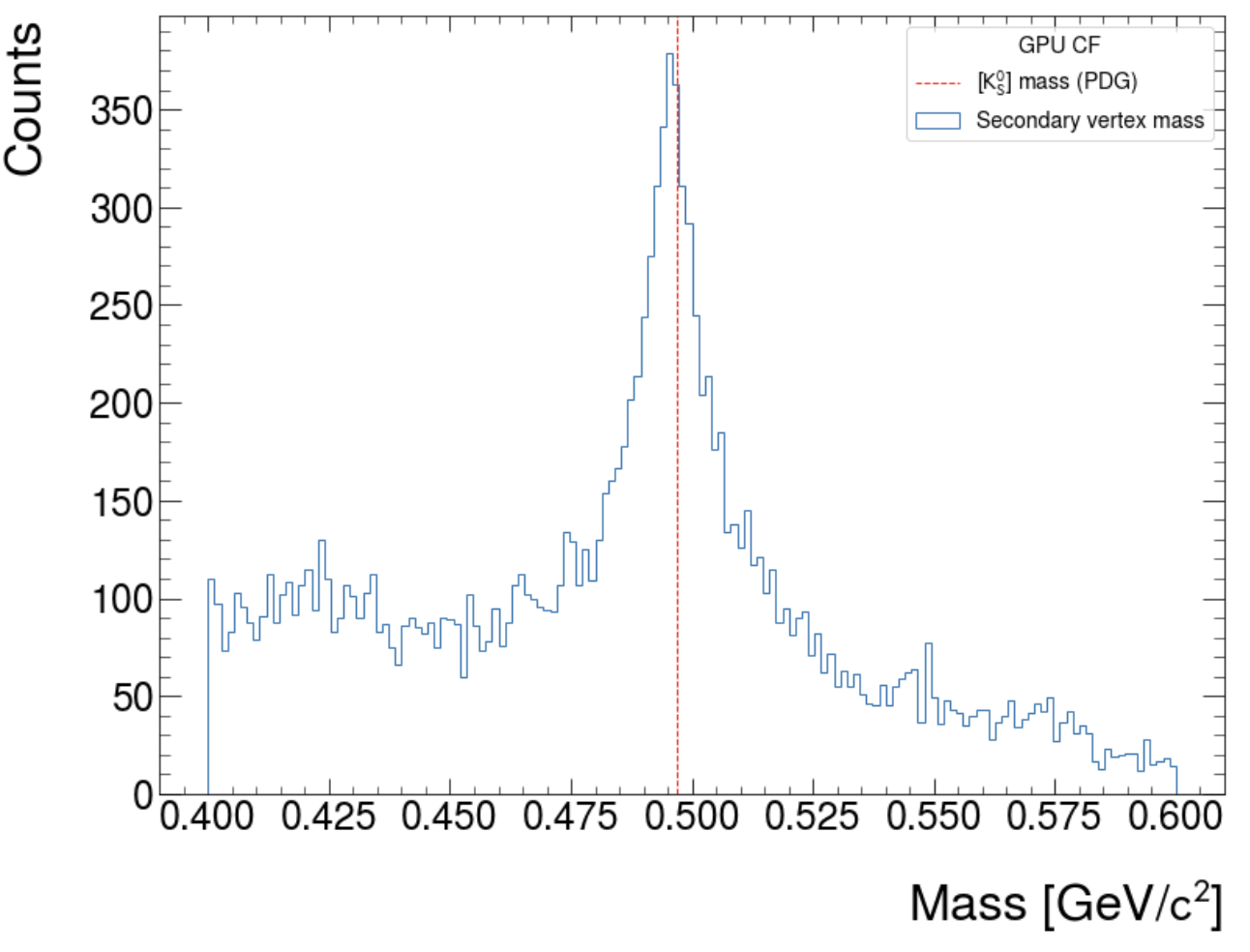

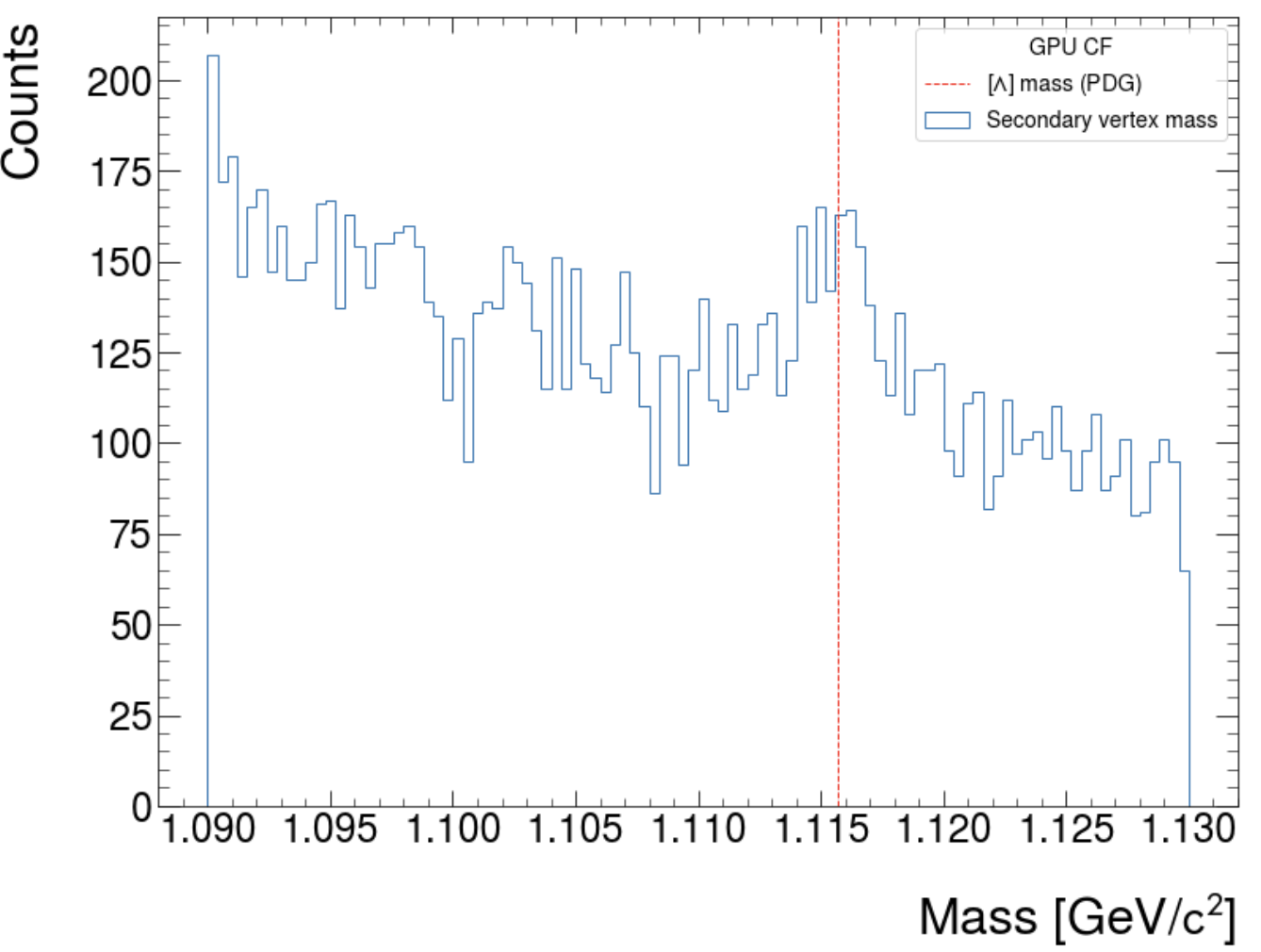

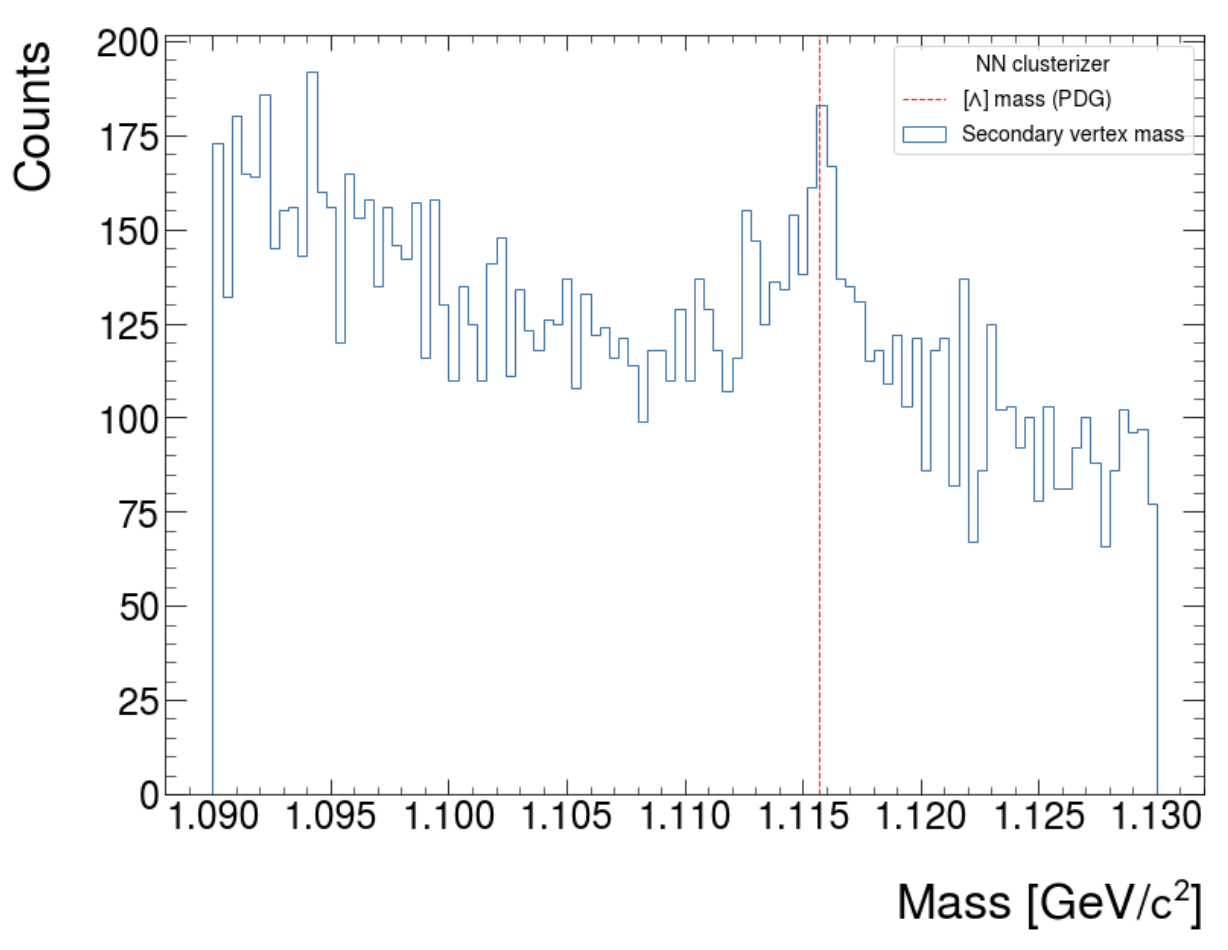

3. Real data

- All raw TFs of LHC24af, run 550536

- Using corrections: "--lumi-type 2 --corrmap-lumi-mode 1 --enable-M-shape-correction"

- With a rather strict cut on the NN clusterizer: ~23% of clusters and ~10% of tracks are removed

- With a looser NN cut: 8.2% of clusters and ~3.5% of tracks are removed -> almost no effect on the Lambda and K0S spectra!

-> Next: investigating dN/dη vs. pT; most likely we are losing tracks at very low momentum
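One way to localize the suspected track loss is a per-bin ratio of NN to reference track yields; a ratio below 1 concentrated in the lowest pT bins would be consistent with losing tracks at very low momentum. This is a minimal plain-Python sketch with hypothetical names (`histogram`, `yield_ratio`) and toy pT values, not the actual analysis; the same ratio works for any binned variable, e.g. η.

```python
from bisect import bisect_right
from typing import List, Optional, Sequence


def histogram(values: Sequence[float], edges: Sequence[float]) -> List[int]:
    """Counts per bin [edges[i], edges[i+1]); values outside the range are dropped."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        i = bisect_right(edges, v) - 1
        if 0 <= i < len(counts):
            counts[i] += 1
    return counts


def yield_ratio(x_nn: Sequence[float], x_ref: Sequence[float],
                edges: Sequence[float]) -> List[Optional[float]]:
    """NN / reference track count per bin; None where the reference bin is empty."""
    n_nn = histogram(x_nn, edges)
    n_ref = histogram(x_ref, edges)
    return [a / b if b else None for a, b in zip(n_nn, n_ref)]


# Toy track pT values in GeV/c (hypothetical, NOT from run 550536)
pt_edges = [0.0, 0.2, 0.5, 1.0, 5.0]
pt_ref = [0.10, 0.15, 0.18, 0.30, 0.40, 0.70, 2.00]
pt_nn = [0.15, 0.30, 0.40, 0.70, 2.00]
print(yield_ratio(pt_nn, pt_ref, pt_edges))  # [0.3333333333333333, 1.0, 1.0, 1.0]
```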