Alice Weekly Meeting: Software for Hardware Accelerators / PDP-SRC

-

-

10:00

→

10:20

Discussion 20mSpeakers: David Rohr (CERN), Giulio Eulisse (CERN)

Color code: (critical, news during the meeting: green, news from this week: blue, news from last week: purple, no news: black)

High priority Framework issues:

- Start / Stop / Start: 2 problems on O2 side left:

-

- All processes are crashing randomly (usually ~2 out of >10k) when restarting. Stack trace hints to FMQ. https://its.cern.ch/jira/browse/O2-4639

- TPC ITS matching QC crashing accessing CCDB objects. Not clear if same problem as above, or a problem in the task itself:

- All processes are crashing randomly (usually ~2 out of >10k) when restarting. Stack trace hints to FMQ. https://its.cern.ch/jira/browse/O2-4639

-

- Stabilize calibration / fix EoS: New scheme: https://its.cern.ch/jira/browse/O2-4308:

- RC did some timeout tests, need to repeat in physics, then decide on actual timeouts.

- Ernst will verify the processing of calib data after data processing timeout.

- PR with InfoLogger improvements still WIP.

- Fix problem with ccdb-populator: no idea yet - since Ole left, someone else will have to take care.

- TF-status message (from https://github.com/AliceO2Group/AliceO2/pull/13495) sent by readout-proxy. Status?

- ONNXRuntime update merged.

- Problem with GPU and non-GPU container building ONNXRuntime with same hash and uploading to binary repository.

- GPU O2 build does not get binary of ONNXRuntime with GPU support.

- Fixed by https://github.com/alisw/alidist/pull/5855 using new alibuild feature of versioned system packages.

Sync reconstruction

- Waiting for RC to test COSMIC replay data set.

- Waiting for RC to test STOP timeout impact.

- Problem with high CPU load due to DPL metrics. Disabled GUI metrics in online mode. Issue mostly fixed, but yesterday we had some problems?

Async reconstruction

- Remaining oscilation problem: GPUs get sometimes stalled for a long time up to 2 minutes. Checking 2 things:

- does the situation get better without GPU monitoring? --> Inconclusive

- We can use increased GPU processes priority as a mitigation, but doesn't fully fix the issue.

- Need to investigate short GPU stall problem.

- Limiting factor for pp workflow is now the TPC time series, which is to slow and creates backpressure (costs ~20% performance on EPNs). Enabled multi-threading as recommended by Matthias - need to check if it works.

AliECS related topics:

- Extra env var field still not multi-line by default., created https://its.cern.ch/jira/browse/OGUI-1624 to follow this up seperately from other tickets.

GPU ROCm / compiler topics:

- List of important issues with AMD:

- Issues that disappeared but not yet understood: random server reboot with alma 9.4, miscompilation with ROCm 6.2, GPU getting stuck when DMA engine turned off, MI100 stalling with ROCm 5.5.

- EPN deployed the fix by AMD a second time, this time it works. Automatic workaround for MI100 removed in O2/dev. Will be deployed with next SW update.

- Problem with building ONNXRuntime with MigraphX support, to be checked.

- slc9-gpu-builder container was lacking dependencies for building NVIDIA GPU ONNX Support with TensorRT. Fixed, and tested, but currently not really needed since we cannot build ONNXRuntime with AMD and NVIDIA support.

- Try to find a better solution for the problem with __device__ inline functions leaking symbols in the host code.

- Once we bump arrow (PR opened by Giulio), we can bump LLVM to 19.

- AMD reported a regression where deterministic mode has slight differences CPU v.s. GPU. Need to check if regression in O2 code or in ROCm.

- ROCm 6.4 released. AMD split the driver and the ROCm part. Need to check if something do be done on our side. In any case, fix for synchronization is missing, so we cannot use 6.4 yet.

TPC / GPU Processing

- WIP: Use alignas() or find a better solution to fix alignment of monte carlo labels: https://its.cern.ch/jira/browse/O2-5314

- Waiting for TPC to fix bogus TPC transformations for good, then we can revert the workaround.

- Waiting for TPC to check PR which uses full cluster errors including average charge and occupancy map errors during seeding.

- Final solution: merging transformation maps on the fly into a single flat object: Still WIP

- Pending OpenCL2 issues:

- printf not working due to confirmed bug in clang, fix is being prepared. Prevents further debugging for now.

- Crash in merger, which can be worked around by disabling clang SPIRV optimization. Probably bug in clang, but need to fix printf first to debug.

- Also with optimization disabled, crashing later in TPC merging, need printf to debug.

- Felix debugged the OpenCL clusterization problem to be due to off-by-one offset in NoiseSuppression. Need to check how that can happen only in OpenCL.

- printf not working due to confirmed bug in clang, fix is being prepared. Prevents further debugging for now.

- Next high priority topic: Improvements for cluster sharing and cluster attachment at lower TPC pad rows.

- Improved code-generation and application of DETERMINISTIC mode flags, such that GPU RTC can enable the deterministic mode and no_fast_math flags in the code it compiles. Now also yields 100% same results as the CPU in deterministic mode.

- However, originally planned to have deterministic mode a runtime flag only if RTC is used. Tunrs out this won't work, since configuration parameters might be rounded when scaled on the host before being passed on to the GPU. I.e. unavoidable to recompile the host code in deterministic mode.

- Switched from thrust library to CUB library for sorting using the full GPU device. Thrust was adding unnecessary synchronizations. I patched them away in CUDA's thrust, but never had time to do the same in HIP thrust, and my CUDA patch didn't work any more with the latest CUDA. So switching to CUB seems the simplest solution. Time per TF reduced from 4.1 to 4.0 seconds on my NVIDIA GPU, unfortunately no improvements on MI50/MI100.

- With RTC able to compile with NO_FAST_MATH, working to fix the issue that some clusters fail track-model decoding if RTC enabled due to floating point rounding.

- We had fixed this without RTC using per-kernel compilation and per-kernel compile flags, but so far this was not possible with RTC.

- We now have a runtime config object with all GPU launch bound parameters. Can automatically generate RTC code with that. (And we can load the runtime object from a preprocessor define header).

- This is now use by Gabriele, to tune launch bound parameters with RTC (much faster than recompiling O2).

- Working on PR to add more GPU compile time parameters to the config object in this way, such that they can be changed for RTC.

Other Topics

- Selected Felix as first candidate for Quest position. Now at HR, but going pretty slowly...

EPN major topics:

- Fast movement of nodes between async / online without EPN expert intervention.

- 2 goals I would like to set for the final solution:

- It should not be needed to stop the SLURM schedulers when moving nodes, there should be no limitation for ongoing runs at P2 and ongoing async jobs.

- We must not lose which nodes are marked as bad while moving.

- 2 goals I would like to set for the final solution:

- Interface to change SHM memory sizes when no run is ongoing. Otherwise we cannot tune the workflow for both Pb-Pb and pp: https://alice.its.cern.ch/jira/browse/EPN-250

- Lubos to provide interface to querry current EPN SHM settings - ETA July 2023, Status?

- Improve DataDistribution file replay performance, currently cannot do faster than 0.8 Hz, cannot test MI100 EPN in Pb-Pb at nominal rate, and cannot test pp workflow for 100 EPNs in FST since DD injects TFs too slowly. https://alice.its.cern.ch/jira/browse/EPN-244 NO ETA

- DataDistribution distributes data round-robin in absense of backpressure, but it would be better to do it based on buffer utilization, and give more data to MI100 nodes. Now, we are driving the MI50 nodes at 100% capacity with backpressure, and then only backpressured TFs go on MI100 nodes. This increases the memory pressure on the MI50 nodes, which is anyway a critical point. https://alice.its.cern.ch/jira/browse/EPN-397

- TfBuilders should stop in ERROR when they lose connection.

- Allow epn user and grid user to set nice level of processes: https://its.cern.ch/jira/browse/EPN-349

- EPN would like to bump slurm, for that we also need to bump the async voboxes. I'd suggest to move them to ALMA9 directly. Probably need to sit together to do this. From then on, we also plan to put the vobox handling into the EPN ansible, so that EPN will take over its maintenance.

Other EPN topics:

- Check NUMA balancing after SHM allocation, sometimes nodes are unbalanced and slow: https://alice.its.cern.ch/jira/browse/EPN-245

- Fix problem with SetProperties string > 1024/1536 bytes: https://alice.its.cern.ch/jira/browse/EPN-134 and https://github.com/FairRootGroup/DDS/issues/440

- After software installation, check whether it succeeded on all online nodes (https://alice.its.cern.ch/jira/browse/EPN-155) and consolidate software deployment scripts in general.

- Improve InfoLogger messages when environment creation fails due to too few EPNs / calib nodes available, ideally report a proper error directly in the ECS GUI: https://alice.its.cern.ch/jira/browse/EPN-65

- Create user for epn2eos experts for debugging: https://alice.its.cern.ch/jira/browse/EPN-383

- EPNs sometimes get in a bad state, with CPU stuck, probably due to AMD driver. To be investigated and reported to AMD.

- Understand different time stamps: https://its.cern.ch/jira/browse/EPN-487

- Start / Stop / Start: 2 problems on O2 side left:

-

10:20

→

10:25

Following up JIRA tickets 5mSpeaker: Ernst Hellbar (CERN)

Low-priority framework issues https://its.cern.ch/jira/browse/O2-5226

- Grafana metrics: Might want to introduce additional rate metrics that subtract the header overhead to have the pure payload: low priority.

- Merged workflow fails if outputs defined after being used as input

- needs to be implemented by Giulio

- Cannot override options for individual processors in a workflow

- requires development by Giulio first

- Problem with 2 devices of the same name

- Usage of valgrind in external terminal: The testcase is currently causing a segfault, which is an unrelated problem and must be fixed first. Reproduced and investigated by Giulio.

- Run getting stuck when too many TFs are in flight.

- Do not use string comparisons to derrive processor type, since DeviceSpec.name is user-defined.

- Support in DPL GUI to send individual START and STOP commands.

- Add additional check on DPL level, to make sure firstOrbit received from all detectors is identical, when creating the TimeFrame first orbit.

- Implement a proper solution to detect wheter a device is firstInChain

- Deploy topology with DPL driver

PDP-SRC issues

- Check if we can remove dependencies on

/home/epn/odc/filesin DPL workflows to remove the dependency on the NFS- reading / writing already disabled

- remaining checks for file existence?

- check after Pb-Pb by removing files and find remaining dependencies

logWatcher.shandlogFetcherscripts modified by EPN to remove dependencies onepnloguser- node access privileges fully determined by e-groups

- new

log_accessrole to allow access inlogWatchermode to retrieve log files, e.g. for on-call shifters - to be validated on STG

- waiting for EPN for further feedback and modifications of the test setup

- new

BEAMTYPEfor oxygen period- https://its.cern.ch/jira/browse/O2-5797

- beam types

- p-O and O-O

- Ne-Ne still to be confirmed

- scripts to be adjusted to set proper workflow parameters

- will as RC to create new configurations, workflow parameters to be set in the configuration instead of the scripts

- tests using Pb-Pb replay data with new beam types

- some performance issues due to unset process multiplicities, otherwise everything looks fine

- RC asked for a synthetic OO dataset, will check with Sandro if our generators are already set up for this

- O2 code to be checked for pp and PbPb specific variables

- STOP timeout tests

- fixed: O2_DPL_PROCESSING_TRANSITION_TIMEOUT_DEFAULT=10

- EPNs, STOP time between `Stop request for ODC` and `Collection states: READY`

- 2tcEZMn3qXX - 561585 - `O2_DPL_EXIT_TRANSITION_TIMEOUT_DEFAULT=1` - 13 s - 56 tasks fail at shutdown, all `gpu-reconstruction`

2tcF2Aape2M - 561586 - `O2_DPL_EXIT_TRANSITION_TIMEOUT_DEFAULT=10` - 33 s - 52 tasks fail at shutdown, all `gpu-reconstruction`

2tcFeEy12DF - 561587 - `O2_DPL_EXIT_TRANSITION_TIMEOUT_DEFAULT=20` - 40 s - 15977 tasks fail at shutdown

2tcGLWLFhG5 - 561588 - `O2_DPL_EXIT_TRANSITION_TIMEOUT_DEFAULT=30` - 40 s - 112 tasks fail at shutdown, all `gpu-reconstruction`

2tcGp96HGRo - 561589 - `O2_DPL_EXIT_TRANSITION_TIMEOUT_DEFAULT=40` - 50 s - few oldetPossibleInput/Output messages 20 s after STOP, no crashes at shutdown

- 2tcEZMn3qXX - 561585 - `O2_DPL_EXIT_TRANSITION_TIMEOUT_DEFAULT=1` - 13 s - 56 tasks fail at shutdown, all `gpu-reconstruction`

-

10:25

→

10:30

TPC ML Clustering 5mSpeaker: Christian Sonnabend (CERN, Heidelberg University (DE))

Framework

- Major changes / bug-fixes / improvements: https://github.com/AliceO2Group/AliceO2/pull/14117

- GPU stream implementation

- I/O Binding -> Massive speed up improvement

- Coming up: Memory arena deallocation -> Decreases execution speed but releaves memory after each Run() function call

Physics

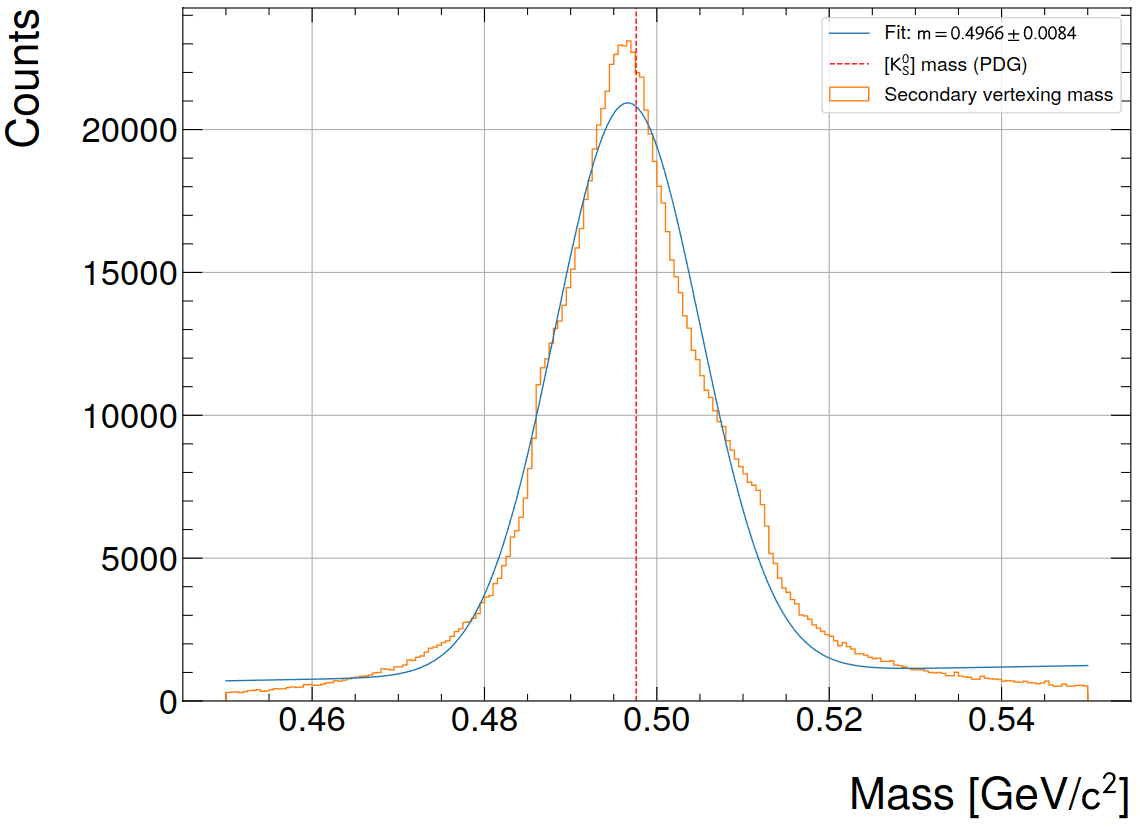

- Investigated loss of V0s: Just an issue with setting the right boundary values as configurable. no achieving >10% cluster reduction without loss of tracks

Current Focus

- Performance improvement of NNs -> Testing network and input size

- Reuse of memory for ONNX internal allocations

--------------------

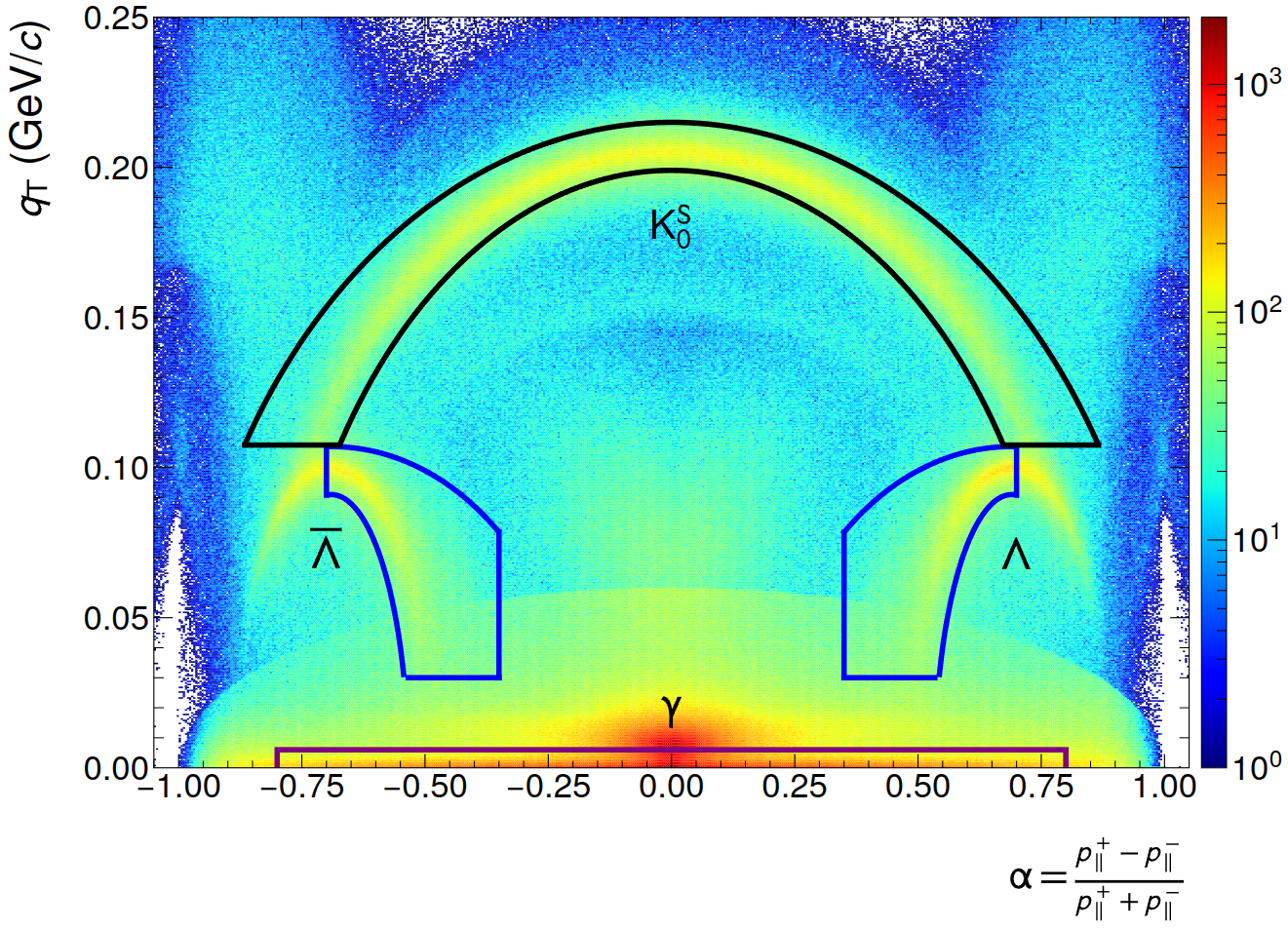

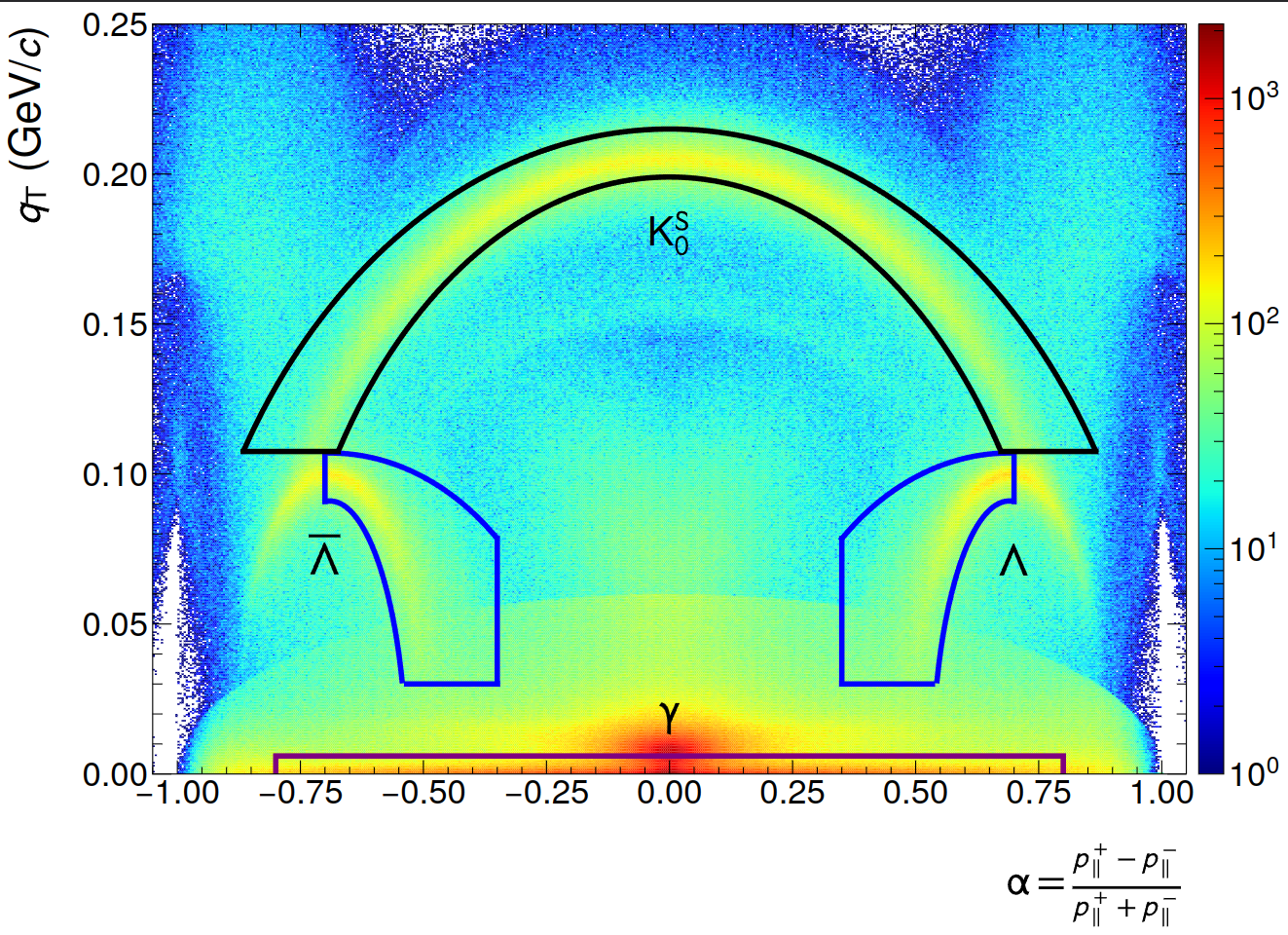

Full 24as period (or at least the fraction where we have digits)

Selection

- cos(PA) > 0.9995

- Armenteros-Podolanski selections (shown below)

- NN cutoff reduces clusters by ~13%, Input size: 5x11x11

(Left: NN, Right: GPU CF)

NN inference speed improvements

- Trade-off between memory consumption, compute speed and resulting quality: 3x9x9 input size, one / two layers with 128 nodes each. Achieves ~10-20 mio. clusters/s/GPU peak load (including data filling, evaluation of 2 NNs and data readout / publishing). Test on 3 lanes, 1 GPU.

- Arena memory clearing after each Run() function comes at some performance regression, but cannot be steered externally. Either clearing is done or not -> decided by the Run()-function internally with an external 0/1 option.

- Optimization: ONNX allows to set

kSameAsRequestedas arena option, which avoids new allocations (https://onnxruntime.ai/docs/get-started/with-c.html)

- Optimization: ONNX allows to set

- Tried CNNs but they are inherently "slow": PyTorch uses (N,C_out,H_out,W_out) layout. CNNs perform best when having a lot of channels. Parallelization is done over H,W dimensions, but not C dimension -> Subsequent layers with high number of channels are slow.

- ONNX with CUDA has some optimization for this after internal graph partitioning, but doesn't offer this functionality for ROCm

VRAM usage with 40k clusters per batch

- Input tensor: 40000x(3x9x9)x(3 lanes)xfloat = 116.6MB

- ONNX requests ~3% of the total GPU VRAM ~= 1GB

- Preciseness can be increased if needed

To-Do

- Use volatile memory at execution time for ONNX internal allocations (avoids GPU memory overloads while keeping the memory available for tracking)

- Potentially improve CCDB API calls

- What do we do with the momentum vector estimate? Currently not used at all.

- Major changes / bug-fixes / improvements: https://github.com/AliceO2Group/AliceO2/pull/14117

-

10:30

→

10:35

ITS Tracking 5mSpeaker: Matteo Concas (CERN)

ITS GPU tracking

- General priorities:

- Integration into the FST: done

- [WIP] Thrust allocator with external memory management: for the moment it compiles but does not work, needs dedicated discussion.

- Focusing on porting all of what is possible on the device, extending the state of the art, and minimising computing on the host.

- Moving vertexing routines to the externally managed memory system. -> WIP

- Currently optimising the o2::its::timeframeGPU intialisation GPU transfers, trying to maximise data reuse & anticipating loads when it is possible.

- Moving vertexing routines to the externally managed memory system. -> WIP

- Optimizations:

- Asynchronous parallelisation in the tracklet finding, i.e. Multi-streaming for obvious parallelisations.

- intelligent scheduling and multi-streaming can happen right after.

- Kernel-level optimisations to be investigated.

TODO:-

- Reproducer for HIP bug on multi-threaded track fitting: no follow-up yet.

- Fix possible execution issues and known discrepancies when using

gpu-reco-workflow: no progress.

DCAFitterGPU

- Deterministic approach via using

SMatrixGPUon the host, under particular configuration: no progress.

- General priorities:

-

10:35

→

10:45

TPC Track Model Decoding on GPU 10mSpeaker: Gabriele Cimador (Universita e INFN Torino (TO))

What has been done:

- Developed a unique Python interface to the standalone benchmark (https://github.com/cima22/O2GPU-autotuner)

- Easilly change kernel parameters defining dictionaries

- Method to measure mean kernel time

- Input: kernel_name, block and grid size, dataset

- Output: mean, std_dev

- Method to measure mean step time (e.g. TrackletConstructor, Clusterizer, GMMerger...)

- Input: dictionary of kernel_name, block and grid size and the dataset

- Output: mean, std_dev

- Without need to modify O2 code, only RTC used

- Developed the algorithm based on the Latin Hypercube Sampling, only for single kernels

- From first observations: minimum reached in less evaluations than grid search for a single kernel search

- Can dynamically refine the granularity of the search space

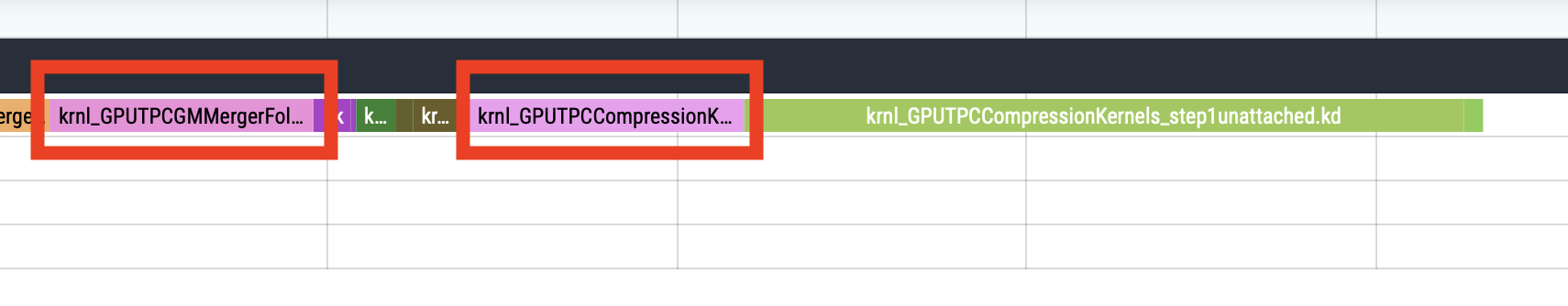

- Discovered dependency between GMMergerFollowLoopers and CompressionKernels_step0attached kernels

- Changing the parameters of GMMergerFollowLoopers alters the performance of step 0 of the compression kernels

- Looking at the profiler, it seems that write and reading operations of step 0 perform differently:

- WriteUnitStalled : The percentage of GPUTime the Write unit is stalled. Value range: 0% to 100% (bad).

Goes from 0.008% to 79% - VALUBusy : The percentage of GPUTime vector ALU instructions are processed. Value range: 0% (bad) to 100% (optimal).

Goes from 4.90% to 3.54% - R/WDATA1_SIZE : The total kilobytes fetched/written from the video memory. This is measured on EA1s.

Both metrics get reduced (i.e. less data movement)

- WriteUnitStalled : The percentage of GPUTime the Write unit is stalled. Value range: 0% to 100% (bad).

- This behaviour has not been observed for other kernels. This means that GMMergerFollowLoopers and Compression step 0 should be optimised together

- For this reason also grid search results for Compression step 0 where not around 1 when the default configuration was measured

WIP :

- Make the new algorithm work for multiple kernels (step optimisation)

- Obtain more data to effectively compare grid search with new algorithm for single kernels

To-Do:

- Test the new algorithm with the GMMergerFollowLoopers / CompressionKernels_step0 pair (4 dimension optimisation)

- If results are promising, test with a bigger step (e.g. TrackletConstructor step has 16 parameters)

- Use external optimisation framework via the new Python interface

Questions:

- If in the parameter file, a third integer is defined, e.g.:

#define GPUCA_LB_GPUTPCGMMergerFollowLoopers 256, 2, 200The kernel is executed with

grid_size = 200, instead of being a multiple of the Compute Units. - This might be interesting when refining the granularity of the grid size to be different than being a multiple of CUs, especially for kernels which run in parallel

- Developed a unique Python interface to the standalone benchmark (https://github.com/cima22/O2GPU-autotuner)

-

10:45

→

10:55

Efficient Data Structures 10mSpeaker: Dr Oliver Gregor Rietmann (CERN)

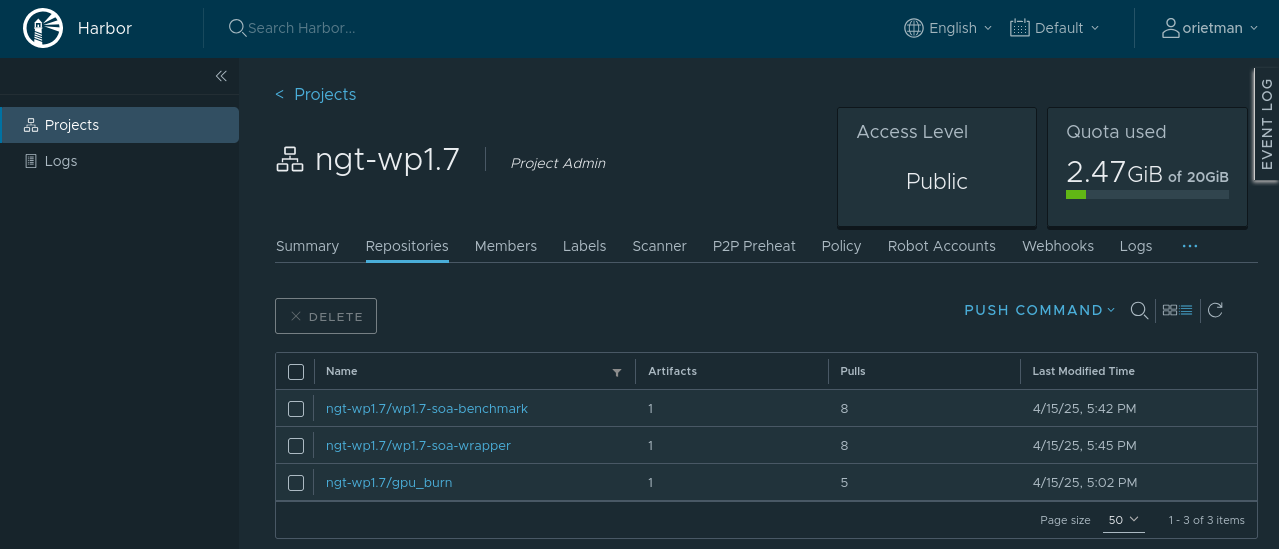

Harbor Container Registry (plays the role of Dockerhub)

- Created a registry called ngt-wp1.7: https://registry.cern.ch/harbor/projects/3795/repositories

- Admin access for everyone in the e-group ngt-wp1-task1-7

- Images are scanned for vulnerabilites on push

- Pushing an image of the same name and tag will overwrite the image (retention policy due to 20 GB quota)

- Everyone can pull without login, e.g. docker pull registry.cern.ch/ngt-wp1.7/wp1.7-soa-wrapper:latest

- How to push: docker login registry.cern.ch

- In terminal, run: docker login registry.cern.ch

- Username is the Cern username

- Password is NOT the Cern password

- Instead go to the web interface (link above), top right corner --> User Profile --> Copy CLI secret.

- After successful login, run e.g. docker push registry.cern.ch/ngt-wp1.7/wp1.7-soa-wrapper:latest

- A robot account robot-ngt-wp1.7+github was created to push from Github actions.

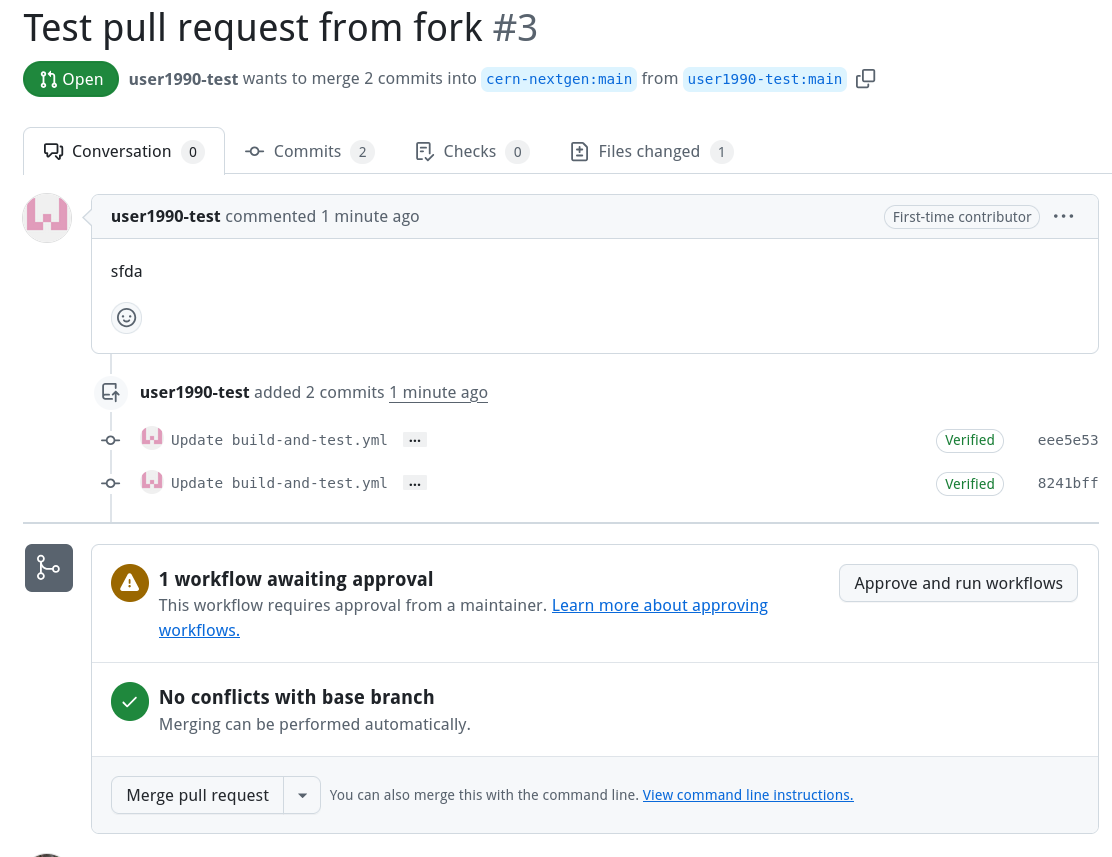

GitHub repos naming convention (only a proposal)

REPONAME is a placeholder e.g. for "soa-wrapper". For a GitHub action (e.g. for testing), we set up two repos:- wp1.7-REPONAME (contains the code)

- wp1.7-REPONAME-image (contains a Dockerfile)

One repo manages the GitHub action image of the other. More precisely:- The second repo has a GitHub action that builds a docker image called wp1.7-REPONAME:latest and pushes it to our registry on harbor.

- The first repo has a GitHub action that runs in the container wp1.7-REPONAME:latest. This container needs all the dependencies installed.

GitHub Actions

We can now run GitHub actions on our private runners. Check the following two repositories for an example.Actions run by pull requests from forked repos need approval from a repo maintainer before they are run.

Permissions (only a proposal)

- We create a GitHub group on cern-nextgen called wp1.7.

- We give maintainer access to this group for every wp1.7-* repo.

- In this group we put every engineer that works on wp1.7 code.

Moreover, we give admin access to Ricardo Rocha on organization level. Otherwise he cannot debug the runners.

NGT Hackaton

- We compared different approaches for SoA libraries.

- People liked our approach for its simplicity. They have integrated it testwise in their code (e.g. CMSSW).

- We implemented a benchmark repo to compare the performance of these approaches:

https://github.com/cern-nextgen/wp1.7-soa-benchmark

-

10:00

→

10:20