- Easily change kernel parameters by defining dictionaries

- Method to measure mean kernel time

  - Input: kernel_name, block and grid size, dataset

  - Output: mean, std_dev

- Method to measure mean step time (e.g. TrackletConstructor, Clusterizer, GMMerger...)

  - Input: dictionary of kernel_name, block and grid size, and the dataset

  - Output: mean, std_dev

- No need to modify the O2 code: only RTC is used
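The measurement interface described above could look roughly like the sketch below. It is only an illustration: `kernel_params`, `measure_mean_time`, `measure_step_time` and the `run_kernel` callable are hypothetical names, not part of O2, and the example values in the dictionary are made up. `run_kernel` is assumed to block until the kernel has finished, since wall-clock timing of an asynchronous launch would only capture launch overhead.

```python
import statistics
import time
from typing import Callable, Dict, Tuple

# Hypothetical parameter dictionary: kernel name -> launch configuration.
# Keys and values are illustrative only, not taken from O2.
kernel_params: Dict[str, Dict[str, int]] = {
    "GMMergerFollowLoopers": {"block_size": 256, "grid_size": 120},
}


def measure_mean_time(
    run_once: Callable[[], None], n_repeats: int = 10, n_warmup: int = 2
) -> Tuple[float, float]:
    """Return (mean, std_dev) of the wall-clock time in seconds of run_once."""
    for _ in range(n_warmup):  # warm-up runs are executed but not timed
        run_once()
    samples = []
    for _ in range(n_repeats):
        start = time.perf_counter()
        run_once()
        samples.append(time.perf_counter() - start)
    std_dev = statistics.stdev(samples) if len(samples) > 1 else 0.0
    return statistics.mean(samples), std_dev


def measure_step_time(
    run_kernel: Callable[[str, int, int, object], None],
    step_params: Dict[str, Dict[str, int]],
    dataset: object,
    n_repeats: int = 10,
) -> Tuple[float, float]:
    """Time one step, i.e. all kernels listed in step_params run back to back."""

    def run_step() -> None:
        for name, cfg in step_params.items():
            run_kernel(name, cfg["block_size"], cfg["grid_size"], dataset)

    return measure_mean_time(run_step, n_repeats)
```

A single kernel is then just a step whose dictionary has one entry, so both methods share the same timing loop.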

- From first observations: the minimum is reached in fewer evaluations than with grid search for a single-kernel search

- Can dynamically refine the granularity of the search space
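The notes do not name the search method, so the sketch below uses a simple coarse-to-fine search as a stand-in to show how refining the granularity can save evaluations compared with a full grid search. The function names and the quadratic `toy_cost` are assumptions made for illustration; the approach assumes a roughly unimodal cost, whereas real kernel timings are noisy.

```python
from typing import Callable, Dict, Tuple


def grid_search(cost: Callable[[int], float], lo: int, hi: int, step: int = 1) -> Tuple[int, int]:
    """Evaluate every grid point in [lo, hi]; return (best_x, n_evaluations)."""
    evals = {x: cost(x) for x in range(lo, hi + 1, step)}
    return min(evals, key=evals.get), len(evals)


def coarse_to_fine_search(
    cost: Callable[[int], float], lo: int, hi: int, coarse_step: int, shrink: int = 4
) -> Tuple[int, int]:
    """Scan coarsely, then repeatedly zoom in around the best point found so far."""
    cache: Dict[int, float] = {}  # avoids re-evaluating points seen earlier
    step = coarse_step
    while True:
        for x in range(lo, hi + 1, step):
            if x not in cache:
                cache[x] = cost(x)
        best = min(cache, key=cache.get)
        if step == 1:
            return best, len(cache)
        # Narrow the window to one old step on each side of the best point,
        # then refine the granularity.
        lo, hi = max(lo, best - step), min(hi, best + step)
        step = max(1, step // shrink)


def toy_cost(x: int) -> float:
    """Synthetic stand-in for a measured kernel time, with its minimum at x = 300."""
    return float((x - 300) ** 2)
```

On the toy cost over the range 1 to 1024, both searches find the same minimum, but the coarse-to-fine variant evaluates only a small fraction of the points.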

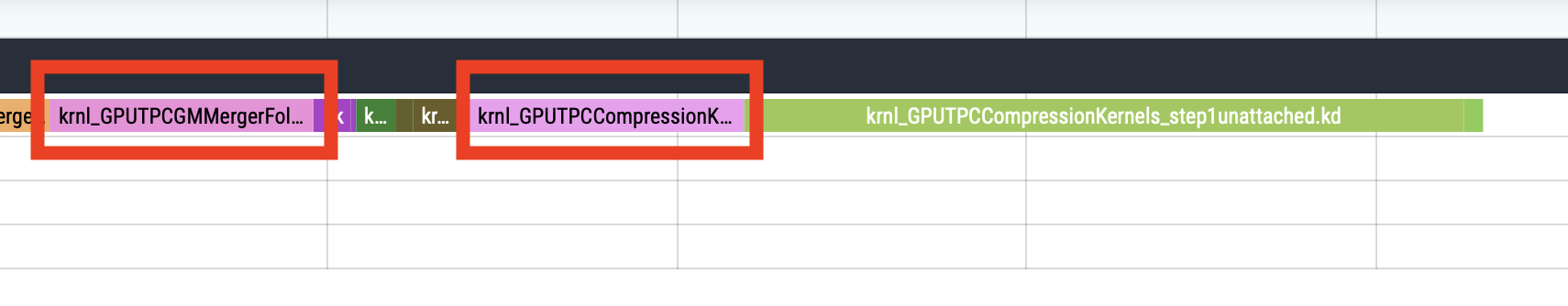

- Changing the parameters of GMMergerFollowLoopers alters the performance of step 0 of the compression kernels

- Looking at the profiler, the write and read operations of step 0 appear to behave differently:

  - WriteUnitStalled: The percentage of GPUTime the Write unit is stalled. Value range: 0% to 100% (bad). Goes from 0.008% to 79%.

  - VALUBusy: The percentage of GPUTime vector ALU instructions are processed. Value range: 0% (bad) to 100% (optimal). Goes from 4.90% to 3.54%.

  - R/WDATA1_SIZE: The total kilobytes fetched/written from the video memory. This is measured on EA1s. Both metrics get reduced (i.e. less data movement).

- This behaviour has not been observed for other kernels. This means that GMMergerFollowLoopers and Compression step 0 should be optimised together

- This also explains why the grid search results for Compression step 0 were not around 1 when the default configuration was measured
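One way to express "optimised together" is to score each GMMergerFollowLoopers configuration by the summed time of both kernels rather than by its own time alone. The sketch below assumes a hypothetical `measure(kernel, config)` callable; `synthetic_measure` is an invented cost model used purely to make the example runnable, and `"CompressionStep0"` is a placeholder label, not the real kernel name.

```python
from typing import Callable, Iterable, Sequence, Tuple

Config = Tuple[int, int]  # (block_size, grid_size) of GMMergerFollowLoopers


def joint_time(
    config: Config, kernels: Sequence[str], measure: Callable[[str, Config], float]
) -> float:
    """Total time of all coupled kernels under one shared configuration."""
    return sum(measure(kernel, config) for kernel in kernels)


def best_config(configs: Iterable[Config], objective: Callable[[Config], float]) -> Config:
    """Return the configuration with the smallest objective value."""
    return min(configs, key=objective)


def synthetic_measure(kernel: str, config: Config) -> float:
    """Invented cost model (NOT measured data): the two kernels prefer different block sizes."""
    block, _grid = config
    if kernel == "GMMergerFollowLoopers":
        return 1.0 + abs(block - 256) / 256
    return 2.0 + abs(block - 64) / 64  # placeholder for Compression step 0


configs = [(64, 120), (128, 120), (256, 120), (512, 120)]
coupled = ["GMMergerFollowLoopers", "CompressionStep0"]

# Tuning GMMergerFollowLoopers on its own time versus on the joint time.
alone = best_config(configs, lambda c: synthetic_measure("GMMergerFollowLoopers", c))
together = best_config(configs, lambda c: joint_time(c, coupled, synthetic_measure))
```

In this invented model the two objectives pick different configurations, which is the situation in which tuning the kernels separately would be misleading.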