US ATLAS Computing Facility

Facilities Team Google Drive Folder

Zoom information

Meeting ID: 996 1094 4232

Meeting password: 125

Invite link: https://uchicago.zoom.us/j/99610944232?pwd=ZG1BMG1FcUtvR2c2UnRRU3l3bkRhQT09

-

-

1

WBS 2.3 Facility Management News

Speakers: Robert William Gardner Jr (University of Chicago (US)), Dr Shawn Mc Kee (University of Michigan (US))

-

2

OSG-LHC

Speakers: Brian Hua Lin (University of Wisconsin), Matyas Selmeci

Release (this week or next)

- HTCondor-CE 5.1.1 (3.5 upcoming, 3.6)

- HTCondor 9.0.1 (3.5 upcoming, 3.6)

- Patched XRootD 5.2.0

- Fix 'anygroup' config backwards compat segfault https://github.com/xrootd/xrootd/commit/316300c9b2176153fe50ce766079f701d0d10a25#diff-81e843035b5adc6547b1280bc821da90eb628934d57456d4e9b8cb0f497627f2

- Fix HTTP TPC issue due to empty cert/CRL files https://github.com/xrootd/xrootd/issues/1467

- frontier-squid 4.15-2.1 (no log rotation if log compression disabled)

-

Topical Reports

Convener: Robert William Gardner Jr (University of Chicago (US))

-

3

Squid Failover discussion

Speakers: Dave Dykstra (Fermi National Accelerator Lab. (US)), Fred Luehring (Indiana University (US))

-

4

WBS 2.3.1 Tier1 Center

Speakers: Doug Benjamin (Brookhaven National Laboratory (US)), Eric Christian Lancon (Brookhaven National Laboratory (US))

Large-scale data deletion driven by the lifetime model showed that the dCache configuration changes made to speed up deletion were highly successful: the deletion rate rose from 11 Hz to 61 Hz.
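To put the rate change in perspective, the following is a minimal back-of-the-envelope sketch, not a measurement from the site. Only the 11 Hz and 61 Hz rates come from the report; the file count `N_FILES` and the helper `deletion_hours` are hypothetical and chosen purely for illustration.

```python
def deletion_hours(n_files: int, rate_hz: float) -> float:
    """Hours needed to delete n_files at a steady rate of rate_hz deletions per second."""
    if rate_hz <= 0:
        raise ValueError("rate_hz must be positive")
    return n_files / rate_hz / 3600.0


OLD_RATE_HZ = 11.0      # deletion rate before the dCache configuration changes (from the report)
NEW_RATE_HZ = 61.0      # deletion rate after the changes (from the report)
N_FILES = 10_000_000    # hypothetical campaign size, not a figure from the report

speedup = NEW_RATE_HZ / OLD_RATE_HZ
old_hours = deletion_hours(N_FILES, OLD_RATE_HZ)
new_hours = deletion_hours(N_FILES, NEW_RATE_HZ)

print(f"speedup: {speedup:.1f}x")
print(f"{N_FILES} files: {old_hours:.0f} h before, {new_hours:.0f} h after")
```

Under these assumptions, a campaign that would have taken more than ten days at the old rate finishes in under two days at the new one.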

Seeing issues with PnfsManagers. We are running version 6.2.23.

Chasing down transfer issues from BNL LAKE.

Chilled water issues caused an automated shutdown of the ATLAS compute nodes. See plot for details.

-

WBS 2.3.2 Tier2 Centers

Updates on US Tier-2 centers

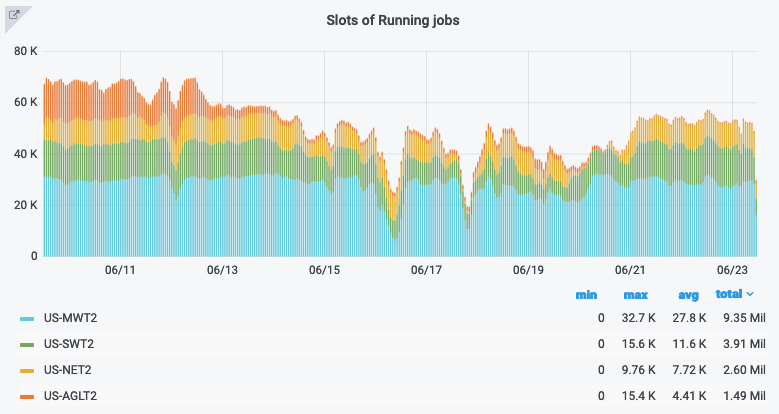

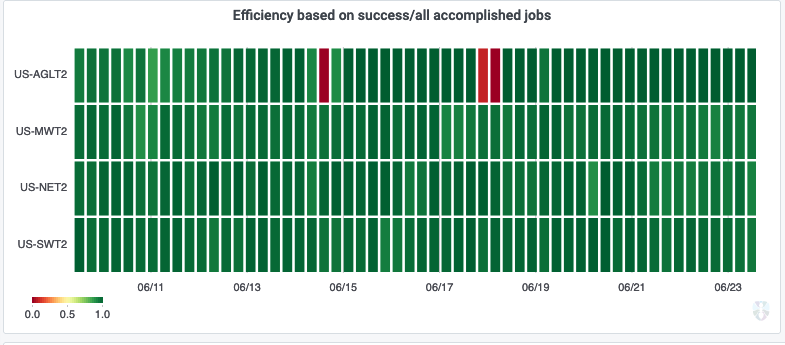

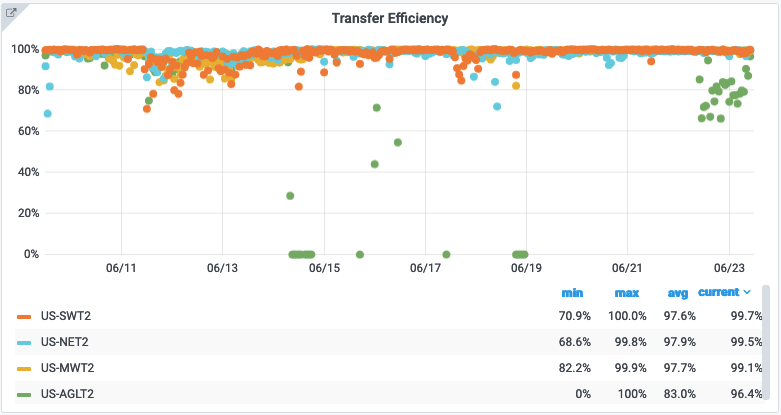

Convener: Fred Luehring (Indiana University (US))

- Various problems and downtimes reduced production over the last 2 weeks.

- AGLT2: Long downtime for network hardware/cabling upgrade

- MWT2: IPv6 troubles with UC enterprise switches

- NET2: Staging / transfer issues?

- SWT2: OU downtime, CPB had utility issues and gatekeeper inefficiencies.

- Sites that do not yet support IPv6 or HTTP-TPC: please provide updates on progress toward supporting them.

-

5

AGLT2

Speakers: Philippe Laurens (Michigan State University (US)), Dr Shawn Mc Kee (University of Michigan (US)), Prof. Wenjing Wu (University of Michigan)

Infrastructure upgrades

- MSU moved first wave of equipment to Data Center on 14 & 15-Jun.

Included VMware and all dCache pool nodes.

Scope of first wave limited by (lack of) availability of Juniper 10/25G switches.

Timing and choice of items was made to synchronize with UM site downtime.

Now have all switches.

Next wave will include about half of MSU T2 WNs.

Last wave(s) need to be synchronized with physics dept servers in neighbor room.

- UM replaced (almost) all rack switches (repurposing a few old ones).

Redundant uplinks at 100G.

Redundant paths to main campus and WAN.

Mix of Cisco (campus) and Dell (in room) brings some limitations.

Also replaced all network cabling, using fibers and transceivers everywhere possible.

Increased KVM coverage.

Increased switch serial port access.

- Despite extensive preparations and pre-labeling and sorting of cables,

and despite the 2 admin, 2 staff and 5 student task force

and despite UM Networking support

and despite working over the weekend

... this took much longer than anticipated.

- Status

Inter-switch infrastructure was finished on Friday.

Bugs and problems were solved over the weekend.

dCache back online on Monday.

Queues in TEST mode on Tuesday.

Queues now back on AUTO on Wednesday (running mostly on MSU WNs)

- Remaining: More cabling of T2 WNs and UM T3

-

6

MWT2

Speakers: David Jordan (University of Chicago (US)), Jess Haney (Univ. Illinois at Urbana Champaign (US)), Judith Lorraine Stephen (University of Chicago (US))

-

7

NET2

Speaker: Prof. Saul Youssef (Boston University (US))

No GGUS tickets.

We've had a couple of power sag incidents in the past two weeks, which have caused some problems. They may have caused a dip in production over the weekend, but it's unclear. Still investigating...

The containerized XRootD 5.2.0 cluster is working. We will likely upgrade to 5.3.0 before going into production, per Brian's notes.

Preparing for new worker nodes.

Preparing to upgrade networking to NESE.

-

8

SWT2

Speakers: Dr Horst Severini (University of Oklahoma (US)), Mark Sosebee (University of Texas at Arlington (US)), Patrick Mcguigan (University of Texas at Arlington (US))

UTA:

SWT2_CPB had an issue with the CE caused by a remote spool directory. In conjunction with minor power work, we drained the cluster and reconfigured the spool directory to be local. So far, so good.

No issues at UTA_SWT2, but we are making progress with the logistics necessary to retire the cluster. We will start by looking at retiring the storage first, using SWT2_CPB_DATADISK as the storage location.

OU:

- XRootD proxy gateway on se1.oscer.ou.edu (OU_OSCER_ATLAS_SE) upgraded to 5.2.0, and HTTP-TPC enabled. HTTP transfers work, but TPC is failing because of a bug in TmpCA file creation that affects XFS file systems (which we have here). Will be fixed in 5.3.0.

- Today is OSCER cluster maintenance, so jobs are held, no jobs currently running. They will automatically start after maintenance is concluded.

-

9

WBS 2.3.3 HPC Operations

Speaker: Lincoln Bryant (University of Chicago (US))

-

WBS 2.3.4 Analysis Facilities

Convener: Wei Yang (SLAC National Accelerator Laboratory (US))

-

10

Analysis Facilities - BNL

Speaker: William Strecker-Kellogg (Brookhaven National Lab)

1. Power cut didn't affect the Tier-3 very much (a 60% reduction in available slots for 2-3 hours), since many slots weren't in use anyway.

2. GPFS vulnerability may require drain & reboot soon

3. Request from DOE / NPP to give a large fraction of HTC resources to a short-deadline analysis until August. Tier-3 will have a 40% reduction in quota through August.

-

11

Analysis Facilities - SLAC

Speaker: Wei Yang (SLAC National Accelerator Laboratory (US))

-

12

Analysis Facilities - Chicago

Speakers: David Jordan (University of Chicago (US)), Ilija Vukotic (University of Chicago (US))

atlas-ml.org - all running fine

UC Analysis Facility

- Everything is racked. All but one machine has power and is networked.

- Will get that machine online and ready to be benchmarked either today or tomorrow (6/23-24).

- Having some temperature concerns and running benchmarks again to stress test temps now that more mitigations have been put in place on the racks.

-

WBS 2.3.5 Continuous Operations

Convener: Ofer Rind (Brookhaven National Laboratory)

- Hit a few XRootd deployment issues with setup at OU; some will be fixed in 5.3 release expected to come out this week.

- Status of BU/SWT2 deployment?

- Mark is working through the OSG Topology settings for sites to check compatibility with CRIC configuration

- VOMS-IAM testing ongoing (John DeStefano)

- Federated DevOps meeting

- Most SLATE squids have been redeployed to use disk space; the BU change is pending, and AGLT2 will follow once the site is fully up and running again

- Further discussion of support procedures - monitoring/alerts; documentation (US ATLAS website); communication (RT, MM)

-

13

US Cloud Operations Summary: Site Issues, Tickets & ADC Ops News

Speakers: Mark Sosebee (University of Texas at Arlington (US)), Xin Zhao (Brookhaven National Laboratory (US))

-

14

Service Development & Deployment

Speakers: Ilija Vukotic (University of Chicago (US)), Robert William Gardner Jr (University of Chicago (US))

XCache

- AGLT2 downtime, getting XCaches back up today

- MWT2: one of the nodes went down and everything still worked (as expected); added another node.

- BNL xcache node used by Doug, off-lined BNL VP queue

- Doing in-depth analysis of the collected XCache usage data

VP

- Running fine

- Prague VP is now running "storage-less".

Alarm & Alert

- Running fine

- Updates as asked by CERN

ServiceX

- Stress tests continuing

- Rucio changes merged

-

15

-

16

AOB

-
