US ATLAS Computing Facility

Facilities Team Google Drive Folder

Zoom information

Meeting ID: 996 1094 4232

Meeting password: 125

Invite link: https://uchicago.zoom.us/j/99610944232?pwd=ZG1BMG1FcUtvR2c2UnRRU3l3bkRhQT09

-

13:00

→

13:10

WBS 2.3 Facility Management News 10m

Speakers: Robert William Gardner Jr (University of Chicago (US)), Dr Shawn Mc Kee (University of Michigan (US))

We have a request from Alessandra to fix our SRR setups. There is a new script you can run to check a specific cloud.

For the US Cloud I did the following:

setupATLAS -3

lsetup adctools

voms-proxy-init -voms atlas

checkSRR.sh US

I will attach the output as a separate file. The US cloud has some issues to fix here.

Next week is Supercomputing

-

13:10

→

13:20

OSG-LHC 10m

Speakers: Brian Hua Lin (University of Wisconsin), Matyas Selmeci

Release (this week)

- 3.5 upcoming

- XRootD 5.3.2

- xrootd-multiuser

- osg-ca-certs-updater (3.5, EL7+EL8)

- 3.6

- gratia-probe

- osg-flock

Token Transition

- Default issuer + subject mappings

# ATLAS production

SCITOKENS /^https:\/\/atlas-auth.web.cern.ch\/,7dee38a3-6ab8-4fe2-9e4c-58039c21d817/ usatlas1

# ATLAS analysis

SCITOKENS /^https:\/\/atlas-auth.web.cern.ch\/,750e9609-485a-4ed4-bf16-d5cc46c71024/ usatlas3

# ATLAS SAM/ETF

SCITOKENS /^https:\/\/atlas-auth.web.cern.ch\/,5c5d2a4d-9177-3efa-912f-1b4e5c9fb660/ usatlas2

- CEs that need to update to HTCondor-CE 5/HTCondor 9 from 3.5 upcoming

- gate02.grid.umich.edu

- gate01.aglt2.org

- gate03.aglt2.org

- gridgk01.racf.bnl.gov

- gridgk02.racf.bnl.gov

- gridgk03.racf.bnl.gov

- gridgk04.racf.bnl.gov

- gridgk06.racf.bnl.gov

- gridgk07.racf.bnl.gov

- gridgk08.racf.bnl.gov

- iut2-gk.mwt2.org

- uct2-gk.mwt2.org

- mwt2-gk.campuscluster.illinois.edu

- atlas-ce.bu.edu

- deepthought.crc.nd.edu

- ouhep0.nhn.ou.edu

- tier2-01.ochep.ou.edu

- grid1.oscer.ou.edu

- gk04.swt2.uta.edu
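For sites checking the default issuer + subject mappings listed under Token Transition, here is a minimal sketch of how such lines could be evaluated. It assumes the identity is matched as the string `issuer,subject` against the regular expression between the slashes, and it uses Python's `re` module as a stand-in for the matcher the CE actually uses. The function names `parse_mapfile` and `map_identity` are hypothetical helpers for illustration only.

```python
import re

# Mapping lines copied from the notes above.
MAPFILE = r"""
# ATLAS production
SCITOKENS /^https:\/\/atlas-auth.web.cern.ch\/,7dee38a3-6ab8-4fe2-9e4c-58039c21d817/ usatlas1
# ATLAS analysis
SCITOKENS /^https:\/\/atlas-auth.web.cern.ch\/,750e9609-485a-4ed4-bf16-d5cc46c71024/ usatlas3
# ATLAS SAM/ETF
SCITOKENS /^https:\/\/atlas-auth.web.cern.ch\/,5c5d2a4d-9177-3efa-912f-1b4e5c9fb660/ usatlas2
"""


def parse_mapfile(text):
    """Return a list of (method, compiled_regex, local_user) rules."""
    rules = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        method, rest = line.split(None, 1)
        pattern, user = rest.rsplit(None, 1)
        # Assumption: the pattern is delimited by a leading and trailing slash.
        if pattern.startswith("/") and pattern.endswith("/"):
            pattern = pattern[1:-1]
        rules.append((method, re.compile(pattern), user))
    return rules


def map_identity(rules, issuer, subject):
    """Return the local user of the first matching SCITOKENS rule, or None."""
    identity = f"{issuer},{subject}"
    for method, regex, user in rules:
        if method == "SCITOKENS" and regex.search(identity):
            return user
    return None


rules = parse_mapfile(MAPFILE)
print(map_identity(rules, "https://atlas-auth.web.cern.ch/",
                   "7dee38a3-6ab8-4fe2-9e4c-58039c21d817"))
```

A token whose subject is not listed maps to no local account in this sketch, which makes it easy to spot a missing or mistyped mapping before changing a production CE.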

-

13:20

→

13:50

Topical Reports

Convener: Robert William Gardner Jr (University of Chicago (US))

-

13:20

TBD 30m

-

13:50

→

13:55

WBS 2.3.1 Tier1 Center 5m

Speakers: Doug Benjamin (Brookhaven National Laboratory (US)), Eric Christian Lancon (Brookhaven National Laboratory (US))

dCache upgrade to the latest golden release. Bug in SRR: dCache had a workaround; to be fixed.

New VP site and VP queue, to avoid injection of the local xcache address that caused problems for user jobs using data from BNLLAKE_DATADISK.

Shigeki will produce a list of questions for a collaboration between BNL SDCC, dCache, ATLAS ADC, Rucio, and FTS developers. The goal is to provide enough metadata to use the TAPE system at the Tier 1 site efficiently.

-

13:55

→

14:15

WBS 2.3.2 Tier2 Centers

Updates on US Tier-2 centers

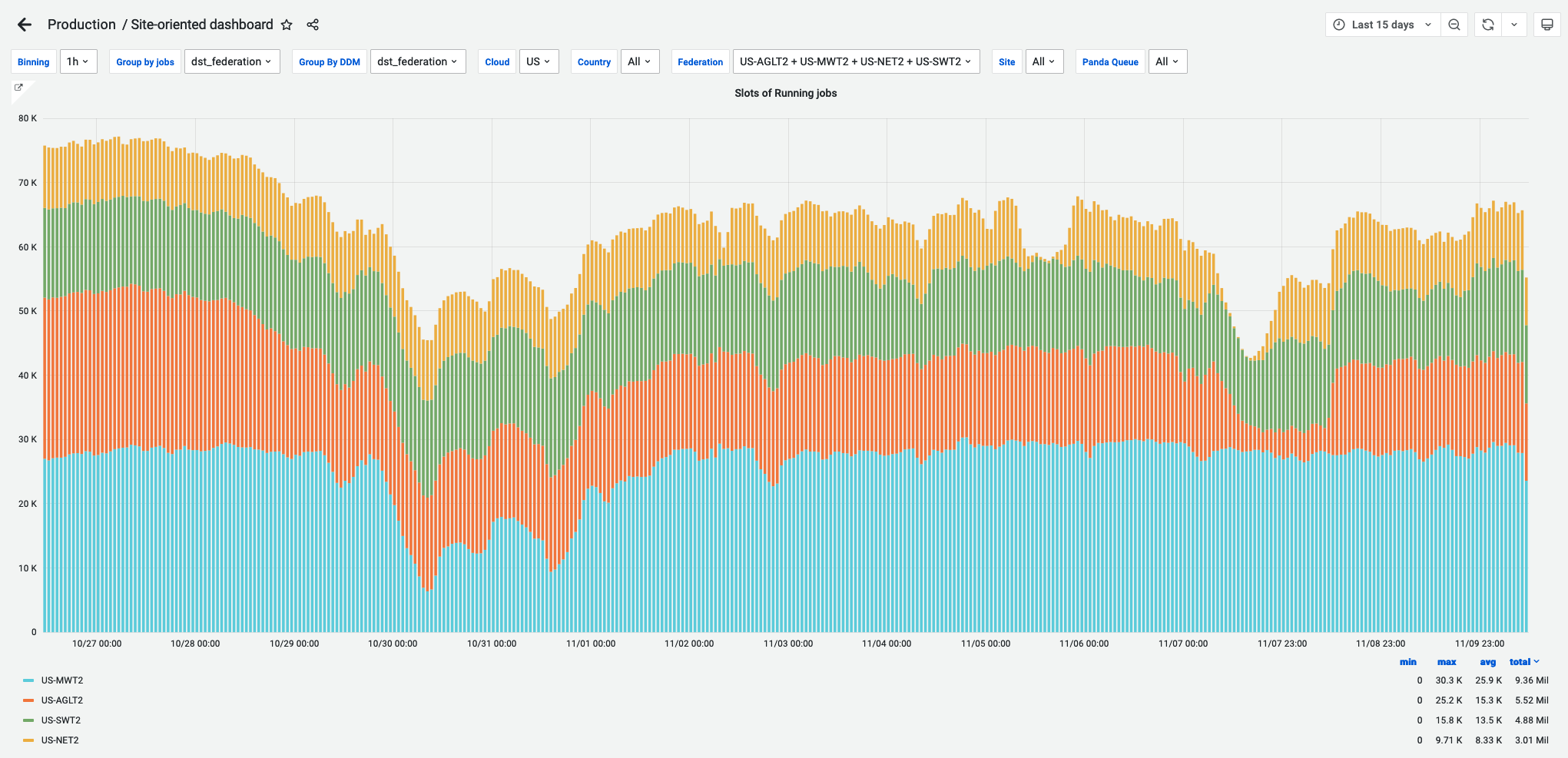

Convener: Fred Luehring (Indiana University (US))

- The past two weeks were OK running but there were problems:

  - AGLT2 grid job / dCache issues over last weekend

    - BOINC did fill empty slots.

    - However the grid prod job failure rate was 11%.

  - MWT2 drained over weekend before last.

    - Issue not understood but unlikely to be a site problem.

  - NET2 high failure rate for prod jobs: 24% over the two weeks.

  - SWT2 OU: XRootD service hangs and 21% prod job failure rate

  - SWT2 CPB: Some XRootD service issues but generally OK

- Rob discovered in talking to Dell that sites ordering C6525 servers (chassis) are getting them this month, but sites ordering R6525 servers (1U) are getting them in January.

- Do we want to change our orders to chassis or ask Dell if we can get better CPUs since we are waiting until January anyway for 1U servers?

- IU is getting its chassis servers this week and Illinois expects to receive theirs soon.

- Could UMich, MSU, UC, BU, and OU please email me what was ordered and the expected delivery dates for the gear?

- Once I have this info I will organize a planning meeting to discuss the next round of purchases this week or early next week. We must urgently start the process of spending down the rest of the equipment money by January 31...

- XRootD continued to have issues. From Wei:

- We still see the problem at OU. I was finally able to reproduce this issue at SLAC.

- For a long time, SLAC ran xrootd 5.3.0 from the xrootd repo, and we never saw the problem. OU runs xrootd 5.3.1 from OSG 3.5 upcoming and saw the problem of TCP CLOSE_WAIT connections accumulating in a short period of time.

- Since Oct 28, SLAC switched to the same version OU uses, and we saw this problem after 9 days. SLAC has since switched to xrootd 5.3.1 from EPEL to see if we can reproduce the problem.

- Please provide updates on IPv6 (NET2, SWT2) and the moves (MWT2, SWT2)

- We need to retire SRM soon.
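For sites that want to watch for the CLOSE_WAIT accumulation Wei describes above, a minimal monitoring sketch follows. It assumes a Linux host, where `/proc/net/tcp` lists one socket per line with the state as a hex code in the fourth column (`08` is CLOSE_WAIT). The helper name `count_close_wait` and the sample text are made up for illustration, and filtering on port 1094 assumes the gateway listens on the default XRootD port.

```python
CLOSE_WAIT = "08"  # hex state code for CLOSE_WAIT in /proc/net/tcp


def count_close_wait(proc_net_tcp_text, local_port=None):
    """Count sockets in CLOSE_WAIT, optionally only for one local port."""
    count = 0
    for line in proc_net_tcp_text.splitlines()[1:]:  # first line is the header
        fields = line.split()
        if len(fields) < 4:
            continue
        local_addr, state = fields[1], fields[3]
        if state != CLOSE_WAIT:
            continue
        # The local address is HEXIP:HEXPORT; the port is hexadecimal.
        port = int(local_addr.rsplit(":", 1)[1], 16)
        if local_port is None or port == local_port:
            count += 1
    return count


# Made-up sample in /proc/net/tcp layout (0446 hex = port 1094, 0016 hex = port 22).
SAMPLE = """  sl  local_address rem_address   st tx_queue rx_queue
   0: 0100007F:0446 00000000:0000 0A 00000000:00000000
   1: 0100007F:0446 0200007F:C350 08 00000000:00000000
   2: 0100007F:0446 0200007F:C351 08 00000000:00000000
   3: 0100007F:0016 0200007F:C352 08 00000000:00000000
   4: 0100007F:0446 0200007F:C353 01 00000000:00000000
"""

print(count_close_wait(SAMPLE, local_port=1094))
```

On a real gateway the input would come from `open("/proc/net/tcp").read()` (and `/proc/net/tcp6` for IPv6). Logging the count every few minutes would show whether it grows steadily, as reported at OU and SLAC.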

-

13:55

AGLT2 5m

Speakers: Philippe Laurens (Michigan State University (US)), Dr Shawn Mc Kee (University of Michigan (US)), Prof. Wenjing Wu (University of Michigan)

Hardware

MSU site installed 3x R740xd2 and is now benchmarking different RAID6 stripe sizes.

Service:

Updated dCache from 6.2.29 to 6.2.32 to address security issues. The update was smooth.

HS06 benchmark:

We ran it on 2 types of CPUs (Intel(R) Xeon(R) Gold 6240R and Intel(R) Xeon(R) CPU E5-2650 v2). There are 2 findings: 1) the new HS06 score is 6-8% higher than the old number (with the same benchmark toolkit but a different kernel and firmware). 2) We compared the HS06 score with and without BOINC jobs running in the background, and having BOINC reduces the score by 1.32-2

Incidents:

Removal of the DBRelease file by ADC caused BOINC job failures and mis-accounting.

Xcache server sl-um-es4 crashed because of one disk failing. We replaced the disk (RAID 0) and recreated the instance for xcache.

On 11/7/2021, from 3am UTC, SrmManager on the dCache head node (head01) started to fail. This caused low file transfer efficiency (60% failure) and Rucio deletion failures (datadisk was almost full). The AGLT2 PanDA queue was also set to test status because more than 60% of jobs were failing, which drained the site to only 20% job slot usage. We first restarted dCache on the head node, which fixed the Rucio deletion failures. Transfer efficiency remained low, and the problem was traced to one xrootd door (dcdmsu01) and one pool node (msufs04). Restarting dCache on both nodes improved transfer efficiency, but we then saw more errors from other pool nodes, so we ended up restarting dCache on all nodes, which eventually solved all the problems.

-

14:00

MWT2 5m

Speakers: David Jordan (University of Chicago (US)), Jess Haney (Univ. Illinois at Urbana Champaign (US)), Judith Lorraine Stephen (University of Chicago (US))

Updated dCache from 6.2.30 to 6.2.33 for a security fix.

There were a handful of 1099 errors on a few workers at UC that all seemed to be failing to download the same file/set. Manually attempting to download the file succeeded.

Found a pilot bug where the pilot tells PanDA the job failed, but the job keeps running on the compute node until completion. Found while troubleshooting the 1099 errors and a spate of "lost heartbeat" errors.

Swing compute, for the move, is being benchmarked. Swing storage is in production.

First physical machine move is scheduled for December 6th.

UIUC:

- finalizing infrastructure changes to support the new compute purchase (shipment ETA this week)

- ICCP (Chit) is rebuilding the OS image for the SLATE node and Lincoln has offered to configure software

-

14:05

NET2 5m

Speaker: Prof. Saul Youssef

SRR reporting endpoint updated => gridftp not a used protocol in CRIC

Bump from used space > total space briefly...

Issue with migration slowing GPFS down too much, resulting in staging errors.

Smooth operations otherwise

Low level of squid failovers...

We're mostly working on preparations for new workers, networking upgrade, NESE Tape preparation and preparing for adding more NESE Ceph storage.
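A brief "used space > total space" bump like the one noted above can be caught with a simple sanity check on the SRR document. The sketch below assumes the report is a JSON document with a `storageservice` object holding a `storageshares` list, and that each share carries `name`, `totalsize`, and `usedsize` fields. That layout is an assumption here and should be checked against the site's actual SRR output. The helper name `find_overfull_shares` and all sample values are hypothetical.

```python
def find_overfull_shares(srr_doc):
    """Return (name, usedsize, totalsize) for shares reporting used > total."""
    # Assumed layout: {"storageservice": {"storageshares": [ {...}, ... ]}}
    shares = srr_doc.get("storageservice", {}).get("storageshares", [])
    problems = []
    for share in shares:
        total = share.get("totalsize")
        used = share.get("usedsize")
        if total is None or used is None:
            continue  # cannot judge a share with missing numbers
        if used > total:
            problems.append((share.get("name", "<unnamed>"), used, total))
    return problems


# Hypothetical sample document; names and sizes are invented.
SAMPLE_SRR = {
    "storageservice": {
        "storageshares": [
            {"name": "EXAMPLE_DATADISK", "totalsize": 1000, "usedsize": 1010},
            {"name": "EXAMPLE_SCRATCHDISK", "totalsize": 500, "usedsize": 120},
        ]
    }
}

for name, used, total in find_overfull_shares(SAMPLE_SRR):
    print(f"{name}: usedsize {used} exceeds totalsize {total}")
```

In practice the document would be loaded with `json.load` from wherever the site publishes its SRR file.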

-

14:10

SWT2 5m

Speakers: Dr Horst Severini (University of Oklahoma (US)), Mark Sosebee (University of Texas at Arlington (US)), Patrick Mcguigan (University of Texas at Arlington (US))

SWT2_CPB:

- System generally running smoothly

- Continue to monitor XRootD on SE gateway for hang-ups

- Adding additional hosts to distribute the load

UTA_SWT2:

- Decommissioning of the storage essentially done

- Working with data center personnel to schedule powering off, disconnecting network, etc.

- Final step is physically moving the hardware back to campus

OU:

- Generally smooth running, except

- XRootD on SE gateway also keeps hanging up

- Continue investigating with Andy, Wei, and Rucio developers

- Some dark data on OU_OSCER_ATLAS_DATADISK, investigating

- Dark data was also responsible for DATADISK being blacklisted for writing, which caused additional job failures because Rucio refused to stage out jobs. Rucio is supposed to still stage out in this case; the developers found a bug, which they are working on.

-

14:15

→

14:20

WBS 2.3.3 HPC Operations 5m

Speakers: Lincoln Bryant (University of Chicago (US)), Rui Wang (Northern Illinois University (US))

- TACC has had good throughput for the last few days

- Cori filesystem has been unstable off and on for the past week

- 20M additional hours added to Cori, which must be used by the end of the year. We need to ramp up the number of prod jobs going to Cori and use the regular priority queue to make sure we use the allocation

- Perlmutter setup continues. No major blockers; we just need to focus on configuration and testing.

-

14:20

→

14:35

WBS 2.3.4 Analysis Facilities

Convener: Wei Yang (SLAC National Accelerator Laboratory (US))

-

14:20

Analysis Facilities - BNL 5m

Speaker: Ofer Rind (Brookhaven National Laboratory)

- Update of BNL shared-T3 documentation in progress.

- Very useful AGC Tools Workshop last week.

- 14:25

-

14:30

Analysis Facilities - Chicago 5m

Speakers: Ilija Vukotic (University of Chicago (US)), Robert William Gardner Jr (University of Chicago (US))

-

14:35

→

14:55

WBS 2.3.5 Continuous Operations

Convener: Ofer Rind (Brookhaven National Laboratory)

- Issue with Rucio prepending xcache to storage URL for jobs accessing data from BNLLAKE datadisk

- Config changed to: ATLAS site = BNL-ATLAS_VP, PanDA site = BNL_VP, PQ = ANALY_BNL_VP, Storage Unit = BNL_VP_SU

- Ilija/Ofer following up to finalize configuration

- Investigating why VP not declared down for dCache upgrade

- Status of XRootD site issues? Update to 5.3.2?

- Planning to test xrootd-standalone at BNL

-

14:35

US Cloud Operations Summary: Site Issues, Tickets & ADC Ops News 5m

Speakers: Mark Sosebee (University of Texas at Arlington (US)), Xin Zhao (Brookhaven National Laboratory (US))

-

14:40

Service Development & Deployment 5m

Speakers: Ilija Vukotic (University of Chicago (US)), Robert William Gardner Jr (University of Chicago (US))

XCache

- All working fine.

- All SLATE-managed instances are on the latest version, 5.3.2

- BNL and LRZ-LMU asked to upgrade

- Issue with RAL as an origin. Debugging with Andy and Matevz

VP

- Working fine

- BNL VP queue moved to a new site (BNL_VP). This was needed due to the introduction of BNLLAKE. User jobs at BNL-ATLAS were having xcache paths prepended when reading from BNLLAKE.

- Oxford advised to move to VP

ServiceX

- Successful debut at the AGC Tools workshop last week.

- More tests. Documentation is being updated.

Rucio

- Still issues with returning closest replicas. Debugging with Martin.

- 14:45

-

14:55

→

15:05

AOB 10m
