
US ATLAS Computing Facility


US/Eastern

Description

Facilities Team Google Drive Folder

Zoom information

Meeting ID: 996 1094 4232

Meeting password: 125

Invite link: https://uchicago.zoom.us/j/99610944232?pwd=ZG1BMG1FcUtvR2c2UnRRU3l3bkRhQT09

13:00 → 13:10

WBS 2.3 Facility Management News (10m)

Speakers: Robert William Gardner Jr (University of Chicago (US)), Dr Shawn Mc Kee (University of Michigan (US))

Hope everyone has recovered from our intense face-to-face meeting at SLAC last week!

We have quite a few areas to work on based on our meeting.

- The Tier-2 procurement plans should be read and commented on by anyone/everyone in the facility: https://drive.google.com/drive/folders/1rxwnJtNOxrfvBj7lCuiL3LZRItnJGaIB?usp=sharing. We need to finalize them by the end of the month.

- We need to develop our mini-challenges and associated milestones for the upcoming DC24

- The load-testing framework needs to be re-run and improved, and quarterly activities need to be created.

- We want to test Virtual Placement (VP) at the largest scale possible. Perhaps one Tier-2 by the end of March 2023, then two by June 2023, and the whole facility by September 2023?

- The work on differentiated storage and storage organization needs to be planned out

- Everyone is encouraged to sign up for lightning talks at future meetings.

Don't forget our R&D and F2F notes:

F2F notes: https://docs.google.com/document/d/1Cb7nLgJKlwy_3twM_PkIzGq7biX6q6YPFwsCdIq0VtU/edit

Facility R&D notes: https://docs.google.com/document/d/1uqHqygcY6Dcg1amPBZcIH0d1x-5kBNvaxjc99UxvlXU/edit

The WLCG DOMA meeting today discussed DC24 (https://indico.cern.ch/event/1226223/). WLCG DOMA will be responsible for gathering information about mini-challenges and milestones, not for imposing or defining them. Interested groups (such as US ATLAS) need to contribute.

Last item: we need to add our demonstrators in advance of the next ATLAS S&C week (January 23, 2023). See links at top of Facility R&D notes for more details.

13:10 → 13:20

OSG-LHC (10m)

Speakers: Brian Hua Lin (University of Wisconsin), Matyas Selmeci

Packages Ready for Testing

OSG 3.6

- Major Components [1]

  - XRootD 5.5.1

    - Fixes a critical issue with XRootD FUSE mounts via xrdfs

  - CVMFS 2.10.0

    - Release notes: https://cvmfs.readthedocs.io/en/2.10/cpt-releasenotes.html

  - HTCondor 10.0.0 [2]

    - Please consult the upgrade guide for major differences: https://htcondor.readthedocs.io/en/latest/version-history/upgrading-from-9-0-to-10-0-versions.html

    - In particular, consult the second section of things to be aware of when upgrading

[2] HTCondor 10.0.0 will not be released until we have a few successful upgrades.

HTCondor

- The November 2022 release of 10.0.0 means that the 9.0 EOL is pushed back to May 2023

- HTCondor 10.2.0 is an EL9 preview release: it will have EL9 support but NOT cgroup support. EL9 cgroup support is targeted for 10.3.0

13:20 → 13:50

Topical Reports

Convener: Robert William Gardner Jr (University of Chicago (US))

13:50 → 13:55

WBS 2.3.1 Tier1 Center (5m)

Speakers: Doug Benjamin (Brookhaven National Laboratory (US)), Eric Christian Lancon (Brookhaven National Laboratory (US))

13:55 → 14:15

WBS 2.3.2 Tier2 Centers

Updates on US Tier-2 centers

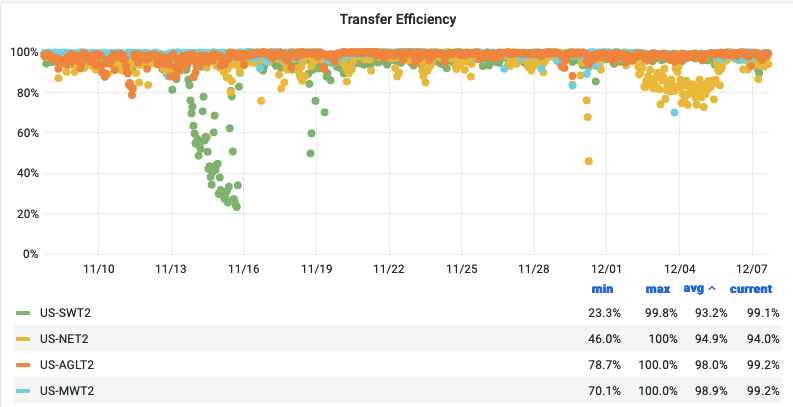

Convener: Fred Luehring (Indiana University (US))

- Good running recently with few problems.

- Legacy NET2 storage stability improved by Augustine (BU). This storage will transfer over to NET2.1.

- MWT2: IU had a serious enterprise-level switch failure that knocked the IU part of MWT2 offline for 4 days.

- A database upgrade at CERN exposed a bug in a PanDA daemon, which turned off most sites for about half a day.

- At next week's 2.3 Management meeting, we will discuss the procurement plans that were made available as the SLAC meeting started.

- The plans are in this Google folder: https://drive.google.com/drive/folders/1rxwnJtNOxrfvBj7lCuiL3LZRItnJGaIB

- We need to strategize about purchase timing so that the gear arrives when needed and we get the most out of our purchasing plans.

- Also discussed Tier-2 milestones at the SLAC meeting:

  - Procurement

  - Next OS

  - Local site

- Discussed a proposal to create an operations plan for each Tier-2 and a global operations plan.

13:55

AGLT2 (5m)

Speakers: Philippe Laurens (Michigan State University (US)), Dr Shawn Mc Kee (University of Michigan (US)), Prof. Wenjing Dronen

Incidents:

Three pools on msufs12 and msufs13 went offline, causing file stage-in errors in jobs. Restarting the 3 pools fixed the problem.

Procurement:

In contact with Dell to get a quote for the compute and storage nodes.

Software:

Next OS and provisioning: deployed CentOS 8 Stream with Cobbler for compute and storage nodes, configured by CFEngine. We are testing different software deployments and configurations on CentOS 8 Stream.

Verified that VMware 7 can use the not-officially-supported MD3460 storage (Dell-customized VMware ISOs are still available on the VMware site). This will help the MSU transition to TrueNAS, since TrueNAS won't be our only storage; thus, upgrading to VMware 7 is not contingent on ordering/receiving a second TrueNAS storage node. iSCSI connectivity is still waiting on configuration.

14:00

MWT2 (5m)

Speakers: David Jordan (University of Chicago (US)), Farnaz Golnaraghi (University of Chicago (US)), Judith Lorraine Stephen (University of Chicago (US))

- UC had a storage node go down for a handful of hours on the morning of 12/5; MWT2 ran through it. Otherwise, UC had smooth running.

- An IU WAN switch failure took IU offline from 11/29 to 12/2.

  - The site came back online on 12/2 at half bandwidth capacity.

- Some trouble with CRIC accepting downtime updates from Topology: only one of the HTCondor central managers (CMs) had its extended downtime updated. The squid and the second CM came back online after the initial downtime window ended.

- UIUC drained when the IU switch went down. Once we set the IU gatekeepers offline, jobs filled back up.

14:05

NET2 (5m)

Speakers: Eduardo Bach (University of Massachusetts (US)), Rafael Antonio Lopez, William Axel Leight (University of Massachusetts Amherst)

- We've heard back from BU this Monday and our team has the clearance to begin the process of moving the hardware. We are hoping to have some equipment removal started by the end of next week.

- On the network front, our IT team is configuring the internal network; the connection to ESnet comes right after that.


14:10

SWT2 (5m)

Speakers: Dr Horst Severini (University of Oklahoma (US)), Mark Sosebee (University of Texas at Arlington (US)), Patrick Mcguigan (University of Texas at Arlington (US))

- Good running recently with few problems.

14:15 → 14:20

WBS 2.3.3 HPC Operations (5m)

Speakers: Lincoln Bryant (University of Chicago (US)), Rui Wang (Argonne National Laboratory (US))

- Focus for the next few weeks is on completing the allocation at NERSC. We have 100K hours of our original allocation remaining, plus we were given another 70K on top of that (170K total remaining)

- Now running Cori jobs in the Premium queue (2x charge factor)

- We are running as many jobs as we can at Perlmutter (40K-80K cores), although the job failure rate is high (30% or so). This needs further investigation.

- Rui is working on GPU jobs at NERSC; Tadashi gave us a configuration hint for getting analysis/prun jobs going there.

- We started investigating VP at NERSC: running XCache on the login nodes (Lisa's recommendation, as PSCRATCH is not available on the DTNs), plus heartbeats and monitoring. Everything is started manually for now (no containers, just shell scripts). It is TBD whether this would scale.

- We now have a TACC allocation for 330K hours and 3K GPU hours. Building a new, clean configuration at TACC. Investigating Singularity+CVMFSExec to bring our own CVMFS and run any job.

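As a rough illustration of the NERSC allocation arithmetic above, the sketch below computes the remaining balance and how many raw hours it buys at a given charge factor. It assumes, as a simplification, that charged hours equal raw hours multiplied by the queue's charge factor (2x for the Cori Premium queue, per the note above, and 1x otherwise); any other machine-specific factors are ignored, and the function names are hypothetical.

```python
def remaining_allocation(original_left_hours: float, extra_hours: float) -> float:
    """Total hours left: unused original allocation plus the additional grant."""
    return original_left_hours + extra_hours


def raw_hours_available(balance_hours: float, charge_factor: float) -> float:
    """Raw hours that can run before the balance is exhausted.

    Simplifying assumption: charged hours = raw hours * charge_factor.
    """
    if charge_factor <= 0:
        raise ValueError("charge_factor must be positive")
    return balance_hours / charge_factor


# Figures from the minutes: 100K hours left of the original allocation plus 70K extra.
balance = remaining_allocation(100_000, 70_000)
premium_raw = raw_hours_available(balance, 2.0)  # Premium queue, 2x charge factor
regular_raw = raw_hours_available(balance, 1.0)  # assumed 1x for comparison

print(f"Remaining balance: {balance:,.0f} h")
print(f"Raw hours at 2x (Premium): {premium_raw:,.0f} h")
print(f"Raw hours at 1x: {regular_raw:,.0f} h")
```

Under this simplification, running everything in Premium halves the raw hours the 170K balance buys; presumably the trade-off is accepted to speed up completing the allocation.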

14:20 → 14:35

WBS 2.3.4 Analysis Facilities

Conveners: Ofer Rind (Brookhaven National Laboratory), Wei Yang (SLAC National Accelerator Laboratory (US))

14:20

Analysis Facilities - BNL (5m)

Speaker: Ofer Rind (Brookhaven National Laboratory)

- IRIS-HEP/AGC Demo Day a week from Friday: https://indico.cern.ch/event/1218004/

- Doug has been testing Dask at BNL and we are coordinating with Matt Feickert on his presentation


14:25

Analysis Facilities - SLAC (5m)

Speaker: Wei Yang (SLAC National Accelerator Laboratory (US))

14:30

Analysis Facilities - Chicago (5m)

Speakers: Fengping Hu (University of Chicago (US)), Ilija Vukotic (University of Chicago (US))

- Added batch queue status monitoring to the portal

  - Shows the number of running jobs, used cores, and pending and held jobs

- Lately, memory has been used up before cores. The Prometheus monitoring (not currently available in the portal) indicates that in most cases the actual memory usage is much less than the requested memory. Some user engagement is needed, and we will also improve the monitoring to help users understand the status better.

- Hardware issue:

  - A node (c010) had a fan issue; reseating the fan cage and cables solved the problem.

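The observation that requested memory far exceeds actual usage can be quantified per job. The sketch below is a minimal illustration, assuming per-job requested and peak-used memory (in MB) have already been exported from the monitoring (for example, from Prometheus or HTCondor job records). The `JobMemory` record, the helper functions, and the 50% threshold are hypothetical choices, not the facility's actual tooling.

```python
from dataclasses import dataclass


@dataclass
class JobMemory:
    """Hypothetical per-job record: requested vs. peak-used memory, in MB."""
    job_id: str
    requested_mb: float
    used_mb: float


def utilization(job: JobMemory) -> float:
    """Fraction of the requested memory the job actually used (0.0 if nothing requested)."""
    return job.used_mb / job.requested_mb if job.requested_mb > 0 else 0.0


def over_requesters(jobs: list[JobMemory], threshold: float = 0.5) -> list[JobMemory]:
    """Jobs that used less than `threshold` of their request: candidates for user engagement."""
    return [j for j in jobs if utilization(j) < threshold]


def idle_requested_mb(jobs: list[JobMemory]) -> float:
    """Total memory reserved by the scheduler but not used (requested minus used, floored at 0)."""
    return sum(max(j.requested_mb - j.used_mb, 0.0) for j in jobs)


if __name__ == "__main__":
    # Illustrative values only, not measurements from the facility.
    sample = [
        JobMemory("job-a", requested_mb=8000, used_mb=1500),
        JobMemory("job-b", requested_mb=4000, used_mb=3600),
        JobMemory("job-c", requested_mb=16000, used_mb=2000),
    ]
    for j in over_requesters(sample):
        print(f"{j.job_id}: used {utilization(j):.0%} of requested memory")
    print(f"Reserved but unused: {idle_requested_mb(sample):,.0f} MB")
```

Summing the reserved-but-unused memory gives a single number that could be shown in the portal alongside the batch queue status, which may make the "memory before cores" effect easier for users to see.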

14:35 → 14:55

WBS 2.3.5 Continuous Operations

Convener: Ofer Rind (Brookhaven National Laboratory)

- WLCG DOMA general meeting earlier today: https://indico.cern.ch/event/1226223/

  - Targets and milestones for DC24 are still under discussion

  - Scope and schedules for mini-challenges will be left to the sites/regions but need planning/coordination

- Expanding effort on testing/deployment of the tape REST API

- Ongoing investigation of issues with HammerCloud (HC) tests for VP queues

  - Queues are under manual override temporarily while this is being addressed

14:35

US Cloud Operations Summary: Site Issues, Tickets & ADC Ops News (5m)

Speakers: Mark Sosebee (University of Texas at Arlington (US)), Xin Zhao (Brookhaven National Laboratory (US))

14:40

Service Development & Deployment (5m)

Speakers: Ilija Vukotic (University of Chicago (US)), Robert William Gardner Jr (University of Chicago (US))

14:45


14:55 → 15:05

AOB (10m)
