Color code: (critical, news during the meeting: green, news from this week: blue, news from last week: purple, no news: black)

High priority framework topics:

- Problem at end of run that produces lots of error messages and breaks many calibration runs: https://alice.its.cern.ch/jira/browse/O2-3315

- Unfortunately, online there are still errors after fixing those that are seen in the FST (e.g. partition 2e5XVwXYwvX).

- After a recent O2 update, these messages reappeared in the FST as well. At least this case can be reproduced locally.

- Postponed until after the standalone multi-threading works.

- Fix START-STOP-START for good

- Multi-threaded pipeline still not working in FST / sync processing; it works only in the standalone benchmark.

- Rebased my original PR to use the DPL multi-threading, started to adapt it for standalone multi-threading: https://github.com/AliceO2Group/AliceO2/pull/11918

- Problem with QC topologies with expendable tasks. For to-do items see: https://alice.its.cern.ch/jira/browse/QC-953 - Status?

- Problem in QC where we collect messages in memory while the run is stopped: https://alice.its.cern.ch/jira/browse/O2-3691

- Tests ok, will be deployed after HI and then we see.

- Switch 0xdeadbeef handling from on-the-fly creating dummy messages for optional messages, to injecting them at readout-proxy level.

- Will be steered by a command line option, since it should not be active for calib workflows.

- In any case the right thing to do. Less failure-prone, and will solve several issues, and particularly allow multiple processes to subscribe to raw data.

- Needed for standalone multi-threading.

- Eventually, will require all EPN and FLP workflows that process raw data to add a command line option to the readout proxy.

- In the meantime, we enable it only for EPNs, remove the optional flag only for the TPC GPU reco, and leave the rest as is. Should be backward compatible, and implies minimum changes during HI.
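The idea of injecting placeholders at the proxy instead of creating them on the fly downstream can be pictured with a minimal sketch. This is not O2 code: the function `inject_missing`, the `enabled` flag standing in for the command line option, and the message layout are all hypothetical, and the placeholder is assumed to carry 0xDEADBEEF as a marker value.

```python
DEADBEEF = 0xDEADBEEF  # marker carried by placeholder messages (assumption of this sketch)


def inject_missing(received, expected, enabled=True):
    """Return the messages of one time frame, with placeholders for missing inputs.

    received: dict mapping input name -> payload (bytes)
    expected: iterable of input names every consumer should see
    enabled:  models the proposed command line option; False leaves the data untouched
    """
    out = {name: {"payload": payload, "marker": None}
           for name, payload in received.items()}
    if not enabled:
        return out  # e.g. calib workflows, where injection should stay off
    for name in expected:
        if name not in out:
            # Inject an empty placeholder once, at the proxy, so that every
            # subscriber downstream sees the same complete set of inputs.
            out[name] = {"payload": b"", "marker": DEADBEEF}
    return out
```

Because the placeholder exists before the data fans out, several processes can subscribe to the same raw data without each having to create its own dummy message.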

Other framework tickets:

- TOF problem with receiving condition in tof-compressor: https://alice.its.cern.ch/jira/browse/O2-3681

- Grafana metrics: Might want to introduce additional rate metrics that subtract the header overhead to have the pure payload: low priority.

- Backpressure reporting when there is only 1 input channel: no progress.

- Stop entire workflow if one process segfaults / exits unexpectedly. Tested again in January, still not working despite some fixes. https://alice.its.cern.ch/jira/browse/O2-2710

- https://alice.its.cern.ch/jira/browse/O2-1900 : FIX in PR, but has side effects which must also be fixed.

- https://alice.its.cern.ch/jira/browse/O2-2213 : Cannot override debug severity for tpc-tracker

- https://alice.its.cern.ch/jira/browse/O2-2209 : Improve DebugGUI information

- https://alice.its.cern.ch/jira/browse/O2-2140 : Better error message (or a message at all) when input missing

- https://alice.its.cern.ch/jira/browse/O2-2361 : Problem with 2 devices of the same name

- https://alice.its.cern.ch/jira/browse/O2-2300 : Usage of valgrind in external terminal: The testcase is currently causing a segfault, which is an unrelated problem and must be fixed first. Reproduced and investigated by Giulio.

- Found a reproducible crash (while fixing the memory leak) in the TOF compressed-decoder at workflow termination, if the wrong topology is running. Not critical, since it is only at the termination, and the fix of the topology avoids it in any case. But we should still understand and fix the crash itself. A reproducer is available.

- Support in DPL GUI to send individual START and STOP commands.

- Problem I mentioned last time with non-critical QC tasks and DPL CCDB fetcher is real. Will need some extra work to solve it. Otherwise non-critical QC tasks will stall the DPL chain when they fail.

Global calibration topics:

- TPC IDC workflow problem - no progress.

- TPC has issues with SAC workflow. Need to understand if this is the known long-standing DPL issue with "Dropping lifetime::timeframe" or something else.

- Even with latest changes, difficult to ensure guaranteed calibration finalization at end of global run (as discussed with Ruben yesterday).

- After discussion with Peter, Giulio this morning: We should push for 2 additional states in the state machine at the end of run between RUNNING and READY:

- DRAIN: For all but O2, the transition RUNNING --> FINALIZE is identical to what we do in STOP: RUNNING --> READY at the moment. I.e. no more data will come in then.

- O2 could finalize the current TF processing with some time out, where it stops processing incoming data, and at EndOfStream trigger the calibration postprocessing.

- FINALIZE: No more data is guaranteed to come in, but the calibration could still be running. So we leave FMQ channels open, and have a time out to finalize the calibration. If input proxies have not yet received the EndOfStream, they will inject it to trigger the final calibration.

- This would require changes in O2, DD, ECS, FMQ, but all changes except for in O2 should be trivial, since all other components would not do anything in these states.

- Started to draft a document, but want to double-check it will work out this way before making it public.
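A minimal sketch of the proposed end-of-run sequence, assuming the intended order is RUNNING, DRAIN, FINALIZE, READY (the notes do not spell the order out, so this is one reading of the proposal). The names `State`, `NEXT`, `o2_action` and `stop_sequence` are hypothetical and not part of FairMQ, ECS or O2.

```python
from enum import Enum


class State(Enum):
    READY = "READY"
    RUNNING = "RUNNING"
    DRAIN = "DRAIN"
    FINALIZE = "FINALIZE"


# Assumed end-of-run path; today STOP goes directly RUNNING -> READY.
NEXT = {
    State.READY: State.RUNNING,
    State.RUNNING: State.DRAIN,
    State.DRAIN: State.FINALIZE,
    State.FINALIZE: State.READY,
}


def o2_action(state, eos_received):
    """What O2 would do in each state; all other components do nothing new."""
    if state is State.DRAIN:
        return "finish current TF with timeout, stop processing incoming data"
    if state is State.FINALIZE:
        if not eos_received:
            return "input proxy injects EndOfStream, then finalize calibration with timeout"
        return "finalize calibration with timeout, channels stay open"
    return "no change"


def stop_sequence(start=State.RUNNING):
    """List the states visited from `start` until READY is reached."""
    path = [start]
    while path[-1] is not State.READY:
        path.append(NEXT[path[-1]])
    return path
```

For example, `[s.name for s in stop_sequence()]` gives `['RUNNING', 'DRAIN', 'FINALIZE', 'READY']`.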

- Problem when calibration aggregators suddenly receive an endOfStream in the middle of the run and stop processing:

- Happens since ODC 0.78, which checks device states during running: If one device fails, it sends sigkill to all devices of the collection, and FairMQ takes the shortest way through the state machine to EXIT, which involves a STOP transition, which then sends the EoS. The DPL input proxy on the calib node should in principle check that it has received EoS from all nodes, but for some reason that is not working. Todo:

- Fix the input proxy to correctly count the number of EoS.

- Change the device behavior such that we do not send EoS in case of sigkill when running on FLP/EPN.
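The first todo item amounts to forwarding EoS only once every expected sender has delivered one. A minimal sketch of that bookkeeping, not the actual DPL input proxy: the class `EosCounter` and the source names are hypothetical, and it assumes the proxy knows the set of expected senders up front.

```python
class EosCounter:
    """Track EndOfStream per source; report completion only when all have sent it."""

    def __init__(self, expected_sources):
        self.expected = set(expected_sources)
        self.seen = set()

    def on_eos(self, source):
        """Record an EoS from `source`; return True once all expected sources sent one."""
        if source not in self.expected:
            raise ValueError(f"EoS from unexpected source: {source}")
        # A set makes repeated EoS from the same source harmless.
        self.seen.add(source)
        return self.seen == self.expected
```

With two expected sources, a single EoS (or the same one repeated) returns False, so one failing node alone would not stop the aggregator.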

CCDB:

- Performance issues accessing https://alice-ccdb.cern.ch by Matteo and me.

- Merged in alidist, PR to bump libwebsockets stuck due to some macOS / OpenSSL issues. Giulio is checking.

- JAlien-ROOT will switch to supporting libuv, so libwebsockets can be used by DPL and JAlien-ROOT with one libuv loop.

Sync reconstruction:

- Problem with ccdb-test.cern.ch in SYNTHETICS due to network issue. Propose not to change anything, and we just live with possible problems in SYNTHETICS, but we keep testing the CCDB infrastructure in SYNTHETICS. Could ask the crew not to call oncalls outside working hours for this reason.

- Last night: spontaneous pp reference at low IR. Several issues appeared; the only thing that concerns PDP software is some crashing/slow calib tasks.

- HI will start on the weekend presumably, ramp up to full rate expected for Tuesday.

Async reconstruction:

- Remaining oscillation problem: GPUs sometimes get stalled for a long time, up to 2 minutes. No progress.

- Checking 2 things: does the situation get better without GPU monitoring? --> Inconclusive

- We can use increased GPU process priority as a mitigation, but it does not fully fix the issue.

- Performance issue seen in async reco on MI100, need to investigate.

EPN major topics:

- Fast movement of nodes between async / online without EPN expert intervention.

- 2 goals I would like to set for the final solution:

- It should not be needed to stop the SLURM schedulers when moving nodes, there should be no limitation for ongoing runs at P2 and ongoing async jobs.

- We must not lose which nodes are marked as bad while moving.

- Interface to change SHM memory sizes when no run is ongoing. Otherwise we cannot tune the workflow for both Pb-Pb and pp: https://alice.its.cern.ch/jira/browse/EPN-250

- Lubos to provide interface to query current EPN SHM settings - ETA July 2023, Status?

- Improve DataDistribution file replay performance, currently cannot do faster than 0.8 Hz, cannot test MI100 EPN in Pb-Pb at nominal rate, and cannot test pp workflow for 100 EPNs in FST since DD injects TFs too slowly. https://alice.its.cern.ch/jira/browse/EPN-244 NO ETA

- Interface to communicate list of active EPNs to epn2eos monitoring: https://alice.its.cern.ch/jira/browse/EPN-381

- Improved to also integrate calib nodes; monitoring still seems to work fine without mail spam.

- DataDistribution distributes data round-robin in absence of backpressure, but it would be better to do it based on buffer utilization, and give more data to MI100 nodes. Now, we are driving the MI50 nodes at 100% capacity with backpressure, and then only backpressured TFs go on MI100 nodes. This increases the memory pressure on the MI50 nodes, which is anyway a critical point. https://alice.its.cern.ch/jira/browse/EPN-397
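The difference between the two policies in the last item can be shown with a minimal sketch. It is not DataDistribution code: `round_robin`, `pick_node`, the node names and the free-buffer numbers are hypothetical.

```python
from itertools import cycle, islice


def round_robin(nodes):
    """Current behaviour without backpressure: nodes take turns regardless of load."""
    return cycle(nodes)


def pick_node(free_buffer_fraction):
    """Proposed behaviour: send the next TF to the node with the most free buffer.

    free_buffer_fraction: dict mapping node name -> free fraction in [0, 1].
    Ties are broken alphabetically by node name.
    """
    return max(sorted(free_buffer_fraction), key=free_buffer_fraction.get)


if __name__ == "__main__":
    print(list(islice(round_robin(["mi50-a", "mi100-a"]), 4)))
    # A nearly full MI50 node is skipped in favour of the emptier MI100 node.
    print(pick_node({"mi50-a": 0.05, "mi100-a": 0.60}))
```

Under the utilization-based policy the faster nodes drain their buffers sooner and therefore receive more TFs, without waiting for the slower nodes to hit backpressure first.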

Other EPN topics:

TPC Raw decoding checks:

- Add additional check on DPL level, to make sure firstOrbit received from all detectors is identical, when creating the TimeFrame first orbit.
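A minimal sketch of such a check, assuming the firstOrbit values of one TimeFrame are available per detector. The function `timeframe_first_orbit` and the dict layout are hypothetical, not the DPL interface.

```python
def timeframe_first_orbit(first_orbits):
    """Return the common firstOrbit of a TimeFrame, or raise if detectors disagree.

    first_orbits: dict mapping detector name -> firstOrbit reported by that detector.
    """
    if not first_orbits:
        raise ValueError("no firstOrbit received from any detector")
    values = set(first_orbits.values())
    if len(values) != 1:
        # Report all values so the offending detector can be identified.
        raise ValueError(f"inconsistent firstOrbit across detectors: {first_orbits}")
    return values.pop()
```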

Full system test issues:

Topology generation:

- Should test to deploy topology with DPL driver, to have the remote GUI available. Status?

Software deployment at P2:

- Deployed several software updates at P2 with cherry-picks:

- Make several extra env variables defaults.

- Fixes for ITS/MFT decoding

- Fix some QC histograms

- Fix TPC vdrift calib

- Add switch to disable TPC PID without disabling dEdx

- New TPC error parameterization.

- TPC trigger decoding (handling and writing to CTF still missing)

- Speed up TPC processing by avoiding reupload of calib objects to GPU

- Next updates to be included:

- 0xDEADBEEF handling in readout-proxy

- Full TPC trigger handling and propagation to CTF

- Checking cuts for TPC clusterization, possibility to tune in case too high data rate

- TPC Standalone multi-threading

- DPL fix for incorrect dropping lifetime::timeframe.

QC / Monitoring / InfoLogger updates:

- TPC has opened first PR for monitoring of cluster rejection in QC. Trending for TPC CTFs is work in progress. Ole will join from our side, and plan is to extend this to all detectors, and to include also trending for raw data sizes.

AliECS related topics:

- Extra env var field still not multi-line by default.

GPU ROCm / compiler topics:

- Found a new HIP internal compiler error when compiling without optimization: -O0 makes the compilation fail with an unsupported LLVM intrinsic. Reported to AMD.

- Found a new miscompilation with -ffast-math enabled in looper following; for now disabled -ffast-math.

- Must create new minimal reproducer for compile error when we enable LOG(...) functionality in the HIP code. Check whether this is a bug in our code or in ROCm. Lubos will work on this.

- Another compiler problem with template treatment, found by Ruben. Have a workaround for now. Need to create a minimal reproducer and file a bug report.

- Debugging the calibration, debug output triggered another internal compiler error in HIP compiler. No problem for now since it happened only with temporary debug code. But should still report it to AMD to fix it.

- New compiler regression in ROCm 5.6, need to create testcase and send to AMD.

- ROCm 5.7 released, didn't check yet. AMD MI50 will go end of maintenance in Q2 2024. Checking with AMD if the card will still be supported by future ROCm versions.

TPC GPU Processing:

- Bug in TPC QC with MC embedding, TPC QC does not respect sourceID of MC labels, so confuses tracks of signal and of background events.

- New TPC Cluster error parameterization merged.

- TPC Trigger extraction from raw data merged.

- Online runs at low IR / low energy observe weird number of clusters per track statistics.

- Problem was due to incorrect vdrift, though it is not clear why this breaks tracking so badly, being investigated.

- Ruben reported an issue with global track refit, which sometimes does not produce the TPC track fit results. To be investigated.

ANS Encoding:

- Tested new ANS coding in SYNTHETIC run at P2 with Pb-Pb MC. Actually slightly faster than before, and 14% better compression with on-the-fly dictionaries. (Though it should be noted that 5% better compression is already achieved in the compatibility mode, i.e. is from general improvements not dictionaries. And the dictionaries used for the reference were old and not optimal.) In any case, very good progress, and generally superior to old version.

Issues currently lacking manpower, waiting for a volunteer:

- For debugging, it would be convenient to have a proper tool that (using FairMQ debug mode) can list all messages currently in the SHM segments, similarly to what I had hacked together for https://alice.its.cern.ch/jira/browse/O2-2108

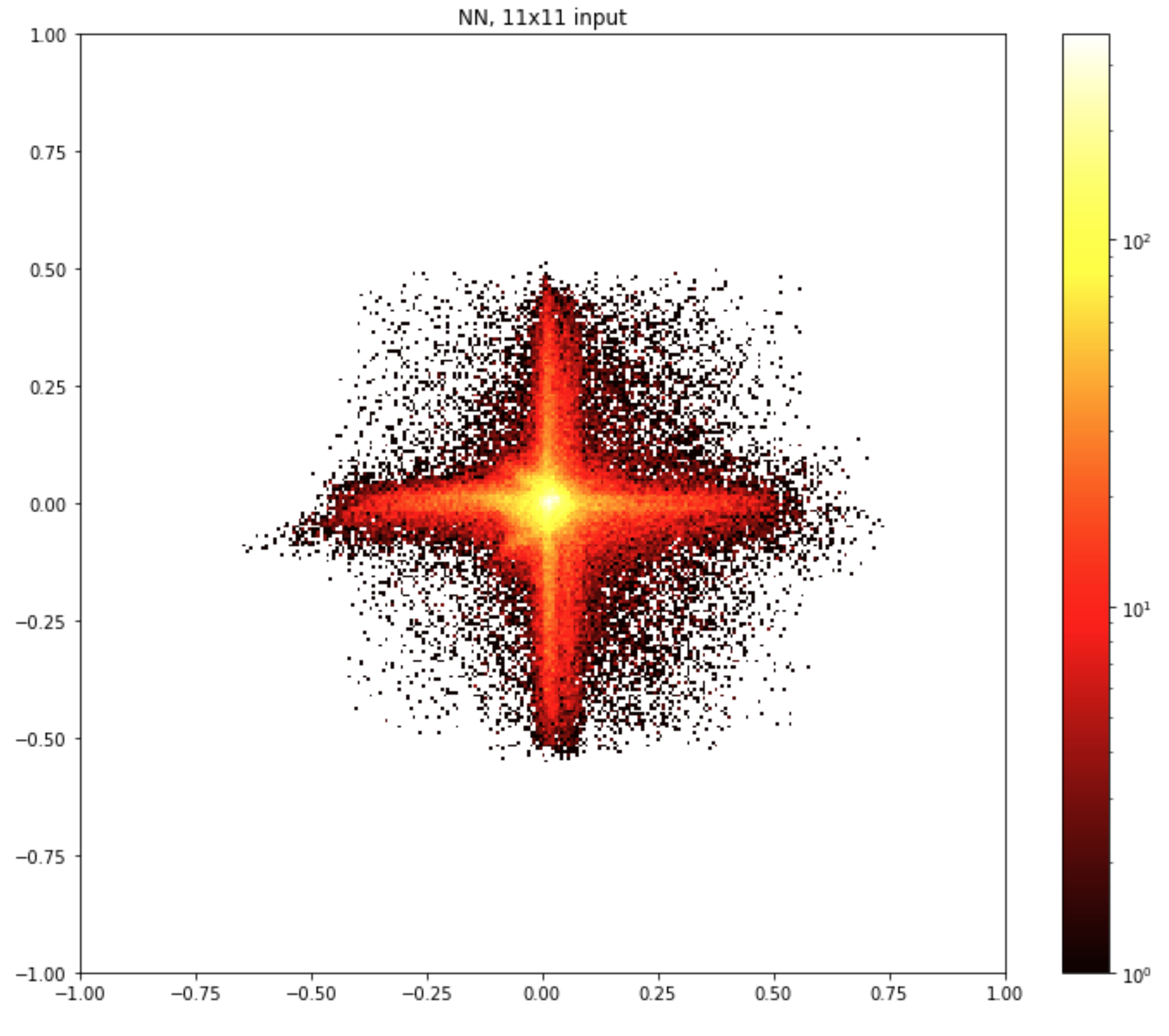

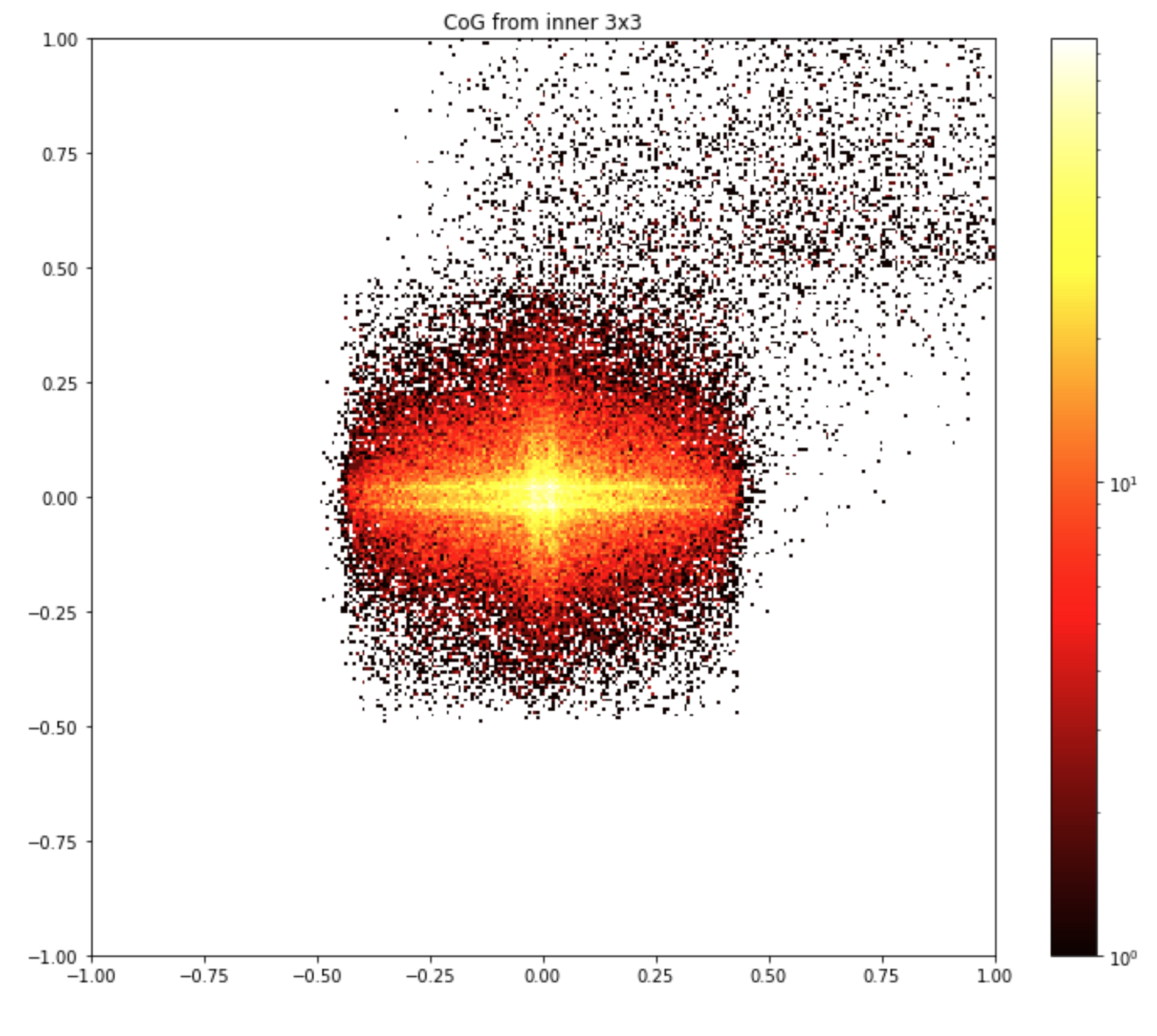

- Redo / Improve the parameter range scan for tuning GPU parameters. In particular, on the AMD GPUs, since they seem to be affected much more by memory sizes, we have to use test time frames of the correct size, and we have to separate training and test data sets.
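The separation of training and test data could look like the following minimal sketch. It is not the existing tuning script: `split_timeframes`, `scan` and the `measure(param, tf)` callback (which would return the processing time of one time frame for one parameter value) are hypothetical.

```python
import random


def split_timeframes(timeframes, test_fraction=0.3, seed=0):
    """Split time frames into disjoint training and test sets (reproducible via seed)."""
    tfs = list(timeframes)
    if len(tfs) < 2:
        raise ValueError("need at least 2 time frames to split")
    random.Random(seed).shuffle(tfs)
    n_test = max(1, int(len(tfs) * test_fraction))
    return tfs[n_test:], tfs[:n_test]


def scan(params, train, test, measure):
    """Pick the parameter with the lowest mean time on `train`, then score it on `test`."""
    def mean_time(p, tfs):
        return sum(measure(p, tf) for tf in tfs) / len(tfs)

    best = min(params, key=lambda p: mean_time(p, train))
    # The test score is computed only on time frames never used for the choice.
    return best, mean_time(best, test)
```

The time frames passed in should already have the correct size, since the notes point out that the AMD GPUs are much more sensitive to memory sizes.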