US ATLAS Computing Facility

Facilities Team Google Drive Folder

Zoom information

Meeting ID: 996 1094 4232

Meeting password: 125

Invite link: https://uchicago.zoom.us/j/99610944232?pwd=ZG1BMG1FcUtvR2c2UnRRU3l3bkRhQT09

-

-

13:00

→

13:05

WBS 2.3 Facility Management News 5m Speakers: Robert William Gardner Jr (University of Chicago (US)), Dr Shawn Mc Kee (University of Michigan (US))

Forthcoming meetings

- ATLAS S&C week, Feb 5-9, https://indico.cern.ch/event/1340782/

- ADC @ S&C week (Sites Jamboree), Feb 6-8, https://indico.cern.ch/event/1355529/

- Tier2 Technical, Facility R&D, Joint WBS 2.3/5, and Facility Coordination next week all cancelled due to S&C.

- Facility Topical in two weeks (Feb 14). Start a default rotation through L3 areas. Last talk was from 2.3.1. Next: topical presentation from 2.3.2. Can be superseded as additional topics emerge.

- New 2.3/5 (and RAC) schedule:

- Wednesday, February 7th: canceled for S&C week

- Wednesday, February 21st: 2.3/5 and RAC

- Wednesday, March 6th: 2.3/5

- Wednesday, March 20th: 2.3/5 and RAC

- Wednesday, April 3rd: 2.3/5

- Wednesday, April 17th: 2.3/5 and RAC

- etc.

- Mar 18-22: US ATLAS T1 jamboree @ BNL

- May 13-17: HSF/WLCG workshop @ DESY

- Jun 3-7: 78th ATLAS S&C week @ Oslo

- Jul 8-12: HTC 24

- July 15-17: US ATLAS Summer Workshop at U Washington (including WBS5 training, and a co-located IRIS-HEP training event)

- July 19-20: Scrubbing

-

13:05

→

13:10

OSG-LHC 5m Speakers: Brian Hua Lin (University of Wisconsin), Matyas Selmeci

Release (next week?)

- HTCondor 23.4.0 and 23.0.4

- We are planning on making changes to how we use the micro version number.

- The current proposal is to bump the micro version for every release candidate, so you may see larger jumps in the final version numbers available in the release repositories.

- XRootD 5.6.6

- This may get punted, depending on whether or not we can get our integration tests to cooperate

- voms-2.10.0-0.31.rc3.1

- We do not currently ship VOMS for EL9 and had to bump the version to get builds working

- We updated the version across all operating systems

- We need testers!

IRIS-HEP Y6Q4 (Aug 2024) milestones

- ARM work (host deployment, forays into OSG Software builds/tests)

- Retire OSG 3.6 and EL7

- Kubernetes Gratia Probe


-

13:10

→

13:25

WBS 2.3.1: Tier1 Center Convener: Alexei Klimentov (Brookhaven National Laboratory (US))

-

13:10

Infrastructure and Compute Farm 5m Speaker: Thomas Smith

-

13:15

Storage 5m Speaker: Vincent Garonne (Brookhaven National Laboratory (US))

- Operational interventions including HW and pool failures

- ATLAS utilizes tokens for transfers, and DATADISK and SCRATCHDISK have been migrated to the CERN FTS Pilot instance

- USATLAS storage upgraded to dCache 9.2.6 + java-17 (1/22)

- A temporary workaround was initially put in place to overcome the issue. More recently, refreshing the state of the components appears to have helped, as the issue now seems to occur less often.

- Issue found and reported to www.dcache.org (#10575): configuration instabilities observed with respect to WAN TPC writes.

- Configuration for RHEL8 (HPSS, dCache pools) is ready. New data servers have just been delivered and are about to start commissioning, etc.

- 1000 LTO8 tapes (= 12 PB) purchased and delivered to BNL receiving

- Procurement process of FY24 storage for ATLAS started
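The 12 PB figure quoted for the tape purchase is consistent with the native (uncompressed) LTO-8 cartridge capacity of 12 TB. A minimal sketch of that arithmetic, assuming decimal units (1 PB = 1000 TB); the function and constant names are illustrative only:

```python
# Native (uncompressed) capacity of one LTO-8 cartridge, in TB.
LTO8_NATIVE_TB = 12


def tape_capacity_pb(n_tapes: int, tb_per_tape: float = LTO8_NATIVE_TB) -> float:
    """Total native capacity in PB, using decimal units (1 PB = 1000 TB)."""
    return n_tapes * tb_per_tape / 1000


# 1000 cartridges, as in the purchase noted above.
print(tape_capacity_pb(1000))
```

Usable capacity in practice depends on compression and on how the tapes are filled, so this is only the nominal figure.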

- Data challenge 24 preparation:

- Stress-testing and performance evaluation of USATLAS storage (tuning, etc.) with dedicated FTS servers

- Joint USATLAS-USCMS site transfer stress testing (1/24):

- Achieved WAN READ throughput surpassing 400 Gb/s.

- No issues were identified on core, doors, and pools servers regarding CPU, memory, or load. Traffic distribution among the pools is uniform
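For scale, the 400 Gb/s WAN read rate reported above can be converted into byte-based units. The sketch below is only a unit conversion under stated assumptions (decimal units, and a rate held constant for a full day, which the stress test did not necessarily do); the function names are illustrative:

```python
SECONDS_PER_DAY = 86_400


def gigabytes_per_second(gbit_per_s: float) -> float:
    """Convert a rate in Gb/s (gigabits) to GB/s (gigabytes)."""
    return gbit_per_s / 8


def petabytes_per_day(gbit_per_s: float) -> float:
    """Volume moved in one day at a constant rate, in PB (1 PB = 1e6 GB)."""
    return gigabytes_per_second(gbit_per_s) * SECONDS_PER_DAY / 1e6


rate = 400  # Gb/s, the lower bound quoted in the notes
print(f"{gigabytes_per_second(rate):.0f} GB/s")
print(f"{petabytes_per_day(rate):.2f} PB/day if sustained")
```

Since the notes say the throughput surpassed 400 Gb/s, these numbers are lower bounds for the peak rate.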


-

13:20

Tier1 Services 5m Speaker: Ivan Glushkov (University of Texas at Arlington (US))

- Networking:

- Accounting:

- WLCG 2023 official: over pledge: CPU/Disk/Tape: 110%/104%/102%

- December 2023: validated at 12632 kHS23*days

- BNL provides 25% more CPU resources than accounted for due to HIMEM.

- BNL provided 13% (the most) of all ATLAS HIMEM jobslots for December 2023 (Monitoring link)

- Problem "solved" by limiting the number of HIMEM slots in CRIC to 5k.

- To be discussed on S&C Week

- Farm:

- Corepower:

- Started revising the BNL CPU corepower declared in CRIC. Current value: 12.69

- ALMA9 transition:

- Full chain set up. HC jobs are segfaulting. Debugging is ongoing.

- IPv6 transition:

- One node fully operational. Observing performance. Potentially scaling up by the end of February.

- Upgrade to HTCondor 10.x - nearly done.

- VP queue - running as expected. Limited by the number of slots opportunistically available
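To put the accounting numbers above in perspective, the December figure can be turned into an average delivered power and an approximate core count. This is a rough sketch, assuming that "kHS23*days" means kHS23 integrated over the 31 days of December and that the CRIC corepower of 12.69 is expressed in HS23 per core; since that corepower value is itself under revision, the core count is indicative only. Function names are illustrative:

```python
DAYS_IN_DECEMBER = 31


def average_khs23(khs23_days: float, days: int) -> float:
    """Average delivered power over the period, in kHS23."""
    return khs23_days / days


def equivalent_cores(khs23: float, corepower_hs23: float) -> float:
    """Number of cores that would deliver `khs23` at the given per-core power."""
    return khs23 * 1000 / corepower_hs23


avg = average_khs23(12632, DAYS_IN_DECEMBER)  # validated December 2023 value
cores = equivalent_cores(avg, 12.69)          # corepower currently declared in CRIC

print(f"average delivered power: {avg:.1f} kHS23")
print(f"equivalent cores at corepower 12.69: {cores:.0f}")
```

Under these assumptions the result is a little over 400 kHS23 on average, or roughly 32,000 cores.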


-

13:25

→

13:30

WBS 2.3.2 Tier2 Centers

Updates on US Tier-2 centers

Convener: Fred Luehring (Indiana University (US))

- Good production with all sites at or near capacity after MWT2 recovered from the dCache upgrade (and before too!)

- NET2 did extensive work on their network which led to some reduction in production.

- Quarterly reporting submitted before official deadline but well after Shawn's requested date.

- Still working on getting HEPSpec06 to run on the benchmarking machines that Dell provided.

- Needed to compare HEPScore to HEPSpec to understand how the $/HS stacks up.

- NET2 has negotiated a good price ~$5.30/HEPSpec for servers with dual 128C/256T AMD 9754 Bergamo CPUs (512T total).

- Dell tells me that NVMe is cheaper than SAS and Rafael's results show using NVMe improves the HEPScore significantly.

- There will be no Tier 2 technical meeting next week because of S&C Week and the reminder email will be marked as canceled.

- I have corrected the length and dates of the technical meetings in Indico.

- I will miss the Jamboree because of a conflicting TRT meeting in Bonn. I will join the S&C week on Wednesday morning.

- I need a speaker for the topical talk in two weeks...
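The $/HS comparison mentioned above comes down to dividing a server price by its benchmark score. A minimal sketch follows; the thread count uses the configuration quoted in the notes (dual 128-core CPUs with two threads per core), while the price and score passed to `dollars_per_hs` are hypothetical placeholders chosen only to illustrate the ratio, not NET2's actual quote or a measured benchmark result:

```python
def threads_per_server(sockets: int, cores_per_socket: int, threads_per_core: int = 2) -> int:
    """Hardware threads in one server."""
    return sockets * cores_per_socket * threads_per_core


def dollars_per_hs(server_price_usd: float, server_score_hs: float) -> float:
    """Price per benchmark unit for one server."""
    return server_price_usd / server_score_hs


# Dual-socket, 128 cores per socket, 2 threads per core (from the notes).
print(threads_per_server(2, 128))

# HYPOTHETICAL inputs: a 53,000 USD server scoring 10,000 HS.
print(dollars_per_hs(53_000, 10_000))
```

The same helper can be applied to a HEPScore result instead, as long as prices being compared are normalized to the same benchmark.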


-

13:30

→

13:35

WBS 2.3.3 HPC Operations 5m Speakers: Doug Benjamin (Brookhaven National Laboratory (US)), Rui Wang (Argonne National Laboratory (US))

- Perlmutter: Jobs are running and failing after switching from Shifter, now that the user namespace issue is resolved.

- Switch to usatlas Globus collaboration endpoint

- The connection does not appear to be very stable: connection timeouts in many tasks

- Fixed the Python version issue with the pilot

- Preparing to switch to Podman-HPC

- Working with Asoka deSilva to sort things out.


- TACC:

- Switched to and tested the TACC Globus v5 endpoint

- Setup needed in both CILogon and ACCESS accounts

- Fixed the errors in the Harvester environment with Python 3.9

- Help from Lincoln on the grid certificates


-

13:35

→

13:50

WBS 2.3.4 Analysis Facilities Conveners: Ofer Rind (Brookhaven National Laboratory), Wei Yang (SLAC National Accelerator Laboratory (US))

-

13:35

Analysis Facilities - BNL 5m Speaker: Ofer Rind (Brookhaven National Laboratory)

-

13:40

Analysis Facilities - SLAC 5m Speaker: Wei Yang (SLAC National Accelerator Laboratory (US))

-

13:45

Analysis Facilities - Chicago 5m Speakers: Fengping Hu (University of Chicago (US)), Ilija Vukotic (University of Chicago (US))

- Added a reserved queue to the HTCondor batch system. It currently consists of 1 node and allows an analysis team to run whole-node jobs for the MadGraph application.

- Working on taming the Ceph file system. It has some stability issues that warrant more investigation. Very high memory usage on the MDS is observed during incidents.

- Volume mounts are monitored, with alerting, on both the HTCondor workers and the interactive login nodes.

- Will work on the rook-ceph upgrade. Had some trouble last time due to some K8s deprecations. It appears that at least the newer Ceph version (v17) has an alertable metric (slow MDS ops) for the behavior we usually observe during incidents.

- Will also update the OS.

- AnalysisBase image has been updated to the latest: 24.2.37. All the libraries have been updated. Now using a dev version of uproot with a lot of fixes for reading PHYSLITE data files.

-


13:50

→

14:05

WBS 2.3.5 Continuous Operations Convener: Ofer Rind (Brookhaven National Laboratory)

- Lots of updates on tokens in Rucio/FTS and monitoring for DC24 at today's WLCG DOMA General (link)

- LHCONE Jumbo Frames survey: https://limesurvey.web.cern.ch/977971

-

13:50

ADC Operations, US Cloud Operations: Site Issues, Tickets & ADC Ops News 5m Speaker: Ivan Glushkov (University of Texas at Arlington (US))

Open and dormant GGUS tickets:

- Storage Tokens at SWT2_CPB (link)

- CMS SAM/ETF jobs at BNL (link)

- Site Network Monitoring at SWT2_CPB (link)

- Site Network Monitoring at OU (link)

- Alma 9 image testing ongoing at BNL T1 farm and T3 interactive

- BNL xcache crashed yesterday (possible hw issue), otherwise smooth running

-

13:55

Service Development & Deployment 5m Speakers: Ilija Vukotic (University of Chicago (US)), Robert William Gardner Jr (University of Chicago (US))

- Elasticsearch

- Some cluster stability issues. We need an upgrade anyway, so we will rebuild the cluster next week.

- XCache

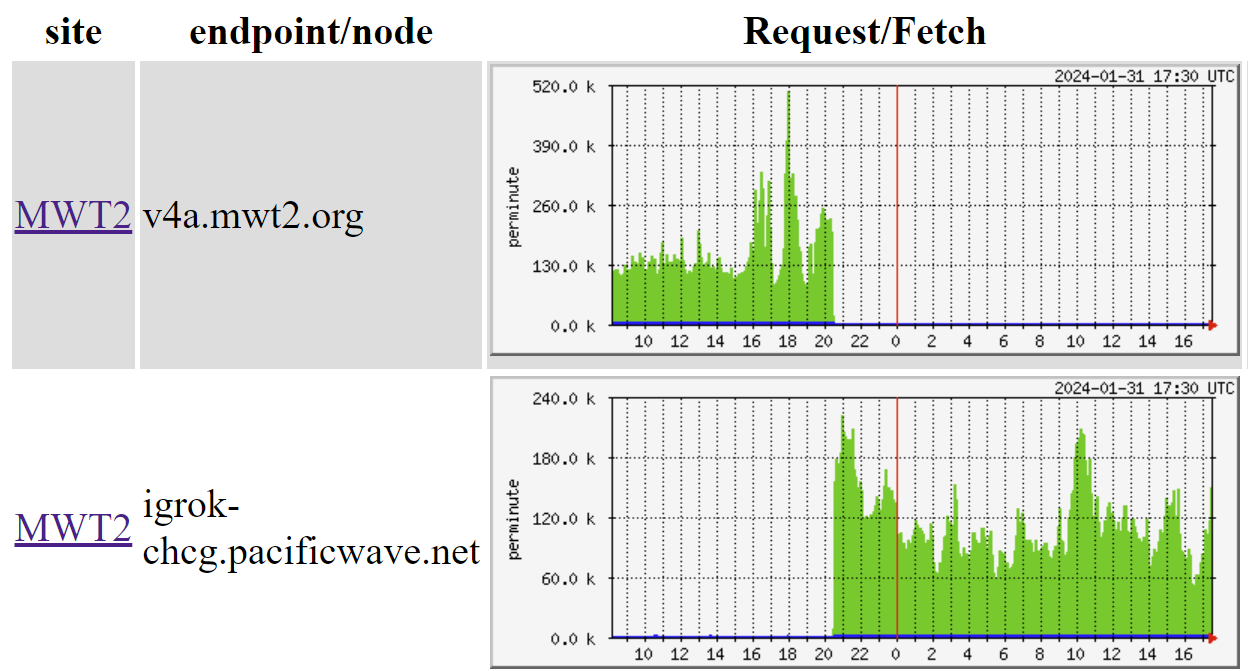

- Added new node to MWT2. We will rebuild the one causing issues.

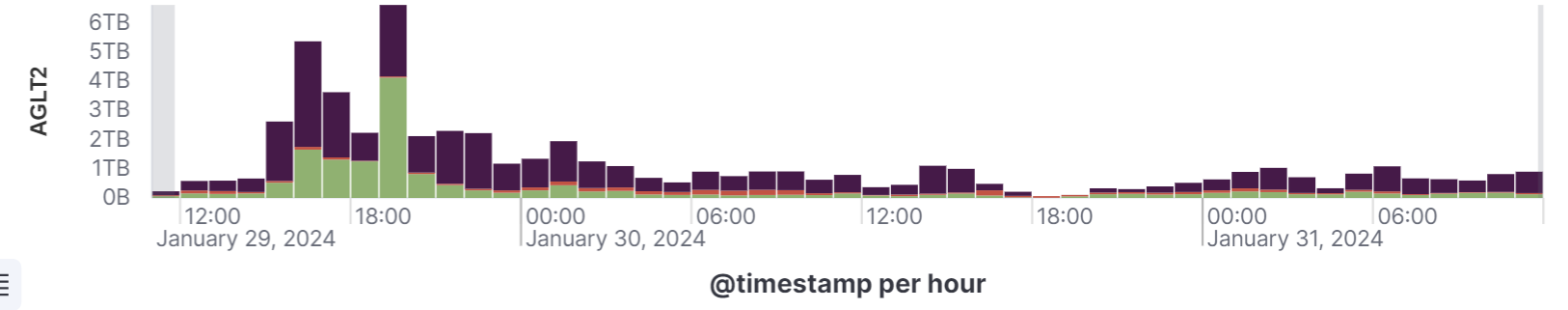

- Need a stronger xcache node at AGLT2. This one can't keep up:

- Added a SLATE-installed xcache node to Wuppertal. Working on setting up their VP queue. This is a test for the larger deployment now that Germany is getting rid of ATLAS sites at universities and moving resources to HPCs.

- We need better ticketing when an xcache has issues. If the Squid people can monitor and ticket sites (and there are many more of them), we should be able to do that for XCaches too. We have enough tests; we just need another person to look at them every day and create a ticket if needed.

- Varnishes

- work fine but we get tickets when we move traffic from one to another:


-

14:00

Facility R&D 5m Speakers: Lincoln Bryant (University of Chicago (US)), Robert William Gardner Jr (University of Chicago (US))

Presented on Stretched K8S options at last week's facility R&D call

Asking sites for their input and consideration

-

14:05

→

14:10

AOB 5m

-
