Color code: (critical, news during the meeting: green, news from this week: blue, news from last week: purple, no news: black)

High priority Framework issues:

Global calibration topics:

Sync reconstruction

Async reconstruction

EPN major topics:

Other EPN topics:

Full system test issues:

Topology generation:

AliECS related topics:

GPU ROCm / compiler topics:

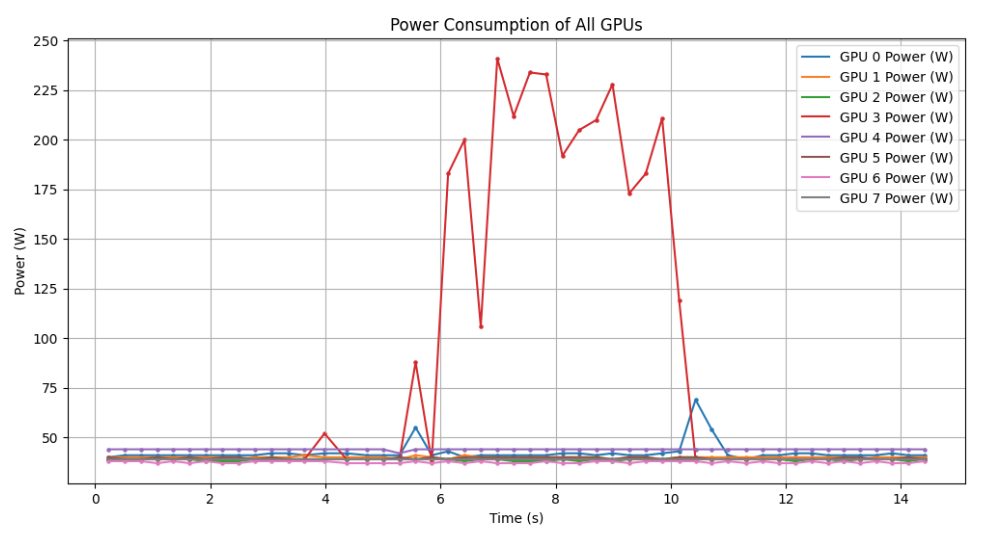

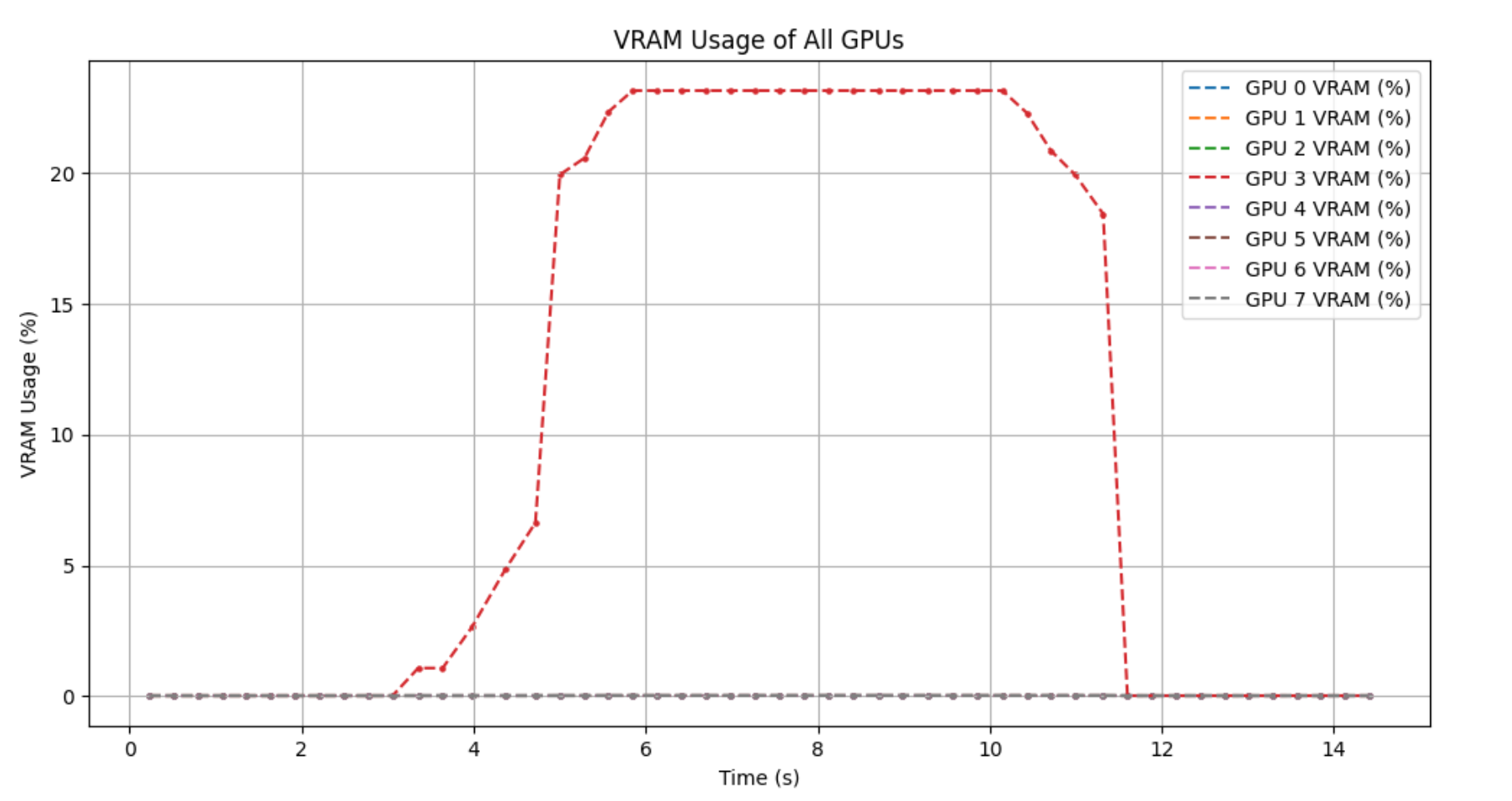

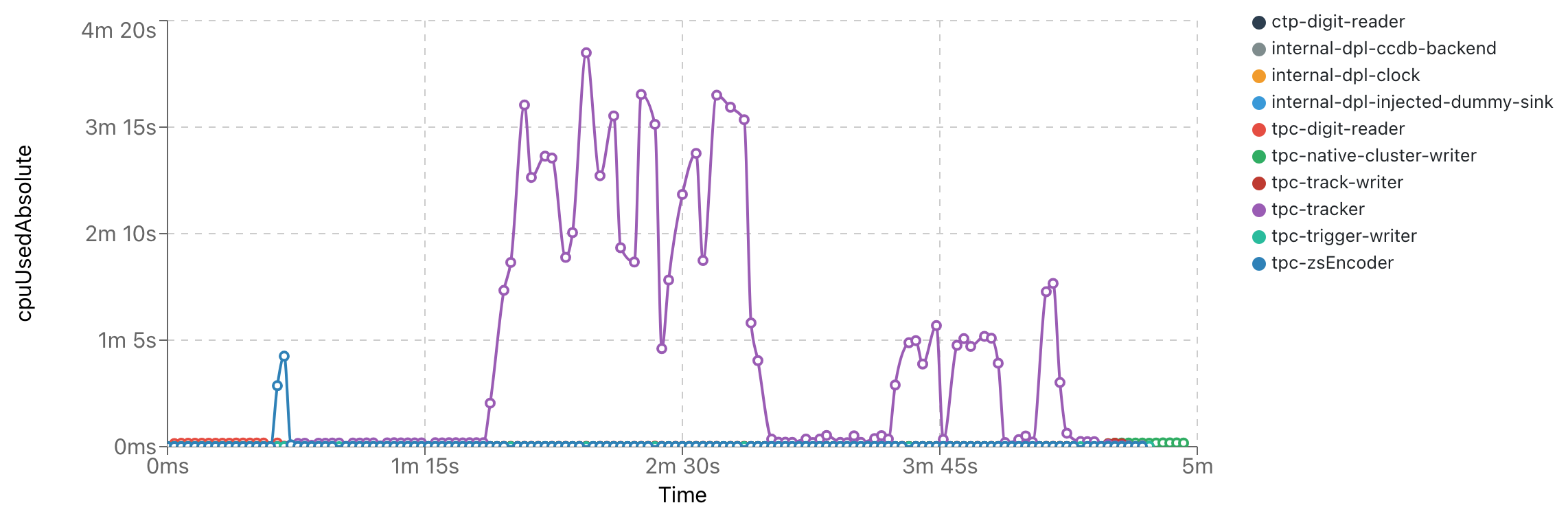

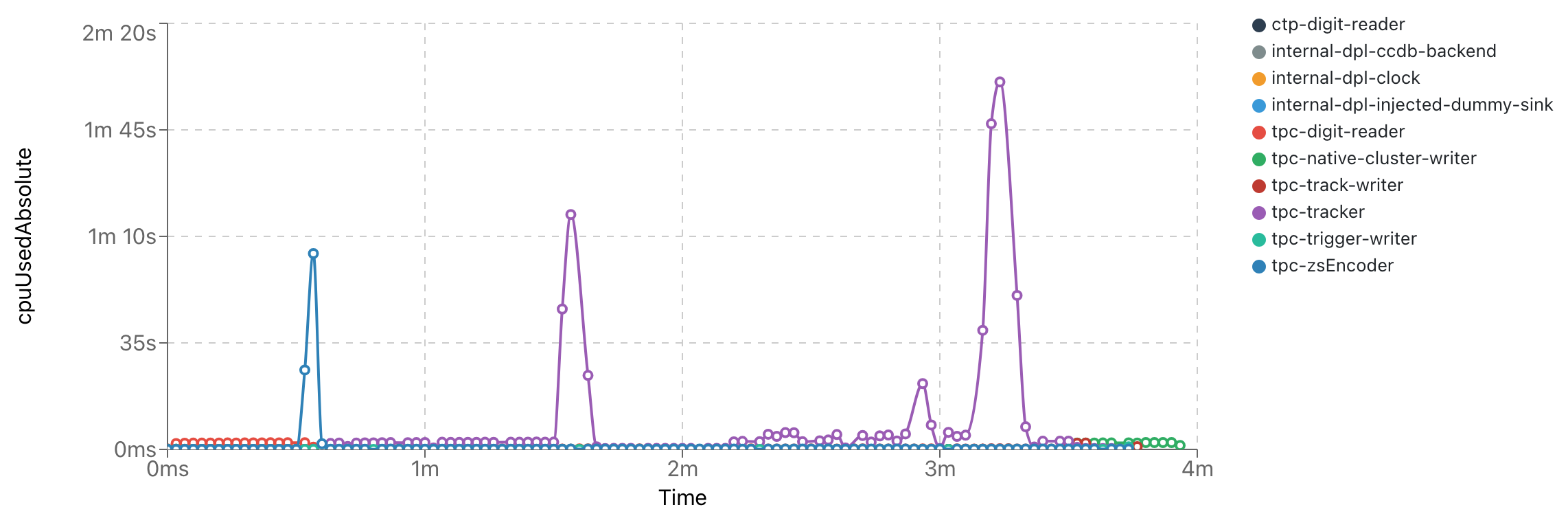

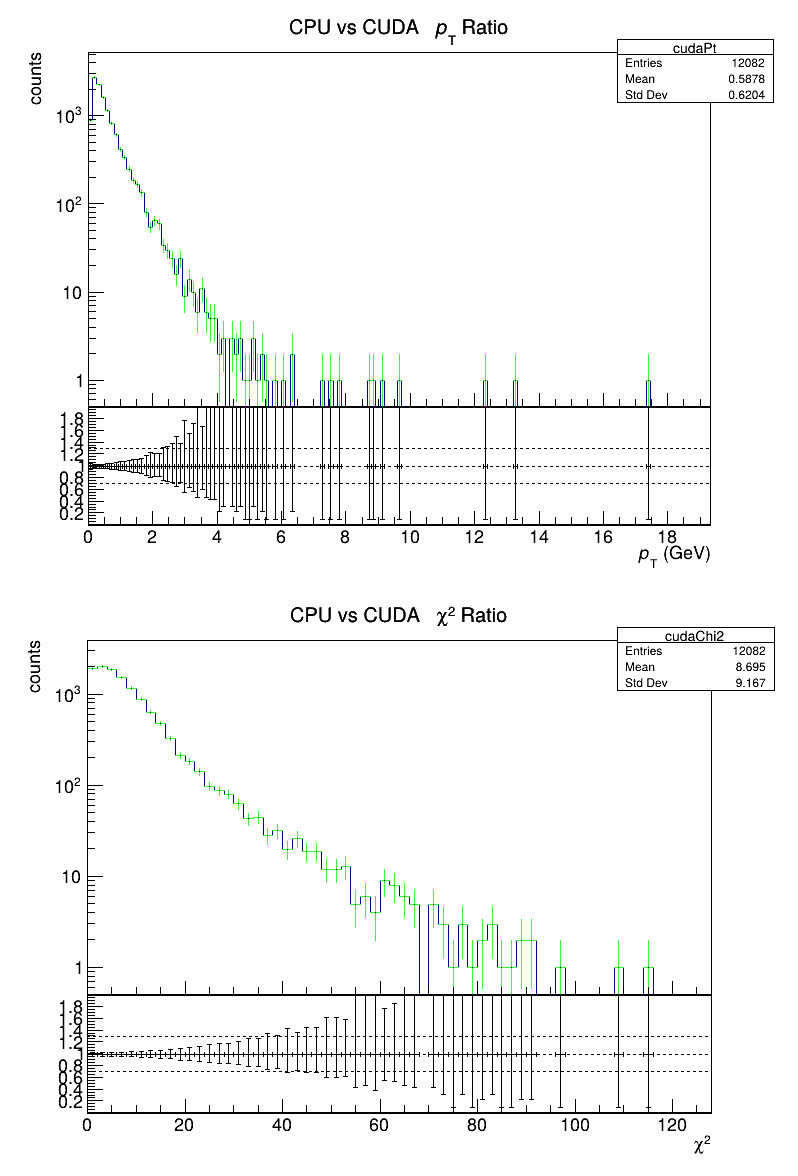

TPC GPU Processing

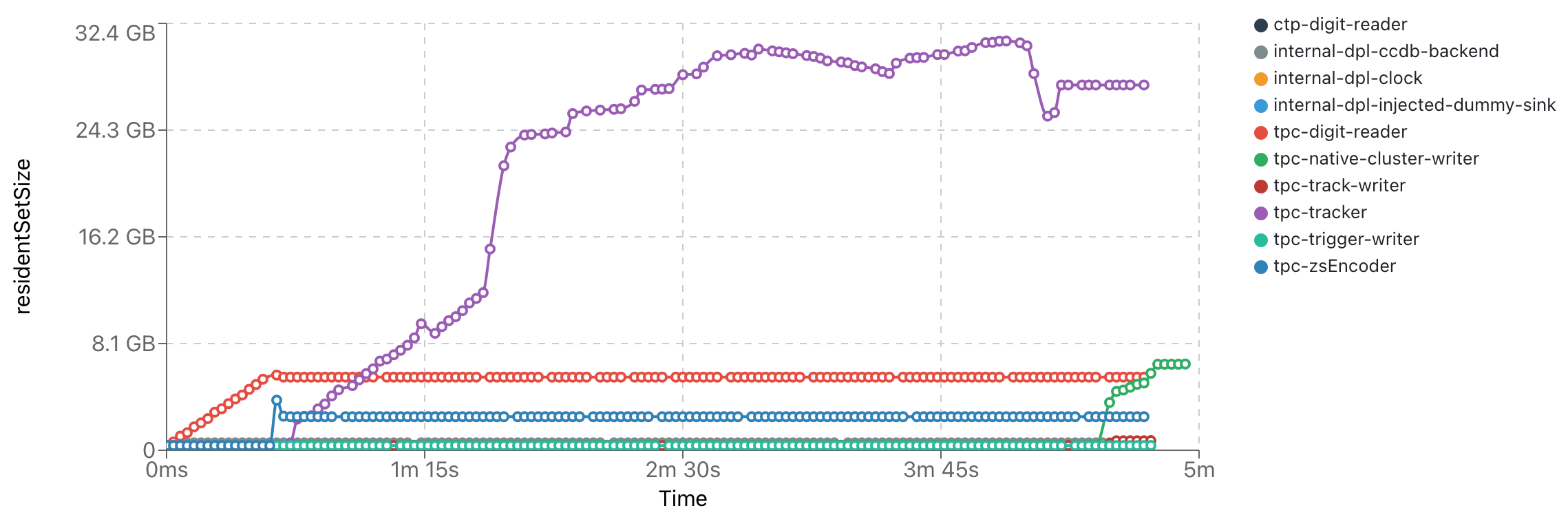

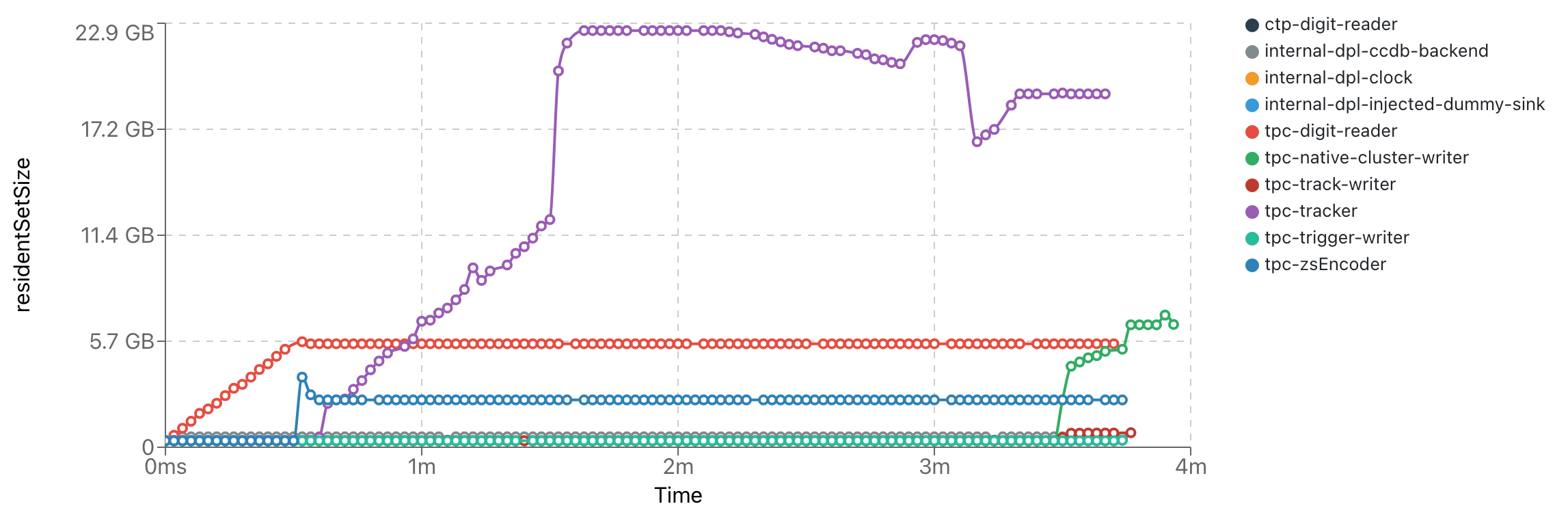

TPC processing performance regression:

General GPU Processing

Overview of low-priority framework issues

Updates

Dropping incomplete errors at run stop

Expected Lifetime::Timeframe data MCH/HBPACKETS/0 was not created for timeslice 47383 and might result in dropped timeframes

Expected Lifetime::Timeframe data MCH/ERRORS/0 was not created for timeslice 20 and might result in dropped timeframes

Dropping incomplete <matcher query: (and origin:MCH (and description:ORBITS (just startTime:$0 )))> Lifetime::timeframe data in slot 14 with timestamp 20 < 21 as it can never be completed.

Dropping incomplete <matcher query: (and origin:MCH (and description:DIGITS (just startTime:$0 )))> Lifetime::timeframe data in slot 14 with timestamp 20 < 21 as it can never be completed.

Missing <matcher query: (and origin:MCH (and description:HBPACKETS (just startTime:$0 )))> (lifetime:timeframe) while dropping incomplete data in slot 14 with timestamp 20 < 21.

Updates on NN performance

Updates on NN speed & implementation
