Alice Weekly Meeting: Software for Hardware Accelerators / PDP-SRC

1

Discussion
Speakers: David Rohr (CERN), Giulio Eulisse (CERN)

Color code: (critical, news during the meeting: green, news from this week: blue, news from last week: purple, no news: black)

High priority Framework issues:

- Fix dropping lifetime::timeframe for good: Still pending: problem with CCDB objects getting lost by DPL leading to "Dropping lifetime::timeframe"; saw at least one occurrence during SW validation.

- Found yet another "last problem with oldestPossibleTimeframe": errors at P2 during STOP about oldestPossibleOutput decreasing, which is not allowed.

- Problem is due to TimingInfo not being filled properly during EoS, so that old or uninitialized counters were used. Fixed, which cures my reproducer in the FST. To be deployed at P2; then we need to check again for bogus messages.

- Status?

- Start / Stop / Start: 2 problems on O2 side left:

- All processes are crashing randomly (usually ~2 out of >10k) when restarting. Stack trace hints to FMQ. https://its.cern.ch/jira/browse/O2-4639

- TPC-ITS matching QC crashing when accessing CCDB objects. Not clear if this is the same problem as above, or a problem in the task itself.

- Stabilize calibration / fix EoS: new scheme: https://its.cern.ch/jira/browse/O2-4308. Status?

- Fix problem with ccdb-populator: no idea yet. Since Ole left, someone else will have to take care of it.

- Expendable tasks: problem with cyclic channel of dummy sink. Status?
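
The oldestPossibleOutput error above is a monotonicity check: the value may never go backwards, and the EoS bug fed it stale or uninitialized counters. A minimal sketch of such a guard, assuming an explicit "unset" sentinel for timing info that was never filled; the class name, sentinel and result codes are hypothetical and not the DPL implementation.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>

// Hypothetical guard for a value that must never decrease, such as an
// "oldest possible timeframe" counter.
class MonotonicCounter {
 public:
  // Sentinel marking timing info that was never filled (e.g. at EoS).
  static constexpr uint64_t kUnset = std::numeric_limits<uint64_t>::max();

  enum class Result { Accepted, IgnoredUnset, RejectedDecrease };

  // Accepts `candidate` only if it is initialized and does not go backwards.
  Result update(uint64_t candidate) {
    if (candidate == kUnset) {
      return Result::IgnoredUnset;  // never propagate uninitialized values
    }
    if (mHasValue && candidate < mValue) {
      return Result::RejectedDecrease;  // the condition reported at P2
    }
    mValue = candidate;
    mHasValue = true;
    return Result::Accepted;
  }

  uint64_t value() const { return mValue; }

 private:
  uint64_t mValue = 0;
  bool mHasValue = false;
};
```

Rejecting (rather than silently accepting) a decrease keeps the error visible, while the sentinel check prevents an unfilled counter from triggering it in the first place.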

Global calibration topics:

- TPC IDC and SAC workflow issues to be reevaluated with new O2 at restart of data taking. Cannot reproduce the problems any more.

Sync reconstruction

- Had a problem with COSMIC runs crashing due to bug in ITS code.

- Recorded cosmic raw data. Status?

- Again crashes in its-tracker, fixed by Ruben in O2/dev, deployed at P2.

- ITS reco had a bug with excessive logging in first SW version deployed at P2, was fixed by deploying nightly tag with fix.

- gpu-reconstruction crashing due to receiving bogus CCDB objects.

- Found that the corruption occurs when the CCDB object download times out. Today's new O2 tag has proper error detection and will give a FATAL error message instead of shipping corrupted objects.

- After investigating with Costin and Ruben: the problem was incorrect propagation of the HTTP header Content-Size information, and subsequently high CPU load due to extension of the SHM PMR vector. Fixed.

- TOF fix solves the memory corruption leading to boost interprocess lock errors.

- Some crashes of tpc-tracking yesterday in SYNTHETIC runs, to be investigated.

- RC did a test run with CPU only to see if the problem is only on GPUs. Had some side effects on EPNs at STOP. To be checked.

- Will discuss with RC tomorrow what timeouts to use for STOP of run, and new Calib Scheme.

- Added an extra env variable to set the exit transition timeout from the ECS GUI. RC will experiment to see what timeout is reasonable, i.e. one that stays in the shadow of other systems. In general, they are OK with flushing the buffers and losing the data in them if a new run can be started earlier.

- Discussed with Alice from the GRID group that we'll add monitoring of the number of CTFs stored, so we can compare what goes into FLP and what leaves EPN, and see how much we lose.
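
The corrupted CCDB objects came from not checking whether a (possibly timed-out) download delivered the complete payload. A minimal sketch of such a completeness check, assuming the expected size is available from the HTTP response header; the function name, struct and error strings are hypothetical and not the O2 CCDB API.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>
#include <string>
#include <vector>

// Hypothetical outcome of validating a downloaded object.
struct DownloadCheck {
  bool ok;
  std::string error;  // empty if ok
};

// Compare the received payload against the size announced in the response
// header. A timeout, a missing header or a short read is reported as an error
// instead of passing a truncated object downstream.
inline DownloadCheck validatePayload(const std::vector<char>& payload,
                                     std::optional<std::size_t> announcedSize,
                                     bool timedOut) {
  if (timedOut) {
    return {false, "download timed out"};
  }
  if (!announcedSize) {
    return {false, "missing size header"};
  }
  if (payload.size() != *announcedSize) {
    return {false, "size mismatch: got " + std::to_string(payload.size()) +
                       " of " + std::to_string(*announcedSize) + " bytes"};
  }
  return {true, ""};
}
```

A caller would then abort with a FATAL message whenever `ok` is false, rather than handing the buffer to the consumer.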

Async reconstruction

- Remaining oscillation problem: GPUs sometimes stall for a long time, up to 2 minutes. Checking 2 things:

- does the situation get better without GPU monitoring? --> Inconclusive

- Increasing the priority of the GPU processes can be used as a mitigation, but does not fully fix the issue.

- MI100 GPU stuck problem will only be addressed after AMD has fixed the operation with the latest official ROCm stack.

- Limiting factor for the pp workflow is now the TPC time series, which is too slow and creates backpressure (costs ~20% performance on EPNs). Enabled multi-threading as recommended by Matthias; need to check if it works.
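
Process priority on Linux is expressed as a nice value; lowering it (higher priority, as in the mitigation above) normally requires privileges such as CAP_SYS_NICE, which is also what the EPN-349 request below is about. A minimal sketch using the POSIX `getpriority` / `setpriority` calls; the helper names are hypothetical, and how the GPU processes are actually reniced on the EPNs is not described here.

```cpp
#include <cassert>
#include <cerrno>
#include <sys/resource.h>
#include <sys/types.h>

// Returns the nice value of `pid` (0 = calling process). getpriority() may
// legitimately return -1, so errno is cleared and checked to detect errors.
inline int currentNice(pid_t pid, bool& ok) {
  errno = 0;
  const int value = getpriority(PRIO_PROCESS, static_cast<id_t>(pid));
  ok = (errno == 0);
  return value;
}

// Tries to set the nice value of `pid`. Raising it (lower priority) is always
// allowed; negative changes (higher priority) fail with EPERM/EACCES for
// unprivileged users.
inline bool setNice(pid_t pid, int nice) {
  return setpriority(PRIO_PROCESS, static_cast<id_t>(pid), nice) == 0;
}
```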

EPN major topics:

- Fast movement of nodes between async / online without EPN expert intervention.

- 2 goals I would like to set for the final solution:

- It should not be necessary to stop the SLURM schedulers when moving nodes; there should be no limitation for ongoing runs at P2 and ongoing async jobs.

- We must not lose which nodes are marked as bad while moving.

- Interface to change SHM memory sizes when no run is ongoing. Otherwise we cannot tune the workflow for both Pb-Pb and pp: https://alice.its.cern.ch/jira/browse/EPN-250

- Lubos to provide an interface to query the current EPN SHM settings. ETA July 2023. Status?

- Improve DataDistribution file replay performance. Currently it cannot go faster than 0.8 Hz, so we cannot test the MI100 EPN in Pb-Pb at nominal rate, and cannot test the pp workflow for 100 EPNs in the FST since DD injects TFs too slowly. https://alice.its.cern.ch/jira/browse/EPN-244 NO ETA

- DataDistribution distributes data round-robin in the absence of backpressure, but it would be better to do it based on buffer utilization, and give more data to MI100 nodes. Currently, we are driving the MI50 nodes at 100% capacity with backpressure, and only backpressured TFs go to MI100 nodes. This increases the memory pressure on the MI50 nodes, which is a critical point anyway. https://alice.its.cern.ch/jira/browse/EPN-397

- TfBuilders should stop in ERROR when they lose connection.

- Allow epn user and grid user to set nice level of processes: https://its.cern.ch/jira/browse/EPN-349

- Improve core dumps and time stamps: https://its.cern.ch/jira/browse/EPN-487

- Tentative time for ALMA9 deployment: December 2024.
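
The DataDistribution item (EPN-397) contrasts round-robin assignment with assignment based on buffer utilization. A minimal sketch of the difference, assuming each node reports its SHM buffer fill fraction and a relative processing capacity; the types and the scoring rule (free buffer times capacity) are illustrative assumptions, not the DataDistribution implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical per-node state as seen by the TF scheduler.
struct NodeState {
  double bufferUsed;  // fraction of SHM buffer in use, 0.0 .. 1.0
  double capacity;    // relative throughput, e.g. higher for MI100 than MI50
};

// Round-robin: ignores node state and cycles through the nodes in order.
class RoundRobin {
 public:
  explicit RoundRobin(std::size_t nNodes) : mN(nNodes) {}
  std::size_t next() { return mNext++ % mN; }

 private:
  std::size_t mN;
  std::size_t mNext = 0;
};

// Utilization-based: pick the node with the largest free buffer weighted by
// capacity, so faster nodes with spare memory receive more TFs.
inline std::size_t pickByUtilization(const std::vector<NodeState>& nodes) {
  std::size_t best = 0;
  double bestScore = -1.0;
  for (std::size_t i = 0; i < nodes.size(); ++i) {
    const double score = (1.0 - nodes[i].bufferUsed) * nodes[i].capacity;
    if (score > bestScore) {
      bestScore = score;
      best = i;
    }
  }
  return best;
}
```

With round-robin, a nearly full MI50 node keeps receiving its share until backpressure kicks in; the utilization-based rule shifts TFs towards the node that has both free buffer and more throughput.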

Other EPN topics:

- Check NUMA balancing after SHM allocation, sometimes nodes are unbalanced and slow: https://alice.its.cern.ch/jira/browse/EPN-245

- Fix problem with SetProperties string > 1024/1536 bytes: https://alice.its.cern.ch/jira/browse/EPN-134 and https://github.com/FairRootGroup/DDS/issues/440

- After software installation, check whether it succeeded on all online nodes (https://alice.its.cern.ch/jira/browse/EPN-155) and consolidate software deployment scripts in general.

- Improve InfoLogger messages when environment creation fails due to too few EPNs / calib nodes available, ideally report a proper error directly in the ECS GUI: https://alice.its.cern.ch/jira/browse/EPN-65

- Create user for epn2eos experts for debugging: https://alice.its.cern.ch/jira/browse/EPN-383

- EPNs sometimes get in a bad state, with CPU stuck, probably due to AMD driver. To be investigated and reported to AMD.

Full system test issues:

Topology generation:

- Should test to deploy topology with DPL driver, to have the remote GUI available.

- DPL driver needs to implement the FMQ state machine. Postponed until YETS issues are solved.

- 2 occurrences where the git repository in the topology cache was corrupted. Not really clear how this can happen, and not reproducible. Was solved by wiping the cache. Will add a check to the topology scripts that detects a corrupt repository and, in that case, deletes it and checks it out anew.
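
The planned check can be sketched as: run an integrity check on the cached repository and, if it fails, wipe the cache and clone again. A minimal C++ sketch, assuming `git fsck` (which exits non-zero when it finds corrupt or missing objects) as the integrity check; the function name, the command runner and the repository URL are hypothetical placeholders, and the real topology scripts are not shown in these notes.

```cpp
#include <cassert>
#include <filesystem>
#include <functional>
#include <string>

namespace fs = std::filesystem;

// Runs a shell command and returns its exit status (0 = success). Injected so
// the logic can be tested without git; in production it could wrap std::system.
using CommandRunner = std::function<int(const std::string&)>;

// Hypothetical helper: verify the cached repository, re-clone it if the check
// fails. Paths are assumed not to contain single quotes.
// Returns true if the cache is usable afterwards.
inline bool ensureHealthyCache(const fs::path& cacheDir, const std::string& repoUrl,
                               const CommandRunner& run) {
  if (fs::exists(cacheDir)) {
    if (run("git -C '" + cacheDir.string() + "' fsck --no-progress") == 0) {
      return true;  // repository passed the integrity check
    }
    fs::remove_all(cacheDir);  // corrupt: wipe the cache, as done by hand so far
  }
  return run("git clone '" + repoUrl + "' '" + cacheDir.string() + "'") == 0;
}
```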

AliECS related topics:

- Extra env var field still not multi-line by default.

GPU ROCm / compiler topics:

- Compilation failure due to missing symbols when compiling with -O0. Similar problem found by Matteo, being debugged. Sent a reproducer to AMD.

- Internal compiler error with LOG(...) macro: we have a workaround, AMD has a reproducer, waiting for a fix.

- New miscompilation for >ROCm 6.0

- Waiting for AMD to fix the reproducer we provided.

- LLVM bumped to 18 by Sergio:

- Fixed relocation problems. The vanilla 18.1 release has a regression for OpenCL compilation; backported the fix from LLVM 19.

- GPU RTC deployed on EPNs and working properly.

- O2 Code Checker failed to find header files due to a relocation problem. Investigated yesterday and finally managed to solve it with Giulio.

- New clang has additional checks, e.g. for modernization of shared pointers. Thus CI will now report some new errors, to be fixed over time.

- Long-pending issue of CodeChecker not finding omp.h. After the problem above caused by not finding <iostream> etc., started to look into this, since we learned that missing headers can have side effects.

- Fixed problem with omp.h header in O2CodeChecker.
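
As an illustration of the shared-pointer modernization mentioned above (assuming it corresponds to clang-tidy's `modernize-make-shared` check), the flagged pattern and its suggested replacement look like this; `Calib` and the two factory functions are hypothetical.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Hypothetical payload type.
struct Calib {
  Calib(int r, std::string t) : run(r), tag(std::move(t)) {}
  int run;
  std::string tag;
};

// Pattern flagged by the check: explicit `new` wrapped in a shared_ptr, which
// typically costs two allocations (object and control block).
inline std::shared_ptr<Calib> makeOld(int run) {
  return std::shared_ptr<Calib>(new Calib(run, "old"));
}

// Suggested replacement: a single allocation and no naked `new`.
inline std::shared_ptr<Calib> makeNew(int run) {
  return std::make_shared<Calib>(run, "new");
}
```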

TPC GPU Processing

- Bug in TPC QC with MC embedding, TPC QC does not respect sourceID of MC labels, so confuses tracks of signal and of background events.

- Started to look into this, should have a fix this week. Fixed.

- Christian reported a bug when the O2 noise label is set. TPC QC was derived from AliRoot and didn't know about a noise label besides the fake label. Fixed.

- Sandro starting to look into the GPU event display, asking if one can color tracks based on collision. Currently not possible for Run 3, since the MC label is not read correctly. Fix should be easy.

- Fixed.

- Tests with TPC MC processing revealed problem with incorrectly aligned labels, leading to undefined behavior.

- Fixed with an alignas statement enforcing alignment, but unfortunately this makes stored MC labels unreadable, since the on-disk format would change.

- Fixed this by adding the packed attribute to the MCCompLabel class.

- Now running into the problem that this breaks ROOT dictionary generation, and that MCTruthContainer is used with template TruthElements other than MCCompLabel, which must be fixed as well.

- New problem with bogus values in TPC fast transformation map still pending. Sergey is investigating, but waiting for input from Alex. Ruben reported that he still sees such bogus values.

- Status of cluster error parameterizations

- No progress yet on newly requested debug streamers.

- Waiting for TPC to check PR with full cluster errors during seeding.

- Want to switch to int8 ... uint64 types instead of char, short, ...
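
The MC label alignment fix above hinges on the difference between `alignas`, which can change a type's size and therefore its serialized layout, and a packed attribute, which keeps the size but allows placement at any address. A minimal sketch with hypothetical 64-bit label types, assuming GCC/Clang (`__attribute__((packed))` is a compiler extension) and a typical 64-bit platform; it is not the actual MCCompLabel definition.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Plain 64-bit label: 8 bytes, 8-byte alignment on typical 64-bit targets.
struct PlainLabel {
  uint64_t value;
};

// Over-aligned variant: alignas(16) pads the type to 16 bytes, so arrays of it
// no longer match the old byte layout (the on-disk format problem).
struct alignas(16) OverAlignedLabel {
  uint64_t value;
};

// Packed variant (GCC/Clang extension): same 8-byte size, alignment 1.
struct __attribute__((packed)) PackedLabel {
  uint64_t value;
};

static_assert(sizeof(PlainLabel) == 8 && alignof(PlainLabel) == 8, "plain layout");
static_assert(sizeof(OverAlignedLabel) == 16, "alignas changes the size");
static_assert(sizeof(PackedLabel) == 8 && alignof(PackedLabel) == 1, "packed keeps the size");

// Reads a label stored at an arbitrary (possibly misaligned) offset in a byte
// buffer. memcpy is well defined here; dereferencing a reinterpret_cast'ed
// PlainLabel* at a misaligned address would be undefined behaviour.
inline uint64_t readLabel(const unsigned char* buffer, std::size_t offset) {
  PackedLabel label;
  std::memcpy(&label, buffer + offset, sizeof(label));
  return label.value;
}
```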

TPC processing performance regression:

- Final solution: merging transformation maps on the fly into a single flat object: Still WIP

General GPU Processing

2

Following up JIRA tickets
Speaker: Ernst Hellbar (CERN)

low-priority framework issues

- https://its.cern.ch/jira/browse/O2-5231 Many processes crashing after throwing instance of 'boost::interprocess::lock_exception'

- Fixed and closed.

- https://alice.its.cern.ch/jira/browse/O2-1900 : Merged workflow fails if outputs defined after being used as input

- Needs to be implemented by Giulio.

- https://alice.its.cern.ch/jira/browse/O2-2213 : Cannot override options for individual processors in a workflow

- Requires development by Giulio first.

- Implement something similar to what is done for AOD metadata.

- Serialized and separated workflow options to be compared with already given options.

- Policies on how to proceed when options are present several times to be discussed.

- https://alice.its.cern.ch/jira/browse/O2-4166 ROOT messages in the output of a workflow should not generally be interpreted as errors

- Locally, FairLogger messages are communicated via WebSocket to the driver, while all other output just goes through stderr.

- At P2, everything goes via InfoLogger and is distributed in two log files: `*_out.log` containing the FairLogger messages and `*_err.log` containing stderr.

- Log messages in ROOT are all sent to `stderr` with `fprintf`.

- More iterations required with Giulio to identify the best way to interpret them properly.

- DebugGUI:

- Unknown logs (such as those from ROOT) are printed in dark red, compared to neon red for FairLogger `ERROR` logs.

- A different color (light gray, dark green) can mitigate the issue for the eyes, but is not a real solution, since real `stderr` output will be printed in the same color.

- A few (`INFO`) logs without timestamp at the beginning of each task are printed in the unknown color (dark red).

- Logs with a literal % sign are now printed in color (fix by Giulio).

- Working on DebugGUI improvements

- https://its.cern.ch/jira/browse/O2-4196 Show in the debugGUI which input is condition / optional / timeframe

- https://its.cern.ch/jira/browse/O2-2209 Show data origin/description in the info box when hovering a processor spec

PDP SRC

- Should clean up known issues in the QC shifter's documentation and filters for InfoLogger:

- Everything related to "dropping incomplete" errors at EOR

- oldestPossibleOutput errors

- SW installation on EPNs

- Limit number of SW versions to 8 (?) for disk space
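
For the O2-4166 discussion on ROOT messages: ROOT's default error handler writes lines of the form `Error in <TFile::TFile>: ...` to stderr, so stderr lines could be classified by that prefix instead of treating all of stderr as errors. A minimal sketch, assuming that prefix format; the enum and function names are hypothetical and not part of DPL or the DebugGUI.

```cpp
#include <array>
#include <cassert>
#include <string_view>
#include <utility>

// Hypothetical severity levels for classifying raw stderr lines.
enum class Severity { Info, Warning, Error, Fatal, Unknown };

// C++17-compatible starts_with.
inline bool startsWith(std::string_view line, std::string_view prefix) {
  return line.size() >= prefix.size() && line.compare(0, prefix.size(), prefix) == 0;
}

// Classify a line by ROOT's "<Level> in <location>: message" prefix. Lines
// without a recognised prefix stay Unknown, since they may be genuine errors.
inline Severity classifyRootLine(std::string_view line) {
  static constexpr std::array<std::pair<std::string_view, Severity>, 5> kPrefixes{{
      {"Info in <", Severity::Info},
      {"Warning in <", Severity::Warning},
      {"Error in <", Severity::Error},
      {"SysError in <", Severity::Error},
      {"Fatal in <", Severity::Fatal},
  }};
  for (const auto& [prefix, severity] : kPrefixes) {
    if (startsWith(line, prefix)) {
      return severity;
    }
  }
  return Severity::Unknown;
}
```

Keeping unrecognised lines as Unknown preserves the current behaviour for real stderr output while letting ROOT `Info` and `Warning` messages be shown with their actual severity.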

3

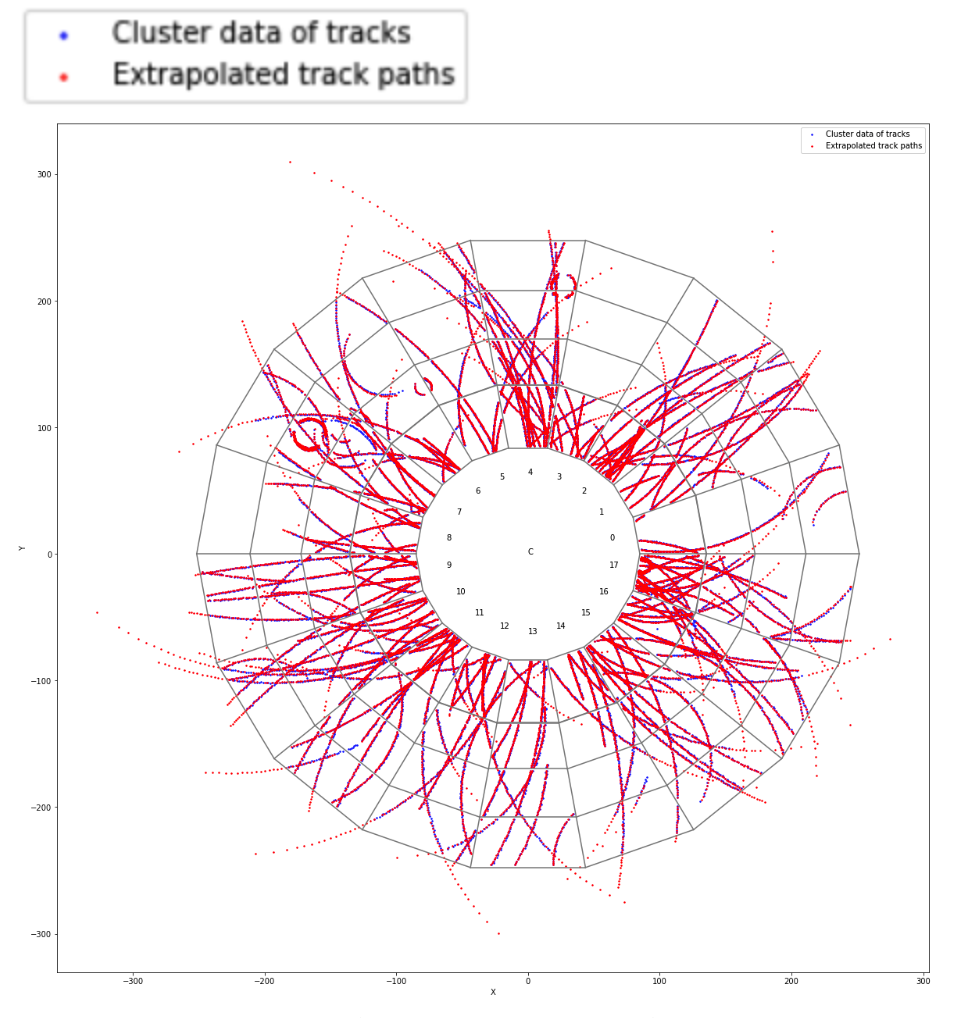

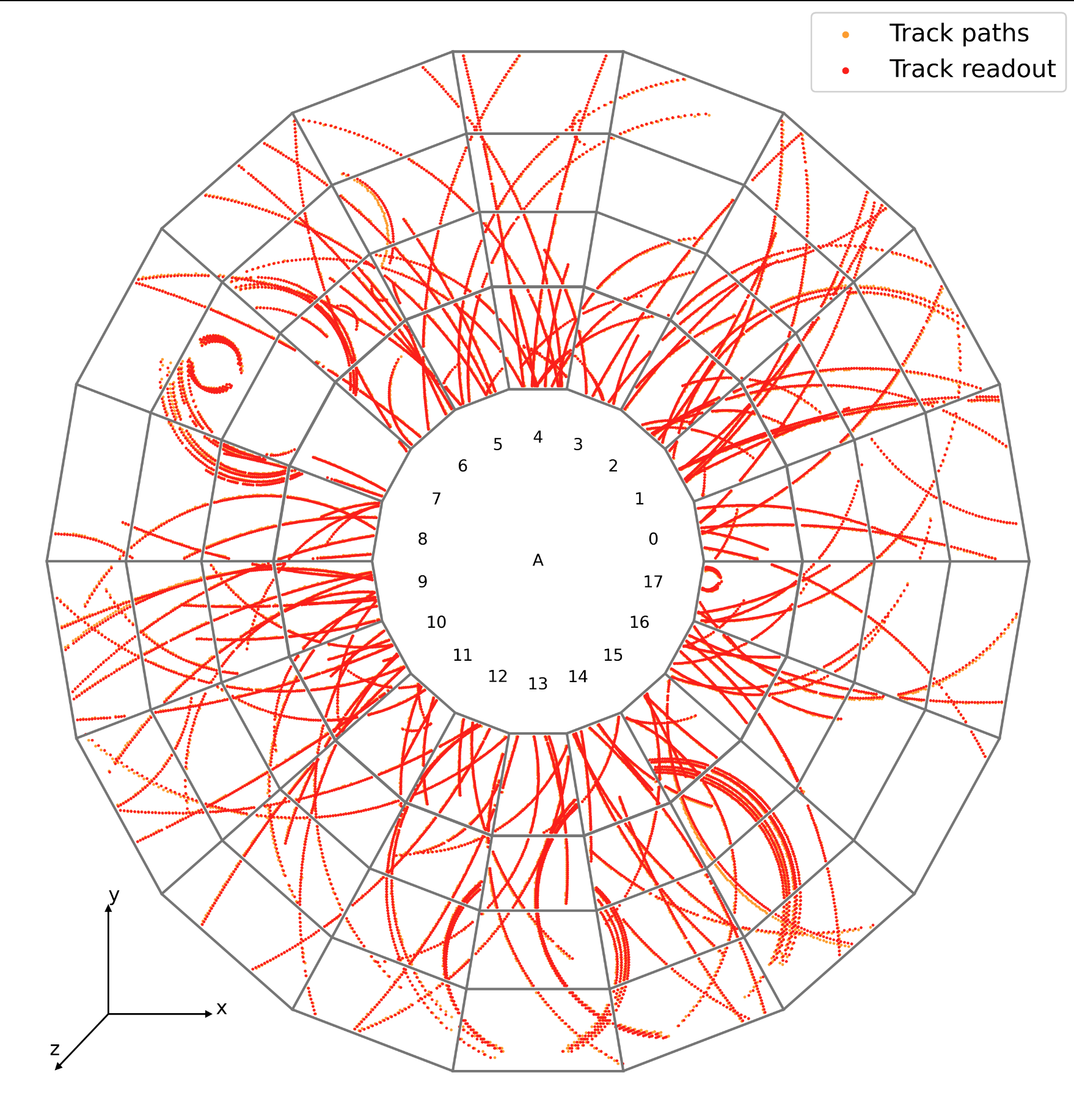

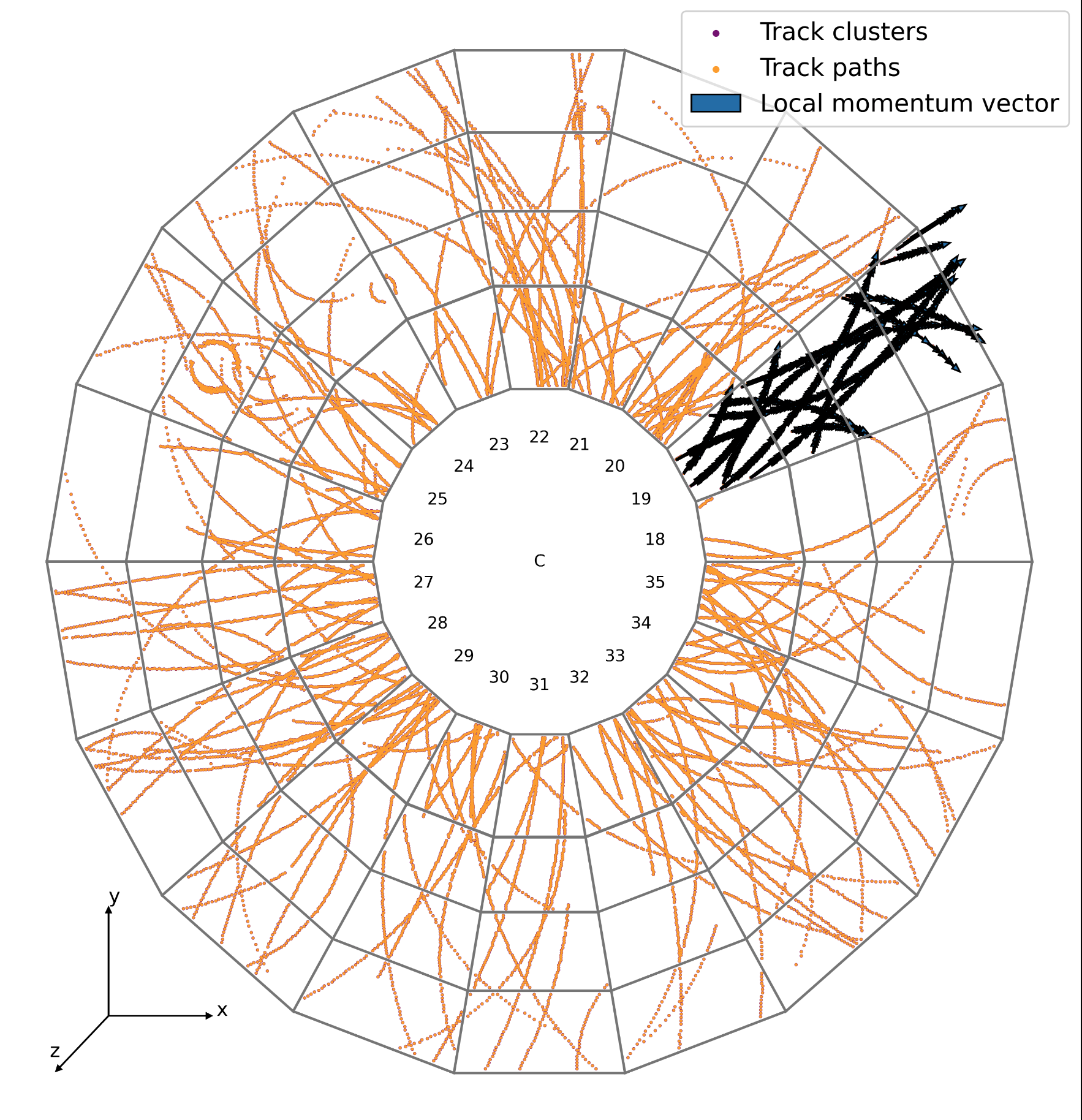

TPC ML Clustering
Speaker: Christian Sonnabend (CERN, Heidelberg University (DE))

Several smaller updates

- Brought Nicodemos up to speed for playing with ONNX execution providers / optimizing inference

- Created the header for execution: for inference performance reasons it's easiest to work with ORT directly in the code, hence a header instead of a library.

- Throughput went from 27 M clusters/s down to ~15 M clusters/s just because of dtype conversions and data writes.

- Figured out the issue why tracks seem to be propagated outside the TPC boundaries -> sector crossing

- Then also the momentum vectors are aligned

Next up

- Adding in the SC distortions and correcting the mapping from z to time (currently using LinearZ2Time)

- Calculate the MSE for cluster-to-track residuals and shift time coordinate of the track to minimize that value (if linear mapping is good enough)
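
The planned time-shift fit has a closed form if the shift enters the residuals linearly: with residuals r_i = t_cluster,i - t_track,i and a common track-time shift Δ, the MSE (1/N) Σ (r_i - Δ)^2 is minimized at Δ = mean(r_i). A minimal sketch under that assumption (one shift per track, residuals already expressed in time units); the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <numeric>
#include <vector>

// Mean squared error of cluster-to-track time residuals after shifting the
// track time by `delta`, i.e. each residual becomes r_i - delta.
inline double mseAfterShift(const std::vector<double>& residuals, double delta) {
  if (residuals.empty()) return 0.0;
  double sum = 0.0;
  for (double r : residuals) {
    const double d = r - delta;
    sum += d * d;
  }
  return sum / residuals.size();
}

// Shift minimizing the MSE: d/d(delta) sum_i (r_i - delta)^2 = 0 gives
// delta = mean(r_i). Only valid if the shift enters the residuals linearly.
inline double bestTimeShift(const std::vector<double>& residuals) {
  if (residuals.empty()) return 0.0;
  return std::accumulate(residuals.begin(), residuals.end(), 0.0) / residuals.size();
}
```

If the z-to-time mapping turns out not to be linear enough, the closed form no longer holds and `mseAfterShift` would have to be minimized numerically instead.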

4

ITS Tracking
Speaker: Matteo Concas (CERN)

- ITS GPU tracking:

- Fixed crashing when in sync mode

- Inconsistency HIP vs CUDA/CPU in deterministic mode seems to be:

- Related and proportional to the number of threads and blocks used: (1B,1T) => no issue, (1B,2T) => first discrepancy in a single candidate, (20B, 256T) => tens of discrepancies; the fits seem to fail (to be confirmed).

- Related to the material correction in the propagator: using materialCorrection::NONE and (20,256) gives consistency back

- Non-trivial to reproduce: it does not happen systematically; e.g., in the current pp dataset the first discrepancies appear at the second TF and third iteration.

- Will dump the seeds on a file and create a simple reproducer to process them, as I am not sure randomising track parametrisations would spot the issue.

- Inconsistency between the GPU WF and the dedicated workflow remains also with disabled matbud correction; a candidate could be the mag field. We don't use the polynomial field, but the constant can still be slightly different (to be re-checked).

- DCA fitter on GPU (#13447):

- Goal: have a library exposing CPU calls/wrappers to kernel executions, something similar to the cuda::thrust approach. Minimal functionality for me would be a clone of testDCAFitterN that calls `doProcessOnDevice(&fitter, &track1, &track2)` instead of `fitter.process(t1, t2)`.

- Current layout has:

- a `DCAFitterCUDA` lib, privately and separately linked to the `GPUTrackingCUDAExternalProvider` lib (for TrackParCov and later Propagator definitions). There I also define the kernels, so as to have everything in the same TU.

- a `DCAFitterAPICUDA` lib, privately linked to the previous one, to expose only the CPU interface to any linking target (e.g. testDCAFitter), so that both DCAFitterN and DCAFitterNCUDAAPI can possibly be linked.

- Problem: on CUDA it compiles, but just ignores the CPU calls on a host fitter object if I only link with the *CUDAAPI lib, whereas it does not call the GPU part if I link to both.

- HIP: it does not link the API lib, complaining about some missing definitions; it seems clang is stricter than nvcc in this respect, _or_ I am not using separate compilation correctly with it.

- Problems can be a bad design pattern, a bug in the TUs, or my misinterpretation of some concepts.

    ldd o2-test-gpu-DCAFitterNCUDA
    ...
    libO2DCAFitterAPICUDA.so => /ssd_data/builds/latest/sw/BUILD/O2-latest-gpu-dca-fitter/O2/stage/lib/libO2DCAFitterAPICUDA.so (0x0000732bc9b92000)
    libO2DCAFitter.so => /ssd_data/builds/latest/sw/BUILD/O2-latest-gpu-dca-fitter/O2/stage/lib/libO2DCAFitter.so (0x0000732bc9b57000)
    ...
    libO2DCAFitterCUDA.so => /ssd_data/builds/latest/sw/BUILD/O2-latest-gpu-dca-fitter/O2/stage/lib/libO2DCAFitterCUDA.so (0x0000732bc3600000)
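
For the planned ITS reproducer, seeds can be dumped as raw bytes of a trivially copyable struct, replayed through both the CPU and GPU code paths, and the outputs compared to find the first discrepancy. A minimal sketch, assuming a hypothetical flat `Seed` layout (5 track parameters plus bookkeeping) and a tolerance-based comparison; it does not reflect the actual ITS seed or track classes.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <fstream>
#include <string>
#include <type_traits>
#include <vector>

// Hypothetical flat seed: 5 track parameters plus bookkeeping.
struct Seed {
  float params[5];
  int rof;
  int iteration;
};
static_assert(std::is_trivially_copyable_v<Seed>, "dumped as raw bytes");

// Write the seeds to a binary file: element count followed by raw structs.
inline bool dumpSeeds(const std::string& path, const std::vector<Seed>& seeds) {
  std::ofstream out(path, std::ios::binary);
  const std::size_t n = seeds.size();
  out.write(reinterpret_cast<const char*>(&n), sizeof(n));
  out.write(reinterpret_cast<const char*>(seeds.data()),
            static_cast<std::streamsize>(n * sizeof(Seed)));
  return static_cast<bool>(out);
}

// Read seeds back; returns an empty vector on error.
inline std::vector<Seed> loadSeeds(const std::string& path) {
  std::ifstream in(path, std::ios::binary);
  std::size_t n = 0;
  if (!in.read(reinterpret_cast<char*>(&n), sizeof(n))) return {};
  std::vector<Seed> seeds(n);
  if (!in.read(reinterpret_cast<char*>(seeds.data()),
               static_cast<std::streamsize>(n * sizeof(Seed)))) return {};
  return seeds;
}

// Index of the first entry whose parameters differ by more than `tol`
// (e.g. CPU vs GPU output, here reusing the Seed layout for brevity),
// or -1 if both outputs agree.
inline long firstDiscrepancy(const std::vector<Seed>& a, const std::vector<Seed>& b, float tol) {
  const std::size_t n = std::min(a.size(), b.size());
  for (std::size_t i = 0; i < n; ++i) {
    for (int p = 0; p < 5; ++p) {
      if (std::fabs(a[i].params[p] - b[i].params[p]) > tol) return static_cast<long>(i);
    }
  }
  return a.size() == b.size() ? -1 : static_cast<long>(n);
}
```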

5

TPC Track Model Decoding on GPU
Speaker: Gabriele Cimador (Universita e INFN Trieste (IT))
