BiLD-Dev

Bi-weekly "Loyal" DIRAC developers meeting, followed by the LHCbDIRAC developers meeting.

Zoom: BiLD

https://cern.zoom.us/j/62504856418?pwd=TU1kb01SOFFpSDBJeWVBdU9qemVXQT09

Meeting ID: 62504856418

Passcode: 12345678

BiLD – 03/10/2024

At CERN: Federico, André, Christophe, Christopher

On Zoom: Janusz, Simon, Ruslan, Daniela, Hideki, Jorge

Apologies: Andrei, Alexey

hedgedoc is no longer reliable for these notes!

- All old notes are archived in https://demo-archive.hedgedoc.org/ (for another 4 months) but the new https://demo.hedgedoc.org/ is cleaned up ~daily (at least once every 24h).

- All old BiLD notes are also in Indico Agendas.

- Using codimd.web.cern.ch from now on

Follow-up from previous meetings

- Last BiLD was September 12th

- DiracX hackathon on 16th/17th Sept

- Last DIRAC certification hackathon on June 6th

- last on lbcertifdirac70, then attempted to set up new infrastructure, without success

- Discussion last week (26/9), see below

DIRAC communities roundtable

LHCb:

Federico+Alexandre+Christophe+Christopher+Alexey+Ryunosuke

- “Business as usual”, but stressing the system with a large number of Real Data productions. Performance of the Transformation System is getting critical (for the first time in many years).

ILC/Calice/FCC

André

- NTR

Belle2

Hideki, Ruslan

- Migration to EL9 cluster

- from previous meeting Can we use the TS to set Tags on its job? – open discussion

GridPP:

Daniela, Simon, Janusz

- No news.

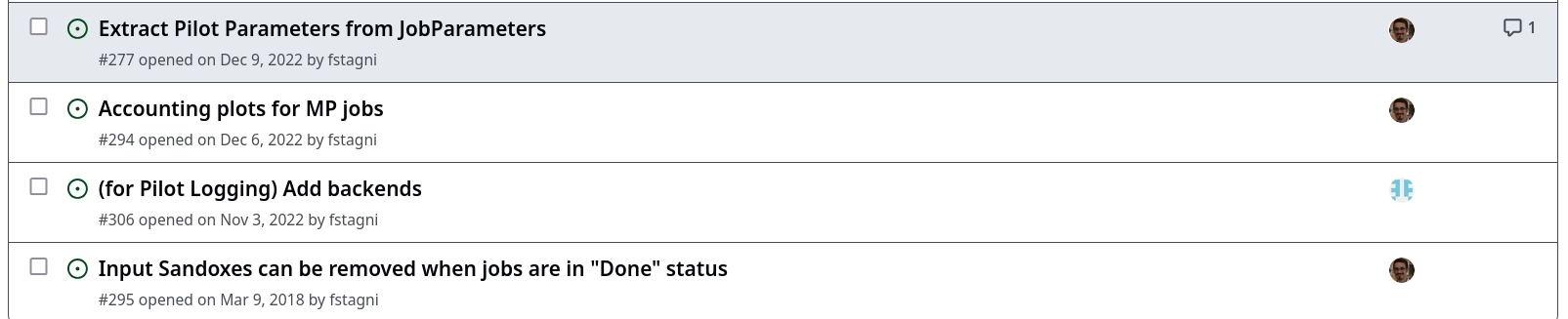

Topics from GitHub/Discussions

only un-answered topics with discussion updates:

DIRAC releases

- v8r0

- 8.0.53

- NEW: (#7741) RequestTaskAgent uses getBulkRequestStatus instead of getRequestStatus

DIRAC projects

DIRAC:

Issues by milestone:

- v8.0:

- Auto-reschedule jobs waiting for long time?

- Discussion: it would be helpful to monitor these jobs, but no auto reset

- FTS3Agent deadlocks when running multiple agents

- job status transition in ReqClient vs JobStatus

- Removal of “Systems” part of CS

- v9.0:

- Few issues moved to DiracX

- from previous meeting Is it possible to add a last chance option for file transfers via FTS?

- closed

- from previous meeting Pilot status for pilots in PollTime related sleep cycle

- interesting discussion, feel free to contribute

PRs discussed:

- from previous meeting [9.0] remove JobDB’s site mask

WebApp:

- NTR

Pilot:

- from previous meeting Janusz some doc to write

DIRACOS:

- Needs PRs reviewed and a release

- from previous meeting Installation errors for diracos

- from previous meeting Made a new release with libxml2 downgraded. Issue opened to gfal2 for proper fix; FTS/gfal developers are going to look at this now

- ==> a new release is there, pending in conda-forge

- from previous meeting Requests for Apple-M2 compatibility

- also asked to update doc…

Documentation:

- from previous meeting Need to decide on strategy for DiracX documentation – André to take care?

OAuth2:

- NTR

management

- from previous meeting Always upload releases to CVMFS

- still not working (did not work for 8.0.53)

- Andrei created a new script, so PR needed

diraccfg

- NTR

DB12

- NTR

Rucio

- NTR

Tests

- NTR

DiracX:

The DiracX hackathon 2 weeks ago was fruitful: some PRs were created and knowledge increased in general.

Issues

- Christophe “I will go through all issues” next week

PRs discussed:

- from previous meeting Janusz Implementation of PilotAgentsDB schema:

- https://github.com/DIRACGrid/diracx/pull/292

- https://github.com/DIRACGrid/diracx-charts/pull/112

- Probably both OK, start with un-drafting

DiracX-charts:

- Also PRs to review (rather simple)

container-images:

- NTR

Release planning, tests and certification

Certification machines

- from previous meeting Federico the support level for running services at in2p3 is not sufficient. I will move to k3s at CERN for everything in order to restart things

- I would run all DBs, including MySQL, through k3s. This is of course not ideal for a production environment, but for the test setup it is hopefully OK

- 3 VMs for DiracX

- 1 VM for DIRAC v9

- The plan above did not fully work, so last Thursday we discussed what to do and:

- For the moment (which might be a long “moment”) keep using the old setup at CERN:

- lbcertifdirac70 for DIRAC code

- DBs from CERN

- DiracX running on paas.cern.ch (OpenShift)

- keep investigating the other solutions, but with a lesser priority

Next hackathon(s)

- next week on Tuesday (!)

Next appointments

Meetings:

- hackathon: Tuesday 8th

- BiLD: in 4 weeks (Oct 31st)

WS/hackathons/conferences:

- CHEP 2024 19th-25th Oct, Krakow

- 1 talk in plenary (Federico)

- 2 talks in parallel (Natthan, Xiaowei)

- 1 poster (Alexandre)

- DiracX hackathon: 14th-15th January 2025, CERN https://indico.cern.ch/event/1458873/

- Dirac&Rucio mini-workshop and hackathon: 16th-17th January 2025, CERN https://indico.cern.ch/event/1443765/

- Dirac Users’ Workshop: 17th-20th September 2025 - https://indico.cern.ch/e/duw11

AOB

- NTR

LHCbDIRAC

- BKK:

- getDescendants stuff: Alexey next week will finish the MR

- will get hotfixed before

- Chris added 2 new methods to speed up the consistency checks

- Chris DM consistency check: “insane” query

- new implementation, started reviving the discussion with IT

- maybe need to use a materialized view

- Ryun is adding stuff for running LbConda inside the LHCb transformation jobs

- Ryun possibility of running on a subset of a BkQuery for prescaling

- from previous meeting Some runs without luminosity. Total luminosity is there.

- Frèderic Hemmer to check it