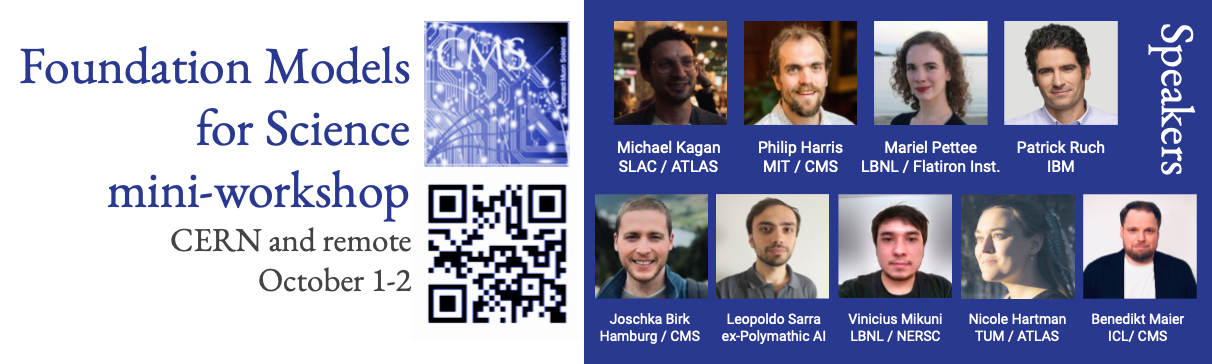

Speaker

Michael Kagan

(SLAC National Accelerator Laboratory (US))

Description

Foundation models, which rely on massive parameter counts, datasets, and compute, have proven extremely powerful in computer vision and natural language processing. Can they be built for High Energy Physics (HEP)? Doing so requires addressing several challenges. One is learning how to develop training strategies for HEP data, since such strategies are often specific to a data type. In this talk, we will discuss our first steps towards building HEP foundation models using self-supervised training methods, such as masking strategies. We will also explore how pre-trained models can encode general knowledge that proves highly useful when adapted to a variety of tasks.