
Alice Weekly Meeting: Software for Hardware Accelerators / PDP-SRC


Europe/Zurich


1. Discussion

Speakers: David Rohr (CERN), Giulio Eulisse (CERN)

Color code: (critical, news during the meeting: green, news from this week: blue, news from last week: purple, no news: black)

High priority Framework issues:

- Fix dropping lifetime::timeframe for good: still pending: problem with CCDB objects getting lost by DPL, leading to "Dropping lifetime::timeframe"; saw at least one occasion during SW validation.

- Newly spotted bogus message about decreased oldestPossible counter should be suppressed. Status?

- Start / Stop / Start: 2 problems on O2 side left:


- All processes are crashing randomly (usually ~2 out of >10k) when restarting. Stack trace hints to FMQ. https://its.cern.ch/jira/browse/O2-4639

- TPC ITS matching QC crashing when accessing CCDB objects. Not clear if this is the same problem as above, or a problem in the task itself.


- Stabilize calibration / fix EoS: new scheme: https://its.cern.ch/jira/browse/O2-4308: reported 2 issues to Giulio, waiting for a fix.

- Fix problem with ccdb-populator: no idea yet; since Ole left, someone else will have to take care of it.

Global calibration topics:

- TPC IDC and SAC workflow issues to be reevaluated with new O2 at restart of data taking. Cannot reproduce the problems any more.

Sync reconstruction

- Waiting for RC to test COSMIC replay data set.

- Waiting for RC to test STOP timeout impact.

- Several software updates with cherry-pick of detector code and new QC versions.

Async reconstruction

- Remaining oscillation problem: GPUs sometimes stall for a long time, up to 2 minutes. Checking 2 things:

- Does the situation improve without GPU monitoring? Inconclusive.

- Increasing the priority of the GPU processes can serve as a mitigation, but does not fully fix the issue.

- MI100 GPU stuck problem will only be addressed after AMD has fixed operation with the latest official ROCm stack.

- Limiting factor for the pp workflow is now the TPC time series, which is too slow and creates backpressure (costs ~20% performance on EPNs). Enabled multi-threading as recommended by Matthias; need to check if it works.

- Problem reported by Max/Ruben: analysis fails because at EOR we sometimes receive TFs with no data and a bogus orbit.

- Giulio will implement tf-status message (from https://github.com/AliceO2Group/AliceO2/pull/13495) sent by readout-proxy.


EPN major topics:

- Fast movement of nodes between async / online without EPN expert intervention.

- 2 goals I would like to set for the final solution:

- It should not be needed to stop the SLURM schedulers when moving nodes, there should be no limitation for ongoing runs at P2 and ongoing async jobs.

- We must not lose which nodes are marked as bad while moving.


- Interface to change SHM memory sizes when no run is ongoing. Otherwise we cannot tune the workflow for both Pb-Pb and pp: https://alice.its.cern.ch/jira/browse/EPN-250

- Lubos to provide an interface to query the current EPN SHM settings. ETA July 2023, status?

- Improve DataDistribution file replay performance: currently it cannot replay faster than 0.8 Hz, so we cannot test the MI100 EPNs in Pb-Pb at nominal rate, and cannot test the pp workflow for 100 EPNs in the FST since DD injects TFs too slowly. https://alice.its.cern.ch/jira/browse/EPN-244 NO ETA

- DataDistribution distributes data round-robin in the absence of backpressure, but it would be better to distribute based on buffer utilization and give more data to the MI100 nodes. Currently, we drive the MI50 nodes at 100% capacity with backpressure, and only backpressured TFs go to the MI100 nodes. This increases the memory pressure on the MI50 nodes, which is a critical point anyway. https://alice.its.cern.ch/jira/browse/EPN-397

- TfBuilders should stop in ERROR when they lose connection.

- Allow epn user and grid user to set nice level of processes: https://its.cern.ch/jira/browse/EPN-349

- Tentative time for ALMA9 deployment: December 2024.
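To illustrate the difference between the current round-robin distribution and the buffer-utilization-based scheme requested in EPN-397, here is a minimal C++ sketch. The `Node` struct, its fields, and both `pick...` functions are hypothetical and not part of DataDistribution; the larger buffer capacity simply stands in for the bigger MI100 nodes.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical view of one EPN receive buffer (not a DataDistribution type).
struct Node {
  std::string name;
  double usedBytes;      // currently occupied buffer
  double capacityBytes;  // total buffer size (assumed larger on MI100 nodes)
};

// Round-robin: ignores the load and simply cycles through the nodes.
std::size_t pickRoundRobin(std::size_t& cursor, std::size_t nNodes) {
  std::size_t chosen = cursor % nNodes;
  ++cursor;
  return chosen;
}

// Utilization-based: send the next TF to the node with the lowest used/capacity ratio.
std::size_t pickLeastUtilized(const std::vector<Node>& nodes) {
  std::size_t best = 0;
  for (std::size_t i = 1; i < nodes.size(); ++i) {
    double u = nodes[i].usedBytes / nodes[i].capacityBytes;
    double uBest = nodes[best].usedBytes / nodes[best].capacityBytes;
    if (u < uBest) best = i;
  }
  return best;
}
```

With an MI50 node at 90% utilization and an MI100 node with twice the buffer at 50%, the utilization-based choice goes to the MI100 node, whereas round-robin alternates regardless of load.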

Other EPN topics:

- Check NUMA balancing after SHM allocation, sometimes nodes are unbalanced and slow: https://alice.its.cern.ch/jira/browse/EPN-245

- Fix problem with SetProperties string > 1024/1536 bytes: https://alice.its.cern.ch/jira/browse/EPN-134 and https://github.com/FairRootGroup/DDS/issues/440

- After software installation, check whether it succeeded on all online nodes (https://alice.its.cern.ch/jira/browse/EPN-155) and consolidate software deployment scripts in general.

- Improve InfoLogger messages when environment creation fails due to too few EPNs / calib nodes available, ideally report a proper error directly in the ECS GUI: https://alice.its.cern.ch/jira/browse/EPN-65

- Create user for epn2eos experts for debugging: https://alice.its.cern.ch/jira/browse/EPN-383

- EPNs sometimes get into a bad state with the CPU stuck, probably due to the AMD driver. To be investigated and reported to AMD.

- Understand different time stamps: https://its.cern.ch/jira/browse/EPN-487

Full system test issues:

Topology generation:

- Deployed a workaround in O2DPG GenTopo to delete the repository and check it out anew if this happens. To be seen if this fixes it fully.

AliECS related topics:

- Extra env var field still not multi-line by default.

GPU ROCm / compiler topics:

- ROCm 6.2.2 available from AMD; old problems seem fixed, but we see 2 new types of crashes:

- GPU memory error: no update

- Server crash: AMD needs serial console access to check for kernel messages, since the server just disappears; hopefully this helps.

- New miscompilation for ROCm > 6.0

- Waiting for AMD to fix the reproducer we provided (not yet fixed in 6.2.2, but we have a workaround).


- Try to find a better solution for the problem with __device__ inline functions leaking symbols in the host code.

- Created PR to bump GCC to 14.2 (just to test).

- GCC14 PR now green despite the CUDA problem in FullCI; now we have to wait for a new CUDA version.

TPC GPU Processing

- WIP: Use alignas() or find a better solution to fix the alignment of Monte Carlo labels: https://its.cern.ch/jira/browse/O2-5314

- Waiting for TPC to fix bogus TPC transformations for good, then we can revert the workaround.

- Waiting for TPC to check PR which uses full cluster including average charge and occupancy map errors during seeding.
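As a generic illustration of the alignment item above (not the actual O2 label classes), the C++ sketch below shows the two usual tools: `alignas` to raise the alignment of a type or member, and rounding a byte offset up before placing objects into a flat buffer. `AlignedLabel`, `FlatBlock`, and `alignOffset` are hypothetical names.

```cpp
#include <cstddef>
#include <cstdint>

// alignas on a type raises its alignment requirement (here to 16 bytes, e.g. for
// wider device loads); sizeof is padded up to a multiple of the alignment.
struct alignas(16) AlignedLabel {
  std::uint64_t bits;
};

// alignas on a member forces padding in front of it: after a 12-byte header,
// `labels` starts at offset 16, even on ABIs where uint64_t is only 4-byte
// aligned inside structs.
struct FlatBlock {
  std::uint32_t header[3];
  alignas(8) std::uint64_t labels[4];
};

// Round a byte offset in a manually laid-out buffer up to the next multiple of
// `alignment` (which must be a power of two).
constexpr std::size_t alignOffset(std::size_t offset, std::size_t alignment) {
  return (offset + alignment - 1) & ~(alignment - 1);
}

static_assert(alignof(AlignedLabel) == 16, "alignas(16) applied");
static_assert(sizeof(AlignedLabel) == 16, "size padded to the alignment");
static_assert(offsetof(FlatBlock, labels) == 16, "labels padded to 8-byte boundary");
static_assert(alignOffset(12, 8) == 16, "12 rounded up to 16");
```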

TPC processing performance regression:

- Final solution: merging transformation maps on the fly into a single flat object: Still WIP

General GPU Processing


2. Following up JIRA tickets

Speaker: Ernst Hellbar (CERN)

Low-priority framework issues https://its.cern.ch/jira/browse/O2-5226

- Grafana metrics: Might want to introduce additional rate metrics that subtract the header overhead to have the pure payload: low priority.

- Backpressure reporting when there is only 1 input channel: no progress

- Merged workflow fails if outputs defined after being used as input

- needs to be implemented by Giulio

- Cannot override options for individual processors in a workflow

- requires development by Giulio first

- Problem with 2 devices of the same name

- Usage of valgrind in external terminal: The testcase is currently causing a segfault, which is an unrelated problem and must be fixed first. Reproduced and investigated by Giulio.

- Run getting stuck when too many TFs are in flight.

- Do not use string comparisons to derive processor type, since DeviceSpec.name is user-defined.

- Support in DPL GUI to send individual START and STOP commands.

- DPL sending SHM metrics for all devices, not only input proxy

- Some improvements to ease debugging with the GUI:

- Show in the debugGUI which input is condition / optional / timeframe

- done https://github.com/AliceO2Group/AliceO2/pull/13628

- using `InputRoute`/`OutputRoute` in addition to `DataProcessingState`

- inputs/outputs consistent between the two, also in case of pipelining

- Show data origin/description in the info box when hovering over a processor spec

- ROOT messages in the output of a workflow should not generally be interpreted as errors

- done https://github.com/AliceO2Group/AliceO2/pull/13635

- for unknown log level, check for typical ROOT messages (`Info in`, ...) at the beginning of the message string to assign the proper log level

- assign color (`YELLOW`) for `ALARM` messages

- will implement something similar in `Utilities/EPNMonitoring/src/EPNstderrMonitor.cxx` for EPN InfoLogger

- also check stderr log output from Driver when running locally and assign proper log level in this third case

- Add an additional check at DPL level to make sure the firstOrbit received from all detectors is identical when creating the TimeFrame first orbit.

- Implement a proper solution to detect whether a device is firstInChain

- Deploy topology with DPL driver

- DPL does not shut down when one process exhibits FATAL state

- fixed by https://github.com/AliceO2Group/AliceO2/pull/13654

- setting the default `error-policy` to `QUIT` in case of `no-batch` + no GUI window
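The heuristic described above for messages without a known log level (checking for typical ROOT prefixes at the start of the string) could look roughly like the C++ sketch below. It assumes ROOT's default error handler prints lines such as `Warning in <TFile::Init>: ...`; the `LogLevel` enum and `guessLogLevel` are hypothetical and not the code merged in the PR.

```cpp
#include <string_view>

// Hypothetical severities; the real DPL / InfoLogger levels are not reproduced here.
enum class LogLevel { Unknown, Info, Warning, Error, Fatal };

// Guess the level of a line with no explicit level from the prefix that ROOT's
// default error handler typically emits ("<Level> in <location>: message").
LogLevel guessLogLevel(std::string_view line) {
  struct Prefix {
    std::string_view text;
    LogLevel level;
  };
  // Mapping SysError to Error is an assumption of this sketch.
  constexpr Prefix prefixes[] = {
    {"Info in <", LogLevel::Info},
    {"Warning in <", LogLevel::Warning},
    {"Error in <", LogLevel::Error},
    {"SysError in <", LogLevel::Error},
    {"Fatal in <", LogLevel::Fatal},
  };
  for (const auto& p : prefixes) {
    // Only match at the very beginning of the message string.
    if (line.substr(0, p.text.size()) == p.text) {
      return p.level;
    }
  }
  return LogLevel::Unknown;
}
```

Anchoring the match at position 0 keeps an ordinary message that merely mentions "Error in <...>" somewhere in its text from being reclassified.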

PDP-SRC issues

- Check if we can remove dependencies on `/home/epn/odc/files` in DPL workflows to remove the dependency on the NFS

- reading / writing already disabled

- remaining checks for file existence?

- check after Pb-Pb by removing files and find remaining dependencies

- `logWatcher.sh` and `logFetcher` scripts modified by EPN to remove dependencies on the `epnlog` user

- node access privileges fully determined by e-groups

- new `log_access` role to allow access in `logWatcher` mode to retrieve log files, e.g. for on-call shifters

- to be validated on STG

- waiting for EPN for further feedback and modifications of the test setup


3. TPC ML Clustering

Speaker: Christian Sonnabend (CERN, Heidelberg University (DE))

Update on cluster-overlap study

- Cluster overlap is counted as follows:

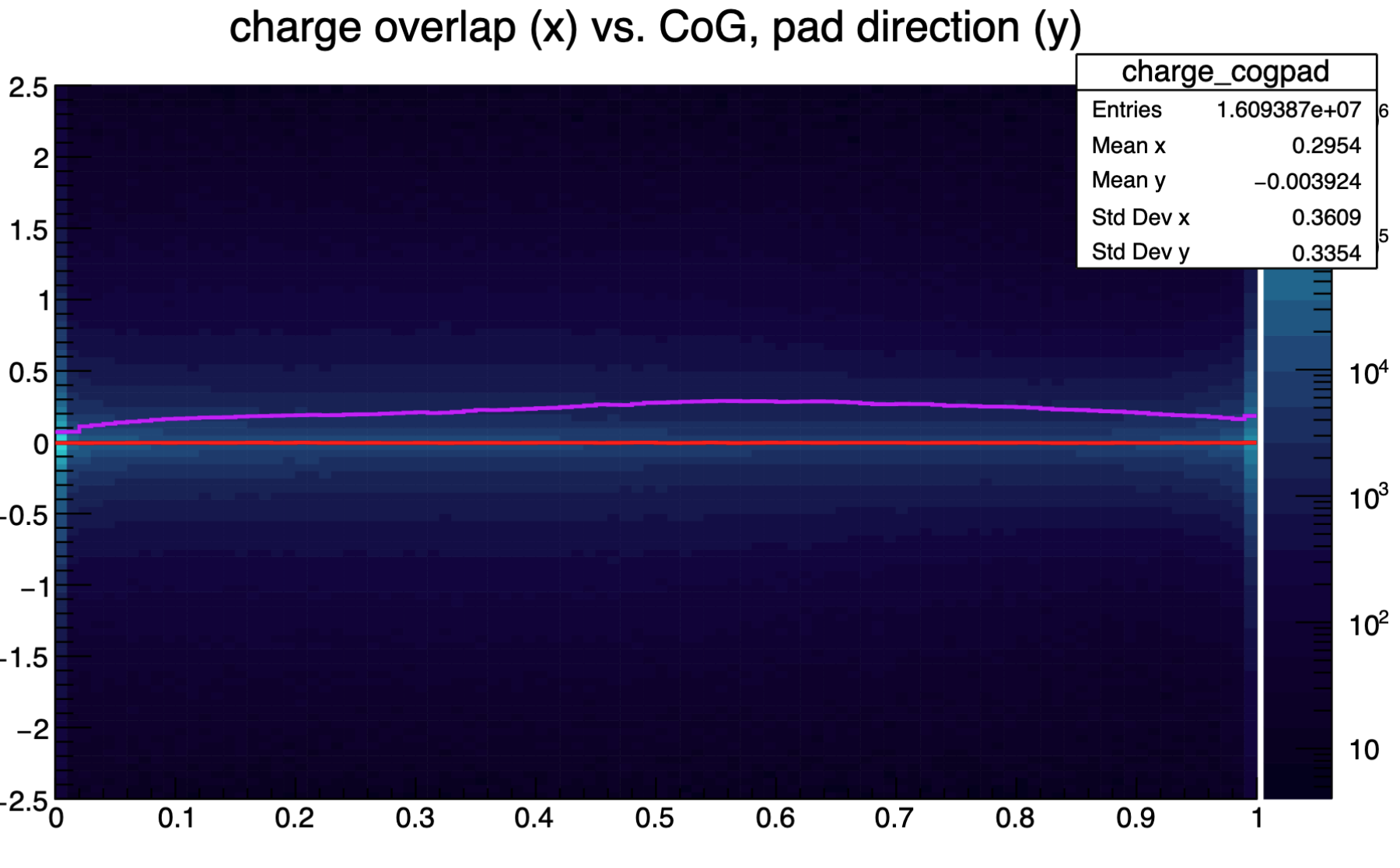

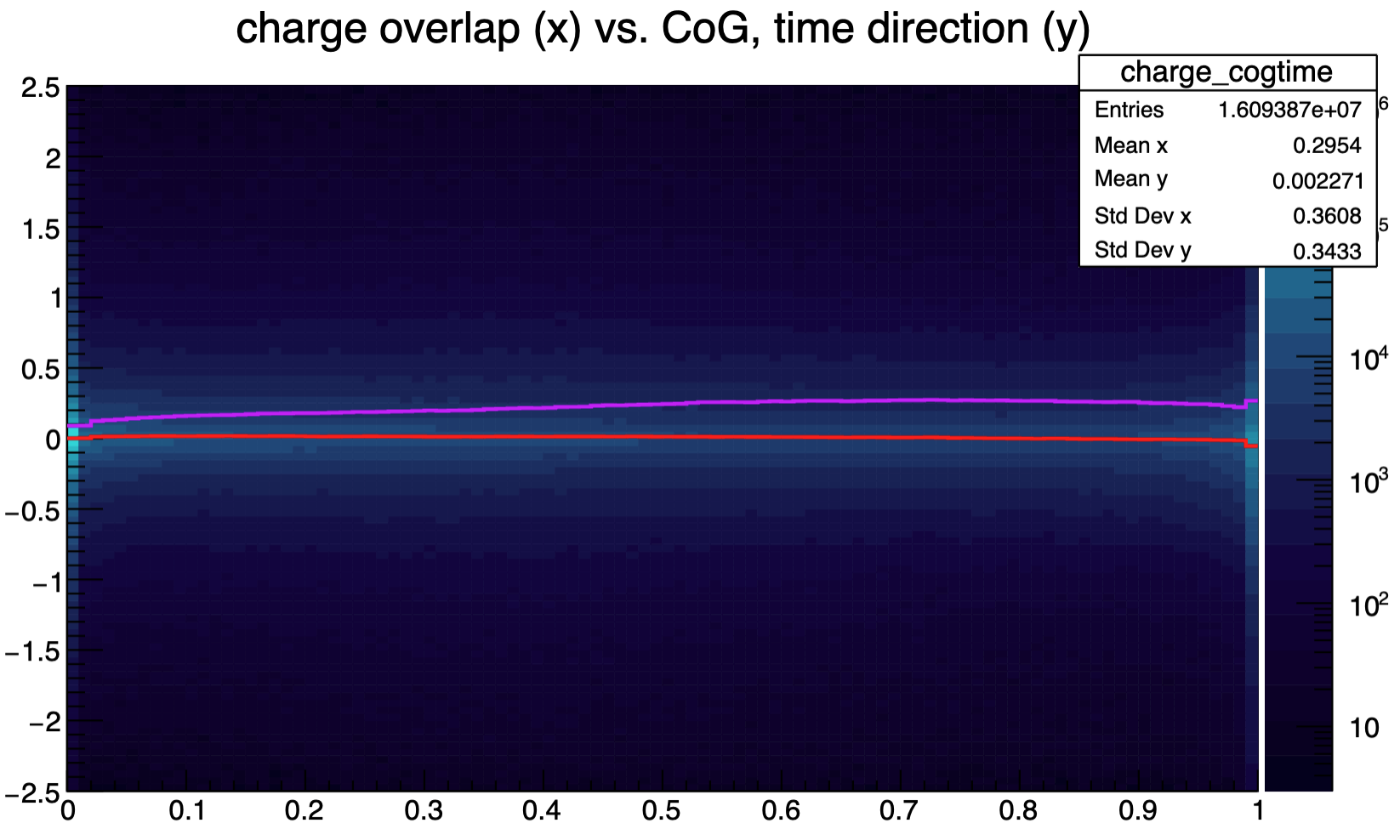

- Charge overlap: for the cluster with MC-ID i, sum its charges (of MC-ID i) in those pad-time bins that also have contributions from other clusters with MC-ID j != i, and divide by the total charge of cluster i (range between 0 and 1).

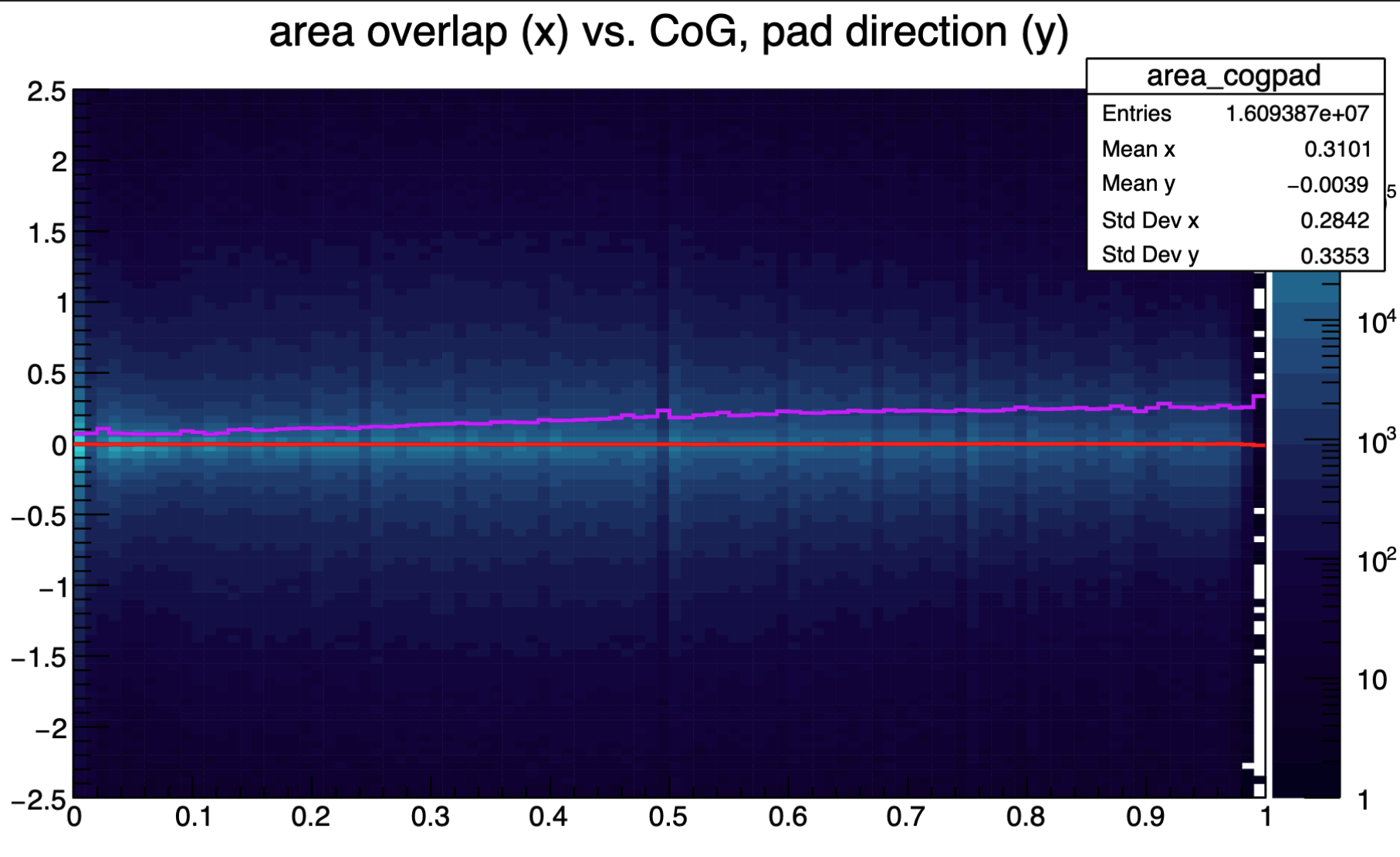

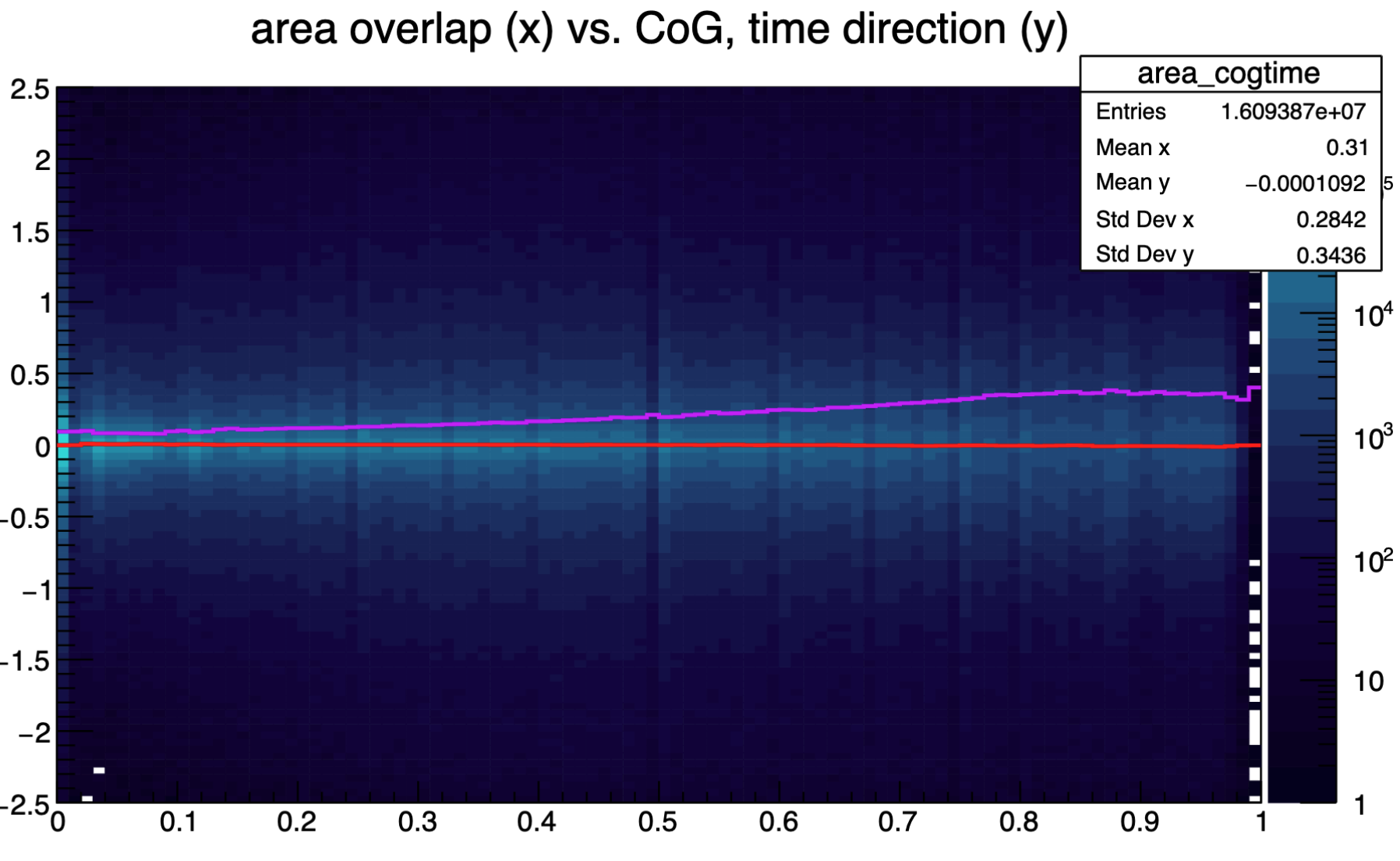

- Area overlap: count all pad-time bins where charges of both MC-ID i and some MC-ID j != i contributed, and divide by the number of all pad-time bins where charge of MC-ID i is present.

- Caveat: loopers are probably not captured well with this technique, as I count all charges (not bound by pad-time windows).
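To make the two overlap definitions concrete, here is a small C++ sketch computing both quantities for one MC-ID. It assumes the digits are available as a map from a (pad, time) bin to the charge contributed by each MC-ID, storing only nonzero contributions; this layout and the function names are hypothetical, not the code used in the study.

```cpp
#include <map>
#include <utility>

// Hypothetical layout: (pad, time) bin -> (MC-ID -> charge contributed in that bin).
// Only nonzero contributions are assumed to be stored.
using Bin = std::pair<int, int>;
using BinCharges = std::map<Bin, std::map<int, float>>;

// Fraction of cluster i's charge located in bins where some MC-ID j != i also contributes.
float chargeOverlap(const BinCharges& bins, int i) {
  float shared = 0.f, total = 0.f;
  for (const auto& entry : bins) {
    const auto& contrib = entry.second;
    auto it = contrib.find(i);
    if (it == contrib.end()) continue;             // bin not touched by MC-ID i
    total += it->second;
    if (contrib.size() > 1) shared += it->second;  // another MC-ID is present as well
  }
  return total > 0.f ? shared / total : 0.f;
}

// Fraction of the bins containing charge of MC-ID i that also contain charge of some j != i.
float areaOverlap(const BinCharges& bins, int i) {
  int shared = 0, total = 0;
  for (const auto& entry : bins) {
    const auto& contrib = entry.second;
    if (contrib.count(i) == 0) continue;
    ++total;
    if (contrib.size() > 1) ++shared;
  }
  return total > 0 ? static_cast<float>(shared) / total : 0.f;
}
```

For example, if MC-ID 1 deposits charge 2 in each of two bins and one of those bins also receives charge from MC-ID 2, both the charge overlap and the area overlap of cluster 1 are 0.5. The two measures diverge when the shared bins carry a larger or smaller share of the charge than their share of the area.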

Correlation of area and charge overlap is visible, but not as strong as I had expected...

Centre-of-gravity resolution of NN, area overlap

Centre-of-gravity resolution of NN, charge overlap

Morale booster

~30 minutes ago, the clusterization project was presented in the physics forum by the ALICE ML group and highlighted as the most interesting and beneficial project for the entire collaboration from the ML group side 🥳

Plans & To-Do's


- Neural networks

- Cluster splitter network

- N-class classifier network: probably only going up to split level 2 or 3; higher levels get really sparse in training data.

- N-class regression network: similar approach to the N-class classifier, but need to see how good the performance is...

- Pass momentum vector to downstream reconstruction


- GPU developments

- Pull requests

- O2

- PR, ORT library integration: https://github.com/AliceO2Group/AliceO2/pull/13522

- PR, Full clusterization integration: https://github.com/AliceO2Group/AliceO2/pull/13610

- alidist

- PR, ORT GPU build: https://github.com/alisw/alidist/pull/5622


- Issues & Feature requests

- OnnxRuntime

- Coming up...


- QA task & algorithmic developments

- Include SC distortion simulation

- Use black PbPb data to evaluate performance and for training

- Redo 2D study with NN


4. ITS Tracking

Speaker: Matteo Concas (CERN)

ITS GPU tracking

- General priorities:

- Focusing on porting everything possible to the device, extending the state of the art, and minimising computing on the host.

- Optimizations via intelligent scheduling and multi-streaming can happen right after.

- Kernel-level optimisations to be investigated.

- Neighbour finding ported: (#13636)

- We have our "first" non-deterministic effect, at the ~per-mille level, due to the ranking of the Cells being subject to concurrency.

- Deterministic mode now implies <<<1,1>>> launch settings (a single block with a single thread) to restore 1:1 coherence with the CPU.

- Will leave it as it is for now; the overall impact and possible action will be evaluated once the porting is fully finalised.

- Cell finding ported: (#13653)

- No concurrency: deterministic out of the box.

- Pb-Pb: already 1.5x faster than CPU with single-stream serialization + deterministic flags; further speed-up factors expected from multi-streaming over the different layers.

- TODO:

- Tracklet finding to be ported back: work in progress right now.

- Reproducer for HIP bug on multi-threaded track fitting: no progress yet.

- Move more of the tricky track-finding steps to the GPU: no progress yet.

- Fix possible execution issues and known discrepancies when using `gpu-reco-workflow`: no progress yet; will start after the tracklet finding is ported.
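The non-deterministic effect in the neighbour finding mentioned above is the generic consequence of concurrent workers claiming output slots through an atomic counter: the set of results is the same, but their order depends on scheduling. The C++ sketch below uses `std::thread` in place of GPU threads and hypothetical names (`Entry`, `fillConcurrently`, `makeDeterministic`). It shows the effect and one common alternative to a serial `<<<1,1>>>` launch, namely sorting the output by a stable key afterwards; whether that is applicable to the ITS Cell ranking is not established here.

```cpp
#include <algorithm>
#include <atomic>
#include <thread>
#include <vector>

// Hypothetical output record: `key` is a stable identifier (index of the input that
// produced it), `value` is the payload.
struct Entry {
  int key;
  int value;
};

// Each worker claims an output slot via an atomic counter (as a GPU kernel would with
// atomicAdd). Every slot is written by exactly one thread, but which input lands in
// which slot depends on scheduling, so the order is not reproducible.
std::vector<Entry> fillConcurrently(int nInputs, int nThreads) {
  std::vector<Entry> out(nInputs);
  std::atomic<int> nextSlot{0};
  std::vector<std::thread> workers;
  for (int t = 0; t < nThreads; ++t) {
    workers.emplace_back([&, t] {
      for (int i = t; i < nInputs; i += nThreads) {
        int slot = nextSlot.fetch_add(1);
        out[slot] = {i, i * i};
      }
    });
  }
  for (auto& w : workers) w.join();
  return out;
}

// Restore a deterministic order by sorting on the stable key.
void makeDeterministic(std::vector<Entry>& entries) {
  std::sort(entries.begin(), entries.end(),
            [](const Entry& a, const Entry& b) { return a.key < b.key; });
}
```

The trade-off is an extra sort pass, instead of giving up all parallelism as a single-thread launch does.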

DCAFitterGPU

- Deterministic approach via using `SMatrixGPU` on the host, under a particular configuration: no progress yet.


5. TPC Track Model Decoding on GPU

Speaker: Gabriele Cimador (Universita e INFN Trieste (IT))

Currently doing: code duplication fix

- Same decompressTrack() function present in both old and new decoding

- decompressTrackStore() present both in old and new decoding

- The old one creates a ClusterNative object and stores it in a vector using emplace_back

- The new one stores the ClusterNative object using a plain array + counter

- Try to remove old decompressTrack()

- Use variadic templates in the new decompressTrack() function

- Move the vector-based decompressTrackStore() into the new code

- Depending on the unpacked template, the correct decompressTrackStore() is called
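The planned refactoring, a single variadic `decompressTrack()` that forwards its trailing arguments to the matching `decompressTrackStore()` overload, can be sketched in C++ as below. `Cluster` is a simplified stand-in for `ClusterNative`, and all signatures are assumptions for illustration, not the actual O2 interfaces.

```cpp
#include <cstddef>
#include <vector>

// Simplified stand-in for o2::tpc::ClusterNative (hypothetical fields).
struct Cluster {
  float time;
  float pad;
};

// Old-style storage: append to a std::vector.
inline void decompressTrackStore(const Cluster& c, std::vector<Cluster>& out) {
  out.emplace_back(c);
}

// New-style storage: plain array plus a running counter.
inline void decompressTrackStore(const Cluster& c, Cluster* out, std::size_t& count) {
  out[count++] = c;
}

// One decoding routine for both cases: the storage arguments are passed through
// unchanged, so overload resolution on the unpacked pack picks decompressTrackStore().
template <typename... Args>
void decompressTrack(int nClusters, Args&&... storeArgs) {
  for (int i = 0; i < nClusters; ++i) {
    Cluster c{static_cast<float>(i), 0.5f * i};  // placeholder for the real decoding
    decompressTrackStore(c, storeArgs...);
  }
}
```

Calling `decompressTrack(n, vec)` selects the vector overload, while `decompressTrack(n, array, counter)` selects the array + counter one, so the decoding loop itself exists only once. The arguments are deliberately not `std::forward`ed, since they are reused on every iteration.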

Still to do:

- Update standalone benchmark to use new decoding with --compressionStat

- Check pp decoding performance (was a bit lower than expected)


6. Efficient Data Structures

Speaker: Dr Oliver Gregor Rietmann (CERN)


Context

- Create data structures for controlling the data layout (AoS vs SoA)

- These data structures should hide the underlying data layout.

- We want to change the underlying data layout without affecting the code using it.

Member Functions

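```cpp
#include <cstddef>
#include <iostream>
#include <string>
#include <vector>

template <typename T>
using identity = T;  // S<identity> is a plain single-element struct (AoS building block)

template <typename T>
using reference = T&;  // S<reference> is a proxy referring to one entry of the SoA

// Requires C++17 (structured bindings). Passing std::vector (which has a defaulted
// allocator parameter) as F relies on C++17 relaxed template template argument
// matching (P0522R0); older Clang versions need -frelaxed-template-template-args.
template <template <class> typename F, template <template <class> class> class S>
struct soa_wrapper : S<F> {
  // Returns a proxy whose members are references to the i-th entry of each array.
  S<reference> operator[](std::size_t i) {
    auto& [x, y, activation, identifier] = *this;
    return {x[i], y[i], activation[i], identifier[i]};
  }
};

struct Point2D { double x, y; };

template <template <class> class F>
struct S {
  F<int> x, y;
  F<Point2D> activation;
  F<std::string> identifier;

  int abs2() const { return x * x + y * y; }  // not defined for std::vector<int>
  int& getX() { return x; }
  void setX(int x_new) { x = x_new; }
};

int main() {
  soa_wrapper<std::vector, S> my_array;  // SoA: one std::vector per member
  my_array.x = {0, 1, 2};
  my_array.y = {0, 1, 2};
  my_array.activation = {{0, 1}, {2, 3}, {4, 5}};
  my_array.identifier = {"bla", "foo", "bar"};

  my_array[1].setX(10);  // writes through the proxy into my_array.x[1]
  my_array[1].getX();

  // prints 0, 101 and 8, one per line
  for (int i = 0; i < 3; ++i) std::cout << my_array[i].abs2() << std::endl;
  return 0;
}
```

Why is this compiling?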
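A likely answer to the question above: for a class template, implicit instantiation of the class instantiates only the *declarations* of its member functions, not their *definitions*. A definition is instantiated only when the function is actually used. `soa_wrapper<std::vector, S>` instantiates `S<std::vector>`, but `abs2()`, `getX()` and `setX()` are only ever called on the `S<reference>` proxies returned by `operator[]`, where `x` and `y` are `int&` and the bodies are valid. The minimal C++ sketch below, with hypothetical names, isolates this rule.

```cpp
#include <vector>

template <typename T>
struct Holder {
  T value;
  // Ill-formed for T = std::vector<int> (no operator*), but this definition is only
  // instantiated if square() is actually called for that T.
  int square() const { return value * value; }
};

// Instantiating the class itself is fine: only the member declarations are instantiated.
Holder<std::vector<int>> vectorHolder{{1, 2, 3}};

// Calling square() on Holder<int> instantiates its definition for T = int only.
int squareOfThree() { return Holder<int>{3}.square(); }

// Uncommenting the next line would instantiate square() for std::vector<int>
// and fail to compile:
// int broken() { return vectorHolder.square(); }
```

Conversely, an explicit instantiation definition such as `template struct S<std::vector>;` instantiates all member definitions and would therefore fail to compile for the code above.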


1