Alice Weekly Meeting: Software for Hardware Accelerators / PDP-SRC

-

-

10:00

→

10:20

Discussion 20mSpeakers: David Rohr (CERN), Giulio Eulisse (CERN)

Color code: (critical, news during the meeting: green, news from this week: blue, news from last week: purple, no news: black)

High priority Framework issues:

- Start / Stop / Start: 2 problems on O2 side left:

-

- All processes are crashing randomly (usually ~2 out of >10k) when restarting. Stack trace hints to FMQ. https://its.cern.ch/jira/browse/O2-4639

- TPC ITS matching QC crashing accessing CCDB objects. Not clear if same problem as above, or a problem in the task itself:

- All processes are crashing randomly (usually ~2 out of >10k) when restarting. Stack trace hints to FMQ. https://its.cern.ch/jira/browse/O2-4639

-

- Stabilize calibration / fix EoS: New scheme: https://its.cern.ch/jira/browse/O2-4308: Reported 2 issues to GIulio, waiting for a fix. Status?

- Fix problem with ccdb-populator: no idea yet - since Ole left, someone else will have to take care.

- TF-status message (from https://github.com/AliceO2Group/AliceO2/pull/13495) sent by readout-proxy. Status?

Sync reconstruction

- Waiting for RC to test COSMIC replay data set.

- Waiting for RC to test STOP timeout impact.

Async reconstruction

- Remaining oscilation problem: GPUs get sometimes stalled for a long time up to 2 minutes. Checking 2 things:

- does the situation get better without GPU monitoring? --> Inconclusive

- We can use increased GPU processes priority as a mitigation, but doesn't fully fix the issue.

- ḾI100 GPU stuck problem will only be addressed after AMD has fixed the operation with the latest official ROCm stack.

- Limiting factor for pp workflow is now the TPC time series, which is to slow and creates backpressure (costs ~20% performance on EPNs). Enabled multi-threading as recommended by Matthias - need to check if it works.

EPN major topics:

- ALMA9 / ROCm 6.x deployment on hold, until AMD manages to fix the random server reboots or we find a workaround.

- Fast movement of nodes between async / online without EPN expert intervention.

- 2 goals I would like to set for the final solution:

- It should not be needed to stop the SLURM schedulers when moving nodes, there should be no limitation for ongoing runs at P2 and ongoing async jobs.

- We must not lose which nodes are marked as bad while moving.

- 2 goals I would like to set for the final solution:

- Interface to change SHM memory sizes when no run is ongoing. Otherwise we cannot tune the workflow for both Pb-Pb and pp: https://alice.its.cern.ch/jira/browse/EPN-250

- Lubos to provide interface to querry current EPN SHM settings - ETA July 2023, Status?

- Improve DataDistribution file replay performance, currently cannot do faster than 0.8 Hz, cannot test MI100 EPN in Pb-Pb at nominal rate, and cannot test pp workflow for 100 EPNs in FST since DD injects TFs too slowly. https://alice.its.cern.ch/jira/browse/EPN-244 NO ETA

- DataDistribution distributes data round-robin in absense of backpressure, but it would be better to do it based on buffer utilization, and give more data to MI100 nodes. Now, we are driving the MI50 nodes at 100% capacity with backpressure, and then only backpressured TFs go on MI100 nodes. This increases the memory pressure on the MI50 nodes, which is anyway a critical point. https://alice.its.cern.ch/jira/browse/EPN-397

- TfBuilders should stop in ERROR when they lose connection.

- Allow epn user and grid user to set nice level of processes: https://its.cern.ch/jira/browse/EPN-349

Other EPN topics:

- Check NUMA balancing after SHM allocation, sometimes nodes are unbalanced and slow: https://alice.its.cern.ch/jira/browse/EPN-245

- Fix problem with SetProperties string > 1024/1536 bytes: https://alice.its.cern.ch/jira/browse/EPN-134 and https://github.com/FairRootGroup/DDS/issues/440

- After software installation, check whether it succeeded on all online nodes (https://alice.its.cern.ch/jira/browse/EPN-155) and consolidate software deployment scripts in general.

- Improve InfoLogger messages when environment creation fails due to too few EPNs / calib nodes available, ideally report a proper error directly in the ECS GUI: https://alice.its.cern.ch/jira/browse/EPN-65

- Create user for epn2eos experts for debugging: https://alice.its.cern.ch/jira/browse/EPN-383

- EPNs sometimes get in a bad state, with CPU stuck, probably due to AMD driver. To be investigated and reported to AMD.

- Understand different time stamps: https://its.cern.ch/jira/browse/EPN-487

AliECS related topics:

- Extra env var field still not multi-line by default.

GPU ROCm / compiler topics:

- AMD Status: Random server reboots are more rare with ROCm 6.3.1, but still happening. No improvement on the other issues. Send again a detailed list of issues to AMD, and will have a meeting on Thursday to discuss how to progress. From the 2 AMD engineers working for us, 1 is in parental leave and 1 retired, so currently no one available to debug. List of issues ordered by severity:

- Random server reboots on MI100: Tried several workarounds, but no solution found so far. Giada spotted some weird FairMQ problems in the large scale test, which could probably be due to some memory corruption happening.

- Random crashes on MI100 due to memory error, can be worked around by serializing all kernel and DMA transfers, which has 20% performance degradation.

- Miscompilation leading to crashes, worked around by changing our code, but compiler bug still there.

- Provide an RPM ROCm version with all fixes, so that we don't need to compile clang manually with custom patches.

- Proper way to enable amdgpu-function-calls instead of hacking AMD scripts and binaries.

- hipHostRegister has become very slow when more than 1 GPU visible (via ROCR_VISIBLE_DEVICES).

- Try to find a better solution for the problem with __device__ inline functions leaking symbols in the host code.

TPC GPU Processing

- WIP: Use alignas() or find a better solution to fix alignment of monte carlo labels: https://its.cern.ch/jira/browse/O2-5314

- Waiting for TPC to fix bogus TPC transformations for good, then we can revert the workaround.

- Waiting for TPC to check PR which uses full cluster including average charge and occupancy map errors during seeding.

- Added protection that system headers cannot be included in GPU device code, which was the cause for the RTC compilation failures in November not seen in the CI.

- Added option requested by TPC to reject clusters for dEdx based on cluster flag mask.

- With POCL trunk and Clang 19, for the first time managed to run our OpenCL 2 code. After some fixes, full slice tracking with OpenCL2 was working with exact same results as OpenCL1 / CPU, thus OpenCL 2 now superseds OpenCL 1 code.

- With OpenCL 1 no longer needed, and I don't do any more tests with AliRoot in the last year, started a larger cleanup campaign:

- Removed all OpenCL 1 code, and merged OpenCL common and OpenCL2 into one OpenCL library.

- Removed all code related to AliRoot.

- Still ongoing to add a feature to the standalone benchmark to create new default calib objects if the format changes. This will allow to keep using Run 2 data with the Standalone benchmark if they are without distortions.

- Still ongoing to add a feature to the standalone benchmark to create new default calib objects if the format changes. This will allow to keep using Run 2 data with the Standalone benchmark if they are without distortions.

- Removed all workarounds we had to run with ROOT 5, for compilers that do not support C++11, and for missing C++17 support.

- Unfortunately, OpenCL for C++ 2021 is still at C++17, so we cannot yet have full C++20 support.

- Unfortunately, OpenCL for C++ 2021 is still at C++17, so we cannot yet have full C++20 support.

- In the process of removing several code paths that are obsolete now, since they were used only in legacy code (e.g. OpenCL1 could not run all kernels on CPU, AliRoot needed slice data output between sector tracing and merging).

- Removed all OpenCL 1 code, and merged OpenCL common and OpenCL2 into one OpenCL library.

- Pending OpenCL2 issues:

- printf not working due to confirmed bug in clang, fix is being prepared. Prevents further debugging for now.

- GPU MemClean not working in TPC clusterization, need to debug.

- Crash in merger, which can be worked around by disabling clang SPIRV optimization. Probably bug in clang, but need to fix printf first to debug.

- Also with optimization disabled, crashing later in TPC merging, need printf to debug.

- printf not working due to confirmed bug in clang, fix is being prepared. Prevents further debugging for now.

- Next high priority topic: Improvements for cluster sharing and cluster attachment at lower TPC pad rows.

TPC processing performance regression:

- Final solution: merging transformation maps on the fly into a single flat object: Still WIP

General GPU Processing

- Start / Stop / Start: 2 problems on O2 side left:

-

10:20

→

10:25

Following up JIRA tickets 5mSpeaker: Ernst Hellbar (CERN)

Low-priority framework issues https://its.cern.ch/jira/browse/O2-5226

- Grafana metrics: Might want to introduce additional rate metrics that subtract the header overhead to have the pure payload: low priority.

- Merged workflow fails if outputs defined after being used as input

- needs to be implemented by Giulio

- Cannot override options for individual processors in a workflow

- requires development by Giulio first

- Problem with 2 devices of the same name

- already discussed a bit with Giulio, but still looking for the right place, time, condition to check for duplicate processor names

- check for duplicate processor names done twice

- once at the very beginning, starting with an empty workflow and building it up step by step

- a second time at the start of each task, but comparing the processor names against the full workflow

- these will always be duplicates

- Usage of valgrind in external terminal: The testcase is currently causing a segfault, which is an unrelated problem and must be fixed first. Reproduced and investigated by Giulio.

- Run getting stuck when too many TFs are in flight.

- Do not use string comparisons to derrive processor type, since DeviceSpec.name is user-defined.

- Support in DPL GUI to send individual START and STOP commands.

- Add additional check on DPL level, to make sure firstOrbit received from all detectors is identical, when creating the TimeFrame first orbit.

- Implement a proper solution to detect wheter a device is firstInChain

- Deploy topology with DPL driver

PDP-SRC issues

- Check if we can remove dependencies on

/home/epn/odc/filesin DPL workflows to remove the dependency on the NFS- reading / writing already disabled

- remaining checks for file existence?

- check after Pb-Pb by removing files and find remaining dependencies

logWatcher.shandlogFetcherscripts modified by EPN to remove dependencies onepnloguser- node access privileges fully determined by e-groups

- new

log_accessrole to allow access inlogWatchermode to retrieve log files, e.g. for on-call shifters - to be validated on STG

- waiting for EPN for further feedback and modifications of the test setup

- Promote critical DPL Errors to ILG Ops level

- new "critical" severity in FairLogger

- "critical" DPL log messages to be mapped to ILG severity Error and level 1 (Ops)

- https://github.com/AliceO2Group/AliceO2/pull/13957

- requires bump of FairRoot and modification and bump of InfoLogger

- https://github.com/alisw/alidist/pull/5763

- jump from FairLogger v1.11.1 to v2.1.0

- https://github.com/AliceO2Group/InfoLogger/pull/95

- struct member type changed from string to string_view, used in InfoLogger

- to be changed in InfoLogger to match the type in FairLogger

- https://github.com/alisw/alidist/pull/5763

- promote known and documented messages to "critical" respectively ILG Error + Ops level if they require action from the shifter

- draft: https://github.com/AliceO2Group/AliceO2/pull/13930

- stderr messages

- "(core dumped)"

- todo

- see if there are other critical stderr messages

- promote documented messages

- check content of Fatal and Critical messages, make sure they are appropriate for shifters, eventually putting extra information in an Error message right in front

-

10:25

→

10:30

TPC ML Clustering 5mSpeaker: Christian Sonnabend (CERN, Heidelberg University (DE))

Developments since last week

- Working on realistic physics simulations with adapted FST, switiching lowneut=false for faster sim and cleaner environment

-

SIMOPTKEY = G4.physicsmode=3;SimCutParams.lowneut=false;TPCEleParam.doCommonModePerPad=0;TPCEleParam.doIonTailPerPad=1;TPCEleParam.commonModeCoupling=0;TPCEleParam.doNoiseEmptyPads=1;TPCEleParam.doSaturationTail=0;TPCDetParam.TPCRecoWindowSim=10;

- DIGITOPTKEY = TPCEleParam.doCommonModePerPad=0;TPCEleParam.doIonTailPerPad=1;TPCEleParam.commonModeCoupling=0;TPCEleParam.doNoiseEmptyPads=1;TPCEleParam.doSaturationTail=0;TPCDetParam.TPCRecoWindowSim=10;

-

- Added QA for ITS-TPC matching

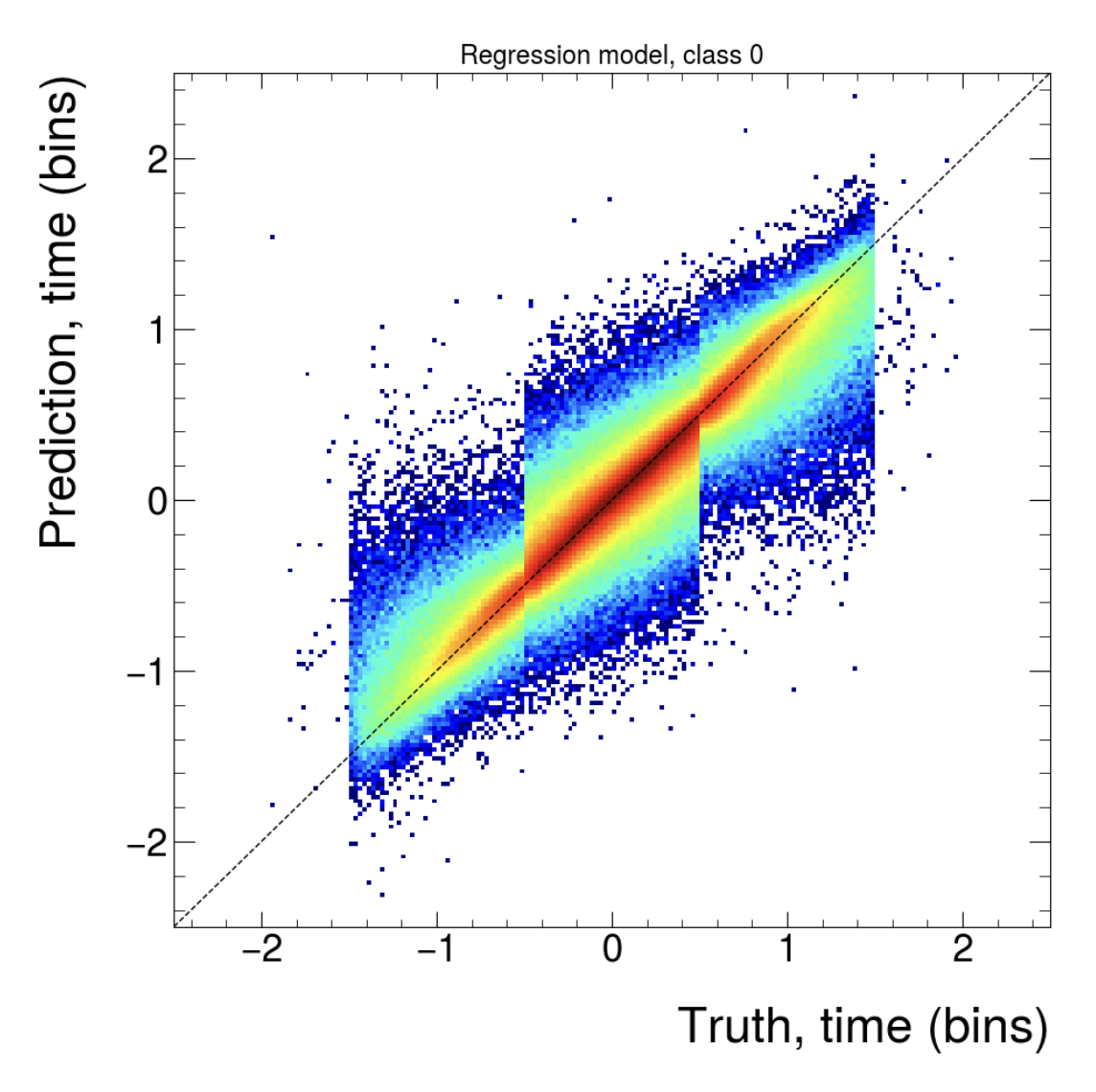

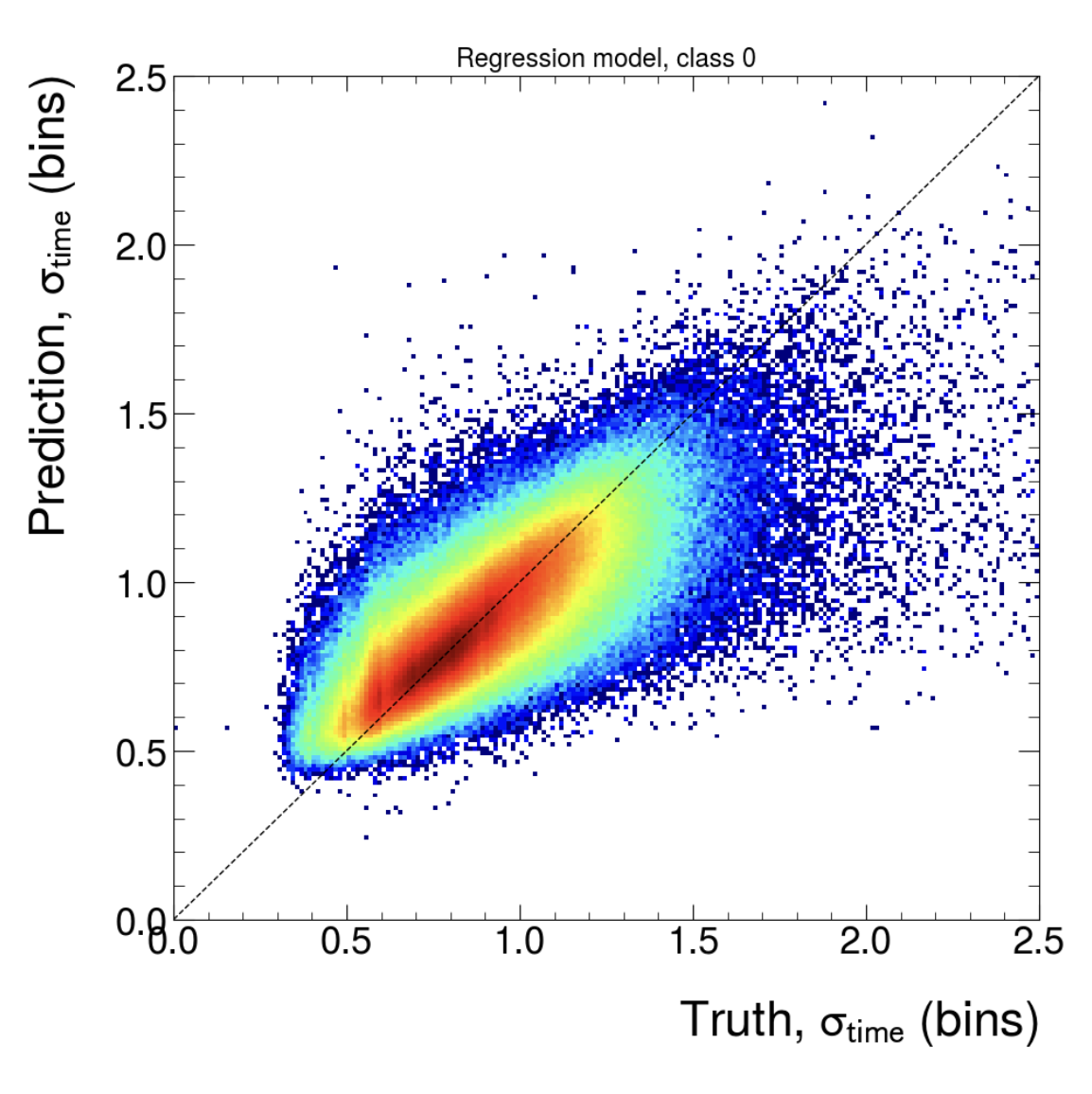

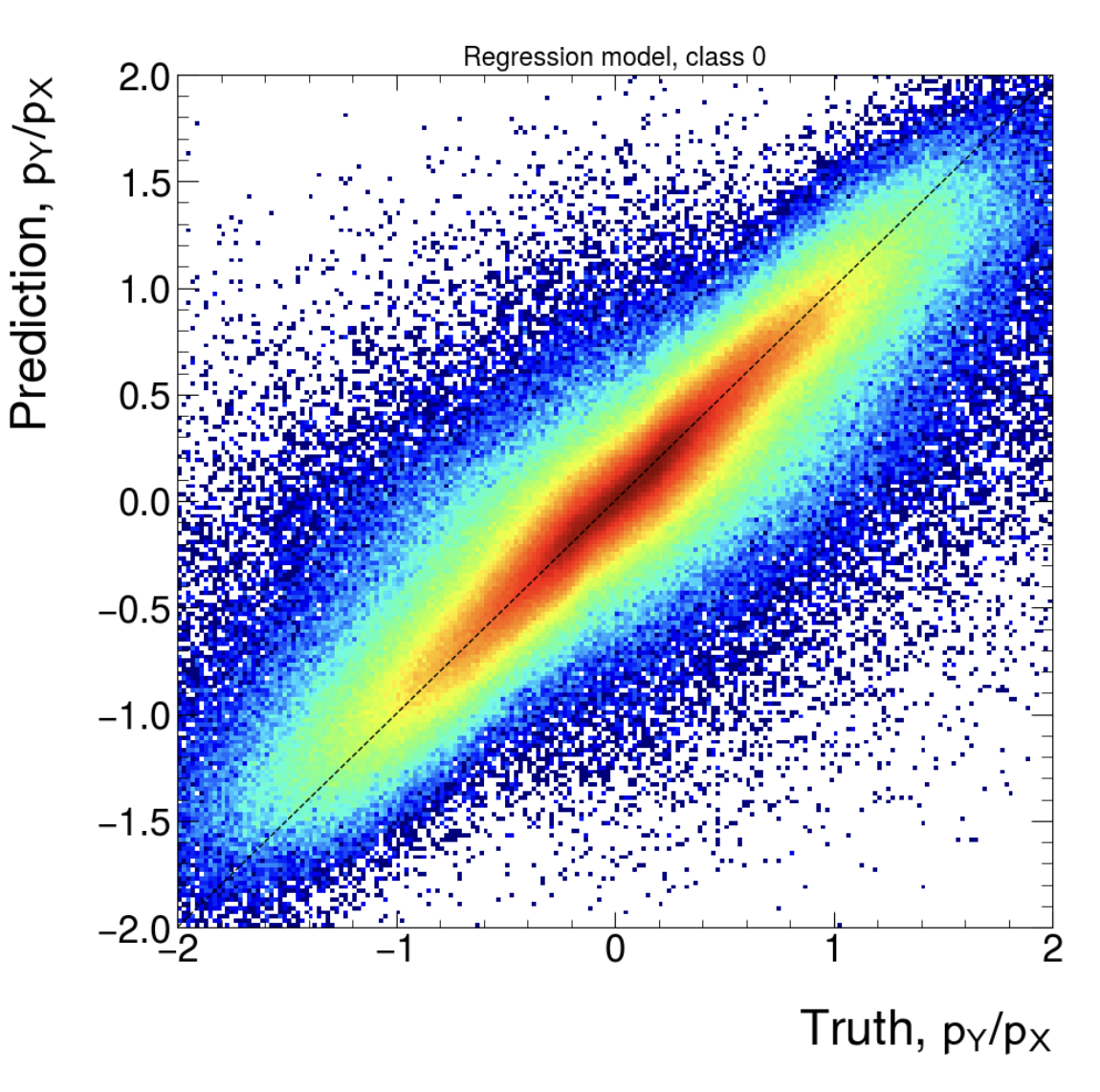

- Added post-training QA after NN training is finished (on training data)

- Tried several different network architectures. Both classification and regression could not majorly benefit from change, fully connected still seems to be the best option so far.

- Worked on fitting class2 regression -> Limited by training data availability: 5 x 50 Ev. @ 50 kHz = ~1700 training data points

- Optimizing training data selection

Post training QA

(Regression, class 1, input size (577))

(Classification, class 1. First: Input size (177), Second: Input size (777))

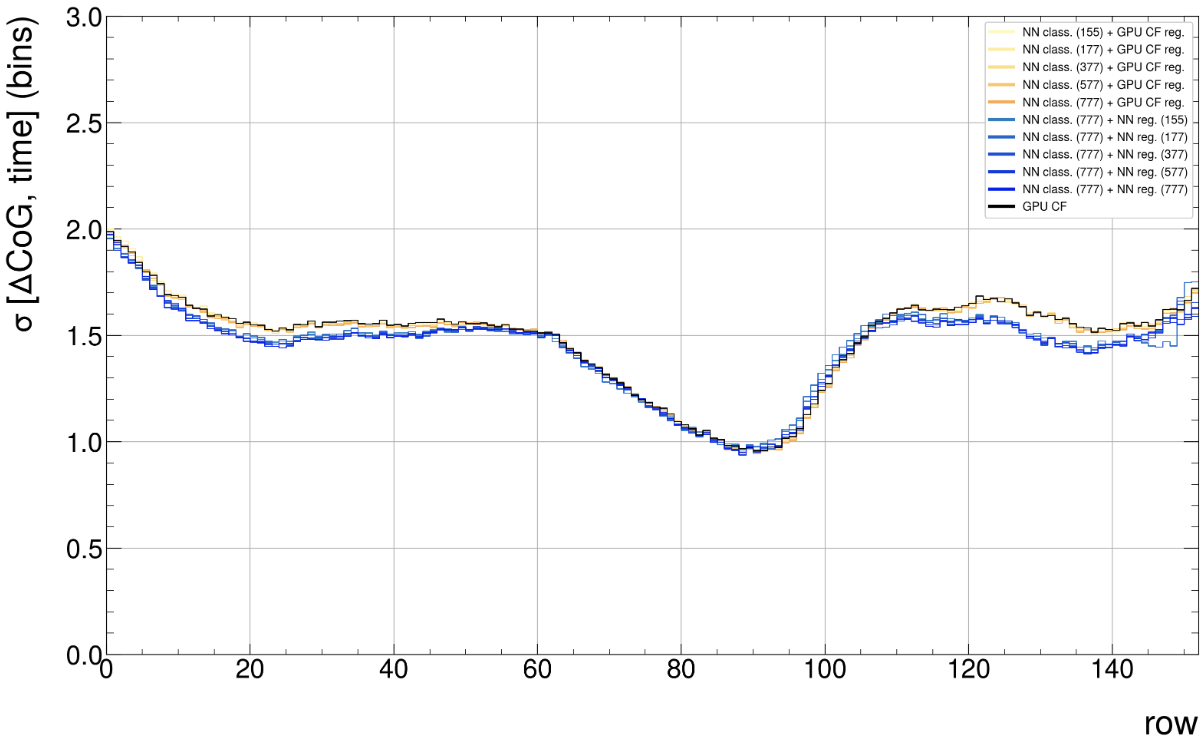

ITS-TPC matching

(GPU CF: 30.4 mio. clusters, 266.4k tracks; NN: 27.0 mio. clusters, 254.4k tracks)

Interesting effect

We know the network learns a slightly better time-mean position of the clusters and produces a more gaussian shaped width distribution. This seems to result in smaller delta(cog time) cluster-to-track residuals, even though the network was not trained to optimize this (yet)

- Working on realistic physics simulations with adapted FST, switiching lowneut=false for faster sim and cleaner environment

-

10:30

→

10:35

ITS Tracking 5mSpeaker: Matteo Concas (CERN)

ITS GPU tracking

- General priorities:

- Focusing on porting all of what is possible on the device, extending the state of the art, and minimising computing on the host.

- Tracking fully ported on GPU.

- Moving vertexing routines to the externally managed memory system. -> WIP

- Focusing on porting all of what is possible on the device, extending the state of the art, and minimising computing on the host.

- Next required developments:

- Thrust allocator with external memory management -> possibly the most critical missing piece, to find a decent way of introducing it.

- Asynchronous parallelisation in the tracklet finding, i.e. Multi-streaming for obvious parallelisations.

- Optimizations:

- intelligent scheduling and multi-streaming can happen right after.

- Kernel-level optimisations to be investigated.

TODO:-

- Reproducer for HIP bug on multi-threaded track fitting: no follow-up yet.

- Fix possible execution issues and known discrepancies when using

gpu-reco-workflow: no progress.

DCAFitterGPU

- Deterministic approach via using

SMatrixGPUon the host, under particular configuration: no progress.

- General priorities:

-

10:35

→

10:45

TPC Track Model Decoding on GPU 10mSpeaker: Gabriele Cimador (Universita e INFN Trieste (IT))

General summary on GPU param optimisation

Can we optimize parameters individually, and which parameters do we have to optimize globally?

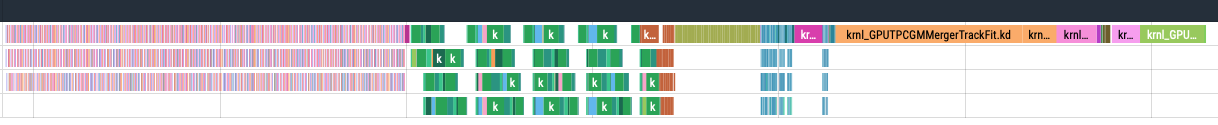

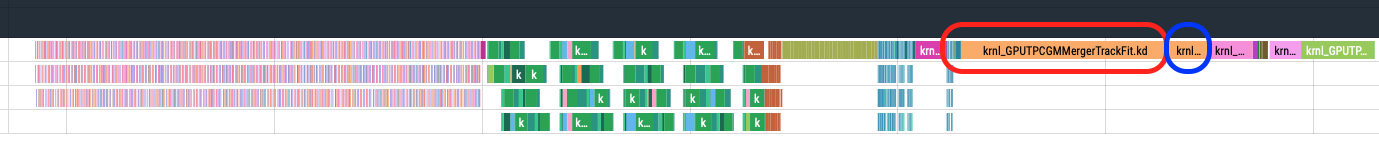

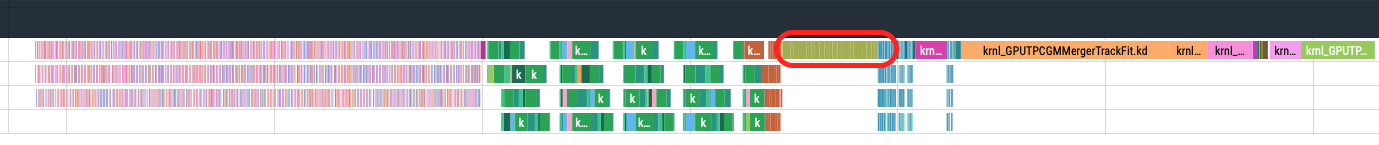

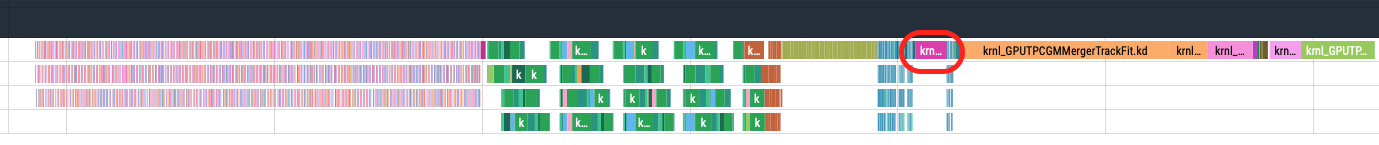

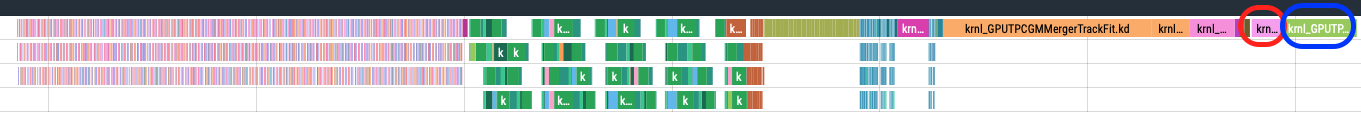

Image below is the GPU sync TPC processing chain. Each colored box is a GPU kernel, time flows in this direction -->.

Drawn following conclusions:

- Compression and decompression steps: these steps contain kernels which do not execute concurrently. Parameters are independent and can be optimised separately.

- Clusterizer step: small concurrent kernels, dependent parameters, need global optimisation.

-

TrackingSlices step: medium concurrent kernels, dependent parameters, need global optimisation.

- Merger step: mix of medium/long single stream kernels and small concurrent kernels. Some parameters can be optimisied individually while concurrent kernels require global opt.

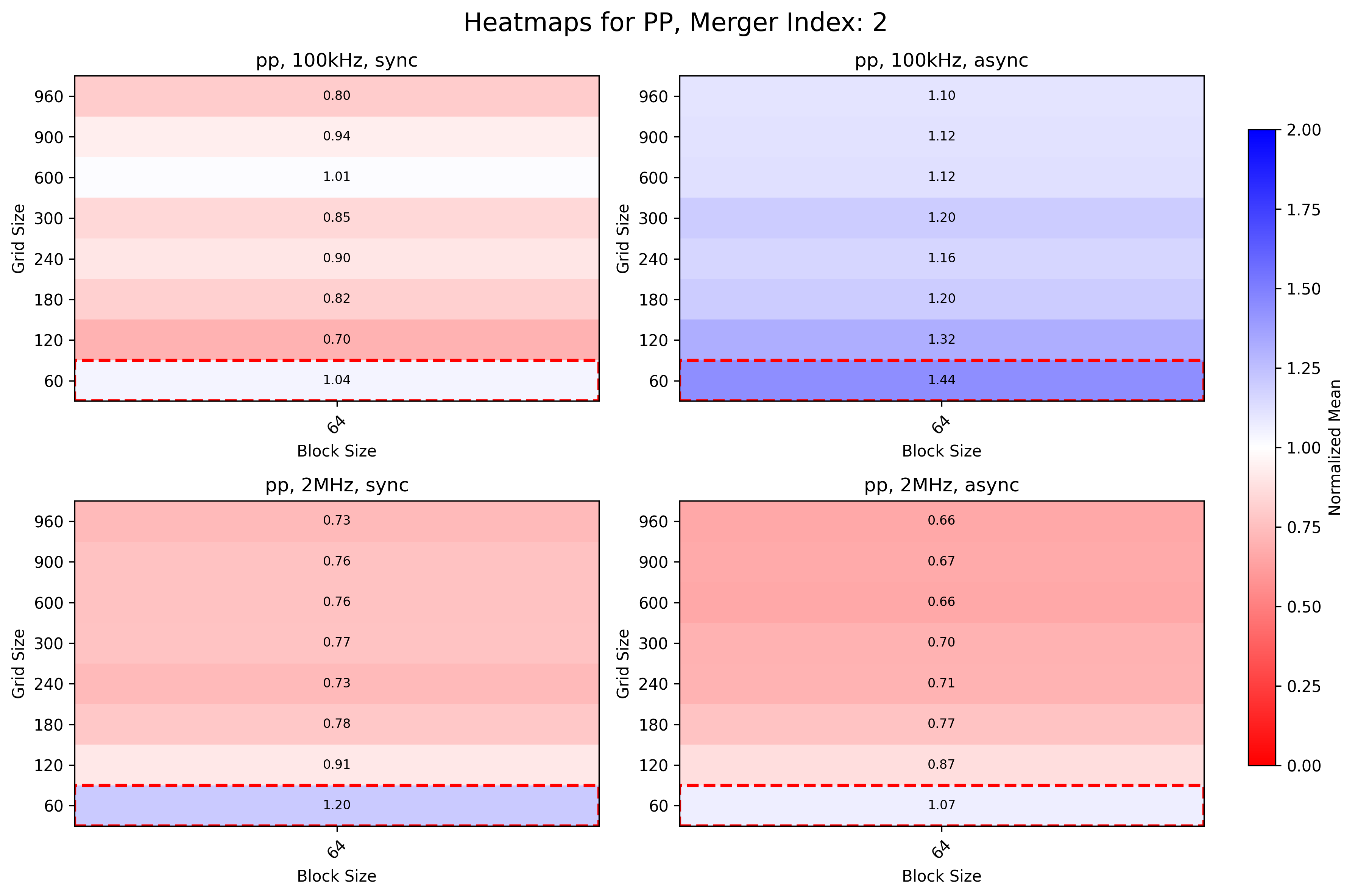

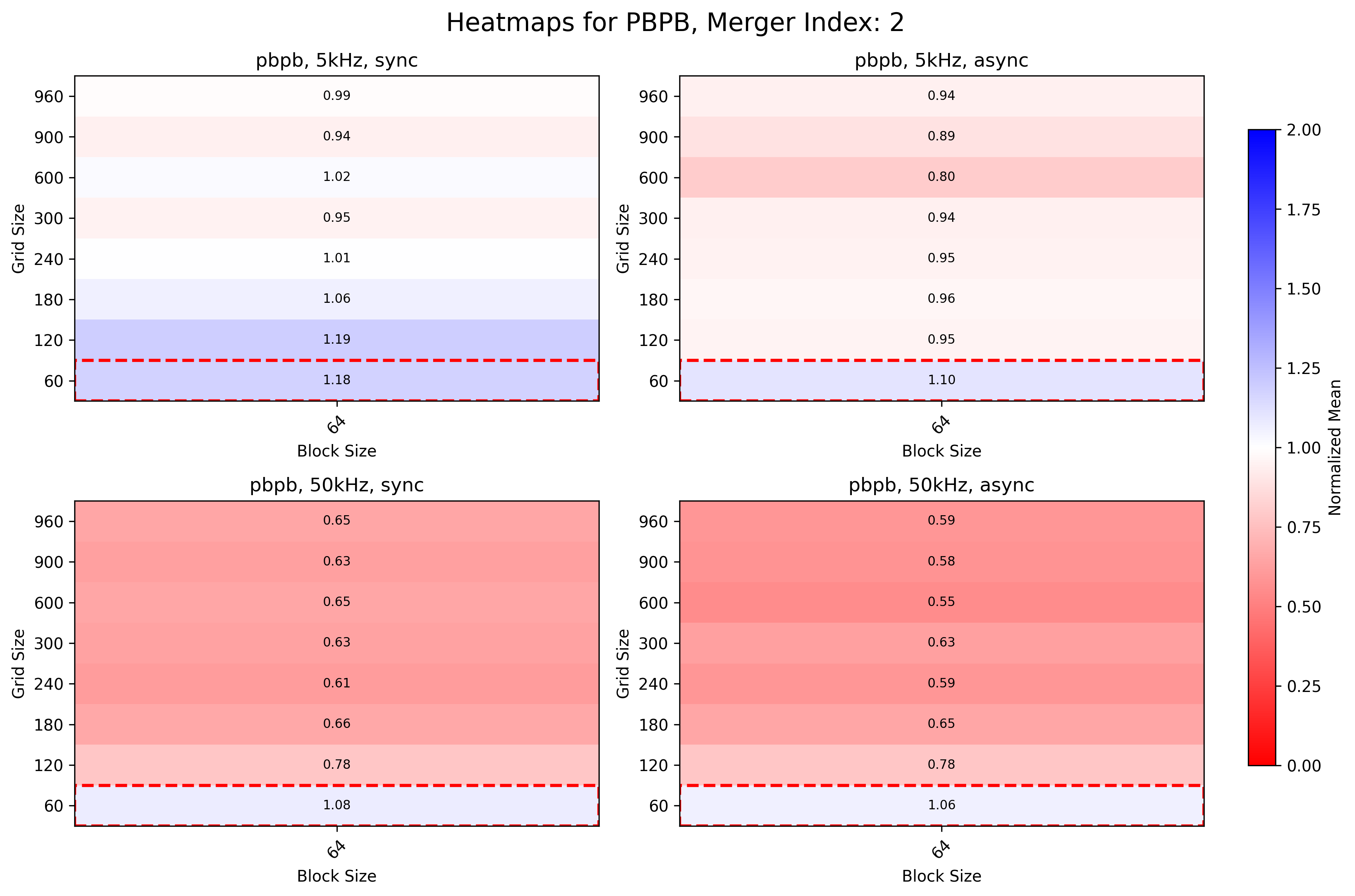

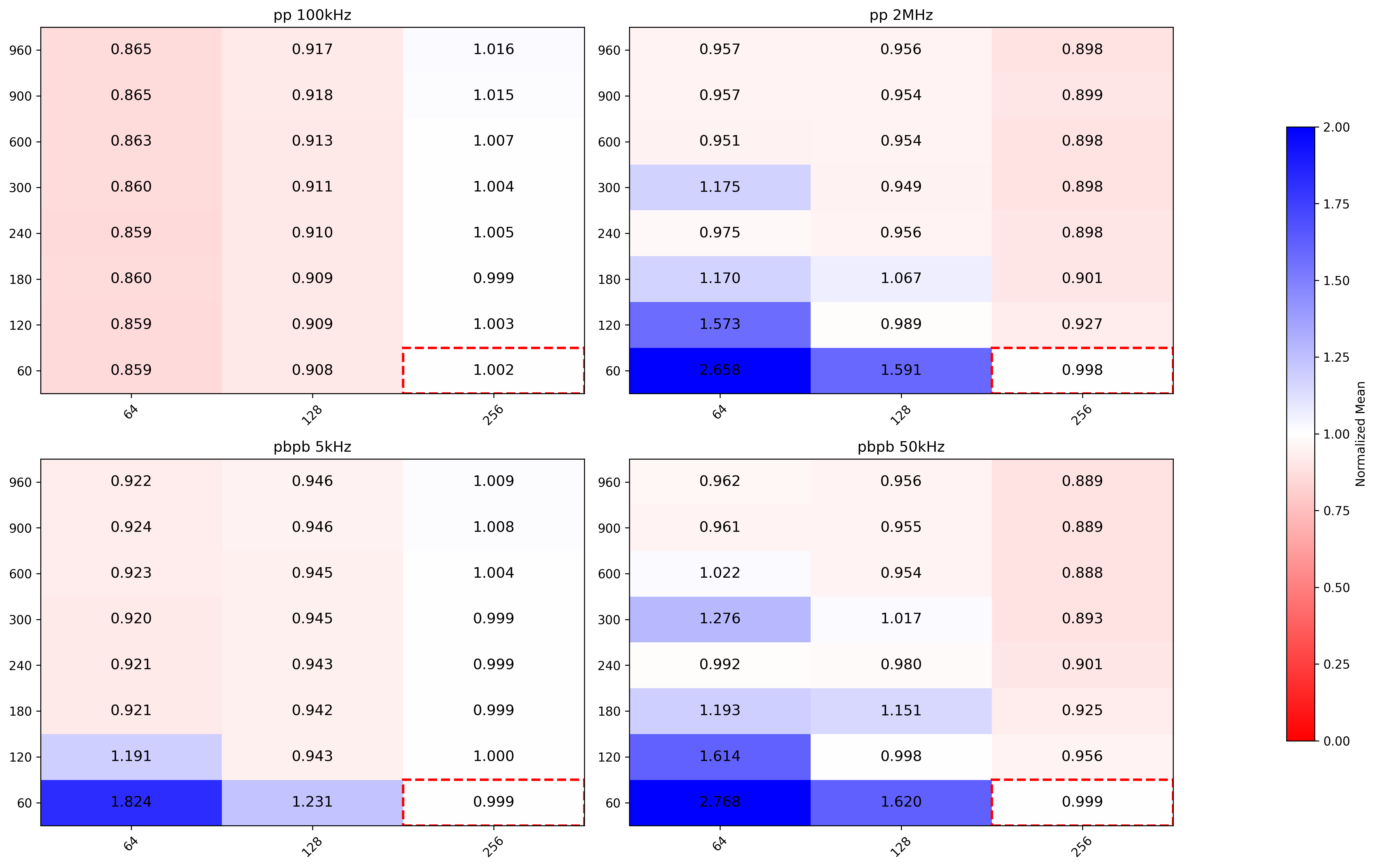

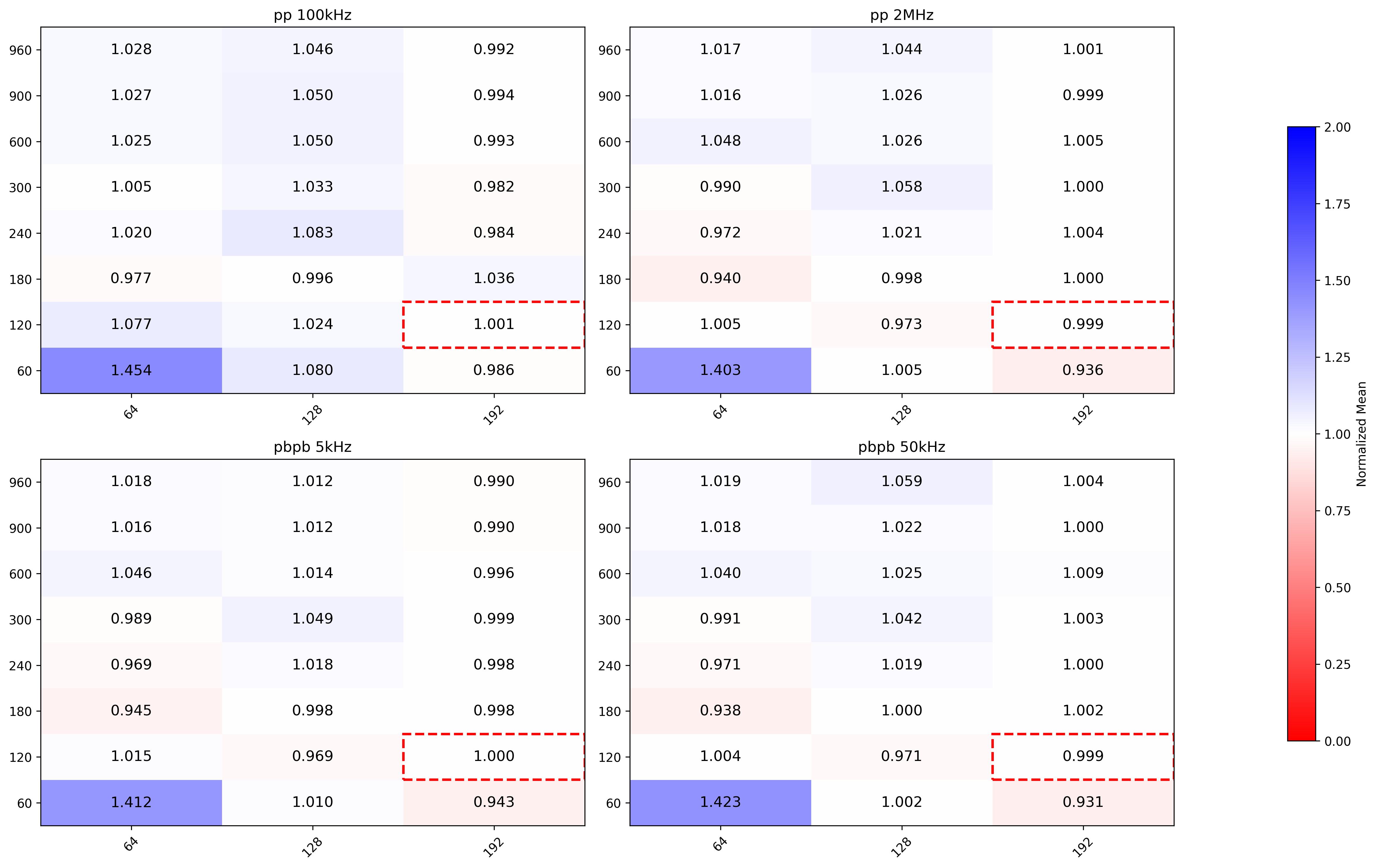

Are the optimal parameters the same for different input data pp vs PbPb and low vs high IR?

Measured on Alma 9.4, ROCm 6.3.1, MI50 GPU. Tested four different configurations: pp 100kHz, pp 2MHz, PbPb 5kHz and PbPb 50kHz. Simulated TFs with 128 lhc orbits.

Independent params optimisation

- Grid search approach. Block size is multiple of warp size (64 for AMD EPN GPUs), Grid size is multiple of number of Streaming Multiprocessors (Compute Units in AMD jargon).

- Each indepedent kernel has a custom search space, and can be studied separately from the others

- Created automated measurements routine, capable of executing multiple grid searches on different independent kernels

-

Executed grid search for the following kernels:

-

MergerTrackFit

-

MergerFollowLoopers

-

MergerSliceRefit

-

MMergerCollect

-

CompressionKernels_step0attached

-

CompressionKernels_step1unattached

-

MergerTrackFit

Executed two times (Merger 1 and Merger 2)

pp

Merger 1

- Low IR same performance as normal configuration (grid size dependent on number of tracks)

- High IR same as low IR, except for (64,240) where it also has the same performance as normal

Merger 2

- Low and High IR sync benefits from bigger grid sizes

- High IR async is 34% faster with higher grid sizes than current configuration for async

PbPb

Merger 1

- Larger grid sizes almost reaches current configuration (grid_size * block_size >= n_tracks)

Merger 2

- Low IR can be 10% faster with bigger grid sizes

- High IR is 40% faster with bigger grid sizes

MergerSliceRefit

Kernel is executed 36 times (once per TPC sector).

- pp low IR benefits from lower block sizes

- pp high IR benefits from larger grid and block sizes

- PbPb low IR better with lower block sizes

- PbPb high IR better with larger grid and block sizes

MergerCollect

pp

Overall best performance given by (64, 960), while current configuration is (512,60).

PbPb

Roughly same as pp

MergerFollowLoopers

Best configuration uses 900 or 960 as grid size. Current configuration is (256,200).

Compression kernels

Step 0 attached clusters

No significant improvements when changing grid and block sizes.

Step 1 unattached clusters

No significant improvements when changing grid and block sizes.

After grid search

Create set of best parameters per

beamtype(pp, PbPb) and perIR(100kHz, 2MHz for pp and 5kHz, 50kHz for PbPb). How to choose best configuration:- compute

conf_mean_time - default_conf_mean_time - propagate error (std dev) of the difference and compute 95% confidence interval

- if 0 is in the interval, can not tell with confidence if current configuration is better than the default

- if one or more CIs have upperbound < 0, choose the one with smaller mean (i.e. the best)

Plug in the best parameters for each beamtype / IR configuration and check if there is a noticable improvement in the whole sync / async chain (work in progress).

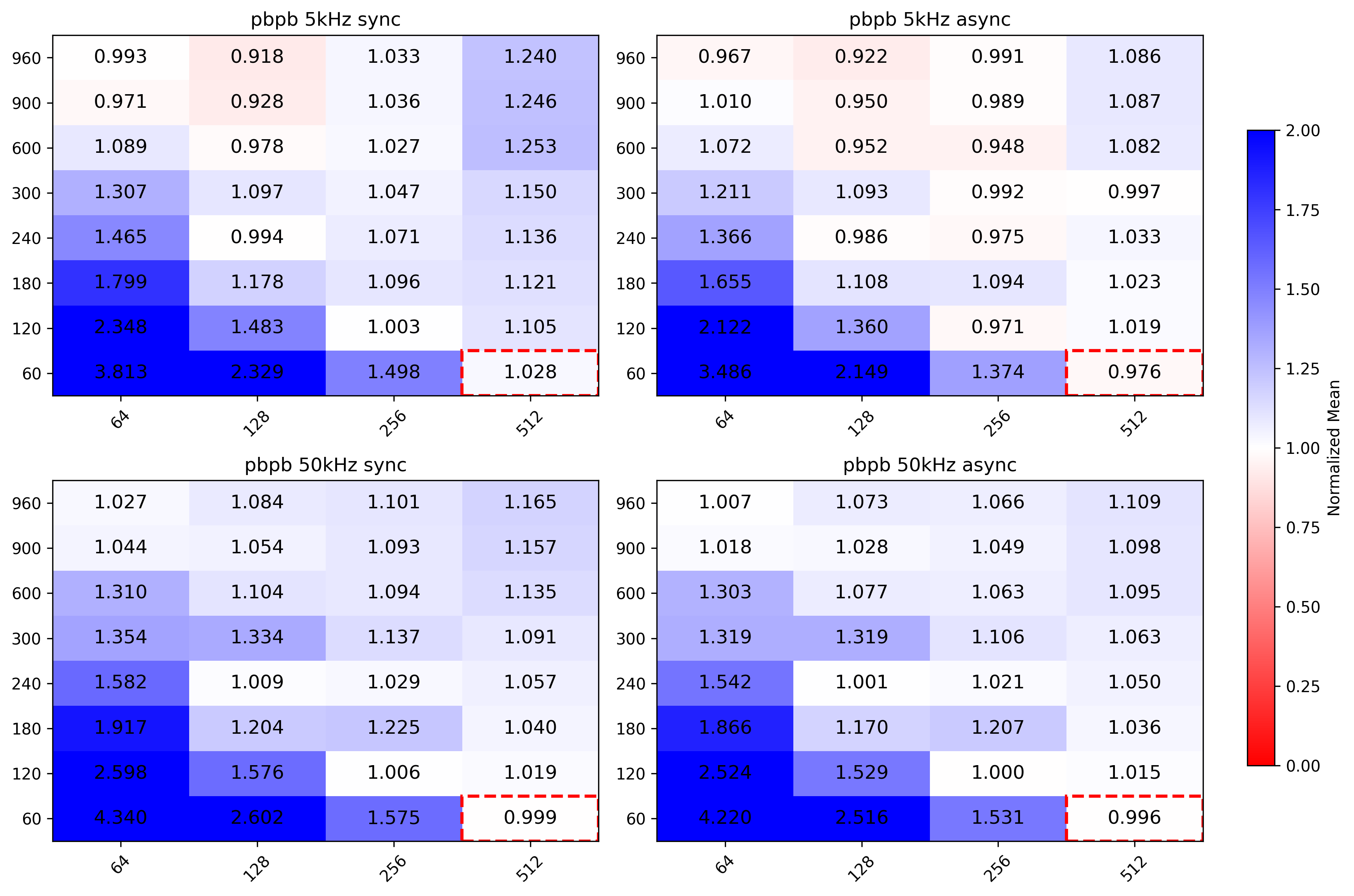

Dependent params optimisation

- More difficult to tackle. Group kernels which run in parallel and optimise this set.

- Identified following kernels which are the longest which are concurrently executed with other kernels:

- CreateSliceData

- GlobalTracking

- TrackletSelector

- NeighboursFinder

- NeighboursCleaner

- TrackletConstructor_singleSlice

- Started with grid search approach on TrackletConstructor_singleSlice. Measured both kernel mean execution time and whole SliceTracking execution time, as chaning parameters may influence the execution time of other kernels and thus on the whole SliceTracking slice.

- Block size is multiple of warp size (64 for AMD EPN GPUs), Grid size is multiple of number of Streaming Multiprocessors (Compute Units in AMD jargon).

- Each indepedent kernel has a custom search space, and can be studied separately from the others.

Possible ideas for post manual optimization

- Isolate the parameters which are dependent, i.e. kernels from the same task which run in parallel (e.g. Clusterizer step, SliceTracking slice)

- Apply known optimization techniques to such kernel groups

- Grid/random search

- Bayesian optimization?

See: F.-J. Willemsen, R. Van Nieuwpoort, and B. Van Werkhoven, “Bayesian Optimization for auto-tuning GPU kernels”, International Workshop on Performance Modeling, Benchmarking and Simulation of High Performance Computer Systems (PMBS) at Supercomputing (SC21), 2021. Available: https://arxiv.org/abs/2111.14991

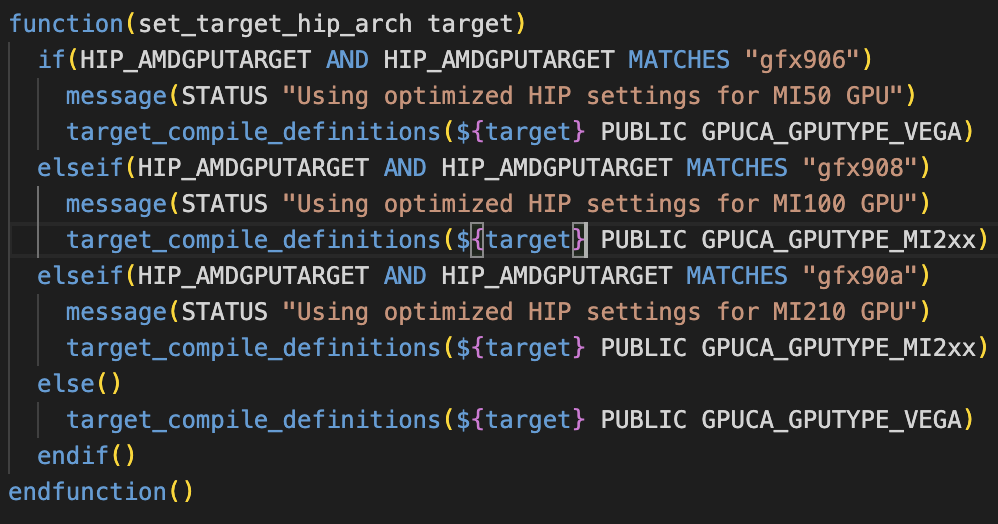

Possible bug spotted

HIP_AMDGPUTARGET set to "default" in GPU/GPUTracking/Standalone/cmake/config.cmake translates in HIP_AMDGPUTARGET=gfx906;gfx908 and forces to use MI50 params

Basically here HIP_AMDGPUTARGET=gfx906;gfx908 enters the first if clause for MI50 even if I am compiling for MI100. Commented set(HIP_AMDGPUTARGET "default") on the config.cmake of the standalone benchmark and forced usage of MI100 parameters via

cmake -DCMAKE_INSTALL_PREFIX=../ -DHIP_AMDGPUTARGET="gfx908" ~/alice/O2/GPU/GPUTracking/Standalone/

Did not investigate further on this.

-

10:45

→

10:55

Efficient Data Structures 10mSpeaker: Dr Oliver Gregor Rietmann (CERN)

Overview

The goal is to develop a C++ library that allows to abstract the data layout of an array. Possible data layouts include aray of struct (AoS) and struct of array (SoA), see the following example.

constexpr std::size_t N = 42;struct Point { int x, y, z; };Point point_aos[N]; // data layout: AoStemplate <std::size_t N>struct PointSoA {int x[N];int y[N];int z[N];};Point<N> point_soa; // data layout: SoAWe aim at writing a class that takes the struct Point, a data layout, and possibly more arguments. The class then allows for AoS access, but stores the data in a possibly different layout, thereby hiding the data layout.

New: Unit Tests using GTest

GTest was integrated into the CMake build and tests were added. We have 8 Tests in total. We test the following underlying containers in AoS and SoA layout:

- std::vector

- std::span

- pre-existing buffer of type char*

- std::pmr::vector

These are the first template parameter F of our wrapper class that is abstracting the data layout. Instances of this class allow an AoS-syntax access via the operator[].

template<

template <class> class F, // container

template <template <class> class> class S, // e.g. "Point"

layout L // data layout

>

struct wrapper;New: CUDA Support

We can now use our wrapper on the host and in the device. See the following example.

#include "wrapper.h"

template <class T>

using pointer_type = T*;

template <

template <class> class F,

template <template <class> class> class S,

wrapper::layout L

>

__global__ void add(int N, wrapper::wrapper<F, S, L> w) {

for (int i = 0; i < N; ++i) w[i].y = w[i].x + w[i].y;

}

struct Point2D { double x, y; };

template <template <class> class F>

struct S {

F<int> x;

F<int> y;

F<Point2D> point;

F<double> identifier;

__host__ __device__ void setX(int x_new) { x = x_new; }

};

void main() {

int N = 8;

wrapper::wrapper<pointer_type, S, wrapper::layout::aos> w; // change for soa

cudaMallocManaged(&w.data, N * sizeof(S<wrapper::value>)); // change for soa

for (int i = 0; i < N; ++i) {

S<wrapper::reference> r = w[i];

r.setX(1);

r.y = 2;

r.point = {0.5 * i, 0.5 * i};

r.identifier = 0.1 * i;

}

add<<<1, 1>>>(N, w);

cudaDeviceSynchronize();

cudaFree(w.data); // change for soa

}Remarks

- Only three lines change if we use SoA instead of Aos, but they can be outsourced to factory function

- The kernel "add" can deduce the template parameters of wrapper

- In particular, the kernel does not depend on the container nor on the data layout

- Some CUDA C++20 features were not working properly, thus had to roll back to C++17.

New: Proposal for Summer Student Project

We have submitted a proposal for a summer student project. The project is about benchmarking different data layouts.

-

10:00

→

10:20