Alice Weekly Meeting: Software for Hardware Accelerators / PDP-SRC

10:00 → 10:20 Discussion (20m)

Speakers: David Rohr (CERN), Giulio Eulisse (CERN)

Color code: (critical, news during the meeting: green, news from this week: blue, news from last week: purple, no news: black)

High priority Framework issues:

- Start / Stop / Start: 2 problems on O2 side left:


- All processes are crashing randomly (usually ~2 out of >10k) when restarting. Stack trace hints to FMQ. https://its.cern.ch/jira/browse/O2-4639

- TPC-ITS matching QC crashes when accessing CCDB objects. Not clear if this is the same problem as above or a problem in the task itself.


- Stabilize calibration / fix EoS: New scheme: https://its.cern.ch/jira/browse/O2-4308:

- O2 with new EndOfStream deployed at P2. Working so far, but not tested in detail. Also spotted one minor problem: processes that hit the stop-transition timeout during STOP apparently segfault during SHUTDOWN.

- Still need to test that it keeps running the calibration after the data processing timeout.

- PR with InfoLogger improvements still WIP.

- Fix problem with ccdb-populator: no idea yet. Since Ole left, someone else will have to take care of it.

- TF-status message (from https://github.com/AliceO2Group/AliceO2/pull/13495) sent by readout-proxy. Status?

- Bumping plenty of packages and dependencies (particularly protobuf, GRPC) and finally bump ONNX:

- Needs new tag of control (FLP team has to check Giulio's PR and tag).

- Need to fix mesos for the new protobuf.

- Have to update the GPU build container in order to build ONNX inside (e.g. hipblas is missing). @Christian: could you send me a list of all packages needed for CUDA and ROCm/HIP to build ONNX Runtime (ORT)?

Sync reconstruction

- Waiting for RC to test COSMIC replay data set.

- Waiting for RC to test STOP timeout impact.

- EPN2EOS problem fixed by Lubos. EL9 was behaving differently than EL8.

- New SW version deployed at P2: new EndOfStream, fix for CCDB bug affecting TPC, automatic MI100 workaround.

Async reconstruction

- Remaining oscillation problem: GPUs sometimes stall for a long time, up to 2 minutes. Checking 2 things:

- Does the situation improve without GPU monitoring? --> Inconclusive.

- Increasing the priority of the GPU processes works as a mitigation, but doesn't fully fix the issue.

- MI100 GPU stuck problem will only be addressed after AMD has fixed the operation with the latest official ROCm stack.

- MI100 tests on async looked OK, though it was only ~15 jobs. All of them went through without a long GPU stall. There were, however, short GPU stalls, which we also see in sync. To be investigated.

- Limiting factor for the pp workflow is now the TPC time series, which is too slow and creates backpressure (costs ~20% performance on EPNs). Enabled multi-threading as recommended by Matthias; need to check if it works.

AliECS related topics:

- Extra env var field still not multi-line by default. Created https://its.cern.ch/jira/browse/OGUI-1624 to follow this up separately from other tickets.

- FLP working on this, but not yet deployed.

GPU ROCm / compiler topics:

- List of important issues with AMD:

- Random server reboots on MI100: Tried several workarounds, but no solution found so far. Giada spotted some weird FairMQ problems in the large scale test, which could be due to memory corruption.

- Disappeared with Alma 9.5. AMD might still check with our Alma 9.4 / ROCm 6.2 server to understand the root cause.

- Random crashes on MI100 due to a memory error, can be worked around by serializing all kernels and DMA transfers, at the cost of 20% performance degradation.

- Still there with our latest setup; the only persisting problem. My serialization workaround is now deployed automatically for online.

- AMD proposed a different workaround, disabling the DMA engine. It works around this bug, but introduces another problem: GPUs get stuck. Sent a reproducer to AMD, although it is not important for us. We want a proper fix anyway.

- Debugged in detail what is going on: multiple commands enqueued in a command queue get executed in parallel or in the wrong order, so that data is read before it is written. Clearly a synchronization problem on AMD side.

- AMD provided a bugfix end of last week, which fixes the memory / synchronization error.

- First patch led to spurious messages written to stderr; received a fix today. Currently being tested, but looks good.

- Not clear when we will get official ROCm RPMs with this included, might take a while. I would propose in the meantime we overwrite the installed library in /opt/rocm by the patched one.

- Miscompilation leading to crashes, worked around by changing our code, but compiler bug still there.

- Not appearing any more with ROCm 6.3.2, not clear if fixed, AMD might check the reproducer with the old ROCm.

- Provide an RPM ROCm version with all fixes, so that we don't need to compile clang manually with custom patches.

- No compiler patch or HIP patch necessary any more for ROCm 6.3.2, a big step ahead. Still need a new version with the proper fix for the memory error.

- Proper way to enable amdgpu-function-calls instead of hacking AMD scripts and binaries.

- Can now do everything with CMake HIP language and Clang. Got rid of the hipcc binary, and thus of the hacks to enable function calls.

- hipHostRegister has become very slow when more than 1 GPU visible (via ROCR_VISIBLE_DEVICES).

- Fixed with ROCm 6.3.2

- Discussed with AMD the meaning of a couple of workarounds we are applying. Once we are stable, we should remove some and see if they are still needed.

- HIP_DEVICE_MALLOC workaround could be removed, not needed any more. NO_SCRATCH_RECLAIM workaround also removed; with the new ROCm it was even degrading performance. NO_LOWER_LDS compiler option must remain: it helps amdgpu-function-calls and improves performance.


- Damon from AMD is back working for us, and will look at the GPU memory error next.

- SLC9 GPU container in Jenkins working and RPM publishing fixed. Set up as secondary container for online builds (since we still need the old container for the infra node) and switched to a unified SLC9 container for offline builds (can run on the GRID and on the EPNs with GPU).

- Created GenTopo (topology generation) build for EL9, to be used by EPN once they move their infra nodes to EL9.

- Updated vobox to use the el9 container; reported a missing library to Max, which was fixed. MI50s now working for offline in the new setup, MI100 to be tested.

- Try to find a better solution for the problem with __device__ inline functions leaking symbols in the host code.

- Added some functionality to CAMath class that was needed for BFloat16 for NN clusterizer.

- Can now bump to GCC 14, PR reopened. Suppressed one set of bogus compiler warnings due to GCC regressions. Now there are some other GCC 14 warnings in simulation code, pinged Sandro.

- Improved GPU standalone build, so we can build with sanitizers in optimized mode, and with sanitizers using clang. Revealed a couple of minor issues, which were not affecting the processing. Fixed.

- This was used to understand a long-pending issue with random floating point exceptions (FPEs) when running TPC tracking on CPU. The problem is the compiler's auto-vectorization, which uses only 2 of the 4 SSE lanes, leading to computation on random data in the remaining lanes, which can trigger an FPE. ("Random" means: the compiler even initializes the data to 0, but that eventually leads to the computation of sqrt(-inf).) It seems there is no way to fix this. As a workaround, I'll disable FPE traps by default in the standalone benchmark when compiled with -ffast-math. (In the code I can only check for -ffast-math, not for -ftree-vectorize, which would be the correct check. But checking for -ffast-math might be enough, since it makes the problem much more likely.)
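A minimal sketch of the FPE-trap workaround described in the last item, assuming Linux/glibc: `feenableexcept`, `fedisableexcept` and `fegetexcept` are GNU extensions, and the helper names `configureFpeTraps` and `sqrtOfMinusInfRaisesInvalid` are hypothetical, not the actual standalone-benchmark code. It relies on GCC and Clang predefining `__FAST_MATH__` under -ffast-math; as noted above, there is no equivalent check for -ftree-vectorize.

```cpp
#include <cassert>
#include <cmath>
#include <fenv.h> // feenableexcept / fedisableexcept / fegetexcept are glibc extensions

// GCC and Clang predefine __FAST_MATH__ under -ffast-math. There is no such macro
// for -ftree-vectorize, so this is only a proxy for "padding lanes likely".
#ifdef __FAST_MATH__
constexpr bool kBuiltWithFastMath = true;
#else
constexpr bool kBuiltWithFastMath = false;
#endif

// Shows the root cause in isolation: sqrt(-inf) yields NaN and raises FE_INVALID.
// Call only while traps are disabled, otherwise it raises SIGFPE.
bool sqrtOfMinusInfRaisesInvalid()
{
  volatile double minusInf = -INFINITY; // volatile prevents constant folding
  feclearexcept(FE_ALL_EXCEPT);
  volatile double result = std::sqrt(minusInf);
  (void)result;
  return fetestexcept(FE_INVALID) != 0;
}

// Traps invalid / divide-by-zero / overflow, unless built with -ffast-math,
// where garbage in unused vector lanes could otherwise abort the benchmark.
void configureFpeTraps()
{
  constexpr int kTraps = FE_INVALID | FE_DIVBYZERO | FE_OVERFLOW;
  if (kBuiltWithFastMath) {
    fedisableexcept(kTraps);
  } else {
    feenableexcept(kTraps);
  }
  assert(((fegetexcept() & FE_INVALID) != 0) == !kBuiltWithFastMath);
}
```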

TPC / GPU Processing

- WIP: Use alignas() or find a better solution to fix the alignment of Monte Carlo labels: https://its.cern.ch/jira/browse/O2-5314

- Waiting for TPC to fix bogus TPC transformations for good, then we can revert the workaround.

- Waiting for TPC to check PR which uses full cluster errors including average charge and occupancy map errors during seeding.

- Final solution: merging transformation maps on the fly into a single flat object: Still WIP

- Pending OpenCL2 issues:

- printf not working due to confirmed bug in clang, fix is being prepared. Prevents further debugging for now.

- Crash in merger, which can be worked around by disabling clang SPIRV optimization. Probably bug in clang, but need to fix printf first to debug.

- Also with optimization disabled, crashing later in TPC merging, need printf to debug.

- Felix debugged the OpenCL clusterization problem to be due to off-by-one offset in NoiseSuppression. Need to check how that can happen only in OpenCL.


- Next high priority topic: Improvements for cluster sharing and cluster attachment at lower TPC pad rows.
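The alignas() option from the first item of this list can be illustrated with a minimal sketch. `LabelSketch` and `alignUp` are hypothetical and do not reproduce the actual O2 Monte Carlo label type; the point is the mechanism: alignas raises the type's alignment, the compiler pads sizeof to a multiple of it, and offsets into a shared buffer must be rounded up before an array of such labels is placed there.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical label: 4 + 4 + 2 + 2 = 12 bytes, naturally only 4-byte aligned.
// alignas(8) raises the alignment to 8, so the compiler pads the size to 16 and
// every element of a label array starts on an 8-byte boundary.
struct alignas(8) LabelSketch {
  std::int32_t trackId;
  std::int32_t eventId;
  std::int16_t sourceId;
  std::int16_t flags;
};

static_assert(alignof(LabelSketch) == 8, "alignas(8) raises the alignment");
static_assert(sizeof(LabelSketch) == 16, "size is padded to a multiple of the alignment");

// Rounds an offset in a shared buffer up to the next multiple of `alignment`,
// e.g. before writing an array of labels behind variable-size data.
inline std::size_t alignUp(std::size_t offset, std::size_t alignment)
{
  assert(alignment != 0 && (alignment & (alignment - 1)) == 0); // power of two
  return (offset + alignment - 1) & ~(alignment - 1);
}
```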

Other Topics

- 32 applications in total, 17 rejected by HR, 8 late applications from Monday evening not yet screened by HR. Set up interviews with Ruben and 4 candidates at the beginning of next week, and we already have Felix and Anton as viable candidates, so there are ample candidates.

EPN major topics:

- Fast movement of nodes between async / online without EPN expert intervention.

- 2 goals I would like to set for the final solution:

- It should not be needed to stop the SLURM schedulers when moving nodes, there should be no limitation for ongoing runs at P2 and ongoing async jobs.

- We must not lose which nodes are marked as bad while moving.


- Interface to change SHM memory sizes when no run is ongoing. Otherwise we cannot tune the workflow for both Pb-Pb and pp: https://alice.its.cern.ch/jira/browse/EPN-250

- Lubos to provide interface to query current EPN SHM settings - ETA July 2023, Status?

- Improve DataDistribution file replay performance: currently limited to 0.8 Hz, so we cannot test the MI100 EPN in Pb-Pb at nominal rate, and cannot test the pp workflow for 100 EPNs in FST, since DD injects TFs too slowly. https://alice.its.cern.ch/jira/browse/EPN-244 NO ETA

- DataDistribution distributes data round-robin in the absence of backpressure, but it would be better to distribute based on buffer utilization and give more data to MI100 nodes. Now, we are driving the MI50 nodes at 100% capacity with backpressure, and only the TFs that hit backpressure go to the MI100 nodes. This increases the memory pressure on the MI50 nodes, which is anyway a critical point. https://alice.its.cern.ch/jira/browse/EPN-397

- TfBuilders should stop in ERROR when they lose connection.

- Allow epn user and grid user to set nice level of processes: https://its.cern.ch/jira/browse/EPN-349

- Created new O2PDPSuite with new DDS version.

- New ODC version merged in alidist today. Do you need yet another build?

- Status of infrastructure node update to Alma 9.5?
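The buffer-utilization-based distribution proposed in EPN-397 can be sketched as a scheduler that picks the node with the lowest relative buffer occupancy instead of cycling round-robin. This is a hypothetical sketch (`NodeState`, `pickRoundRobin` and `pickLeastUtilized` are illustrative names), not DataDistribution code, and it ignores how utilization would be reported to the scheduler.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical view of a TfBuilder node as seen by the scheduler.
struct NodeState {
  std::string name;
  double bufferUsed;     // bytes currently occupied in the SHM buffer
  double bufferCapacity; // total SHM buffer size in bytes
  double utilization() const { return bufferUsed / bufferCapacity; }
};

// Current behaviour as described: cycle through nodes regardless of load.
std::size_t pickRoundRobin(const std::vector<NodeState>& nodes, std::size_t& cursor)
{
  assert(!nodes.empty());
  const std::size_t chosen = cursor % nodes.size();
  cursor = chosen + 1;
  return chosen;
}

// Proposed alternative: send the next TF to the node with the lowest relative
// buffer utilization, so nodes that drain their buffers faster (e.g. MI100)
// naturally receive more TFs.
std::size_t pickLeastUtilized(const std::vector<NodeState>& nodes)
{
  assert(!nodes.empty());
  std::size_t best = 0;
  for (std::size_t i = 1; i < nodes.size(); ++i) {
    if (nodes[i].utilization() < nodes[best].utilization()) {
      best = i;
    }
  }
  return best;
}
```

With two MI50 nodes at 90% and 80% occupancy and an MI100 node at 40%, round-robin still hands every third TF to each node, while the utilization-based choice sends the next TF to the MI100 node.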

Other EPN topics:

- Check NUMA balancing after SHM allocation, sometimes nodes are unbalanced and slow: https://alice.its.cern.ch/jira/browse/EPN-245

- Fix problem with SetProperties string > 1024/1536 bytes: https://alice.its.cern.ch/jira/browse/EPN-134 and https://github.com/FairRootGroup/DDS/issues/440

- After software installation, check whether it succeeded on all online nodes (https://alice.its.cern.ch/jira/browse/EPN-155) and consolidate software deployment scripts in general.

- Improve InfoLogger messages when environment creation fails due to too few EPNs / calib nodes available, ideally report a proper error directly in the ECS GUI: https://alice.its.cern.ch/jira/browse/EPN-65

- Create user for epn2eos experts for debugging: https://alice.its.cern.ch/jira/browse/EPN-383

- EPNs sometimes get in a bad state, with CPU stuck, probably due to AMD driver. To be investigated and reported to AMD.

- Understand different time stamps: https://its.cern.ch/jira/browse/EPN-487


10:20 → 10:25 Following up JIRA tickets (5m)

Speaker: Ernst Hellbar (CERN)

10:25 → 10:30 TPC ML Clustering (5m)

Speaker: Christian Sonnabend (CERN, Heidelberg University (DE))

Last week

- O2 PR for NN implementation is through (https://github.com/AliceO2Group/AliceO2/pull/13981)

- alidist PR seems to make good progress, although not building on EPN yet (-> same error as the CI) (https://github.com/alisw/alidist/pull/5793)

- Several new plots for MC efficiencies and fake-rates, K0 mass peaks and dEdx separation (see slides)
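The NN clusterizer work relies on BFloat16 (cf. the CAMath additions listed under the GPU topics). A minimal sketch of float to bfloat16 conversion with round-to-nearest-even, assuming IEEE-754 binary32 floats; the function names are hypothetical and this is not the CAMath implementation. BFloat16 keeps the sign, the full 8-bit exponent and the top 7 mantissa bits of a float, so the conversion amounts to rounding away the lower 16 bits.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

static_assert(sizeof(float) == sizeof(std::uint32_t), "assumes 32-bit IEEE-754 float");

// float -> bfloat16, rounding to nearest, ties to even.
std::uint16_t floatToBFloat16(float value)
{
  std::uint32_t bits;
  std::memcpy(&bits, &value, sizeof(bits));
  if ((bits & 0x7fffffffu) > 0x7f800000u) {
    // NaN: truncate and force the quiet bit so the result cannot turn into infinity.
    return static_cast<std::uint16_t>((bits >> 16) | 0x0040u);
  }
  // Add 0x7fff, plus 1 if the lowest kept bit is set: exact halfway cases round to even.
  const std::uint32_t roundingBias = 0x7fffu + ((bits >> 16) & 1u);
  return static_cast<std::uint16_t>((bits + roundingBias) >> 16);
}

// bfloat16 -> float is exact: the 16 bits become the upper half of a float.
float bFloat16ToFloat(std::uint16_t value)
{
  const std::uint32_t bits = static_cast<std::uint32_t>(value) << 16;
  float result;
  std::memcpy(&result, &bits, sizeof(result));
  return result;
}
```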

10:30 → 10:35 ITS Tracking (5m)

Speaker: Matteo Concas (CERN)

ITS GPU tracking

- General priorities:

- Providing GPU ITS tracking alongside TPC via gpu-reco-workflow in full system test -> WIP

- Focusing on porting all of what is possible on the device, extending the state of the art, and minimising computing on the host.

- Tracking fully ported on GPU.

- Moving vertexing routines to the externally managed memory system. -> WIP

- Currently optimising the o2::its::timeframeGPU initialisation GPU transfers, trying to maximise data reuse and anticipating loads when possible.

- Optimizations:

- Asynchronous parallelisation in the tracklet finding, i.e. Multi-streaming for obvious parallelisations.

- Intelligent scheduling and multi-streaming can happen right after.

- Kernel-level optimisations to be investigated.

TODO:

- Thrust allocator with external memory management -> possibly the most critical missing piece, to find a decent way of introducing it.

- Reproducer for HIP bug on multi-threaded track fitting: no follow-up yet.

- Fix possible execution issues and known discrepancies when using gpu-reco-workflow: no progress.
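For the Thrust allocator TODO, a host-only sketch of the general idea: a bump allocator that hands out chunks of a buffer owned by an external memory manager. Its interface follows the shape of the temporary-storage allocator in Thrust's custom_temporary_allocation example (`value_type` = char, `allocate(num_bytes)`, `deallocate(ptr, n)`), which is passed to an execution policy such as `thrust::cuda::par(alloc)`. The class name, the 256-byte alignment and the rewind-when-empty policy are assumptions for illustration, not the ITS memory system.

```cpp
#include <cassert>
#include <cstddef>
#include <new>

// Hypothetical bump allocator over memory owned by an external memory manager.
// Assumes `base` itself is aligned to kAlignment.
class ExternalArenaAllocator
{
 public:
  using value_type = char;

  ExternalArenaAllocator(char* base, std::size_t capacity) : mBase(base), mCapacity(capacity) {}

  char* allocate(std::ptrdiff_t numBytes)
  {
    assert(numBytes >= 0);
    const std::size_t aligned = (mOffset + kAlignment - 1) & ~(kAlignment - 1);
    if (aligned + static_cast<std::size_t>(numBytes) > mCapacity) {
      throw std::bad_alloc(); // the external buffer is exhausted
    }
    mOffset = aligned + static_cast<std::size_t>(numBytes);
    ++mLive;
    return mBase + aligned;
  }

  // Individual frees are no-ops; the arena is rewound once all chunks are released.
  void deallocate(char* /*ptr*/, std::size_t /*numBytes*/)
  {
    assert(mLive > 0);
    if (--mLive == 0) {
      mOffset = 0;
    }
  }

  std::size_t used() const { return mOffset; }

 private:
  static constexpr std::size_t kAlignment = 256; // assumed alignment for device buffers
  char* mBase;
  std::size_t mCapacity;
  std::size_t mOffset = 0;
  std::size_t mLive = 0;
};
```

Rewinding only when all chunks are released keeps the sketch simple; it assumes allocations are short-lived, as is the case for Thrust temporary storage.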

DCAFitterGPU

- Deterministic approach using SMatrixGPU on the host, under a particular configuration: no progress.


10:35 → 10:45 TPC Track Model Decoding on GPU (10m)

Speaker: Gabriele Cimador (Universita e INFN Torino (TO))

Multi grid search script

- Script for measuring kernel durations using different grid_size / block_size configurations

- Allows for multiple measurements at once

- Limited to kernels defined in GPUDefGPUParameters.h

- It uses the standalone TPC benchmark

How automated is it? 🤖

Pretty much: it is sufficient to specify the kernel name and the search space in the script, and it is ready to go.

What is the input?

Just the dictionary. For the moment the datasets to measure on are fixed: the script loops over four datasets.

What is the output?

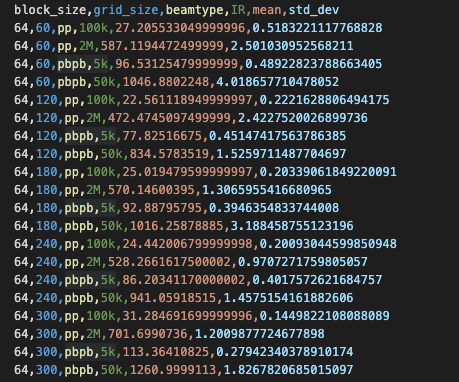

Folder with a csv file for each measured kernel, e.g.

Nice features 👍

- Independent from O2, just compile the standalone benchmark with the desired version

- Efficiently executes multiple grid searches, sampling one point in the search space of each kernel at each measurement

- Duration of the measurements depends on the biggest search space, not on how many kernels are evaluated
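The interleaving behind "one point per kernel per measurement" can be sketched as follows. This is a C++ illustration of the idea, not the script itself; `LaunchConfig`, `SearchSpaces` and `planRounds` are hypothetical names, and kernels whose search space is exhausted are assumed to simply drop out of later rounds.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// One launch configuration of a kernel.
struct LaunchConfig {
  int gridSize;
  int blockSize;
};

// Hypothetical input: kernel name -> list of (grid_size, block_size) points to try.
using SearchSpaces = std::map<std::string, std::vector<LaunchConfig>>;

// In round r, every kernel whose search space still has a point r contributes it.
// One benchmark build + run per round measures all kernels at once, so the number
// of rounds equals the largest search space, not the number of kernels.
std::vector<std::map<std::string, LaunchConfig>> planRounds(const SearchSpaces& spaces)
{
  std::size_t rounds = 0;
  for (const auto& entry : spaces) {
    rounds = std::max(rounds, entry.second.size());
  }
  std::vector<std::map<std::string, LaunchConfig>> plan(rounds);
  for (const auto& [kernel, points] : spaces) {
    for (std::size_t r = 0; r < points.size(); ++r) {
      plan[r][kernel] = points[r];
    }
  }
  return plan;
}
```

With a largest search space of 32 points, as in the MI50 example below, this yields 32 rounds, i.e. 32 benchmark builds, independent of how many kernels are tuned.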

Limitations 👎

- Currently limited to AMD GPUs, as it exploits the rocprof profiler from AMD

- Takes a lot of time, as the benchmark must be recompiled each time new points in the search spaces are evaluated

- Example: took 23 hours on MI50 when the biggest search space had 32 points

- Only useful for kernels which do not run in parallel with other kernels

Currently doing / to do 🚧

- Select best parameters for each dataset and check correctness / improvement

- Benchmark with other datasets

- Try to use runtime compilation (need a bit of guidance here 😬)

- Tackle concurrent kernels

- Make the search less brute force and more "intelligent"

- See feasibility of other optimisation techniques (Optuna, Bayesian optimisation, MCMC...)

10:45 → 10:55 Efficient Data Structures (10m)

Speaker: Dr Oliver Gregor Rietmann (CERN)

Summer Student 2025

- Candidate: Milla Bramsted

- She got an offer on Monday, but has not replied yet.

- I sent her a very brief outline of the project.

- If she accepts, she will be here for 8 weeks starting on June 30.

GitHub CI Pipeline with GPUs

- I became the beta tester for Ricardo Rocha's new setup.

- We are basically writing its documentation together.

- Currently we have some permission issues, I am on it.

Integrating our AoS vs. SoA into O2

For the moment, we work only with the standalone benchmark. The goals are:

- Replace current AoS by our library set to AoS and check if the performance remains the same.

- Replace current AoS by our library set to SoA and check if the performance increases.

- Present the findings e.g. in a plot.

Current status: I have added our files to the O2 code. They compile, but only do trivial things as of now.
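To make the AoS vs. SoA comparison concrete, a minimal sketch of a container whose memory layout is selected by a template parameter while the accessor interface stays the same. This is not the library being integrated; `Layout`, `ClusterContainer` and `sumX` are hypothetical names chosen for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

enum class Layout { AoS, SoA };

// Hypothetical two-member record, for illustration only.
struct ClusterAoS {
  float x;
  float y;
};

template <Layout L>
class ClusterContainer;

// Array of structures: one contiguous array of {x, y} records.
template <>
class ClusterContainer<Layout::AoS>
{
 public:
  explicit ClusterContainer(std::size_t n) : mData(n) {}
  float& x(std::size_t i) { assert(i < size()); return mData[i].x; }
  float& y(std::size_t i) { assert(i < size()); return mData[i].y; }
  std::size_t size() const { return mData.size(); }

 private:
  std::vector<ClusterAoS> mData;
};

// Structure of arrays: one contiguous array per member, so a loop over x only
// touches x values (friendlier to vectorization and coalesced GPU loads).
template <>
class ClusterContainer<Layout::SoA>
{
 public:
  explicit ClusterContainer(std::size_t n) : mX(n), mY(n) {}
  float& x(std::size_t i) { assert(i < size()); return mX[i]; }
  float& y(std::size_t i) { assert(i < size()); return mY[i]; }
  std::size_t size() const { return mX.size(); }

 private:
  std::vector<float> mX;
  std::vector<float> mY;
};

// Code written against the accessors is layout-agnostic, which is what allows
// swapping AoS for SoA and comparing performance without touching the algorithm.
template <Layout L>
float sumX(ClusterContainer<L>& c)
{
  float s = 0.f;
  for (std::size_t i = 0; i < c.size(); ++i) {
    s += c.x(i);
  }
  return s;
}
```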
