Alice Weekly Meeting: Software for Hardware Accelerators / PDP-SRC


10:00 → 10:20

Discussion (20 min). Speakers: David Rohr (CERN), Giulio Eulisse (CERN)

Color code: (critical, news during the meeting: green, news from this week: blue, news from last week: purple, no news: black)

High priority Framework issues:

- Start / Stop / Start: 2 problems on O2 side left:

- Processes of all types crash randomly (usually ~2 out of >10k) when restarting. The stack trace points to FMQ. https://its.cern.ch/jira/browse/O2-4639

- TPC-ITS matching QC crashes when accessing CCDB objects. Not clear whether this is the same problem as above or a problem in the task itself.

- Stabilize calibration / fix EoS: New scheme: https://its.cern.ch/jira/browse/O2-4308:

- RC did timeout tests. The COSMICS tests were not helpful, since there was no backpressure at all in the processing.

- Tests in PHYSICS confirmed that there is indeed a bug: calib data is not processed after the data-processing timeout (this feature had not been tested before).

- The problem with calib data in the new EoS is fixed; Ernst deployed the new version yesterday.

- Will ask RC to test again, and repeat the timeout tests in physics.

- Then we need to update O2 once more with the new default timeouts.

- PR with InfoLogger improvements still WIP - Status?

- Processes crash at shutdown if the STOP timeout was short. Not clear if related, but should be checked.

- Fix problem with ccdb-populator and cpv calib: no idea yet. Since Ole left, someone else will have to take care of it.

- TF-status message (from https://github.com/AliceO2Group/AliceO2/pull/13495) sent by readout-proxy.

Sync reconstruction

Async reconstruction

- Need to investigate short GPU stall problem.

- The limiting factor for the pp workflow is now the TPC time series, which is too slow and creates backpressure (costs ~20% performance on EPNs). Enabled multi-threading as recommended by Matthias; need to check if it works.

AliECS related topics:

- The extra env var field is still not multi-line by default. Created https://its.cern.ch/jira/browse/OGUI-1624 to follow this up separately from other tickets.

GPU ROCm / compiler topics:

- List of important issues with AMD:

- Issues that disappeared but are not yet understood: random server reboot with Alma 9.4, miscompilation with ROCm 6.2, GPU getting stuck when the DMA engine is turned off, MI100 stalling with ROCm 5.5.

- Problem with building ONNXRuntime with MigraphX support, to be checked.

- Need to find a way to build ONNXRuntime with support for CUDA and for ROCm.

- Try to find a better solution for the problem with __device__ inline functions leaking symbols in the host code.

- Once we bump arrow (PR opened by Giulio), we can bump LLVM to 19.

- Waiting for ROCm 6.4.1 with the synchronization fix, then we can test the 6.4 version.

- GPU Deterministic mode for async fully fixed.

- Tested the new ROCm 6.4.1, which contains the fix/workaround for the serialization bug. It seems to work on both MI50 and MI100 without extra patches. Opened a JIRA ticket for EPN to bump: https://its.cern.ch/jira/browse/EPN-557

- EPN installed ROCm 6.4.1 in parallel today.

- Next: update build container and EPN O2 build to use new ROCm.

- Then: Remove old ROCm (and coordinate with async, that they do not use the old one any more).

- The memory error reported by Gabriele for certain parameters in the parameter range scan is not understood. It disappears with O2/dev, and it disappears with ROCm 6.4. Bisection points to a cleanup PR that removes an unused variable. This change is totally unrelated, which might hint at a compiler problem. Sent a reproducer to AMD.

- After going to O2/dev, no new errors appeared in the parameter scan, so we assume this was really a compiler issue. Waiting for AMD to comment.

- Even with AMD’s fix for the ROCm runtime, seeing sporadic crashes on MI100. Very difficult to debug. Dumped one TF that led to a crash online, but reprocessed it 500000 times locally without crash.

- Not sure if we can get a good reproducer.

- The fix by AMD is no real fix, only a mitigation that makes the crash less likely. AMD is working on a better mitigation, but it is not clear to me if we will ever get a full fix for the deprecated MI100 GPUs.

- Have a mitigation in O2, which might also make it less likely.

- New mitigation active as of today, but we run at 500 kHz, where the crash was not so likely. Will need to gather some statistics before claiming success.

- CUDA and ROCm can meanwhile use some std headers on GPU (like <array>, <type_traits>), for which we had custom reimplementations so far. Unfortunately, doesn’t work yet with OpenCL.

- OpenCL in clang should support <type_traits>, but that fails compilation. Filed a bug report with clang.

- Changed O2 to use the custom GPU versions only for OpenCL, and the std headers otherwise. We can only clean up fully once OpenCL supports everything.

- The GPU checks in the gpu-systems.sh alidist package (bash script) and in O2 (CMake modules) do not match, so one of them may find a GPU feature that the other does not. PR https://github.com/alisw/alidist/pull/5896

- Quite some progress, we need a new build container containing CMake (Sergio and Giulio are preparing that). With that container, it works for me locally on my laptop and inside the container.

- Some more things to do:

- Need to propagate error messages out of the prefer_system_check to the console in case of a failure.

- Need an env variable during O2 build that tells which alidist we are using, to check we use the same FindO2GPU.cmake for the detection and for the O2 build.

- Need a new aliBuild feature, to run the prefer_system_check in a temp directory. The gpu-system.sh tries to clean up, but I'd prefer to be sure not to leave remnants in the current folder.

- Bumped CUDA to 12.9 in build container, and added CUDA ORT ONNX requirements for TensorRT build.
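For the std-header item in the list above (std headers on CUDA/ROCm, custom reimplementations only for OpenCL), the switching pattern can be sketched as follows. This is a minimal illustration, not the O2 implementation: `HYPOTHETICAL_GPU_OPENCL_BUILD` is an invented switch, and the real O2 macros and header layout differ.

```cpp
#include <cassert>

// HYPOTHETICAL_GPU_OPENCL_BUILD is an illustrative switch, not an actual O2 macro.
#if defined(HYPOTHETICAL_GPU_OPENCL_BUILD)
namespace gpu_std
{
// OpenCL path: minimal custom reimplementation, kept only while
// <type_traits> does not compile there.
template <class T, class U>
struct is_same {
  static constexpr bool value = false;
};
template <class T>
struct is_same<T, T> {
  static constexpr bool value = true;
};
} // namespace gpu_std
#else
// CUDA / ROCm / host path: forward to the standard library.
#include <type_traits>
namespace gpu_std
{
using std::is_same;
} // namespace gpu_std
#endif

// Code written against gpu_std:: is identical on both paths.
template <class T>
constexpr bool isFloat()
{
  return gpu_std::is_same<T, float>::value;
}

static_assert(isFloat<float>(), "float must be detected");
static_assert(!isFloat<int>(), "int must not be detected");
```

Once OpenCL accepts the std headers, the `#if` branch could be deleted and `gpu_std` reduced to plain `using` declarations, which corresponds to the full cleanup mentioned above.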

TPC / GPU Processing

- WIP: Use alignas() or find a better solution to fix alignment of Monte Carlo labels: https://its.cern.ch/jira/browse/O2-5314

- Waiting for TPC to fix bogus TPC transformations for good, then we can revert the workaround.

- Waiting for TPC to check PR which uses full cluster errors including average charge and occupancy map errors during seeding.

- Final solution: merging transformation maps on the fly into a single flat object: Still WIP

- Pending OpenCL2 issues:

- printf not working due to confirmed bug in clang, fix is being prepared. Prevents further debugging for now.

- Crash in merger, which can be worked around by disabling clang SPIRV optimization. Probably bug in clang, but need to fix printf first to debug.

- Even with optimization disabled, it crashes later in TPC merging; need printf to debug.

- Felix debugged the OpenCL clusterization problem to be due to off-by-one offset in NoiseSuppression. Need to check how that can happen only in OpenCL.


- Next high priority topic: Improvements for cluster sharing and cluster attachment at lower TPC pad rows.

- Adding a GPU Standalone Benchmark build to the FullCI, to avoid breaking it in the future.

- Need to check the problem with ONNX external memory allocator, and the build failure with MigraphX.
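For the MC label alignment item above (O2-5314), the two options mentioned, `alignas()` on the type or a different solution, can be sketched side by side. The label struct is hypothetical and does not reflect O2's actual MC label class; `alignUp` illustrates one possible alternative, padding the write offset in a flat buffer so that labels appended after data of arbitrary size start at an aligned position.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical label layout for illustration only; not O2's MC label class.
struct alignas(8) HypotheticalMCLabel {
  std::uint32_t trackID;
  std::uint16_t eventID;
  std::uint16_t sourceID;
};
static_assert(alignof(HypotheticalMCLabel) == 8, "alignas(8) must be honoured");

// Round a byte offset into a flat buffer up to the next multiple of
// `alignment`, which must be a power of two. Placing the label array at
// alignUp(offset, alignof(label)) keeps it aligned regardless of what
// was written before it.
constexpr std::size_t alignUp(std::size_t offset, std::size_t alignment)
{
  return (offset + alignment - 1) & ~(alignment - 1);
}

static_assert(alignUp(13, 8) == 16, "13 rounds up to 16");
static_assert(alignUp(16, 8) == 16, "aligned offsets are unchanged");
```

`alignas` alone only fixes the alignment of the type itself; whether a buffer offset also needs explicit padding depends on how the labels are serialized, which is not specified here.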

Other Topics

EPN major topics:

- Fast movement of nodes between async / online without EPN expert intervention.

- 2 goals I would like to set for the final solution:

- It should not be needed to stop the SLURM schedulers when moving nodes, there should be no limitation for ongoing runs at P2 and ongoing async jobs.

- We must not lose which nodes are marked as bad while moving.


- Interface to change SHM memory sizes when no run is ongoing. Otherwise we cannot tune the workflow for both Pb-Pb and pp: https://alice.its.cern.ch/jira/browse/EPN-250

- Lubos to provide interface to query current EPN SHM settings - ETA July 2023, Status?

- Improve DataDistribution file replay performance. Currently it cannot go faster than 0.8 Hz, so we cannot test the MI100 EPNs in Pb-Pb at nominal rate, and cannot test the pp workflow for 100 EPNs in FST since DD injects TFs too slowly. https://alice.its.cern.ch/jira/browse/EPN-244 NO ETA

- DataDistribution distributes data round-robin in the absence of backpressure, but it would be better to distribute based on buffer utilization and give more data to the MI100 nodes. Currently, the MI50 nodes run at 100% capacity with backpressure, and only the backpressured TFs go to the MI100 nodes. This increases the memory pressure on the MI50 nodes, which is a critical point anyway. https://alice.its.cern.ch/jira/browse/EPN-397

- TfBuilders should stop in ERROR when they lose connection.

- Allow epn user and grid user to set nice level of processes: https://its.cern.ch/jira/browse/EPN-349

- Slurm bump
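The DataDistribution item above (EPN-397) contrasts round-robin with buffer-utilization-based distribution. A minimal sketch of the two policies, assuming a hypothetical `NodeState` view of each EPN; none of these names are DataDistribution's actual API.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical view of one EPN as seen by a TF scheduler; not DataDistribution's API.
struct NodeState {
  double bufferUsed;     // occupied SHM buffer (any unit)
  double bufferCapacity; // total SHM buffer (same unit)
  double utilization() const { return bufferUsed / bufferCapacity; }
};

// Round-robin: the node state is ignored; the cursor advances by one per TF.
std::size_t pickRoundRobin(std::size_t& cursor, std::size_t nNodes)
{
  assert(nNodes > 0);
  const std::size_t chosen = cursor % nNodes;
  cursor = (cursor + 1) % nNodes;
  return chosen;
}

// Utilization-based: the next TF goes to the node with the lowest buffer
// utilization. Nodes that process faster drain their buffers sooner and
// therefore receive more TFs, even when there is no backpressure.
std::size_t pickLeastUtilized(const std::vector<NodeState>& nodes)
{
  assert(!nodes.empty());
  std::size_t best = 0;
  for (std::size_t i = 1; i < nodes.size(); ++i) {
    if (nodes[i].utilization() < nodes[best].utilization()) {
      best = i;
    }
  }
  return best;
}
```

With the utilization-based policy, faster MI100 nodes would naturally take a larger share, instead of the MI50 nodes being filled up to backpressure first.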

Other EPN topics:

- Check NUMA balancing after SHM allocation, sometimes nodes are unbalanced and slow: https://alice.its.cern.ch/jira/browse/EPN-245

- Fix problem with SetProperties string > 1024/1536 bytes: https://alice.its.cern.ch/jira/browse/EPN-134 and https://github.com/FairRootGroup/DDS/issues/440

- After software installation, check whether it succeeded on all online nodes (https://alice.its.cern.ch/jira/browse/EPN-155) and consolidate software deployment scripts in general.

- Improve InfoLogger messages when environment creation fails due to too few EPNs / calib nodes available, ideally report a proper error directly in the ECS GUI: https://alice.its.cern.ch/jira/browse/EPN-65

- Create user for epn2eos experts for debugging: https://alice.its.cern.ch/jira/browse/EPN-383

- EPNs sometimes get in a bad state, with CPU stuck, probably due to AMD driver. To be investigated and reported to AMD.

- Understand different time stamps: https://its.cern.ch/jira/browse/EPN-487


10:20 → 10:25

Following up JIRA tickets (5 min). Speaker: Ernst Hellbar (CERN)

Low-priority framework issues https://its.cern.ch/jira/browse/O2-5226

- Grafana metrics: Might want to introduce additional rate metrics that subtract the header overhead to have the pure payload: low priority.

- Merged workflow fails if outputs defined after being used as input

- needs to be implemented by Giulio

- Cannot override options for individual processors in a workflow

- requires development by Giulio first

- Problem with 2 devices of the same name

- Usage of valgrind in external terminal: The testcase is currently causing a segfault, which is an unrelated problem and must be fixed first. Reproduced and investigated by Giulio.

- Run getting stuck when too many TFs are in flight.

- Do not use string comparisons to derive processor type, since DeviceSpec.name is user-defined.

- Support in DPL GUI to send individual START and STOP commands.

- Add additional check on DPL level, to make sure firstOrbit received from all detectors is identical, when creating the TimeFrame first orbit.

- Implement a proper solution to detect whether a device is firstInChain

- Deploy topology with DPL driver
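For the firstOrbit item in the list above, a DPL-level consistency check could look roughly like this. The helper name and the map-based input are hypothetical; it only illustrates the comparison, not how DPL actually collects the per-detector values.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>
#include <vector>

// Hypothetical helper: given the firstOrbit each detector reported for a TF,
// return the detectors whose value differs from the reference entry (the
// alphabetically first detector, since std::map is ordered by key).
// An empty result means the TimeFrame firstOrbit can be created safely.
std::vector<std::string> detectorsWithMismatchedFirstOrbit(
  const std::map<std::string, std::uint32_t>& firstOrbitPerDetector)
{
  std::vector<std::string> mismatched;
  if (firstOrbitPerDetector.empty()) {
    return mismatched;
  }
  const std::uint32_t reference = firstOrbitPerDetector.begin()->second;
  for (const auto& entry : firstOrbitPerDetector) {
    if (entry.second != reference) {
      mismatched.push_back(entry.first);
    }
  }
  return mismatched;
}
```

Comparing against the first entry is the simplest choice; if the first detector itself is the outlier, all others are reported, so a majority vote might give clearer error messages.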

PDP-SRC issues

- Check if we can remove dependencies on /home/epn/odc/files in DPL workflows, to remove the dependency on the NFS

- reading / writing already disabled

- remaining checks for file existence?

- check after Pb-Pb by removing files and find remaining dependencies

- logWatcher.sh and logFetcher scripts modified by EPN to remove dependencies on epnlog user

- node access privileges fully determined by e-groups

- New log_access role to allow access in logWatcher mode to retrieve log files, e.g. for on-call shifters. To be validated on STG

- waiting for EPN for further feedback and modifications of the test setup

- New BEAMTYPE for oxygen period: https://its.cern.ch/jira/browse/O2-5797

- beam types

- p-O and O-O

- Ne-Ne highly likely

- RC asked for a synthetic OO dataset

- Ruben uploaded respective GRPECS and CTPConfig (created by Roman) objects for unanchored MC to CCDB

- O2 code to be checked for pp and PbPb specific variables


10:25 → 10:30

TPC ML Clustering (5 min). Speaker: Christian Sonnabend (CERN, Heidelberg University (DE))


10:30 → 10:35

ITS Tracking (5 min). Speaker: Matteo Concas (CERN)


10:35 → 10:40

TPC Track Model Decoding on GPU (5 min). Speaker: Gabriele Cimador (Universita e INFN Torino (TO))

News from GPU parameters tuning

- So far, every measurement was taken with --memSize 30 GB and 128-orbit timeframes, which was wrong.

- Retuned the parameters with

- --memSize 15 GB

- 32 orbit timeframes

| Dataset | Default sync time | Optimized sync time | Improvement |
|---|---|---|---|
| pp 2 MHz | 2658.22 ms ± 4.50 ms | 2507.92 ms ± 4.26 ms | 5.65% |
| PbPb 50 kHz | 4708.97 ms ± 14.41 ms | 4352.53 ms ± 6.35 ms | 7.57% |

Question

- How I simulate TFs: events = interaction_rate * n_orbits * 0.0000894

- For 50 kHz and 32 orbits I get events = 143. Is this OK?
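The arithmetic in this section (the events-per-TF estimate and the improvement percentages in the table) can be reproduced with the small sketch below; function names are illustrative only. For reference, the constant 0.0000894 s is close to, but slightly above, the LHC orbit period of about 88.92 µs (3564 bunch slots of 24.95 ns); with that value, the 50 kHz / 32-orbit case gives about 142 instead of 143 collisions.

```cpp
#include <cassert>
#include <cmath>

// Orbit duration as used in the notes (0.0000894 s). The LHC orbit is
// 3564 bunch slots x 24.95 ns, i.e. about 88.92 us.
constexpr double kOrbitDurationNotesS = 0.0000894;

// Expected collisions per TF: interaction_rate [Hz] * n_orbits * orbit_duration [s].
double expectedEventsPerTF(double interactionRateHz, int nOrbits,
                           double orbitDurationS = kOrbitDurationNotesS)
{
  assert(interactionRateHz >= 0.0 && nOrbits > 0 && orbitDurationS > 0.0);
  return interactionRateHz * nOrbits * orbitDurationS;
}

// Relative reduction of the sync time in percent, as quoted in the table:
// (default - optimized) / default * 100.
double improvementPercent(double defaultMs, double optimizedMs)
{
  assert(defaultMs > 0.0);
  return (defaultMs - optimizedMs) / defaultMs * 100.0;
}
```

With the same formula, pp at 2 MHz with 32-orbit TFs corresponds to roughly 5720 collisions per TF.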

To-dos:

- Tune remaining steps

- Test robustness with different datasets of the same nature

- Tune with other dataset configurations, e.g. pp 750 kHz

- Draw more decent plots

- Test on MI100


10:40 → 10:45

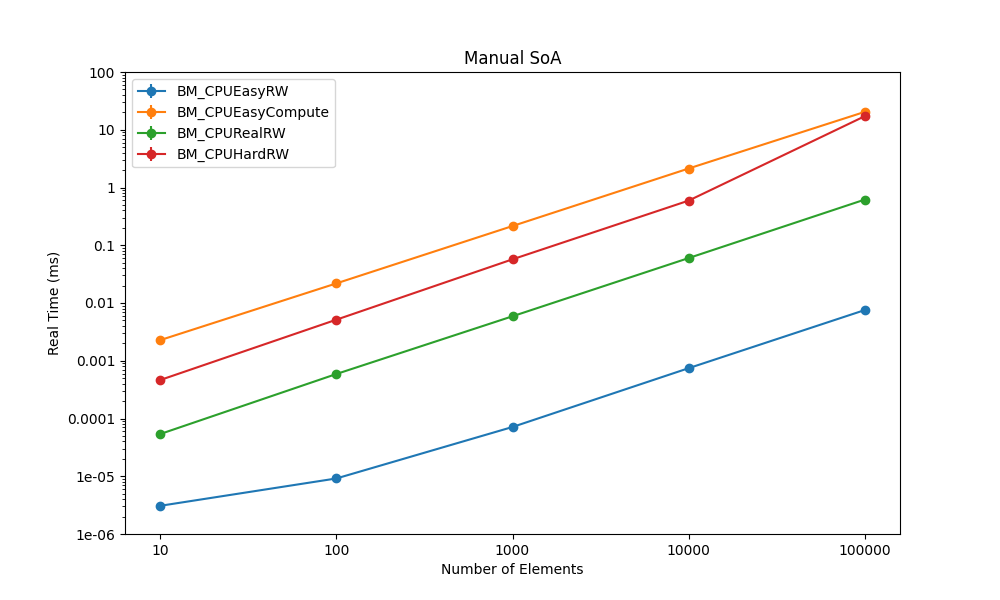

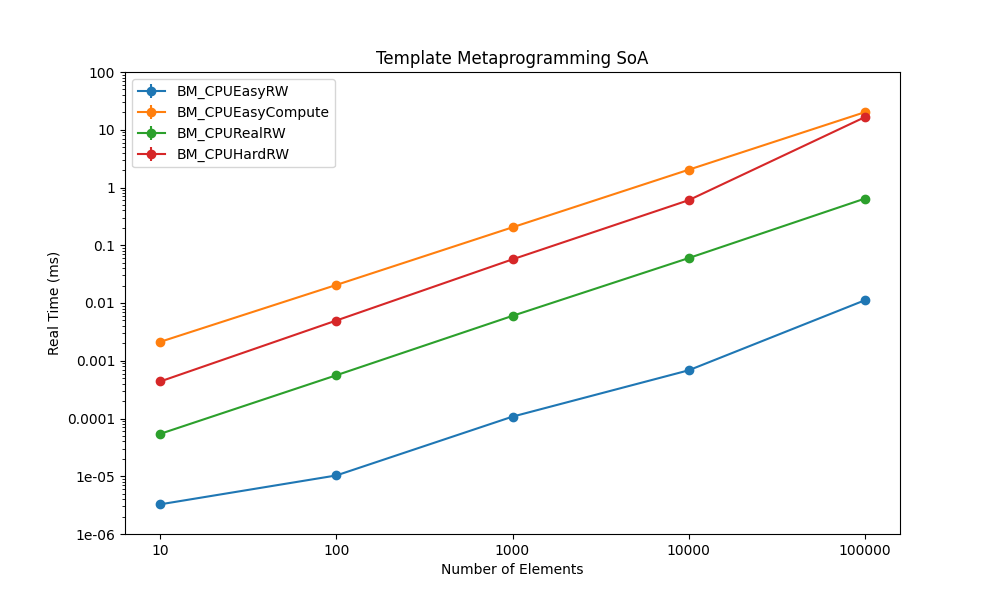

Efficient Data Structures (5 min). Speaker: Dr Oliver Gregor Rietmann (CERN)

SoA Benchmarks

- We have added a few things in our benchmarks repository: https://github.com/cern-nextgen/wp1.7-soa-benchmark

- Truly contiguous SoA data layouts

- Automatic plots comparing different SoA implementations

- Discussions about summer student Milla working on this.

- In the tested examples, our library now matches the performance of hardcoded SoA only with clang, not with gcc.

- In small examples, such as the one below, I cannot reproduce the issue (both gcc and clang optimize it away).

```cpp
#include <vector>

struct SoA_Points { std::vector<float> x, y, z; };

struct S_ref { float &x, &y, &z; };

struct wrapper {
  SoA_Points soa_points;

  S_ref operator[](int i) {
    return { soa_points.x[i], soa_points.y[i], soa_points.z[i] };
  }
};

int main() {
  int N = 100;
  wrapper w {{ std::vector<float>(N), std::vector<float>(N), std::vector<float>(N) }};
  w[42].x = 3; // Accesses all data members of soa_points, although only x is needed
  return 0;
}
```
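One way to avoid touching all columns without relying on the optimizer is to return a lightweight proxy that stores the container and the index, and resolves a column only when it is accessed. This is a hypothetical sketch (`PointProxy` and `lazy_wrapper` are invented names), not how the SoA library is implemented, and whether it closes the gcc gap there is untested.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct SoA_Points { std::vector<float> x, y, z; };

// Hypothetical lazy proxy: stores where the element lives instead of binding
// references to all three columns, so only the column that is actually
// accessed is read.
struct PointProxy {
  SoA_Points* soa;
  std::size_t i;
  float& x() const { return soa->x[i]; }
  float& y() const { return soa->y[i]; }
  float& z() const { return soa->z[i]; }
};

struct lazy_wrapper {
  SoA_Points soa_points;
  PointProxy operator[](std::size_t i) { return {&soa_points, i}; }
};
```

The trade-off is syntax: access becomes `w[42].x() = 3` instead of `w[42].x = 3`. Keeping plain member syntax requires reference members, which is exactly what forces all three columns to be loaded in the original `S_ref`.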


10:45 → 10:50

Following up GPU to-dos (5 min). Speaker: Dr Vikas Singhal (Department of Atomic Energy (IN))

- Q1: Difference between GPU kernels (e.g. GPUTPCCFDecodeZS, GPUTPCCFCheckPadBaseline, GPUTPCCFPeakFinder) and TPC kernels (e.g. TPC Sector Tracking, TPC Track Merging and Fit)?

- Q2: GPU.out and CPU.out are different.

- Q3: When we enable the -DGPUCA_DETERMINISTIC_MODE CMake setting or --PROCdeterministicGPUReconstruction, GPU.out is the same, but with the --RTCdeterministic command line option, GPU.out is not the same.

- Google Doc with timings of different kernels for the deterministic mode.

10:50 → 10:55

TPC Clusterization / OpenCL / Highly Ionizing Particles (5 min). Speaker: Felix Weiglhofer (Goethe University Frankfurt (DE))

1. Orchestrate the access of ALICE to the CERN NGT benchmark/development hardware, and alternatively to other CERN resources, to benchmark the ALICE GPU code on modern / new GPUs.

- NGT Documentation: https://ngt.docs.cern.ch/getting-started/

- Gives us access to H100, already supports GitHub runners

- Need a variant of the O2 build container with CUDA driver to run GPU tests.

2. Establish a software infrastructure / scripts to run the ALICE GPU processing benchmarks on CPUs and GPUs over different data sets, and provide performance results in a way that enables further analysis.

- In principle the standalone benchmark should be enough, but pp and Pb-Pb data sets of different interaction rates should be tested.

3. Extend the Alice O2 CI infrastructure to run actual code on the GPU, check for correctness and performance, for pull requests.

- Should be a fast test for the CI. The standalone benchmark should be enough. Ideally, we could use the deterministic mode to validate results between CPU and GPU.

4. Establish automated continuous benchmarking, to monitor the evolution of the GPU processing performance per O2 version.

- We already have/had such benchmarking on the alibi server with some old GPUs. But this should be extended, we need to use more modern GPUs, and we need monitoring and alerting.
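For the CI item (3) above, a CPU vs GPU result check could distinguish two cases: with deterministic mode one would expect exact agreement, otherwise a tolerance is needed. A minimal sketch, assuming the outputs were already extracted into flat float vectors (hypothetical; the real GPU.out / CPU.out formats are not modeled here).

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Exact element-wise comparison (operator== on float): what one would expect
// if both CPU and GPU run in deterministic mode.
bool identicalResults(const std::vector<float>& cpu, const std::vector<float>& gpu)
{
  return cpu == gpu;
}

// Tolerance-based comparison: needed when the GPU may change the order of
// floating-point operations, so results can differ in the last bits.
bool compatibleResults(const std::vector<float>& cpu, const std::vector<float>& gpu, float relTol)
{
  if (cpu.size() != gpu.size()) {
    return false;
  }
  for (std::size_t i = 0; i < cpu.size(); ++i) {
    const float scale = std::fmax(std::fabs(cpu[i]), std::fabs(gpu[i]));
    if (std::fabs(cpu[i] - gpu[i]) > relTol * scale) {
      return false;
    }
  }
  return true;
}
```

The exact check is the stricter and simpler CI criterion, which is why deterministic mode is attractive for validation; the tolerance variant would be the fallback for non-deterministic runs.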
