Data Format Choices

- We currently use CSV files for data preprocessing, but this comes with workflow trade-offs.

- CSVs are suitable for exploratory analysis, but they do not scale well and are therefore not optimal for large-scale or production ML workflows.

- Handling Missing Values

- ROOT's RDataFrame (RDF) does support handling missing values.

- We plan to use attention masking to handle artificial (previously missing, now filled-in) values when training the ML model.
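A minimal sketch of the masking idea in plain Python, assuming missing inputs are marked as `None` before imputation and that each input feature is treated as one attention position. The helper names (`impute_and_mask`, `masked_softmax`, `attend`) are hypothetical, and the toy key/value vectors stand in for learned embeddings; a real model would pass the same boolean mask to its framework's attention layer. The point it shows is that imputed positions receive exactly zero attention weight, so the placeholder value cannot leak into the output.

```python
import math
from typing import List, Optional, Sequence, Tuple


def impute_and_mask(raw: Sequence[Optional[float]], fill: float = 0.0) -> Tuple[List[float], List[bool]]:
    """Replace missing entries (None) with `fill` and record which values are genuine."""
    filled = [fill if x is None else x for x in raw]
    mask = [x is not None for x in raw]  # True = real value, False = artificial
    return filled, mask


def masked_softmax(scores: Sequence[float], mask: Sequence[bool]) -> List[float]:
    """Softmax that gives masked-out positions zero weight.

    Equivalent to setting masked scores to -inf before a regular softmax.
    """
    kept = [s for s, m in zip(scores, mask) if m]
    if not kept:
        raise ValueError("at least one position must be unmasked")
    top = max(kept)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) if m else 0.0 for s, m in zip(scores, mask)]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values, mask):
    """Single-query scaled dot-product attention that ignores masked keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = masked_softmax(scores, mask)
    width = len(values[0])
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(width)]
    return output, weights


# Usage: the second feature was missing and has been filled with 0.0.
filled, mask = impute_and_mask([1.5, None, 2.0])
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]  # toy stand-ins for embeddings
values = [[filled[0]], [filled[1]], [filled[2]]]
out, weights = attend([1.0, 0.0], keys, values, mask)
print(weights)  # [0.5, 0.0, 0.5]
print(out)      # [1.75]
```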

- Workflow and Performance Concerns

- RDataFrame operations such as `Snapshot` and `AsNumpy` are not lazy by default (though both can be made lazy): they trigger the event loop immediately and block execution, which can lead to single-core performance bottlenecks.
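To illustrate why laziness matters, the toy model below (plain Python, not ROOT) counts how many full passes over the data are needed when two results are requested eagerly versus lazily. `ToyFrame`, `LazyResult`, `take` and `get_value` are hypothetical names loosely modelled on RDataFrame's behaviour, where lazily booked actions all run together in a single event loop when the first result is accessed.

```python
class LazyResult:
    """Placeholder that is filled when the owning frame runs its event loop."""

    def __init__(self, frame, column):
        self._frame = frame
        self.column = column
        self.values = []
        self.ready = False

    def get_value(self):
        # Accessing any result triggers one loop serving *all* booked actions.
        if not self.ready:
            self._frame.run()
        return self.values


class ToyFrame:
    """Toy dataframe: actions are booked, then served by one pass over the rows."""

    def __init__(self, rows):
        self._rows = rows
        self._booked = []
        self.event_loops = 0  # number of full passes over the data

    def take(self, column, lazy=False):
        result = LazyResult(self, column)
        self._booked.append(result)
        if not lazy:
            self.run()  # eager: pay for a full pass right now
        return result

    def run(self):
        if not self._booked:
            return
        self.event_loops += 1
        for row in self._rows:
            for result in self._booked:
                result.values.append(row[result.column])
        for result in self._booked:
            result.ready = True
        self._booked.clear()


rows = [{"pt": 1.0, "eta": 0.1}, {"pt": 2.0, "eta": -0.3}]

eager = ToyFrame(rows)
eager.take("pt")
eager.take("eta")
print(eager.event_loops)  # 2: one full pass per eager action

lazy = ToyFrame(rows)
pt = lazy.take("pt", lazy=True)
eta = lazy.take("eta", lazy=True)
print(pt.get_value(), eta.get_value())  # [1.0, 2.0] [0.1, -0.3]
print(lazy.event_loops)  # 1: both results filled by a single pass
```

In ROOT itself, a lazy snapshot is requested by creating `ROOT.RDF.RSnapshotOptions`, setting `fLazy = True` and passing the options to `Snapshot`; recent ROOT versions also accept `AsNumpy(..., lazy=True)`. Both should be checked against the installed ROOT version.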

- Feeding Data into ML Infrastructure

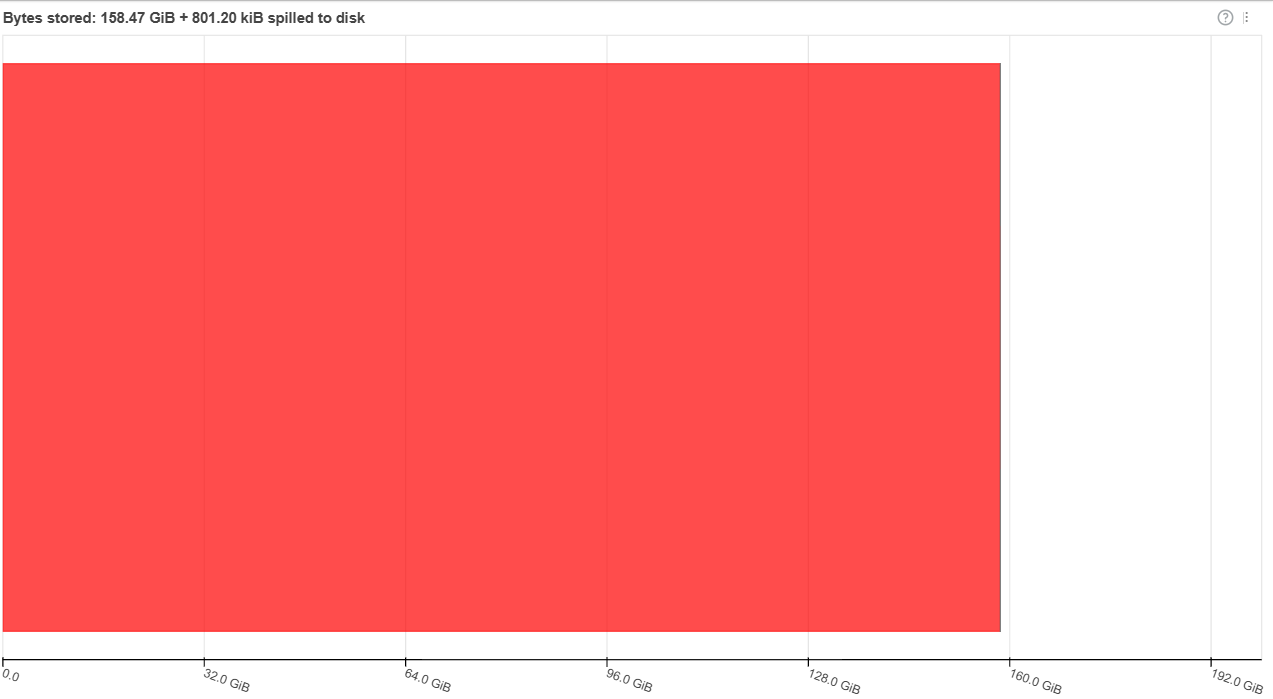

- Current solutions do not work efficiently in distributed environments. Keeping the data in RAM (e.g., as NumPy arrays) is optimal but not always feasible.

- A distributed batch generator could address both memory and scalability issues, especially when processing massive datasets such as Open Data (of order 50 TB).
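A minimal sketch of a distributed batch generator, assuming the dataset is split into independently readable chunks (for example, entry ranges of a ROOT TTree) and that each worker knows its `rank` and the total `world_size`. All names (`shard`, `read_chunk`, `batches`, `worker_batches`) are hypothetical, and `read_chunk` fakes the I/O with integer rows. Each worker only ever holds one chunk and one partial batch in memory.

```python
from typing import Iterable, Iterator, List, Sequence


def shard(chunk_ids: Sequence[int], rank: int, world_size: int) -> List[int]:
    """Round-robin split: worker `rank` gets chunks rank, rank + W, rank + 2W, ..."""
    if not 0 <= rank < world_size:
        raise ValueError("rank must be in [0, world_size)")
    return list(chunk_ids[rank::world_size])


def read_chunk(chunk_id: int, rows_per_chunk: int = 3) -> List[int]:
    """Hypothetical reader standing in for loading one entry range from a ROOT file."""
    start = chunk_id * rows_per_chunk
    return list(range(start, start + rows_per_chunk))


def batches(chunks: Iterable[Sequence[int]], batch_size: int) -> Iterator[List[int]]:
    """Re-batch a stream of chunks into fixed-size batches.

    Only the chunk being read and one partial batch are held in memory.
    """
    buffer: List[int] = []
    for chunk in chunks:
        for row in chunk:
            buffer.append(row)
            if len(buffer) == batch_size:
                yield buffer
                buffer = []
    if buffer:
        yield buffer  # final, possibly shorter batch


def worker_batches(n_chunks: int, rank: int, world_size: int, batch_size: int) -> Iterator[List[int]]:
    """Batches for one worker; its chunks are read lazily, one at a time."""
    my_chunks = shard(range(n_chunks), rank, world_size)
    return batches((read_chunk(c) for c in my_chunks), batch_size)


# Usage: worker 1 of 2 gets chunks 1 and 3, i.e. rows [3, 4, 5] and [9, 10, 11].
for batch in worker_batches(n_chunks=5, rank=1, world_size=2, batch_size=4):
    print(batch)
# prints [3, 4, 5, 9] then [10, 11]
```

Round-robin sharding keeps the workers' inputs disjoint without any coordination between them; a training setup would normally also shuffle the chunk order every epoch, which this sketch omits.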

- Action:

- Move away from CSVs and try ROOT files together with efficient batch generators.

Dask and ROOT Integration Issues

- Problem: memory is not released after Dask jobs finish, which saturates resources. Both Dask and ROOT contribute to this issue.

- In SWAN sessions, the Dask Python process stays tied to the lifetime of the Condor job and of the session, so that users can keep using it at will; as a result, the process persists and keeps holding memory. To be tested with lazy snapshots.

- ROOT uses a compiler for jitting (just-in-time compilation), which makes memory retention worse: the compiler does not release its memory while the Dask process is alive.
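Because memory (including what ROOT's JIT compiler allocates) is only reliably returned to the operating system when the owning process exits, one pattern worth testing is to run each job in a short-lived child process. The sketch below is a hypothetical, standard-library-only illustration of that idea; `run_isolated` is an illustrative name, and the job is assumed to print a single JSON result on stdout. It is not how SWAN or Dask currently work; in Dask, the closest existing knob is restarting workers (e.g. `Client.restart()`), at the cost of losing their state.

```python
import json
import subprocess
import sys


def run_isolated(job_code: str, timeout: float = 600.0):
    """Run `job_code` in a fresh Python interpreter and return its JSON result.

    Everything the job allocates (arrays, JIT-compiled code, ...) belongs to
    the child process and is returned to the OS when the child exits.
    """
    proc = subprocess.run(
        [sys.executable, "-c", job_code],
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,  # raise CalledProcessError if the job fails
    )
    return json.loads(proc.stdout)


# Usage: the list of one million integers lives only in the child process.
job = (
    "import json; data = list(range(1_000_000)); "
    "print(json.dumps({'n': len(data), 'total': sum(data)}))"
)
print(run_isolated(job))  # {'n': 1000000, 'total': 499999500000}
```

The trade-off is start-up cost: each job pays for a fresh interpreter (and, in practice, a fresh `import ROOT` and re-jitting), so this suits coarse-grained jobs rather than many small tasks.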

These problems are site-specific; for example, INFN is configured differently and does not show this issue.

Do we know how INFN configures this? Could we ask them?