PyHEP 2025 - "Python in HEP" Users Workshop (hybrid), CERN

The PyHEP workshops are a series of workshops initiated and supported by the HEP Software Foundation (HSF) with the aim to provide an environment to discuss and promote the usage of Python in the HEP community at large. Further information is given on the PyHEP Working Group website.

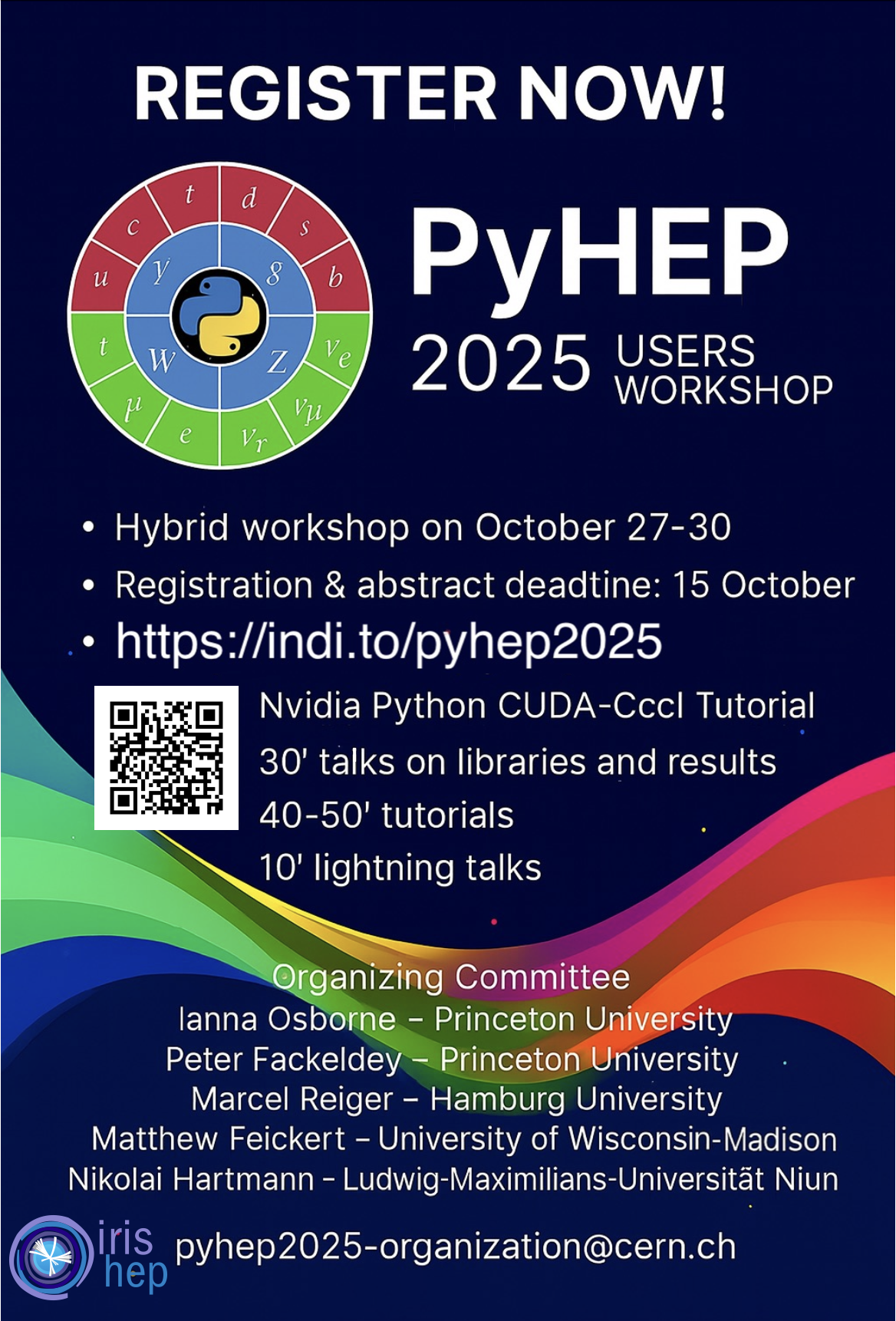

PyHEP 2025 will be a hybrid workshop, held in this format for the first time. While the event has traditionally taken place online, this edition will welcome participants both virtually and in person at CERN. The workshop will serve as a forum for participants and the wider community to discuss developments in Python packages and tools, share experiences, and help shape the future of community activities. Ample time will be dedicated to open discussions.

The agenda consists of plenary sessions.

Organising Committee

Peter Fackeldey - Princeton University

Ianna Osborne - Princeton University

Nikolai Krug - Ludwig Maximilian University of Munich

Marcel Rieger - Hamburg University

Matthew Feickert - University of Wisconsin-Madison

Sponsors

The event is kindly sponsored by