Alice Weekly Meeting: Software for Hardware Accelerators

-

-

10:00

→

10:20

Discussion 20mSpeaker: David Rohr (CERN)

Color code: (critical, news during the meeting: green, news from this week: blue, news from last week: purple, no news: black)

GPU Benchmarks in HS23 Contribution from ALICE

- Had a meeting 2 weeks ago, Gabriele will report on the status

Sync reconstruction

Async reconstruction

- Need to investigate short GPU stall problem.

- Limiting factor for pp workflow is now the TPC time series, which is to slow and creates backpressure (costs ~20% performance on EPNs). Enabled multi-threading as recommended by Matthias - need to check if it works.

- We can now set the GPU architectures in the environment variable field of Jenkins builds.

- Managed to run the o2-gpu-standalone-benchmark from an async build on CVMFS in the default GRID job container on the NERSC perlmutter site running on their A100 GPU.

- Async reco test (using some 2024 Pb-Pb setup I copied from the GRID) were successful.

- Improved O2DPG scripts and added ALIEN_JDL_SITEARCH setting to select between MI50, MI100, NERSC (so we do not need custom run scripts per site).

GPU ROCm / compiler topics:

- Issues that disappeared but not yet understood: random server reboot with alma 9.4, miscompilation with ROCm 6.2, GPU getting stuck when DMA engine turned off, MI100 stalling with ROCm 5.5.

- Problem with building ONNXRuntime with MigraphX support.

- Need to find a way to build ONNXRuntime with support for CUDA and for ROCm.

- Try to find a better solution for the problem with __device__ inline functions leaking symbols in the host code.

- LLVM Bump to 20.1: status?

- ROCm 6.4.1 status:

- AMD is checking the reproducer. I have some idea how to narrow down where it miscompiles using different compile flags in per-kernel mode.

- New problem with ROCm 6.5 / 7.0 after bumping clang: New clang encounters internal compiler error processing our code...

- Improved Standalone Benchmark CI, can now run RTC test for CUDA also with no GPU installed.

- Updating alidist/gpu-system to be build_requires only, and reverted that since it broke the dailies. Now generating a dummy modulefile (even if not used), as requested by Giulio.

TPC / GPU Processing

- WIP: Use alignas() or find a better solution to fix alignment of monte carlo labels: https://its.cern.ch/jira/browse/O2-5314

- Waiting for TPC to fix bogus TPC transformations for good, then we can revert the workaround.

- Waiting for TPC to check PR which uses full cluster errors including average charge and occupancy map errors during seeding.

- Final solution: merging transformation maps on the fly into a single flat object: Draft version by Sergey exists but still WIP.

- Matthias Kleiner might look into commissioning this.

- Pending OpenCL2 issues:

- printf not working due to confirmed bug in clang, fix is being prepared. Prevents further debugging for now.

- Crash in merger, which can be worked around by disabling clang SPIRV optimization. Probably bug in clang, but need to fix printf first to debug.

- Also with optimization disabled, crashing later in TPC merging, need printf to debug.

- printf not working due to confirmed bug in clang, fix is being prepared. Prevents further debugging for now.

- Next high priority topic: Improvements for cluster sharing and cluster attachment at lower TPC pad rows.

- Need to check the problem with ONNX external memory allocator. Status?

EPN GPU Topics:

-

10:20

→

10:25

TPC ML Clustering 5mSpeaker: Christian Sonnabend (CERN, Heidelberg University (DE))

Framework

- Optimizations of kernel speeds. Best possible solution (for now): pad-aligned memory accesses per thread

Computing

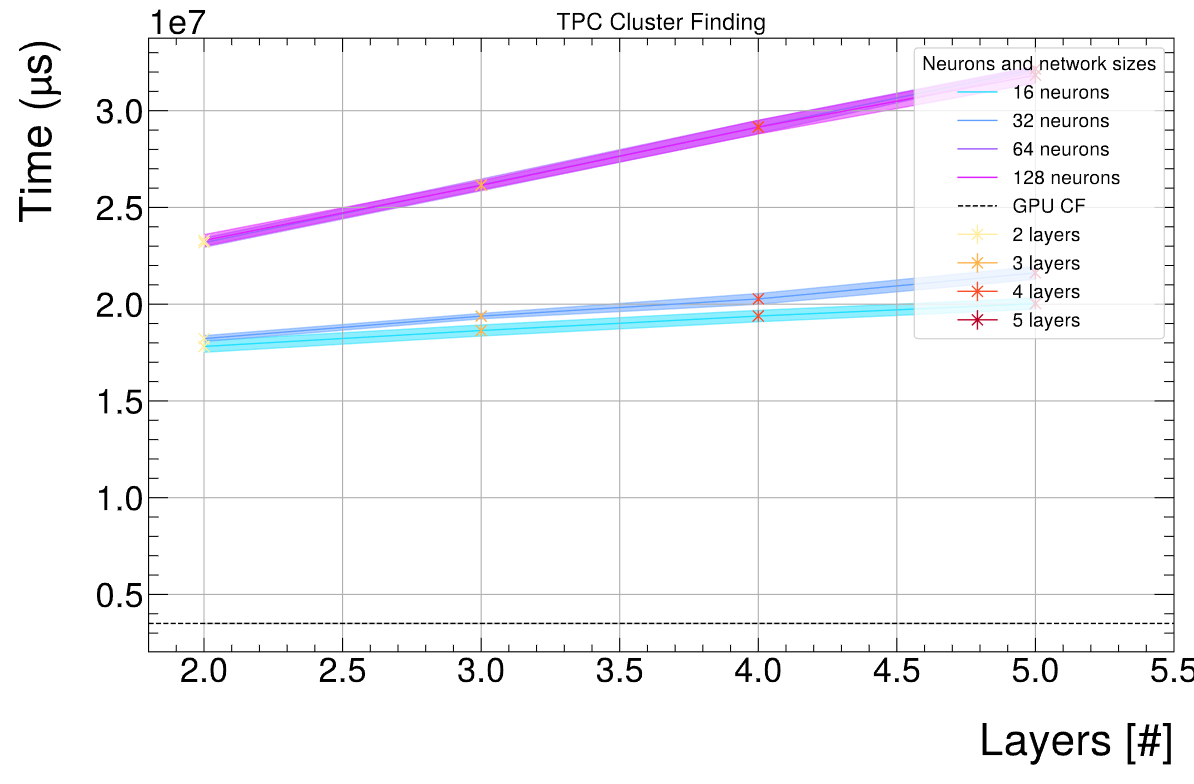

Evaluated different network sizes: 5 x (2 TF, FST, only classification network (+ CF regression), float16) per NN

- debugLevel=0, batchsize=8192

- debugLevel=1, batchsize=8192

- Did an OPTUNA optimization for batchsize: For all networks, higher batchsize seems better, although there seems to be some irregularity (sometimes a batchsize which is not maximum was best -> Irregular optimization surface)

- Probably will stick with 32 neurons per layer, 32 neurons per layer

- Ideally would like to find a pattern: Something like ((neurons per layer) x (input size) x (batch size)) % 32*n ~ 0 (-> analytic formula for when an execution becomes efficient)

- RTC compilation working now (update from this morning), thanks to colab with Gabriele

Question

- Would like to run optimisation for one chosen NN with launch bounds

- Which launch bounds to take? What do they exactly mean? Maybe these:

#define GPUCA_LB_GPUTPCNNClusterizerKernels_runCfClusterizer 512#define GPUCA_LB_GPUTPCNNClusterizerKernels_fillInputNNGPU 512#define GPUCA_LB_GPUTPCNNClusterizerKernels_determineClass1Labels 512#define GPUCA_LB_GPUTPCNNClusterizerKernels_publishClass1Regression 512#define GPUCA_LB_GPUTPCNNClusterizerKernels_publishDeconvolutionFlags 512 - What should be the optimisation objective?

- debugLevel=1: mean(NN kernel times) + fill time

- debugLevel=0: Cluster finder time -> Does not take into account the benefits in track merger due to cluster rejection

- debugLevel=0: Wall time -> This could depend on the number of accepted clusters for tracking and threshold might change from simulation to simulation and network to network

- Which launch bounds to take? What do they exactly mean? Maybe these:

-

10:25

→

10:30

GPU Parameter Optimizations 5mSpeaker: Gabriele Cimador (Universita e INFN Torino (TO))

HS23 Contribution from ALICE

- Tested with Docker on EPN MI50 node

- Started from GPU CI container

- Load dependencies via CVMFS

- Clone O2 into the container

- Patch

/home/gcimador/alice/O2/GPU/GPUTracking/Standalone/cmake/config.cmake- turn off

ENABLE_[CUDA/HIP/OCL]Cmake variables - comment out event visualization

- turn off

- For the test:

set(ENABLE_HIP ON)set(HIP_AMDGPUTARGET "gfx906")ALIBUILD_O2_FORCE_GPU=0

cmake -DCMAKE_INSTALL_PREFIX=../ -DGLFW_DIR=/cvmfs/alice.cern.ch/el9-x86_64/Packages/GLFW/3.3.2-19/lib/cmake/glfw3/ ../../O2/GPU/GPUTracking/Standalone/make -j32 install

Questions

ERROR reading events/o2-pbpb-5kHz-32/tpctransform.dump, invalid size: 4552 (4568 expected)terminate called after throwing an instance of 'std::runtime_error'what(): invalid size

Is there a quick way to adjust my datasets or I have to simulate new again?- We need to find a way to define the GPU architecture to build for. My idea: provide them as argument of the entrypoint script of the container. It will receive two arguments: CUDA/HIP to enable the

ENABLE_CUDA/ENABLE_HIPvariables, and the architecture e.g.gfx906for setting theHIP_AMDGPUTARGETvariable.

Might this be ok? - Do I need to use aliBuild init or can I just clone O2 repository alone?

News from GPU parameter tuning

- Tuned datasets:

- PbPb: 42kHz, 47 kHz, 50 kHz

- pp: 750kHz, 1MHz

- Is the "sweet spot" always the same, or it is a range of values?

- To answer this, tuned the same pp 750 kHz dataset three times

Kernel Name Best (block, grid) - search 1 Best (block, grid) - search 2 Best (block, grid) - search 3 CompressionKernels_step1unattached 384, 120

512, 120 384, 120 GMMergerCollect 512, 540 640, 600 448, 600 GMMergerSectorRefit 64, 660 64, 840 64, 900 GMMergerTrackFit 256, 840 256, 900 256, 660 CompressionKernels_step0attached 192, 60 192, 60 192, 60 GMMergerFollowLoopers 128, 840 64, 660 64, 780 TrackletConstructor 512, 900 512, 780 512, 780 TrackletSelector 128, 720 1024, 900 704, 900 CFClusterizer 448, 900 512, 780 448, 360 - Seems that sweet spot lies on a range of values

- To answer this, tuned the same pp 750 kHz dataset three times

Todos:

- Investigate why grid_size=600 is such an important number (10*CUs)

- Correct the number of trials (minimum reached with less evaluations)

- Implement dynamic parameters in O2?

-

10:30

→

10:35

Efficient Data Structures 5mSpeaker: Dr Oliver Gregor Rietmann (CERN)

Summer Student Milla Bramsted

- She is working on benchmarking SoA code on GPUs

- We will track her project in this google doc

- She is adding CUDA kernels to this repo

- A few Kernel were integrated to the benchmarking framework

- Next steps:

- Add more kernels (bitonic sort)

- Add the other NGT SoA approaches again (were temporarily removed)

- Re-organize the code

- Add more kernels (bitonic sort)

ALICE O2 CI-Pipelines on NGT Cluster

- A fork of the AliceO2 repo is not in the NextGenTrigggers (NGT) GitHub organization

- It has a GitHub action running the standalone benchmark on NGT GPUs (H100)

- Uses the builds in /cvmfs/alice.cern.ch/ of O2 and dependencies (pipeline takes about 7 minutes)

- O2 standalone benchmarks now works on the following ngt-resources:

- Nvidia: H100 188GB NVL, L40S 48GB

- AMD: Instinct MI300X

- Not working yet on AMD Radeon Pro W7900 due to different warp size / wavefront size.

- Not working yet on lastest commits because TPC changed the data format of the event dumps

- Next steps:

- Fix the problems above

- Get the full CI to work

Implement NGT SoA Code in O2 standalone benchmark

- Working on this fork of the AliceO2 repo

- Simplified and optimized the SoA code in the last few weeks

- Everything is running and we have identified the classes apply our SoA code to

- Next steps:

- Implement the SoA code on those classes

- Remark: Jolly wants to implement her reflection approach in O2 standalone (for a conference)

- 10:35 → 10:40

-

10:40

→

10:45

TPC Clusterization / OpenCL / Highly Ionizing Particles 5mSpeaker: Felix Weiglhofer (Goethe University Frankfurt (DE))

OpenCL

- Finished standalone Zero Suppression decoder: Can't reproduce issues from O2...

Clang printf

Fixed OpenCL printf by not mangling the symbol:

diff --git a/clang/lib/Headers/opencl-c-base.h b/clang/lib/Headers/opencl-c-base.h index b6bcf32c09c0..14fa7654849a 100644 --- a/clang/lib/Headers/opencl-c-base.h +++ b/clang/lib/Headers/opencl-c-base.h @@ -688,7 +688,15 @@ template <typename _Tp> struct __remove_address_space<__constant _Tp> { #if defined(__OPENCL_CPP_VERSION__) || (__OPENCL_C_VERSION__ >= CL_VERSION_1_2) // OpenCL v1.2 s6.12.13, v2.0 s6.13.13 - printf -int printf(__constant const char* st, ...) __attribute__((format(printf, 1, 2))); +#ifdef __OPENCL_CPP_VERSION__ +#define CLINKAGE extern "C" +#else +#define CLINKAGE +#endif + +CLINKAGE int printf(__constant const char* st, ...) __attribute__((format(printf, 1, 2))); + +#undef CLINKAGE #endif #ifdef cl_intel_device_side_avc_motion_estimation-> Allows printf usage in OpenCL again

TODO: open upstream PR

With printf, found a third issue in ZS decoder:

Sometimes statements like

if (iThread == 0) printf("...");are printed by all threads. Can be fixed with a barrier after the print.-> Can be reproduced in both O2 and standalone decoder. Unsure how this is connected to the other issues.

-

10:45

→

10:50

ITS Tracking 5mSpeakers: Felix Schlepper (CERN, Heidelberg University (DE)), Gabriele Cimador (CERN, Università and INFN Torino), Matteo Concas (CERN)

# ITS tracking status

1. Quick overview of current CPU refactoring

2. New features currently in development

3. Status of the GPU part---

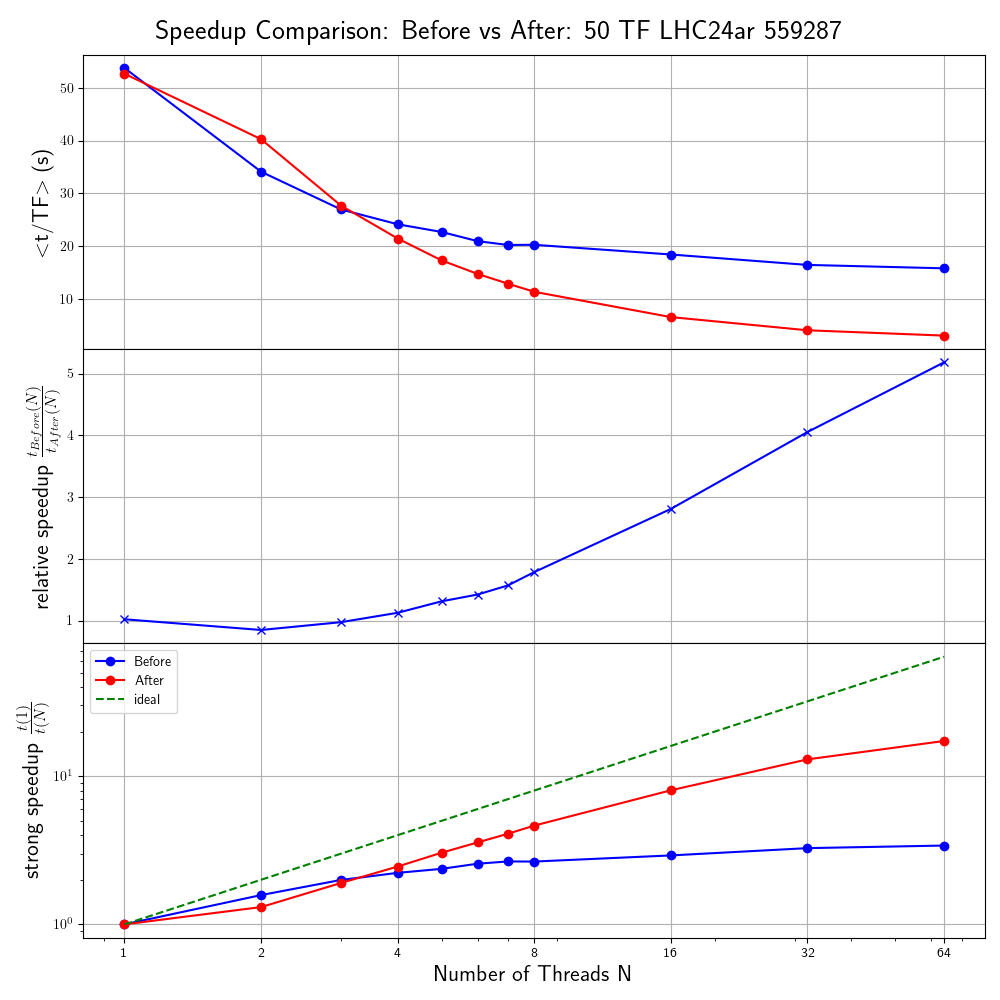

## 1.1 Current CPU refactoring

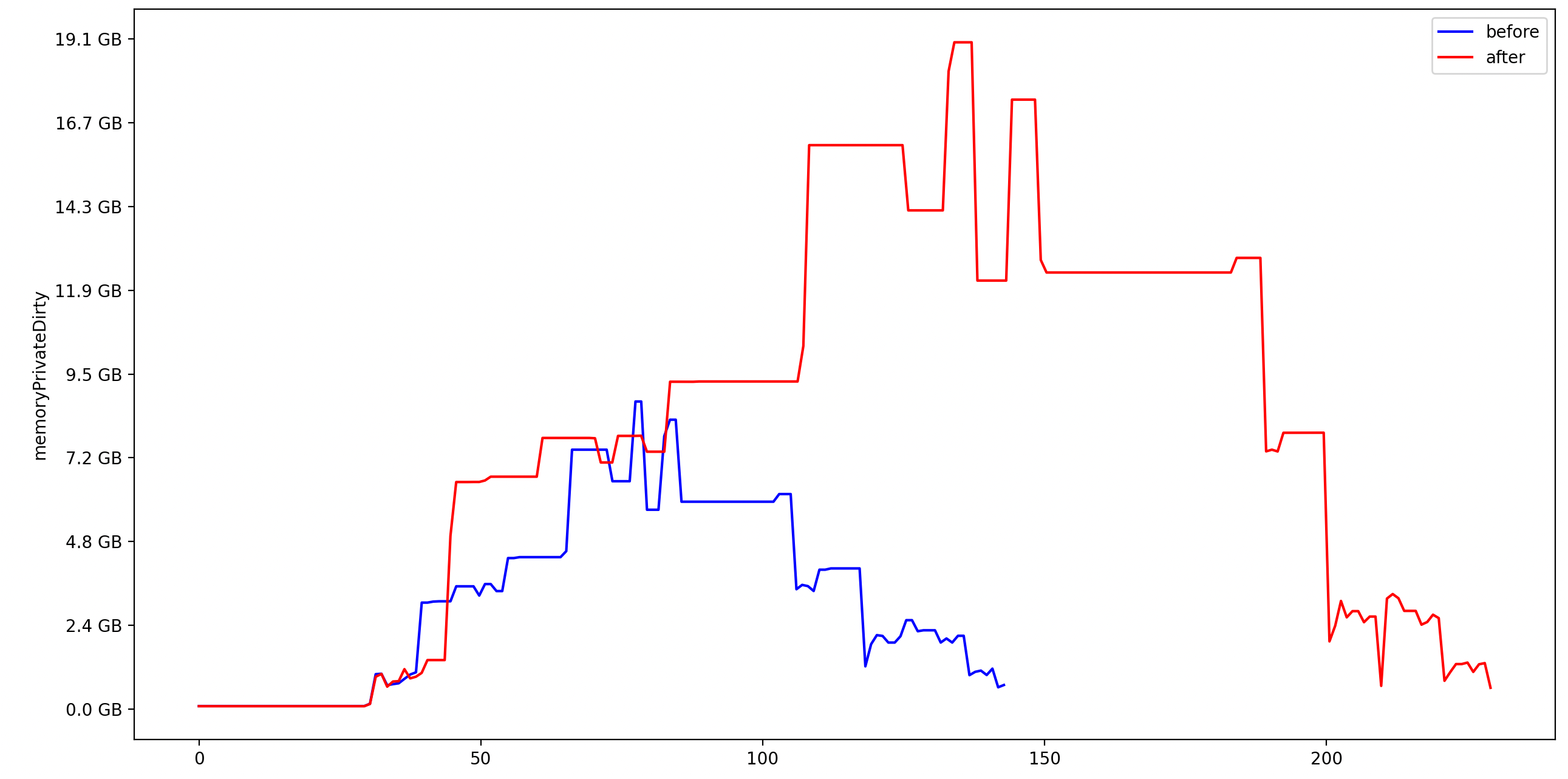

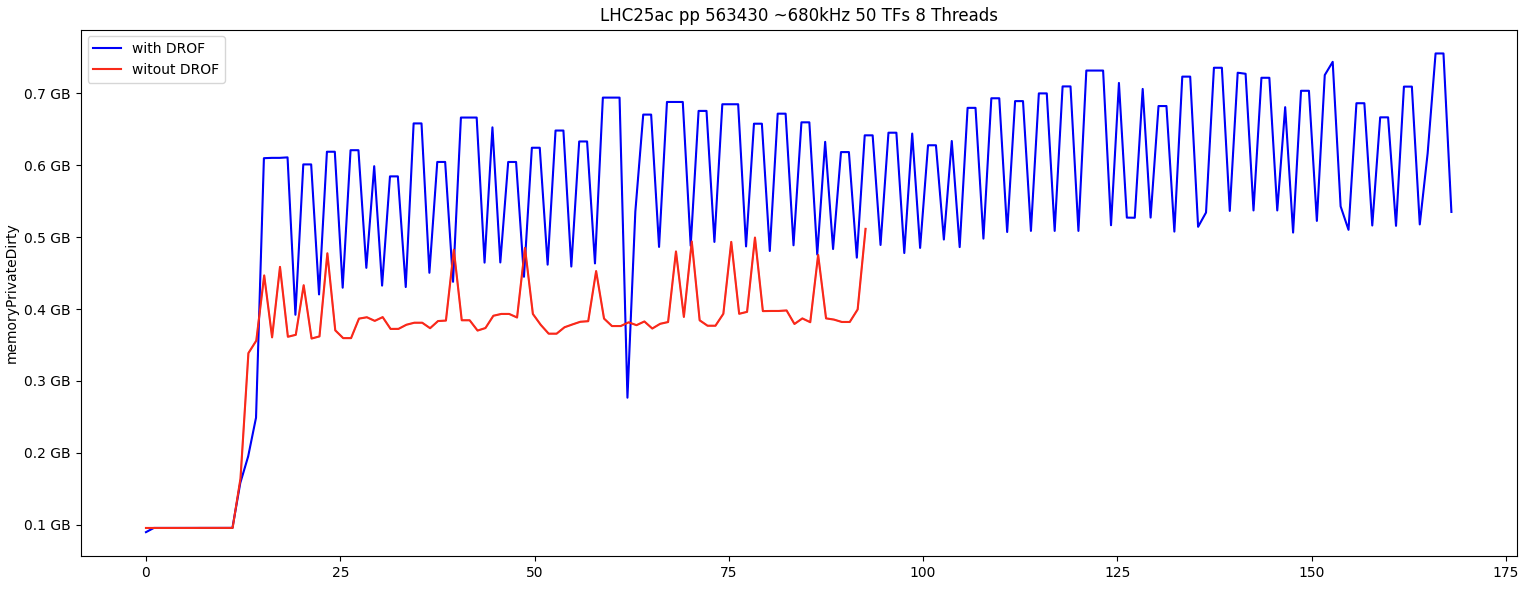

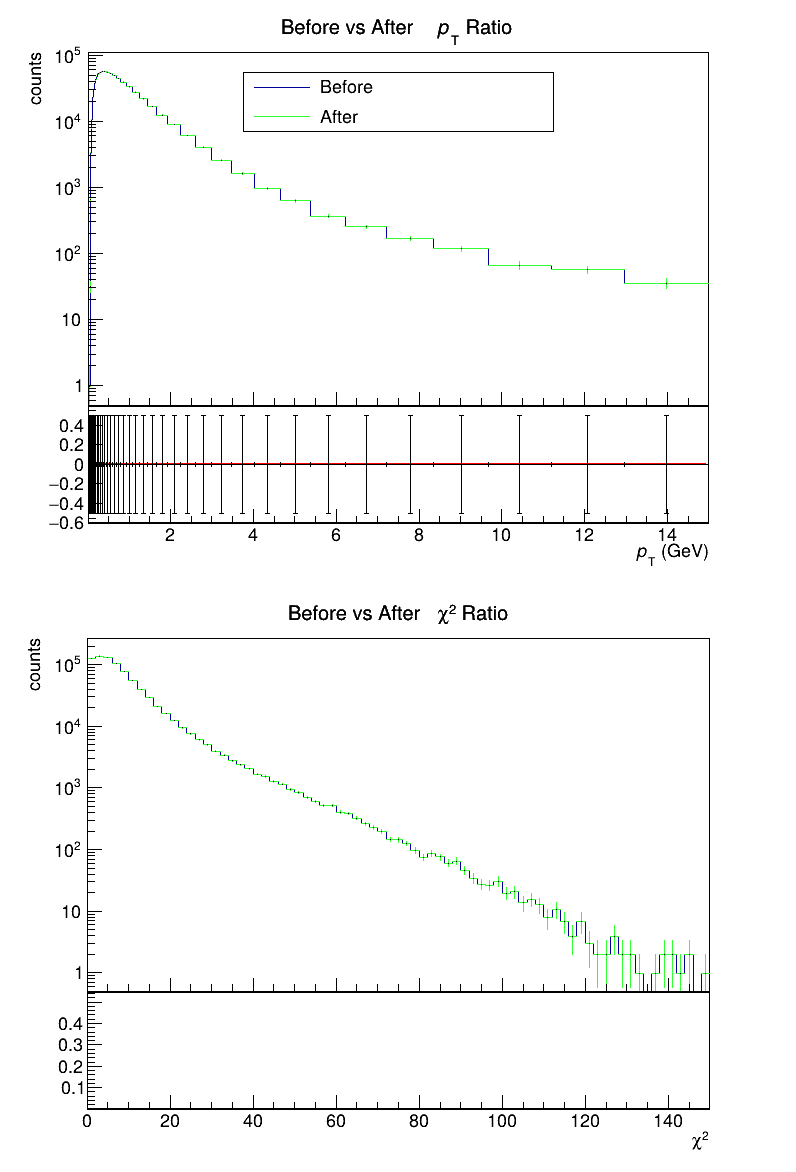

---## 1.2 Memory consumption

Here for one particulary parasitic TF in Pb-Pb with two central collisions in on readout-frame.

Plot shows before(blue)/after(red) refactoring.

Plot shows a special mode `perPrimaryVertexProcessing` which reduces the comb. significantly but has some currently studied side effects. Blue is with this mode enabled now, red as it is before.

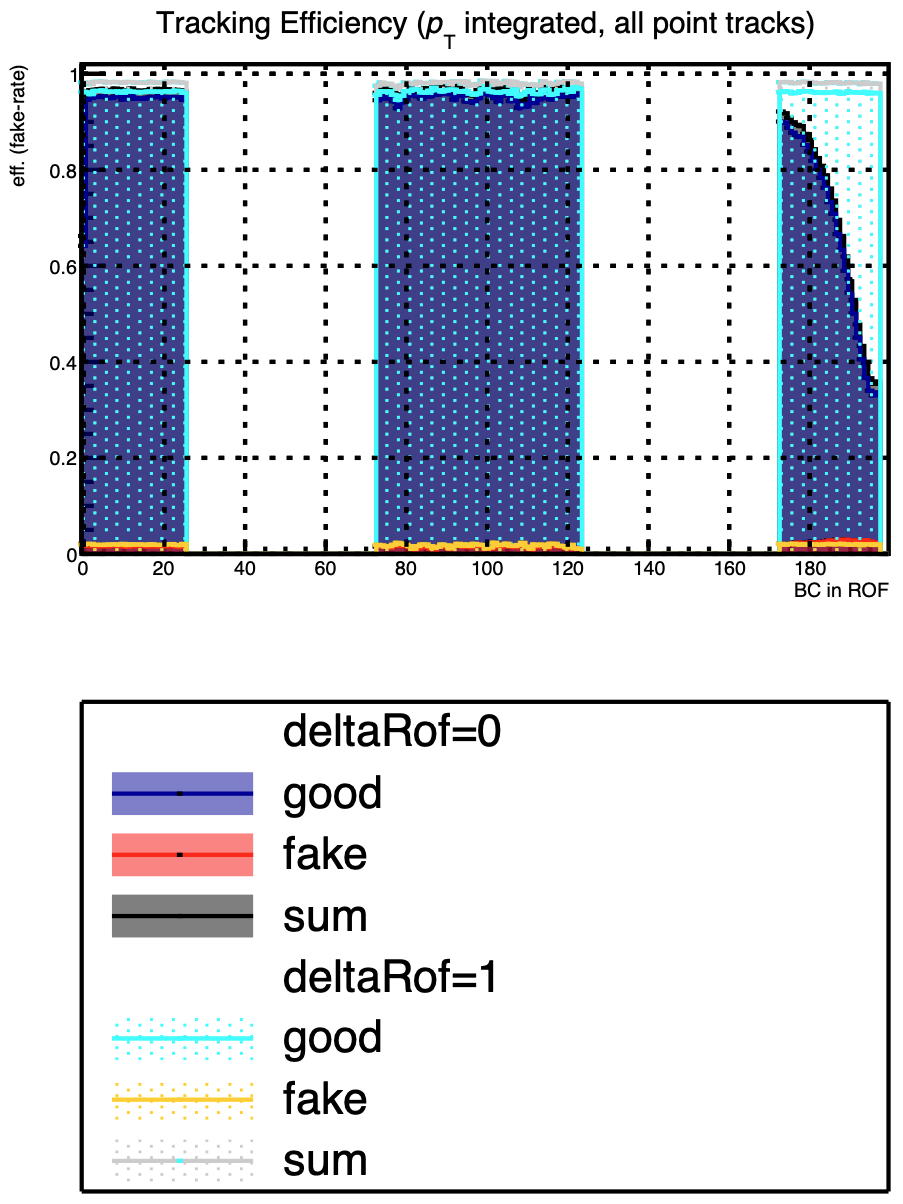

## 2. New/Improved features: `DeltaROF` tracking

In contrived MC with exagerated slope in the chip response function one can clearly see the drop of eff. towards the beginning/end of the readout-frame.

In analysis these collisions are completely cut away (standard selections) ~20% of data loss.

This feature should cure this.

Comes with an increase cost in memory and time (but worth it)

## GPU Part

After the refactoring of the reco code, checked again if GPU is now messed up. After some fixes minor fixes, in debug mode the output is 1:1 the one from the cpu code (10TFs in 40kHz Pb-Pb):

```cpp

#define GPU_BLOCKS GPUCA_DETERMINISTIC_CODE(1, 99999)

#define GPU_THREADS GPUCA_DETERMINISTIC_CODE(1, 99999)// calling kernels like this -> effectively serializing the code (very slow)

gpu::computeLayerTrackletsMultiROFKernel<true><<<o2::gpu::CAMath::Min(nBlocks, GPU_BLOCKS),

o2::gpu::CAMath::Min(nThreads, GPU_THREADS),

0,

streams[iLayer].get()>>>(...)

// now this is not needed anymore and the det. mode is `fast-ish`

```TODOs (help welcome)

- validate new features if they also work on GPU

- porting Vertexing code to GPU

- optimize GPU part:

- `started` using multiple streams very much in its infancy

- optimise load times/processing etc. (probably [not measured] parts could be improved)- optimise number of blocks/threads per kernel (for Pb-Pb + pp sep., fairly small number of kernels to be optimised)

- Have a CI procedure/periodic check that runs ITS GPU reconstruction and then gets some automatic checks and numbers (deterministic mode is more important)

- Ensure gpu-reco-workflow with ITS enabled are the same that one gets from its-reco-workflow --gpu

-

10:50

→

10:55

System Run Coordination Topics 5mSpeaker: Ernst Hellbar (CERN)

-

10:00

→

10:20