US ATLAS Computing Facility (Possible Topical)

Facilities Team Google Drive Folder

Zoom information

Meeting ID: 993 2967 7148

Meeting password: 452400

Invite link: https://umich.zoom.us/j/99329677148?pwd=c29ObEdCak9wbFBWY2F2Rlo4cFJ6UT09

-

-

13:00

→

13:05

WBS 2.3 Facility Management News 5m

Speakers: Alexei Klimentov (Brookhaven National Laboratory (US)), Dr Shawn Mc Kee (University of Michigan (US))

Quarterly reporting is due at the end of the week (Oct 24).

- Missing 2.3.2, 2.3.2.3, 2.3.4.1 (BNL) and all 2.3.5 sections

- Please get them in ASAP

Work on next CA continues

- For WBS 2.3, we need to update the Basis-of-Estimate (BOE) for the next CA submission

- Updates needed for each Tier-2, WBS 2.3.4 (NSF funded parts), WBS 2.3.5 (NSF funded parts)

- Look for emails from Shawn requesting updated text and confirmation of NSF funded effort shortly

Please check/verify milestones https://docs.google.com/spreadsheets/d/1FkVDqLh_5PaHQDP-bfefBJ-PloIfD7LLw3sbP_vTgB0/edit?gid=1361093330#gid=1361093330

Discussion today on live compiling GPU code on every job

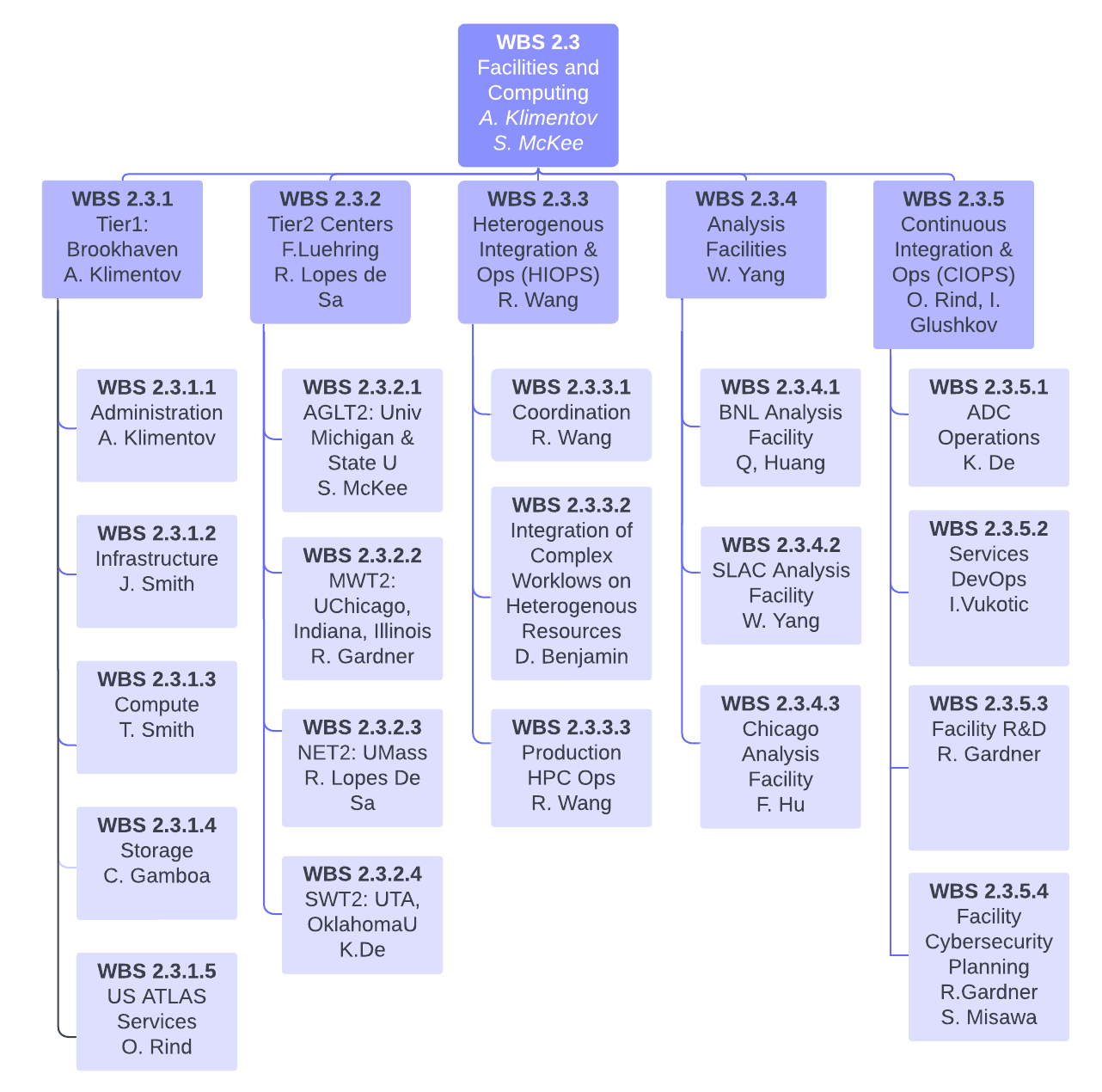

Below is our updated WBS 2.3 Organigram

-

13:05

→

13:10

OSG-LHC 5m

Speakers: Brian Hua Lin (University of Wisconsin), Matyas Selmeci

- OSG 25 released yesterday!

- https://osg-htc.org/docs/release/osg-25/

- Adds EL10 support, updated versions of HTCondor + HTCondor-CE

- Note that there are many packages missing from EPEL 10.0

- Container images are on the way -- we're basing them on EL9

- XRootD 5.9.0 is available in testing repos

- gfal2: the plan on building for EL10

- Do any US ATLAS sites support JLab / EIC / CLAS12?

-

13:05

→

13:45

WBS 2.3.1: Tier1 Center

Convener: Alexei Klimentov (Brookhaven National Laboratory (US))

-

13:05

ADC survey: GPU code installation 10m

ADC is surveying sites about GPU code installation (e.g., nvcc) on WNs and GPU nodes used for GPU queues. Do they want to live-compile GPU code with every job?

Question about the efficiency of live compilation and the waste of limited GPU resources.

JD: Jobs are still trying to access the CVMFS Projects repo outside of CERN

It will be discussed at today’s WBS 2.3 meeting

Speakers: Costin Caramarcu, John Steven De Stefano Jr (Brookhaven National Laboratory (US))
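As a rough illustration of the kind of information the survey asks for, a site could probe a worker node for nvcc as sketched below. This is a minimal sketch, not an ADC-provided tool: the function names and the shape of the returned dictionary are assumptions made for this example, and it only reports whether nvcc is on the PATH and which CUDA release it announces.

```python
import re
import shutil
import subprocess


def parse_nvcc_release(version_output):
    """Extract the CUDA release (e.g. '12.2') from `nvcc --version` text."""
    match = re.search(r"release\s+(\d+\.\d+)", version_output)
    return match.group(1) if match else None


def probe_nvcc():
    """Report where nvcc lives on this node and which release it announces.

    Returns a dict with hypothetical keys 'nvcc_path' and 'cuda_release';
    both are None when nvcc is not found on the PATH.
    """
    path = shutil.which("nvcc")
    if path is None:
        return {"nvcc_path": None, "cuda_release": None}
    try:
        result = subprocess.run(
            [path, "--version"], capture_output=True, text=True, timeout=30
        )
        output = result.stdout
    except (OSError, subprocess.TimeoutExpired):
        output = ""  # nvcc exists but could not be run; release stays unknown
    return {"nvcc_path": path, "cuda_release": parse_nvcc_release(output)}


if __name__ == "__main__":
    print(probe_nvcc())
```

Running this once per node type answers the installation question without compiling anything; it says nothing about the cost of compiling inside each job.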

13:15

Tier-1 Infrastructure 5m

Speaker: Jason Smith

-

13:20

Compute Farm 15m

Speaker: Thomas Smith

- Brief VM interruption yesterday (21 Oct) on a subset of VMs affected the atlas T1 Condor central manager

- No real impact, the pool is resilient to brief interruptions in CM activity, CEs continue to schedule and run jobs

- CEs (gridgk03,4,6,7) were unaffected

- Operations for the past week have been completely smooth, even with this event

- Looking at condor_chirp, so job classad attributes can be modified on running jobs on the fly

- condor_chirp is available, I've successfully tested it on my own

- Located in a non-standard place, as it is not meant to be run on the command line but invoked from within running jobs

- /usr/libexec/condor/condor_chirp

- May not be in the PATH, so keep this in mind if you wish to use it
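A minimal sketch of how a running job might call condor_chirp, given the PATH caveat above. It assumes the job was submitted with the chirp I/O proxy enabled (typically `+WantIOProxy = true` in the submit file); the attribute name `MyJobStage` and all helper names are hypothetical and chosen only for illustration.

```python
import os
import shutil
import subprocess

# Location quoted in the notes; used only when condor_chirp is not on the PATH.
DEFAULT_CHIRP = "/usr/libexec/condor/condor_chirp"


def find_chirp():
    """Return a usable condor_chirp path, or None if none is found."""
    on_path = shutil.which("condor_chirp")
    if on_path:
        return on_path
    return DEFAULT_CHIRP if os.access(DEFAULT_CHIRP, os.X_OK) else None


def classad_literal(value):
    """Render a Python value as a ClassAd literal (strings must be quoted)."""
    if isinstance(value, bool):
        return "true" if value else "false"
    if isinstance(value, (int, float)):
        return repr(value)
    escaped = str(value).replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'


def set_job_attr_cmd(chirp, name, value):
    """Build the argument list for `condor_chirp set_job_attr NAME VALUE`."""
    return [chirp, "set_job_attr", name, classad_literal(value)]


def set_job_attr(name, value):
    """Update an attribute of the current job's ClassAd from inside the job."""
    chirp = find_chirp()
    if chirp is None:
        raise FileNotFoundError("condor_chirp not found on PATH or in libexec")
    subprocess.run(set_job_attr_cmd(chirp, name, value), check=True)


if __name__ == "__main__":
    # Hypothetical attribute; only meaningful inside a running HTCondor job.
    set_job_attr("MyJobStage", "merge")
```

The fallback to the libexec path keeps the job working whether or not the site adds condor_chirp to the PATH.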

-

13:35

Storage 5m

Speaker: Carlos Fernando Gamboa (Brookhaven National Laboratory (US))

-

13:40

Tier1 Operations and Monitoring 5m

Speaker: Ofer Rind (Brookhaven National Laboratory)

-

13:30

→

13:40

WBS 2.3.2 Tier2 Centers

Updates on US Tier-2 centers

Conveners: Fred Luehring (Indiana University (US)), Rafael Coelho Lopes De Sa (University of Massachusetts (US))

- Good running in the last two weeks.

- Minor planned and unplanned disruptions at MWT2, NET2, and CPB

- Another fiber break has knocked out TW-FTT's connection again this week.

- Almost done with the Tier 2 reporting.

- Given how busy people are now, I (Fred) propose pushing the equipment discussion off to November.

- I did not consult with Rafael on this proposal.

-

13:40

→

13:50

WBS 2.3.3 Heterogeneous Integration and Operations

HIOPS

Convener: Rui Wang (Argonne National Laboratory (US))

-

13:40

HPC Operations 5m

Speaker: Rui Wang (Argonne National Laboratory (US))

TACC: Finished allocation on Monday, brought UCORE queue offline

- Tested MC/Track Overlay, RawToAll, RDOtoRDOTrig production

Perlmutter: ~8%/32% CPU/GPU allocation remains, stable

- GPU usage is still low

ACCESS: Explorer allocation extended to Oct 2026

Doug & Rob are putting up a note on the Overlay cluster setup

-

13:45

Integration of Complex Workflows on Heterogeneous Resources 5m

Speaker: Doug Benjamin (Brookhaven National Laboratory (US))

-

13:50

→

14:10

WBS 2.3.4 Analysis Facilities

Convener: Wei Yang (SLAC National Accelerator Laboratory (US))

-

13:50

Analysis Facilities - BNL 5m

Speaker: Qiulan Huang (Brookhaven National Laboratory (US))

-

13:55

Analysis Facilities - SLAC 5m

Speaker: Wei Yang (SLAC National Accelerator Laboratory (US))

-

14:00

Analysis Facilities - Chicago 5m

Speaker: Fengping Hu (University of Chicago (US))

- /data Access Issues – Experienced access interruptions last week, caused by a combination of high load and OSD pool performance issues. The problem was resolved after addressing laggy placement groups (PGs) and making some adjustments to MDS handling.

- HTCondor Configuration Update – Implemented new restrictions to improve stability. Job submissions from /data and file transfers to/from /data are now disallowed to reduce load on CephFS and prevent scheduler (schedd) disruptions.

- Triton Deployment – Refreshed deployment with an updated server version. Work is in progress to produce user documentation for the updated service.
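The /data restriction on HTCondor submissions can be pictured as a simple path test that a user might run before submitting. The sketch below is an illustration only, not the facility's actual HTCondor configuration; the function names and sample paths are hypothetical, and the check normalizes paths textually without resolving symlinks.

```python
import posixpath
from pathlib import PurePosixPath

# Restricted tree named in the notes.
RESTRICTED_ROOT = PurePosixPath("/data")


def is_under_restricted(path):
    """True if an absolute path is /data itself or anything beneath it."""
    normalized = PurePosixPath(posixpath.normpath(path))
    return normalized == RESTRICTED_ROOT or RESTRICTED_ROOT in normalized.parents


def offending_paths(submit_dir, transfer_files):
    """List the submit directory and transfer files that fall under /data."""
    candidates = [submit_dir, *transfer_files]
    return [p for p in candidates if is_under_restricted(p)]


if __name__ == "__main__":
    # Hypothetical job: submitted from a home directory, one input on /data.
    print(offending_paths("/home/user/job", ["/data/user/in.root", "/home/user/cfg.txt"]))
```

A job with an empty result here neither submits from /data nor transfers files to or from it.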

-

14:10

→

14:30

WBS 2.3.5 Continuous Operations

Conveners: Ivan Glushkov (Brookhaven National Laboratory (US)), Ofer Rind (Brookhaven National Laboratory)

-

14:10

ADC Operations, US Cloud Operations: Site Issues, Tickets & ADC Ops News 5m

Speaker: Kaushik De (University of Texas at Arlington (US))

- Welcome to Yi-Ru (Jennifer) Chen from TW-FTT, who is currently visiting CERN and has been attending ATLAS and US Ops meetings. We are looking forward to having her provide additional ops support for the US Cloud from the Asia time zone!

- Jennifer and the TW-FTT site are also interested in migrating from ARC-CE to HTCondor-CE

-

14:15

Services DevOps 5m

Speaker: Ilija Vukotic (University of Chicago (US))

Analytics

- running out of space on ES cluster.

- as a temporary solution we will add an old storage node to serve as a cold storage.

Caching physics data

- Discussing HTTP proxy testing with Raphael K. (Wuppertal). They will try ATC, nginx, xroot.

- All xcaches now have new certs

Caching conditions

- the new system works stably

- one of the two k8s clusters running Frontier lost connectivity and the whole cluster had to be rebuilt. No impact on operations.

- lxplus migrated off squids.

- Still need to migrate NERSC off the squids

- Still need to get a local Varnish for BNL.

- will be removing backup proxies this week (both Fermilab and CERN)

- will be moving CERN ITS operated Varnishes to k8s.

Caching CVMFS

- UC now has Prometheus monitoring of all the CVMFS clients. A lot of interesting data.

AI

- A lot of small improvements on AF Assistant.

- Now using OpenAI AgentBuilder and ChatKit for frontend.

- Will present tomorrow at ATLASE Scope Kick-off meeting.

-

14:20

Facility R&D 5m

Speaker: Robert William Gardner Jr (University of Chicago (US))

-

14:25

Cybersecurity plan(s) 5m

Speakers: Robert William Gardner Jr (University of Chicago (US)), Shigeki Misawa (Brookhaven National Laboratory (US))

-

14:25

→

14:35

AOB 10m
