Alice Weekly Meeting: Software for Hardware Accelerators

1

Discussion
Speaker: David Rohr (CERN)

Color code: (critical, news from this week: blue, news from last week: purple, no news: black)

Meetings 24.9. / 1.10. / 8.10. cancelled due to absence

CHEP Abstracts: https://indico.cern.ch/event/1471803/abstracts/ Deadline Dec. 19.

- What do we do for GPU? I would like to give an overview talk focusing on GPU usage for offline, highlighting that we could successfully run on GPUs at NERSC. I'd also like to mention the progress on running more detectors on the GPU, but perhaps Felix wants to show the ITS developments anyway?

Sync reconstruction

Async reconstruction

- Need to investigate short GPU stall problem.

- The limiting factor for the pp workflow is now the TPC time series, which is too slow and creates backpressure (costs ~20% performance on EPNs). Enabled multi-threading as recommended by Matthias; need to check if it works.

- New GPU architecture selection for async in O2DPG looks good, should be merged.

- Test with GPU GRID jobs at NERSC pending.

- Asked DPG to run first test with ITS tracking on GPU on EPNs.

GPU ROCm / compiler topics:

- Issues that disappeared but are not yet understood: random server reboot with Alma 9.4, miscompilation with ROCm 6.2, GPU getting stuck when DMA engine turned off, MI100 stalling with ROCm 5.5.

- Problem with building ONNXRuntime with MigraphX support.

- Need to find a way to build ONNXRuntime with support for CUDA and for ROCm.

- Try to find a better solution for the problem with __device__ inline functions leaking symbols in the host code.

- LLVM Bump to 20.1: merged

- Miscompilation / internal compiler error fixed in new clang for ROCm 7.x, SDMA engine synchronization bug still not fixed.

- Compilation errors fixed in ROCm trunk, to go into ROCm 7.

- Validated standalone benchmark in deterministic mode, and ran FST.

- Performance regression of ~1.5% without RTC, no regression with RTC. Reported to AMD. Not clear if this will be fixed.

- Serialization bug on MI100 still not fixed, still requires the workaround.

TPC / GPU Processing

- WIP: Use alignas() or find a better solution to fix the alignment of Monte Carlo labels: https://its.cern.ch/jira/browse/O2-5314

- Waiting for TPC to fix bogus TPC transformations for good, then we can revert the workaround.

- Waiting for TPC to check PR which uses full cluster errors including average charge and occupancy map errors during seeding.

- Final solution: merging transformation maps on the fly into a single flat object:

  - Compilation issues on GPU fixed.

  - Matthias did some checks and found two minor bugs, to be fixed by Sergey.

  - Speed seems OK: 0.25 s for merging 2 maps.

- Pending OpenCL2 issues:

  - printf not working due to a confirmed bug in clang; a fix is being prepared. This prevents further debugging for now.

  - Crash in the merger, which can be worked around by disabling the clang SPIR-V optimization. Probably a bug in clang, but printf must be fixed first to debug it.

  - Even with optimization disabled, it crashes later in the TPC merging; printf is needed to debug.

- Need to check the problem with ONNX external memory allocator.

- Next high-priority topic: improvements for cluster sharing and cluster attachment at lower TPC pad rows. PR: https://github.com/AliceO2Group/AliceO2/pull/14542

  - PR merged, further developments in https://github.com/AliceO2Group/AliceO2/pull/14651.

- Bug in the multi-threaded pipeline when timeframes do not arrive in order. It has not happened again since https://github.com/AliceO2Group/AliceO2/pull/14640 was merged, so we assume it is fixed.

- gpu-reconstruction quitting with an error in some async jobs due to running out of buffers: the problem was that TPC sector A11 (the broken one) has far fewer clusters. This, together with Ruben's settings for aggressive extrapolation to adjacent sectors, led to too many sector tracks in sector A11, and gpu-reconstruction aborted because the estimated memory size was exceeded. Fixed by taking the occupancy of adjacent sectors into account in the buffer size estimation.
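
To make the failure mode concrete, here is a toy model in Python. It is not the GPUReconstruction memory estimator: the function name, the scaling factor, the neighbour weight and the safety margin are all hypothetical, and it assumes that tracks from the neighbouring sectors are extrapolated into the sparse sector. It only shows why an estimate driven by a sector's own cluster count undershoots for a nearly empty sector, and how adding the adjacent occupancy raises the reservation.

```python
import math
from typing import Sequence

N_SECTORS_PER_SIDE = 18  # the TPC has 18 sectors on each of its A and C sides


def estimate_track_buffer(
    clusters: Sequence[int],
    sector: int,
    include_neighbors: bool,
    tracks_per_cluster: float = 0.01,  # hypothetical scaling factor
    neighbor_weight: float = 0.5,  # hypothetical share of neighbour occupancy extrapolated in
    margin: float = 1.2,  # hypothetical safety margin
) -> int:
    """Toy estimate of how many sector-track slots to reserve for `sector`.

    `clusters` holds the cluster counts of the sectors on one side; the index
    wraps around because the sectors of one side form a ring.
    """
    n = len(clusters)
    effective = float(clusters[sector])
    if include_neighbors:
        left = clusters[(sector - 1) % n]
        right = clusters[(sector + 1) % n]
        # Tracks extrapolated in from adjacent sectors scale with *their* occupancy,
        # not with the (possibly very low) occupancy of this sector.
        effective += neighbor_weight * (left + right)
    return math.ceil(margin * tracks_per_cluster * effective)


# One side with a nearly empty sector 11, loosely mimicking the broken A11.
a_side = [100_000] * N_SECTORS_PER_SIDE
a_side[11] = 10_000

own_only = estimate_track_buffer(a_side, 11, include_neighbors=False)
with_neighbors = estimate_track_buffer(a_side, 11, include_neighbors=True)
print(own_only, with_neighbors)
```

With these made-up numbers the own-occupancy estimate for the sparse sector is about ten times smaller than the neighbour-aware one, which is the kind of gap that exhausted the buffer.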

EPN GPU Topics:

2

TPC ML Clustering
Speaker: Christian Sonnabend (CERN, Heidelberg University (DE))

Commissioning at P2

- After bug fix: successful runs at P2 with all algorithmic approaches. Some stats from the logs (tRateLimit = 240, 500 kHz pp replay):

  - Run without backpressure and CF regression, threshold 0.03:

    - MI50 (nEPNs = 71): 2.652 ± 0.001 TF/s/EPN

    - MI100 (nEPNs = 45): 2.997 ± 0.003 TF/s/EPN

  - Run with backpressure and NN regression, threshold 0.03:

    - MI50 (nEPNs = 72): 2.119 ± 0.001 TF/s/EPN

    - MI100 (nEPNs = 44): 2.440 ± 0.002 TF/s/EPN

  - Run without backpressure and NN regression, threshold 0.05:

    - MI50 (nEPNs = 78): 2.175 ± 0.002 TF/s/EPN

    - MI100 (nEPNs = 50): 2.541 ± 0.001 TF/s/EPN

- All runs successful, no memory access faults, no (clusterizer-induced) crashes. With NN regression, a few runs still showed the pad IROC-OROC out-of-bounds error (O(20) messages in total). To be investigated, although it is not reproducible in the FST even at high rate, so it might even be legitimate.
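
For a quick comparison, the per-EPN rates above can be expressed relative to the CF-regression run. The run labels in the dictionary are shorthand introduced here. The comparison is not like-for-like (the 0.03 NN run had backpressure enabled, and the thresholds differ), so the percentages are only an indication of the cost of the NN clusterizer.

```python
# TF/s per EPN as quoted above (tRateLimit = 240, 500 kHz pp replay).
# Keys: cf/nn = CF or NN regression, bp/nobp = with/without backpressure, then the threshold.
THROUGHPUT = {
    "MI50": {"cf_nobp_0.03": 2.652, "nn_bp_0.03": 2.119, "nn_nobp_0.05": 2.175},
    "MI100": {"cf_nobp_0.03": 2.997, "nn_bp_0.03": 2.440, "nn_nobp_0.05": 2.541},
}
BASELINE = "cf_nobp_0.03"


def relative_change(value: float, baseline: float) -> float:
    """Percentage change of `value` with respect to `baseline`."""
    return 100.0 * (value / baseline - 1.0)


rel = {
    gpu: {
        run: relative_change(rate, runs[BASELINE])
        for run, rate in runs.items()
        if run != BASELINE
    }
    for gpu, runs in THROUGHPUT.items()
}

for gpu, changes in rel.items():
    for run, pct in changes.items():
        print(f"{gpu:5s} {run:13s} {pct:+6.1f} %")
```

Per EPN, the NN runs come out roughly 15-20% below the CF baseline on both GPU types.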

Physics

- Discovered a problem in the analysis on real data; fixed and rerunning now.

- Analysis results will follow by the end of the week (if job submission goes according to plan).

- ITS-TPC matching efficiency investigation: Pending. Real data & PID analysis has priority this week.

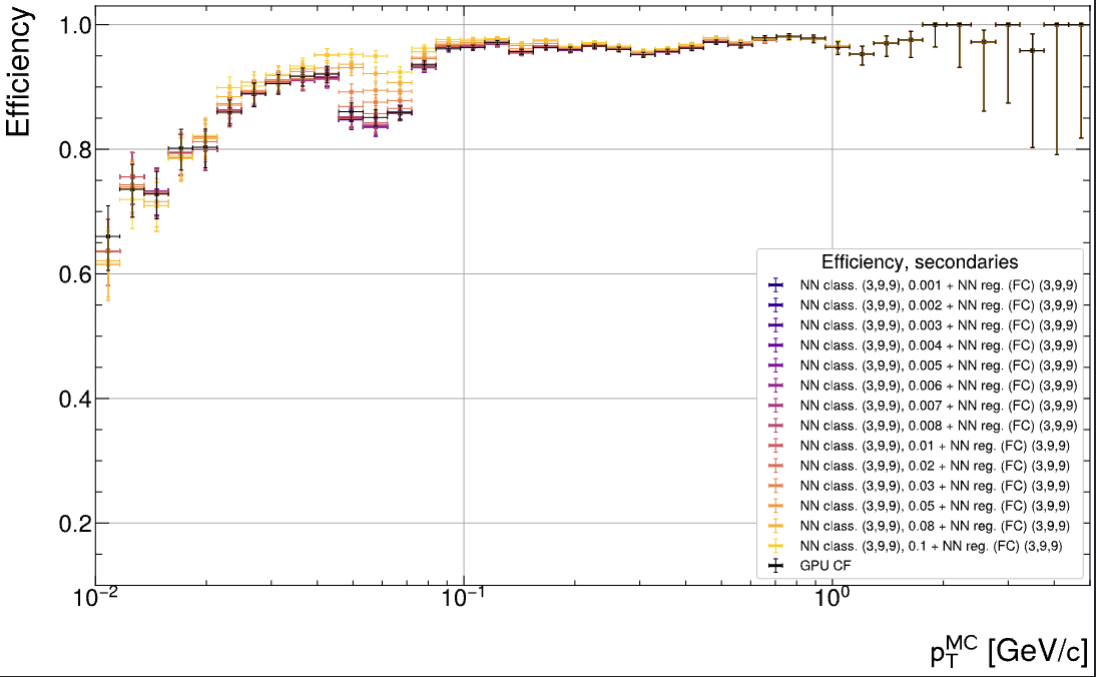

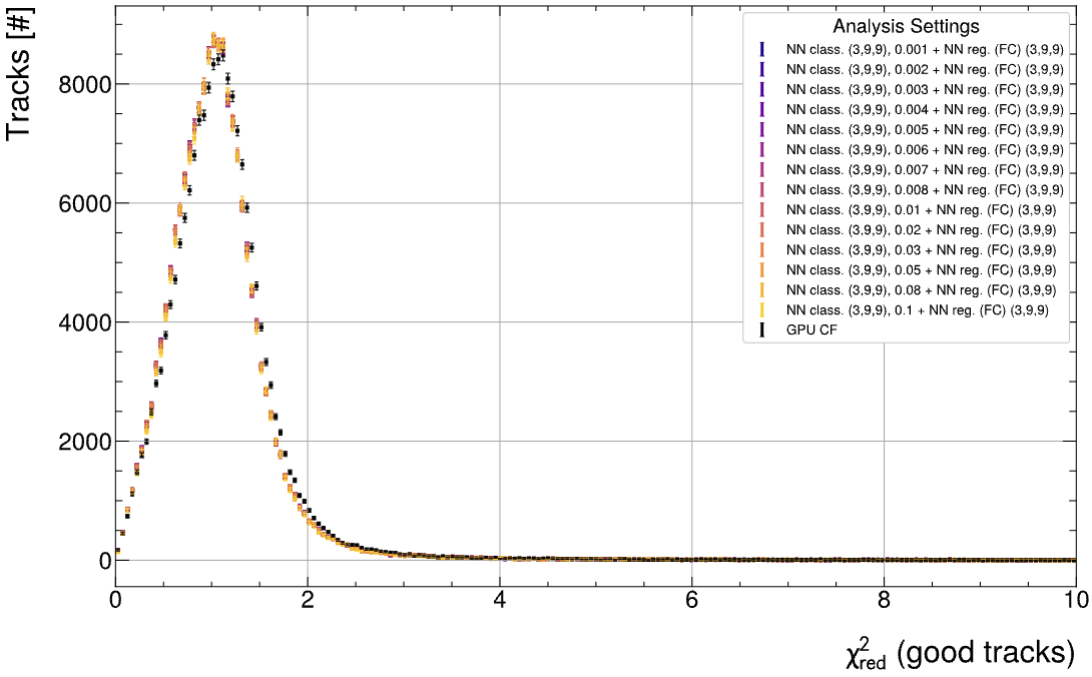

- Sanity check on low-rate pp data: anchored to a reference run, minimum bias, no SC distortions (they are basically negligible for this pp data). Metrics improve or stay unchanged using the networks trained on PbPb 50 kHz. Training on data with or without SC distortions makes no visible difference in performance. Most notable differences: efficiency vs pT for secondaries (first plot) and chi2 for good tracks (second plot). The fake rate stays approximately the same.

Framework

- One more fix needed in O2/dev to run with MC data. https://github.com/AliceO2Group/AliceO2/pull/14677

Otherwise mainly busy writing, correcting, rerunning

3

GPU Parameter Optimizations
Speaker: Gabriele Cimador (CERN, Università and INFN Torino)

News from GPU parameter tuning

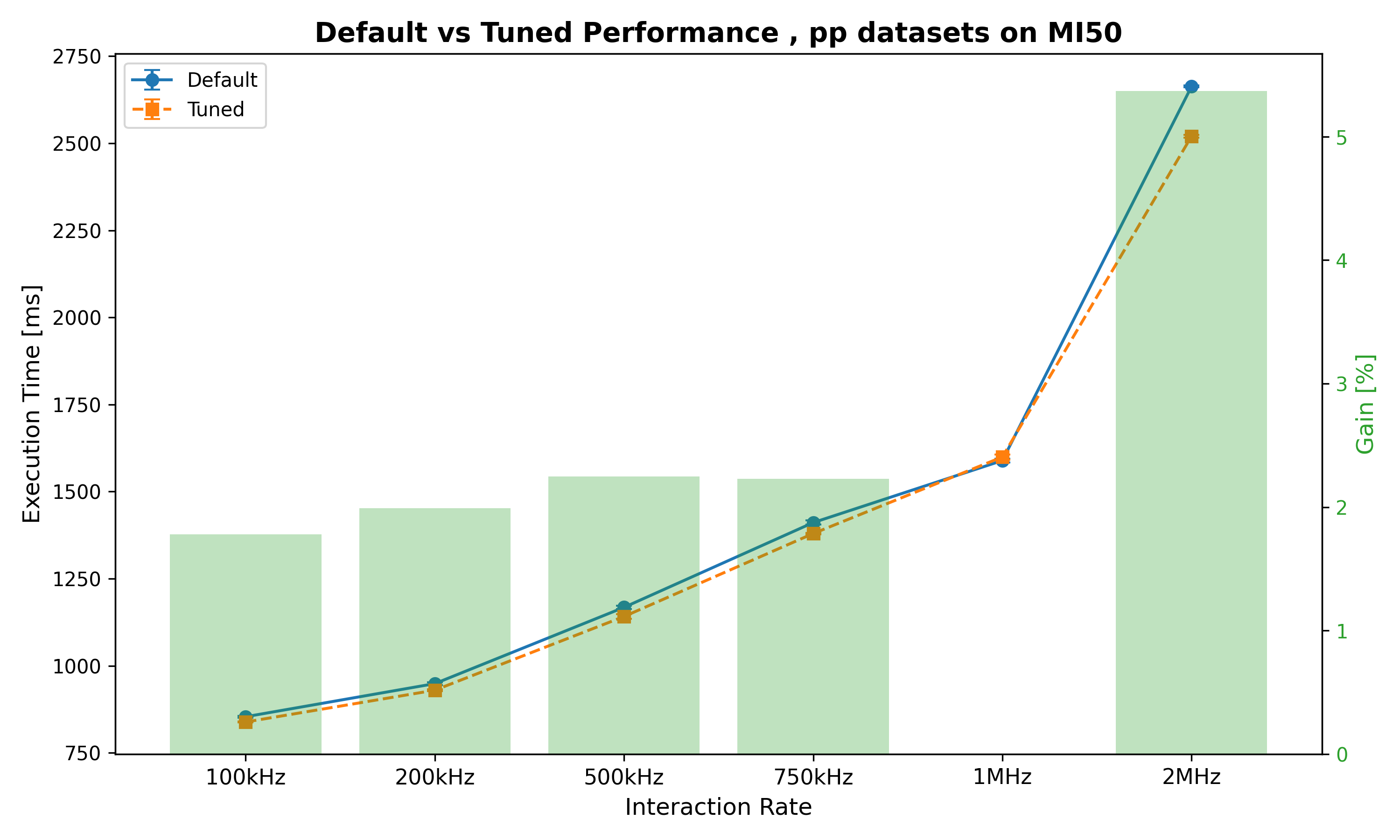

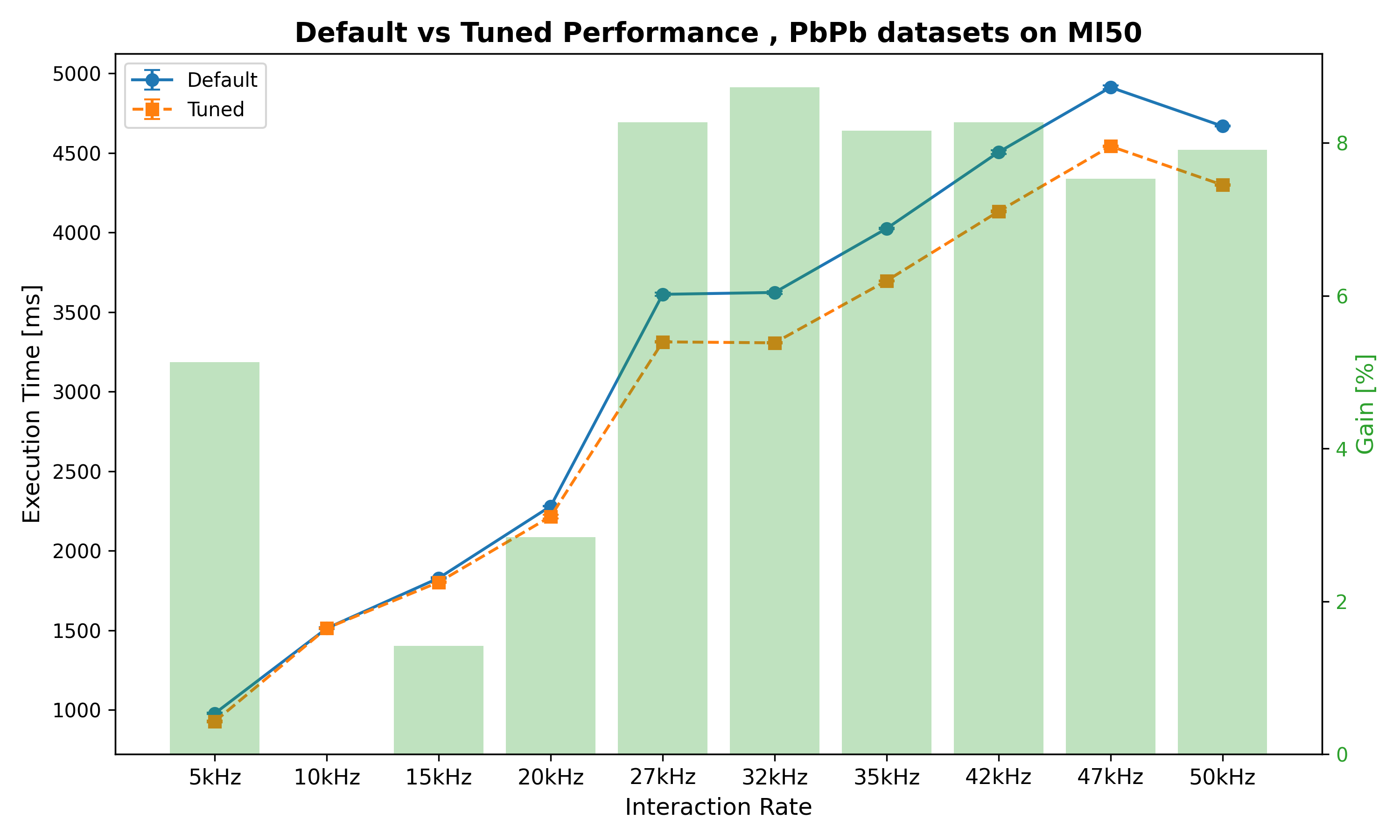

Tested the best parameters for PbPb 50 kHz with other interaction rates (IRs) and beam types:

MI50

pp

| Interaction Rate | Default mean time [ms] | Tuned time [ms] | Gain |
| --- | --- | --- | --- |
| 100kHz | 853.65 ± 2.79 | 838.47 ± 1.02 | 1.78% |
| 200kHz | 948.67 ± 4.06 | 929.81 ± 2.52 | 1.99% |
| 500kHz | 1167.65 ± 4.66 | 1141.34 ± 6.77 | 2.25% |
| 750kHz | 1411.01 ± 5.84 | 1379.58 ± 2.33 | 2.23% |
| 1MHz | 1589.10 ± 5.01 | 1599.41 ± 7.31 | -0.65% |
| 2MHz | 2663.11 ± 2.79 | 2520.22 ± 4.48 | 5.37% |

PbPb

| Interaction Rate | Default mean time [ms] | Tuned time [ms] | Gain |
| --- | --- | --- | --- |
| 5kHz | 978.91 ± 5.20 | 928.67 ± 6.25 | 5.13% |
| 10kHz | 1513.75 ± 5.65 | 1514.12 ± 3.63 | -0.02% |
| 15kHz | 1829.28 ± 3.76 | 1803.34 ± 3.37 | 1.42% |
| 20kHz | 2281.01 ± 0.93 | 2216.18 ± 12.26 | 2.84% |
| 27kHz | 3612.01 ± 10.66 | 3313.26 ± 4.76 | 8.27% |
| 32kHz | 3623.72 ± 9.07 | 3307.26 ± 4.69 | 8.73% |
| 35kHz | 4025.52 ± 4.37 | 3697.15 ± 3.37 | 8.16% |
| 42kHz | 4505.26 ± 11.93 | 4132.51 ± 5.75 | 8.27% |
| 47kHz | 4912.68 ± 11.40 | 4542.61 ± 3.48 | 7.53% |
| 50kHz | 4668.87 ± 3.24 | 4299.42 ± 4.06 | 7.91% |
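
The Gain column is consistent with the relative reduction of the mean processing time, (default - tuned) / default, so a positive gain means the tuned parameters are faster. The snippet below recomputes it for a few rows copied from the MI50 tables as a cross-check.

```python
def gain_percent(default_ms: float, tuned_ms: float) -> float:
    """Relative reduction of the mean time in percent (positive = tuned is faster)."""
    return 100.0 * (default_ms - tuned_ms) / default_ms


# Rows copied from the MI50 tables: (label, default [ms], tuned [ms], quoted gain [%]).
ROWS = [
    ("pp 100kHz", 853.65, 838.47, 1.78),
    ("pp 1MHz", 1589.10, 1599.41, -0.65),
    ("PbPb 27kHz", 3612.01, 3313.26, 8.27),
    ("PbPb 50kHz", 4668.87, 4299.42, 7.91),
]

for label, default_ms, tuned_ms, quoted in ROWS:
    computed = gain_percent(default_ms, tuned_ms)
    print(f"{label:11s} computed {computed:+.2f} %  quoted {quoted:+.2f} %")
```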

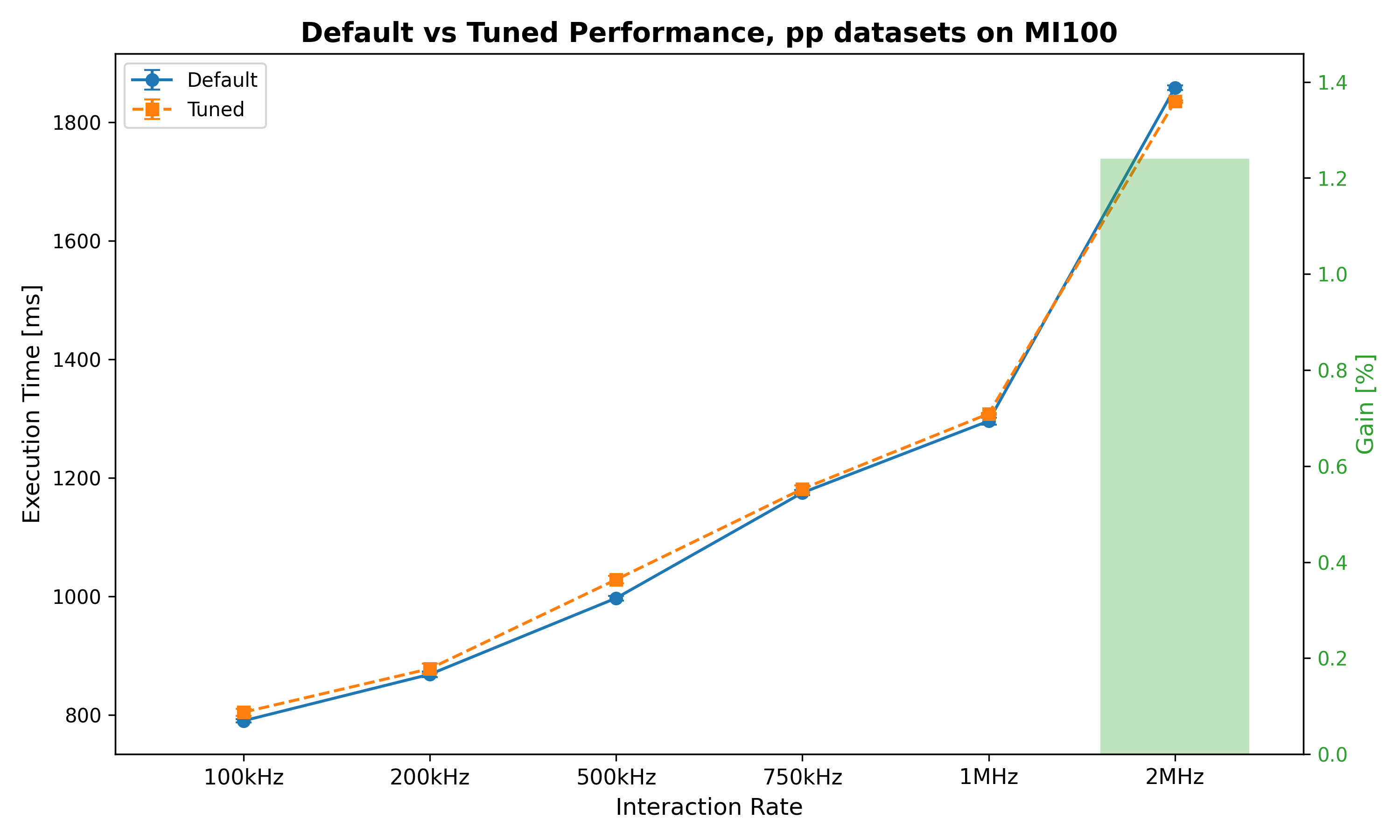

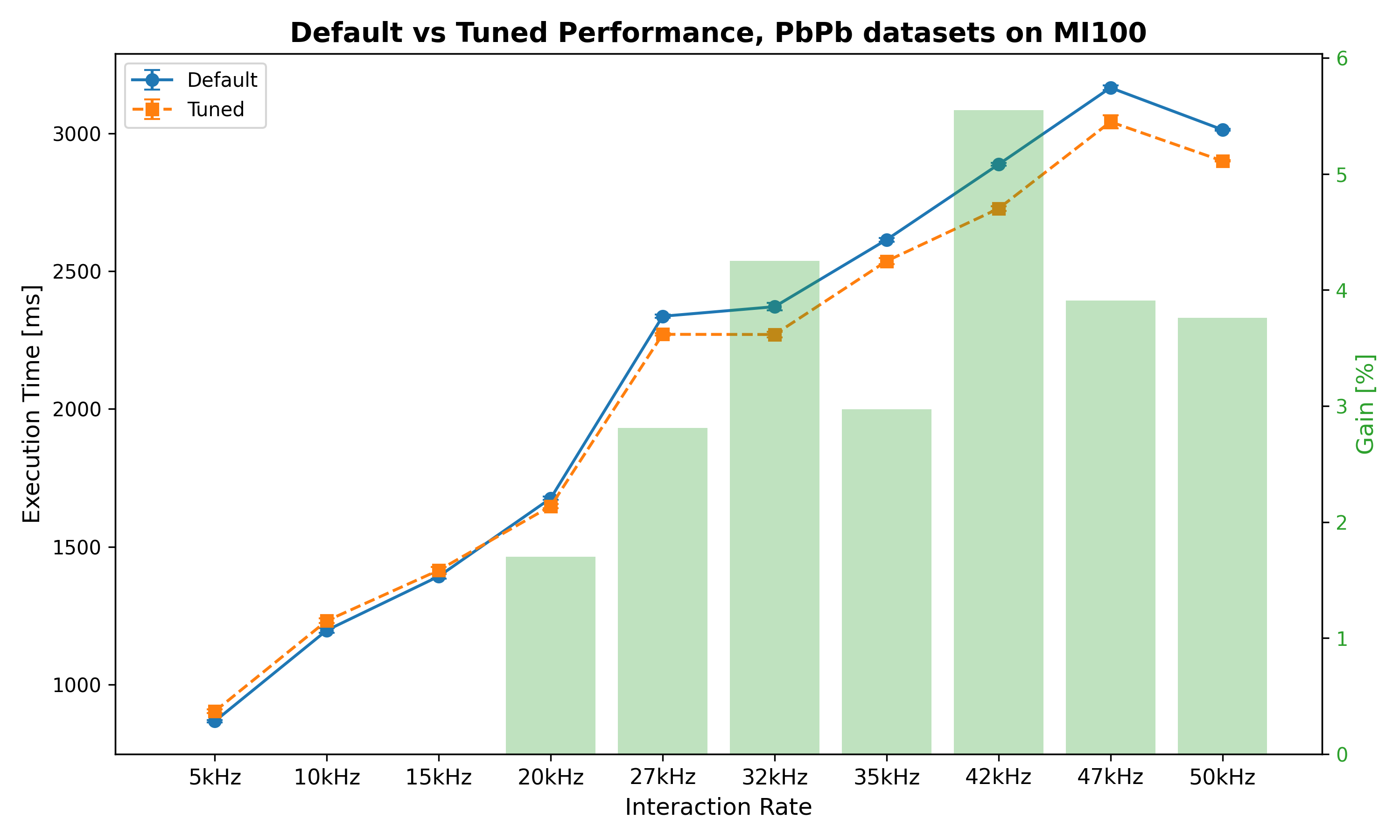

MI100

Also tuned on MI100, and tested the best parameters for PbPb 50 kHz with other IRs and beam types:

pp

| Interaction Rate | Default mean time [ms] | Tuned time [ms] | Gain |
| --- | --- | --- | --- |
| 100kHz | 790.20 ± 2.69 | 804.62 ± 5.87 | -1.82% |
| 200kHz | 868.61 ± 4.93 | 878.17 ± 8.26 | -1.10% |
| 500kHz | 997.19 ± 3.98 | 1028.51 ± 6.37 | -3.14% |
| 750kHz | 1175.06 ± 4.23 | 1181.46 ± 6.34 | -0.54% |
| 1MHz | 1296.13 ± 5.85 | 1308.41 ± 1.59 | -0.95% |
| 2MHz | 1858.52 ± 3.77 | 1835.43 ± 2.23 | 1.24% |

PbPb

| Interaction Rate | Default mean time [ms] | Tuned time [ms] | Gain |
| --- | --- | --- | --- |
| 5kHz | 867.53 ± 4.21 | 903.62 ± 6.35 | -4.16% |
| 10kHz | 1197.34 ± 8.60 | 1231.92 ± 8.03 | -2.89% |
| 15kHz | 1393.01 ± 8.96 | 1415.13 ± 11.16 | -1.59% |
| 20kHz | 1675.92 ± 5.72 | 1647.40 ± 7.74 | 1.70% |
| 27kHz | 2336.33 ± 5.64 | 2270.74 ± 4.78 | 2.81% |
| 32kHz | 2370.99 ± 13.98 | 2270.10 ± 10.50 | 4.25% |
| 35kHz | 2613.92 ± 6.63 | 2536.30 ± 10.28 | 2.97% |
| 42kHz | 2886.99 ± 4.88 | 2726.75 ± 8.61 | 5.55% |
| 47kHz | 3165.24 ± 7.95 | 3041.40 ± 23.87 | 3.91% |
| 50kHz | 3012.92 ± 3.81 | 2899.64 ± 2.33 | 3.76% |
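
Several MI100 gains are small, so it may help to compare them with the quoted uncertainties. The sketch below assumes that the ± values are independent standard errors (the minutes do not say how they were obtained), adds them in quadrature, and expresses the time difference in units of that combined uncertainty.

```python
import math


def gain_significance(default_ms: float, default_err: float,
                      tuned_ms: float, tuned_err: float) -> float:
    """(default - tuned) in units of the combined uncertainty.

    Assumes the quoted "±" values are independent standard errors.
    """
    return (default_ms - tuned_ms) / math.hypot(default_err, tuned_err)


# MI100 rows copied from the tables: (label, default, error, tuned, error), all in ms.
ROWS = [
    ("pp 750kHz", 1175.06, 4.23, 1181.46, 6.34),
    ("pp 500kHz", 997.19, 3.98, 1028.51, 6.37),
    ("PbPb 42kHz", 2886.99, 4.88, 2726.75, 8.61),
]

z = {label: gain_significance(d, d_err, t, t_err) for label, d, d_err, t, t_err in ROWS}
for label, value in z.items():
    print(f"{label:10s} {value:+6.1f} sigma")
```

Under this assumption the pp 750 kHz loss (about -0.8 sigma) is compatible with no change, while the pp 500 kHz slowdown (about -4.2 sigma) and the PbPb 42 kHz gain (about +16 sigma) are well beyond the spread.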

ToDos:

- Investigate why high grid sizes are preferred

- Investigate why the MI100 tuning is not as effective as the MI50 one for other datasets

- Make it work for NVIDIA cards

ALICE contribution to HS23

- Meeting with Robin tomorrow

4

Efficient Data Structures
Speaker: Dr Oliver Gregor Rietmann (CERN)

5

Following up GPU to-dos
Speaker: Dr Vikas Singhal (Department of Atomic Energy (IN))

6

TPC Clusterization / OpenCL / Highly Ionizing Particles
Speaker: Felix Weiglhofer (Goethe University Frankfurt (DE))

OpenCL

Crash when compiling ZS decoder

- Submitted reproducer

- Last update from PoCL devs: Not a trivial fix, requires rewriting one step in compiler pipeline

Memory corruption in ZS decoder

- Attempting to reproduce issue in standalone version

- Rewriting the kernel to match the O2 version 1:1 (as far as possible)

New GPU server

Final configuration:

- Dev machine with 64-core Threadripper + RTX 5080 (& MI50)

- New 2U server for alibi, repurpose old alibi to CI GPU machine

The order is with Guy; waiting for an update.

7

ITS Tracking
Speakers: Felix Schlepper (CERN, Heidelberg University (DE)), Gabriele Cimador (CERN, Università and INFN Torino), Matteo Concas (CERN)

ITS reconstruction tests (details below, important points highlighted)

https://its.cern.ch/jira/browse/O2-6264

Test 1: ITS GPU tracking

There is a general problem running pp productions on the EPNs (not related to ITS!). Had to switch to testing the tracking in Pb-Pb, so the test below is at 38 kHz (not what I wanted, but now it is actually a stress test!)

Two things had to be fixed for this to work properly:

- need to give o2-gpu-workflow its reco options https://github.com/AliceO2Group/O2DPG/pull/2119 [merged]

- If we drop the TF due to lack of memory, we print out the exception std::bad_alloc. However, for GRID jobs there is a validation script at the end which explicitly scans the logs for this with a regex and fails the job even if the pipeline/reconstruction is successful, so we now just mask this exception: https://github.com/AliceO2Group/AliceO2/pull/14671 [merged]
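
A toy illustration of this failure mode: a validator that greps the logs for error strings fails a job whose reconstruction succeeded, simply because a dropped TF printed std::bad_alloc. The patterns and log lines are hypothetical; neither the actual GRID validation script nor the change made in the PR above is reproduced here.

```python
import re

# Hypothetical error patterns; the real validation script uses its own list.
ERROR_PATTERNS = [re.compile(p) for p in (r"std::bad_alloc", r"Segmentation fault", r"\[FATAL\]")]


def job_passes(log_lines: list[str]) -> bool:
    """Fail as soon as any line matches an error pattern, regardless of the exit status."""
    return not any(p.search(line) for line in log_lines for p in ERROR_PATTERNS)


unmasked = [
    "[INFO] Processing TF 42",
    "[WARN] Dropping TF 42 due to lack of memory: std::bad_alloc",  # TF dropped, reco continues
    "[INFO] Reconstruction finished successfully",
]
masked = [
    "[INFO] Processing TF 42",
    "[WARN] Dropping TF 42 due to lack of memory",  # exception text no longer printed
    "[INFO] Reconstruction finished successfully",
]
print(job_passes(unmasked), job_passes(masked))
```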

Here is the AnalysisQC produced by Igor (thanks!), showing a proxy for the ITS-TPC matching efficiency versus detector occupancy:

Both reconstructions, CPU (inverted colors) and GPU, are overlaid and look basically identical!

Minor differences appear only at very high occupancy, where the lack of statistics (20-minute data chunk) makes the differences in the GPU output visible and enhances them.

But I think we also have to check and compare how many TFs we drop in both (we may need to implement deallocation between iterations; GPU memory is currently only wiped after processing the whole TF)!

- ITS tracking on GPUs, output apass2_its_gpu2 - https://alimonitor.cern.ch/prod/jobs.jsp?t=32616; --> 9y 70d

- ITS tracking on CPUs, output apass2_its_gpu2_ref - https://alimonitor.cern.ch/prod/jobs.jsp?t=32683; --> 12y 179d

If we can take the timings on MonALISA seriously, then processing the whole production is 36% faster!

But maybe a more in-depth analysis is needed?
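
The 36% figure can be reproduced from the MonALISA totals, assuming they count 365-day years; the same numbers also give the fraction of total time saved.

```python
def to_days(years: int, days: int) -> int:
    """Convert a 'Ny Md' total into days, assuming 365-day years."""
    return 365 * years + days


gpu_days = to_days(9, 70)  # ITS tracking on GPU (apass2_its_gpu2)
cpu_days = to_days(12, 179)  # ITS tracking on CPU (apass2_its_gpu2_ref)

speedup = cpu_days / gpu_days  # how many times faster the GPU production is
saving = 1.0 - gpu_days / cpu_days  # fraction of the CPU total that is saved
print(f"speed-up {speedup:.3f}x, i.e. {100 * (speedup - 1):.0f}% faster; "
      f"total time reduced by {100 * saving:.0f}%")
```

Both views describe the same measurement: "36% faster" is the ratio of the totals, which corresponds to roughly a quarter less total time for the GPU production.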

Also merged a partial implementation of the GPU seeding vertexing code.

8

System Run Coordination Topics
Speaker: Ernst Hellbar (CERN)
