Alice Weekly Meeting: Software for Hardware Accelerators

-

-

10:00

→

10:20

Discussion 20m Speaker: David Rohr (CERN)

Color code: (critical, news from this week: blue, news from last week: purple, no news: black)

CHEP Abstracts: https://indico.cern.ch/event/1471803/abstracts/ Deadline Dec. 19.

Sync reconstruction

Async reconstruction

- Need to investigate short GPU stall problem.

- Limiting factor for pp workflow is now the TPC time series, which is too slow and creates backpressure (costs ~20% performance on EPNs). Enabled multi-threading as recommended by Matthias - need to check if it works.

- New GPU architecture selection for async in O2DPG looks good, should be merged.

- Test with GPU GRID jobs at NERSC pending.

- Asked DPG to run first test with ITS tracking on GPU on EPNs.

GPU ROCm / compiler topics:

- Issues that disappeared but not yet understood: random server reboot with alma 9.4, miscompilation with ROCm 6.2, GPU getting stuck when DMA engine turned off, MI100 stalling with ROCm 5.5.

- Problem with building ONNXRuntime with MigraphX support.

- Need to find a way to build ONNXRuntime with support for CUDA and for ROCm.

- Try to find a better solution for the problem with __device__ inline functions leaking symbols in the host code.

- Miscompilation / internal compiler error fixed in new clang for ROCm 7.x, SDMA engine synchronization bug still not fixed.

- Serialization bug pending, and with the O2 update it has become more likely to occur. Still hoping AMD will be able to implement a fix.

- Found a new regression in ROCm 7, which miscompiles on MI100 with latest O2 (independent from serialization bug). Miscompilation does not happen on MI50 or on old O2.

TPC / GPU Processing

- WIP: Use alignas() or find a better solution to fix alignment of Monte Carlo labels: https://its.cern.ch/jira/browse/O2-5314

- Waiting for TPC to fix bogus TPC transformations for good, then we can revert the workaround.

- Waiting for TPC to check PR which uses full cluster errors including average charge and occupancy map errors during seeding.

- Final solution: merging transformation maps on the fly into a single flat object:

- Sergey opened a new PR with the fixes and compatibility layer in, currently fails in the CI. Must be fixed, then Matthias can continue commissioning.

- Need to check the problem with ONNX external memory allocator.

- Next high priority topic: Improvements for cluster sharing and cluster attachment at lower TPC pad rows. PR: https://github.com/AliceO2Group/AliceO2/pull/14542

- PR merged, further developments in https://github.com/AliceO2Group/AliceO2/pull/14651, investigating some problems now, not clear if related:

- TPC vdrift and laser calibration issues.

- Crash with low-field data at end of run.

- Large memory usage in pp on the GRID.

- Ruben reported a drop of efficiency above 4 GeV.

- Next iteration of tracking improvements in draft PR: https://github.com/AliceO2Group/AliceO2/pull/14651

- gpu-reconstruction quitting with error in some async jobs due to running out of buffers: Problem was due to TPC Sector A11 (the broken one) having much fewer clusters. This, together with Ruben's settings for aggressive extrapolation to adjacent sectors, led to too many sector tracks in sector A11, and gpu-reconstruction aborted because the estimated memory size was exceeded. Fixed by taking adjacent sector occupancy into account for the buffer size estimation.
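The idea behind the fix can be pictured with a minimal sketch. It assumes a hypothetical estimator that sizes the sector-track buffer from a sector's own cluster count plus a share of the cluster counts of its two neighbours, so a nearly empty sector next to busy ones still gets room for tracks extrapolated into it. The function name, the scaling factors and the toy cluster counts are illustrative assumptions, not the O2 implementation; only the 18-sectors-per-side ring layout is taken from the TPC geometry.

```python
def estimate_sector_track_buffer(clusters_per_sector, sector,
                                 tracks_per_1000_clusters=10,
                                 neighbour_weight=0.5,
                                 min_tracks=0):
    """Toy estimate of how many sector-track slots to reserve for one sector.

    clusters_per_sector holds the cluster counts of one TPC side, treated as
    a ring (the last sector neighbours the first). All factors are
    placeholders chosen for illustration, not values used in O2.
    """
    n = len(clusters_per_sector)
    own = clusters_per_sector[sector]
    left = clusters_per_sector[(sector - 1) % n]
    right = clusters_per_sector[(sector + 1) % n]
    # Tracks can be extrapolated in from adjacent sectors, so their
    # occupancy contributes to the estimate as well.
    effective_clusters = own + neighbour_weight * (left + right)
    return max(min_tracks, int(effective_clusters * tracks_per_1000_clusters / 1000))


# Toy occupancy: 18 sectors on one side, sector 11 has far fewer clusters.
counts = [100_000] * 18
counts[11] = 5_000

own_only = estimate_sector_track_buffer(counts, 11, neighbour_weight=0.0)
with_neighbours = estimate_sector_track_buffer(counts, 11)
print(own_only, with_neighbours)
```

With the neighbour term switched off, the sparse sector gets a buffer sized only for its own few clusters, which is the situation that caused the abort; with it switched on, the estimate follows the occupancy of the surrounding sectors.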

EPN GPU Topics:

-

10:20

→

10:25

TPC ML Clustering 5m Speaker: Christian Sonnabend (CERN, Heidelberg University (DE))

Real data reconstruction

- Used LHC24ar, apass2

- Full chain done for the following configurations:

- GPU CF (default reco)

- NN 0.03, CF regression

- NN 0.03, full

- NN 0.05, full

- NN 0.1 full

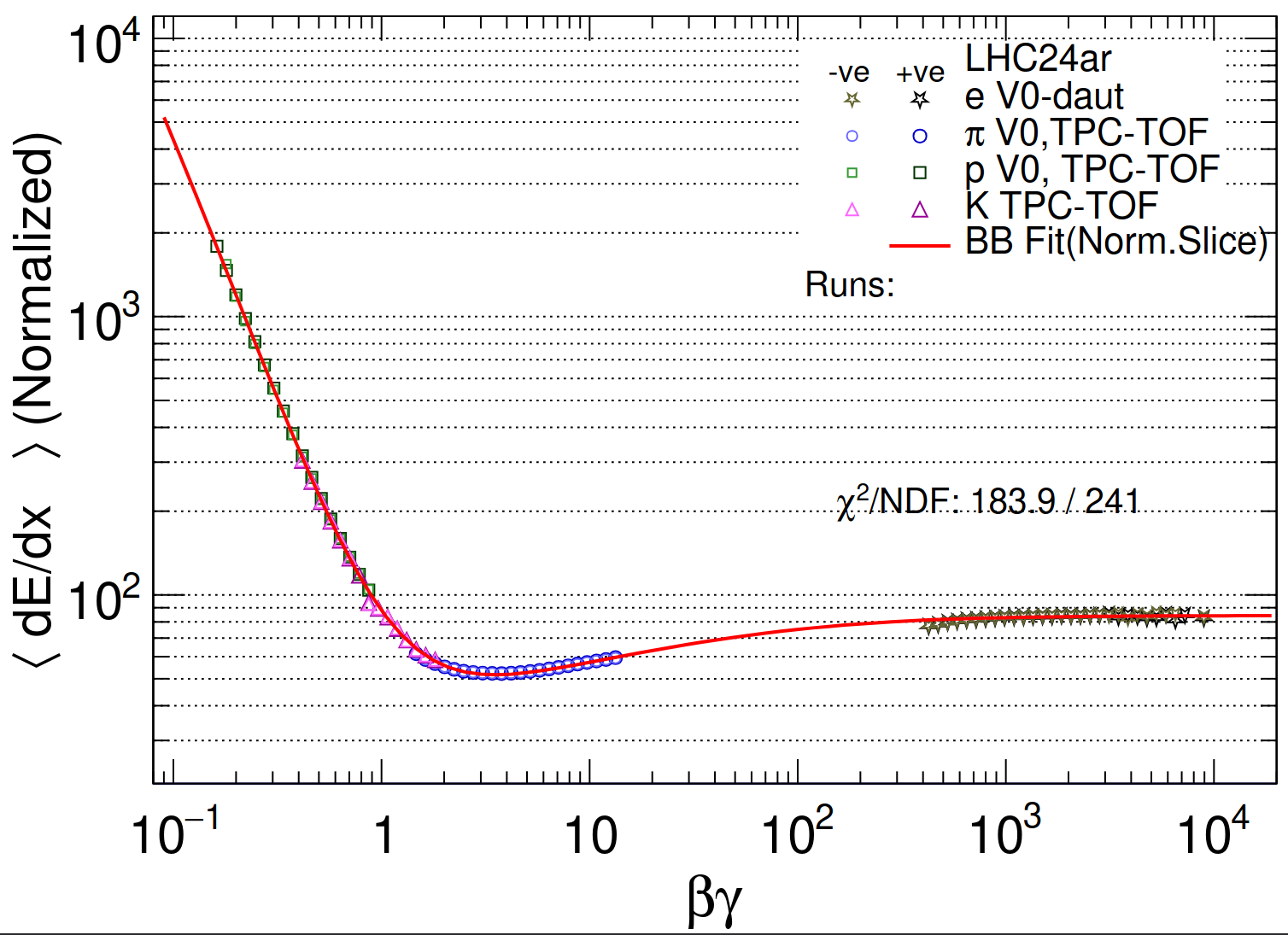

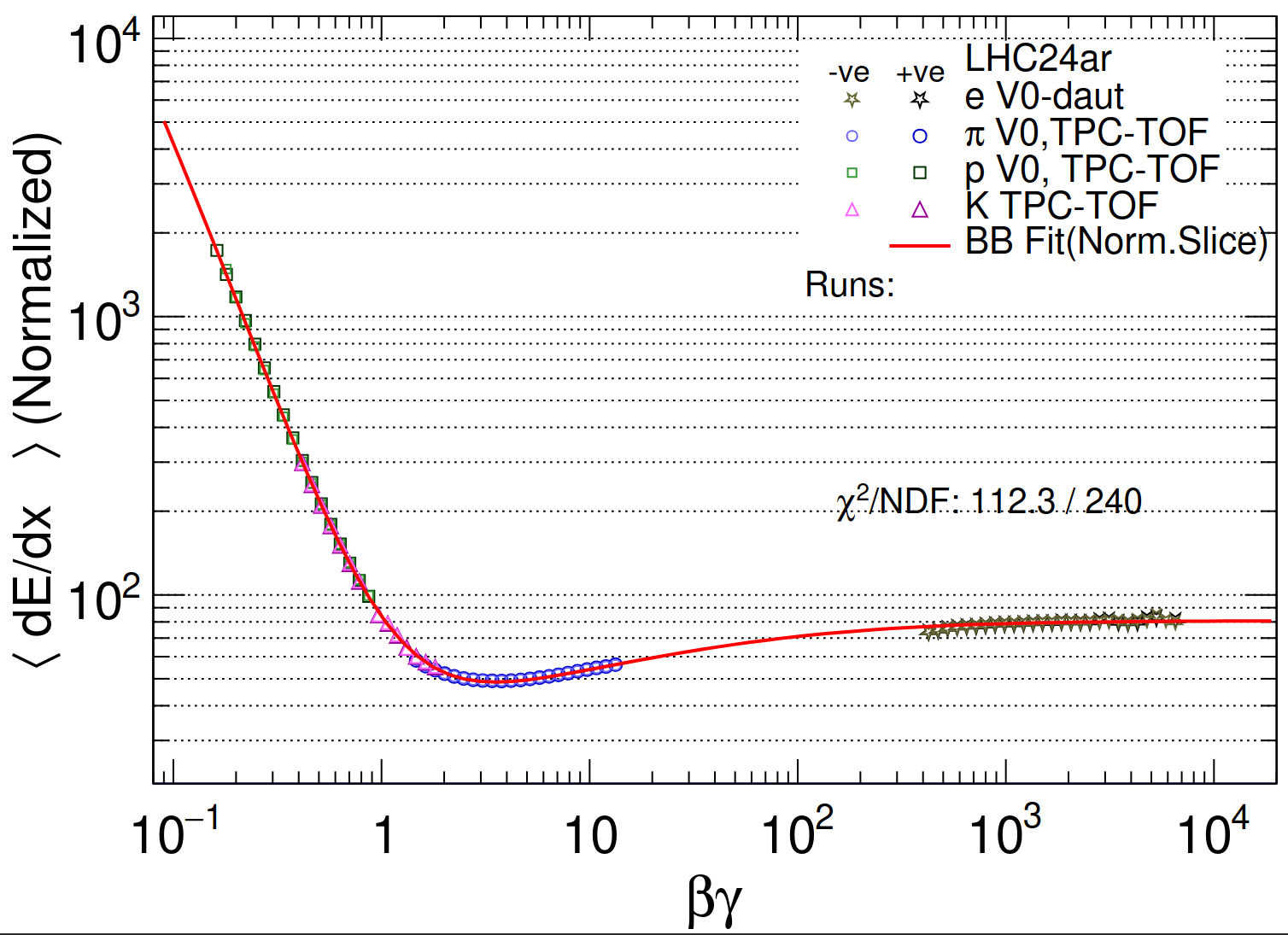

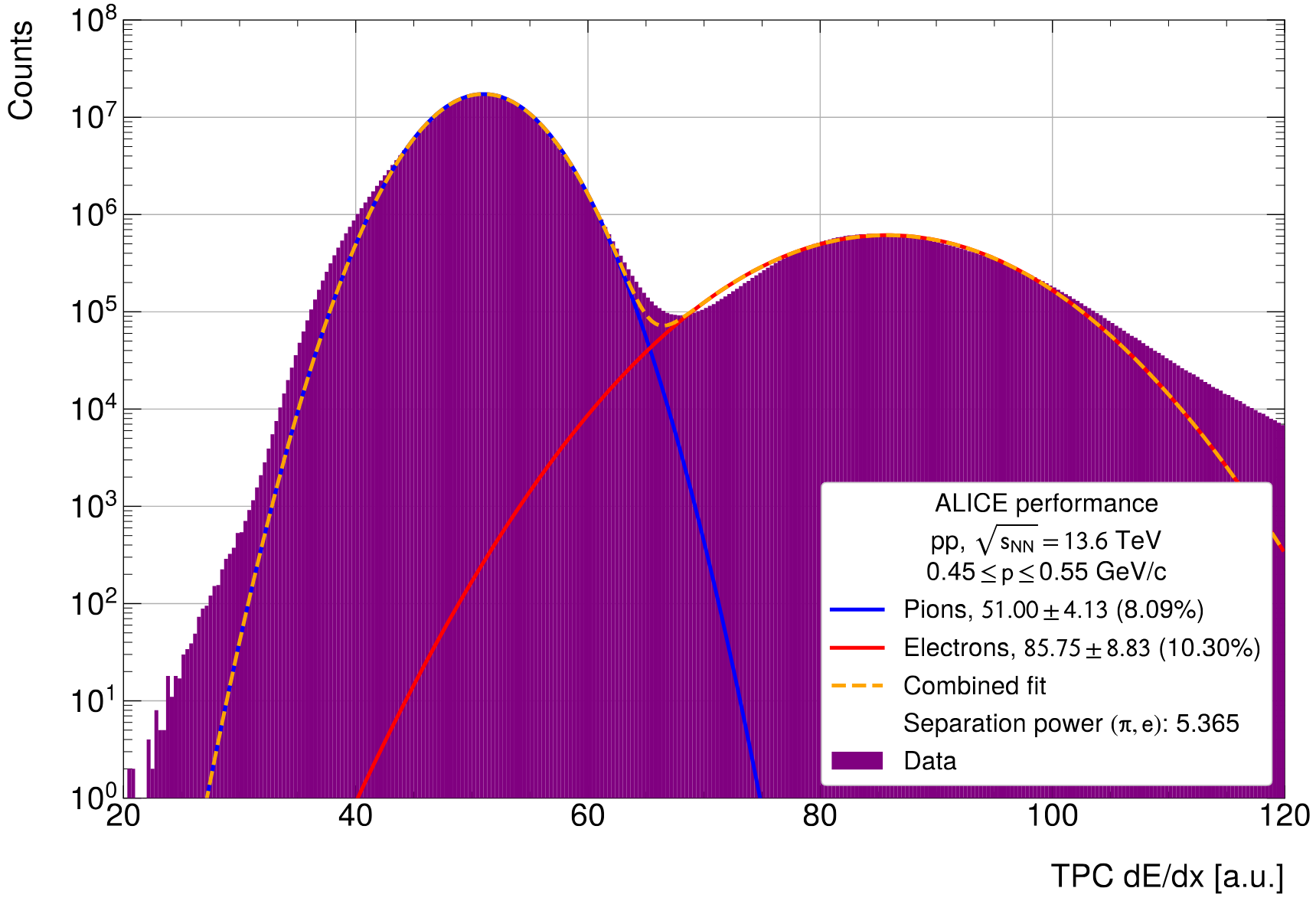

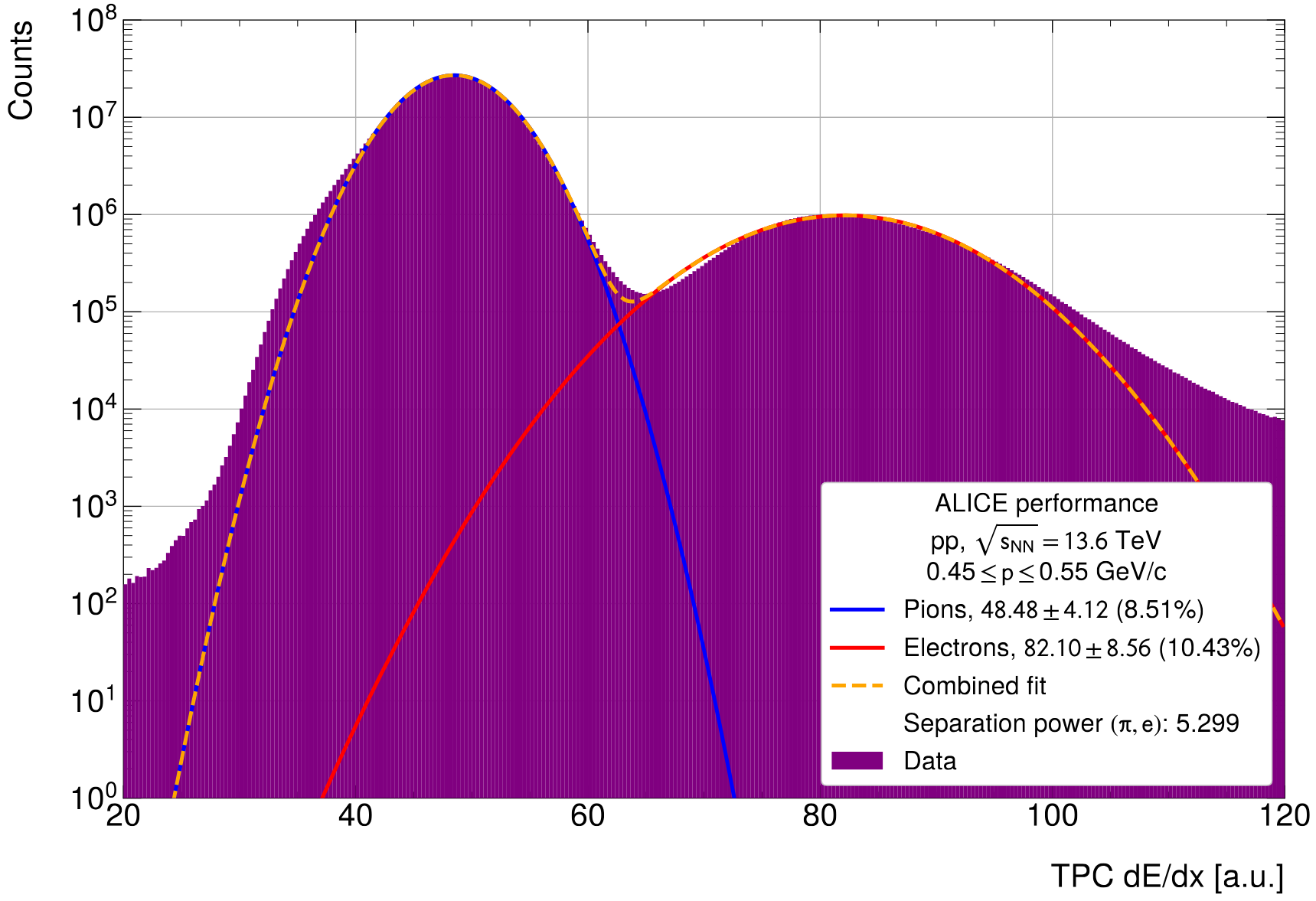

PID calibration

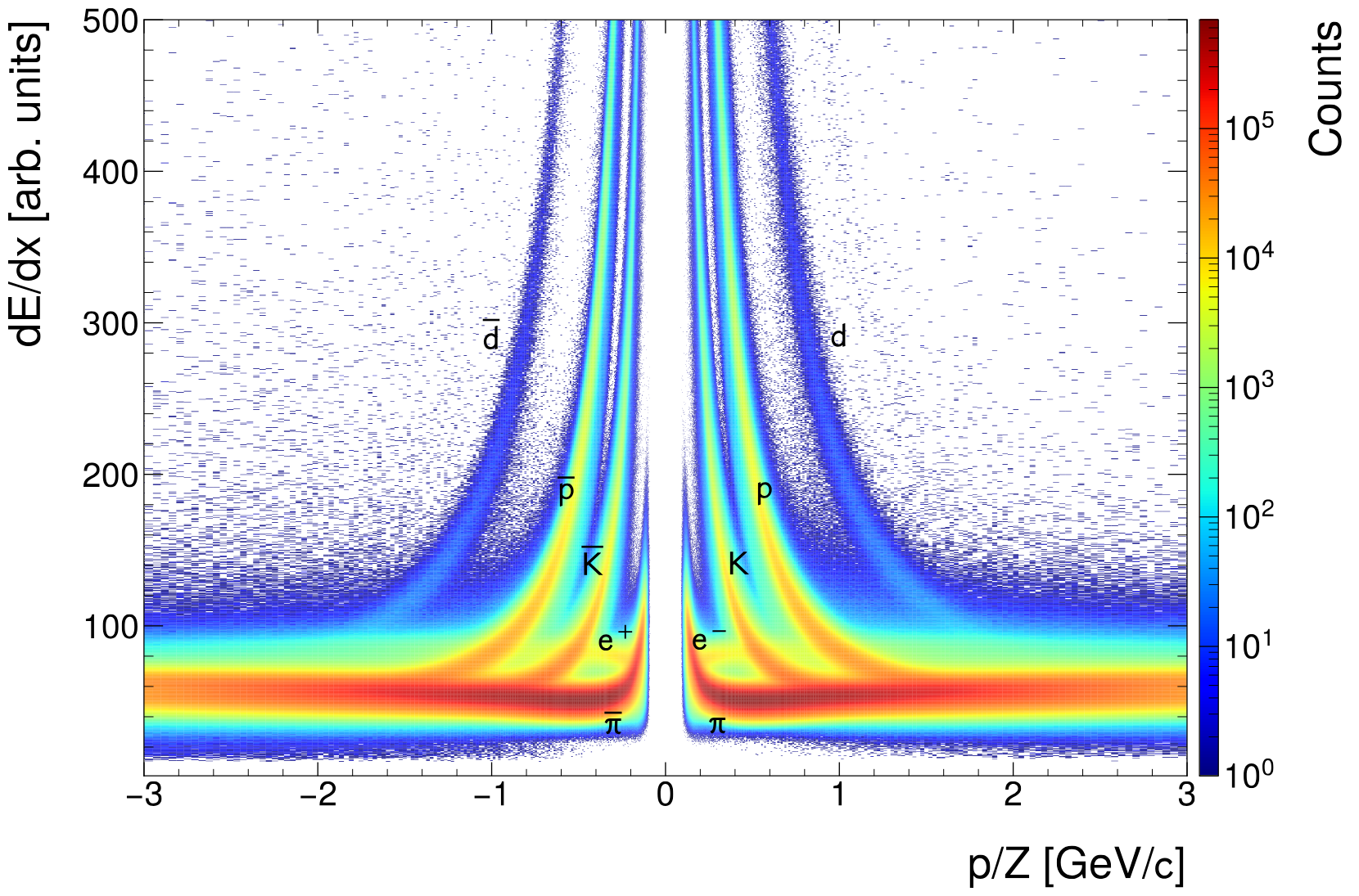

- Successful for all periods, quality maintained (left: NN, right: heuristic)

- With NN PID calibration

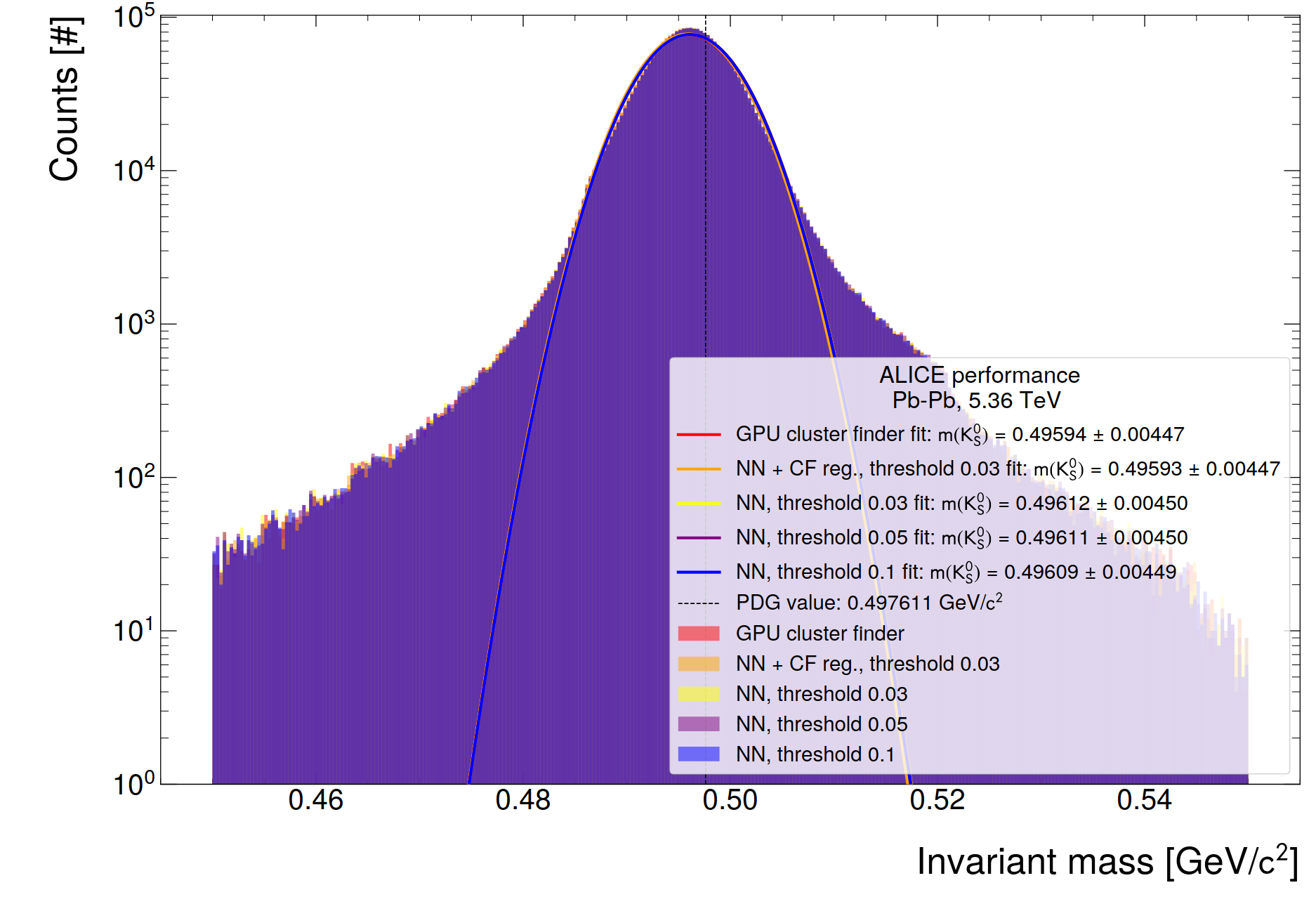

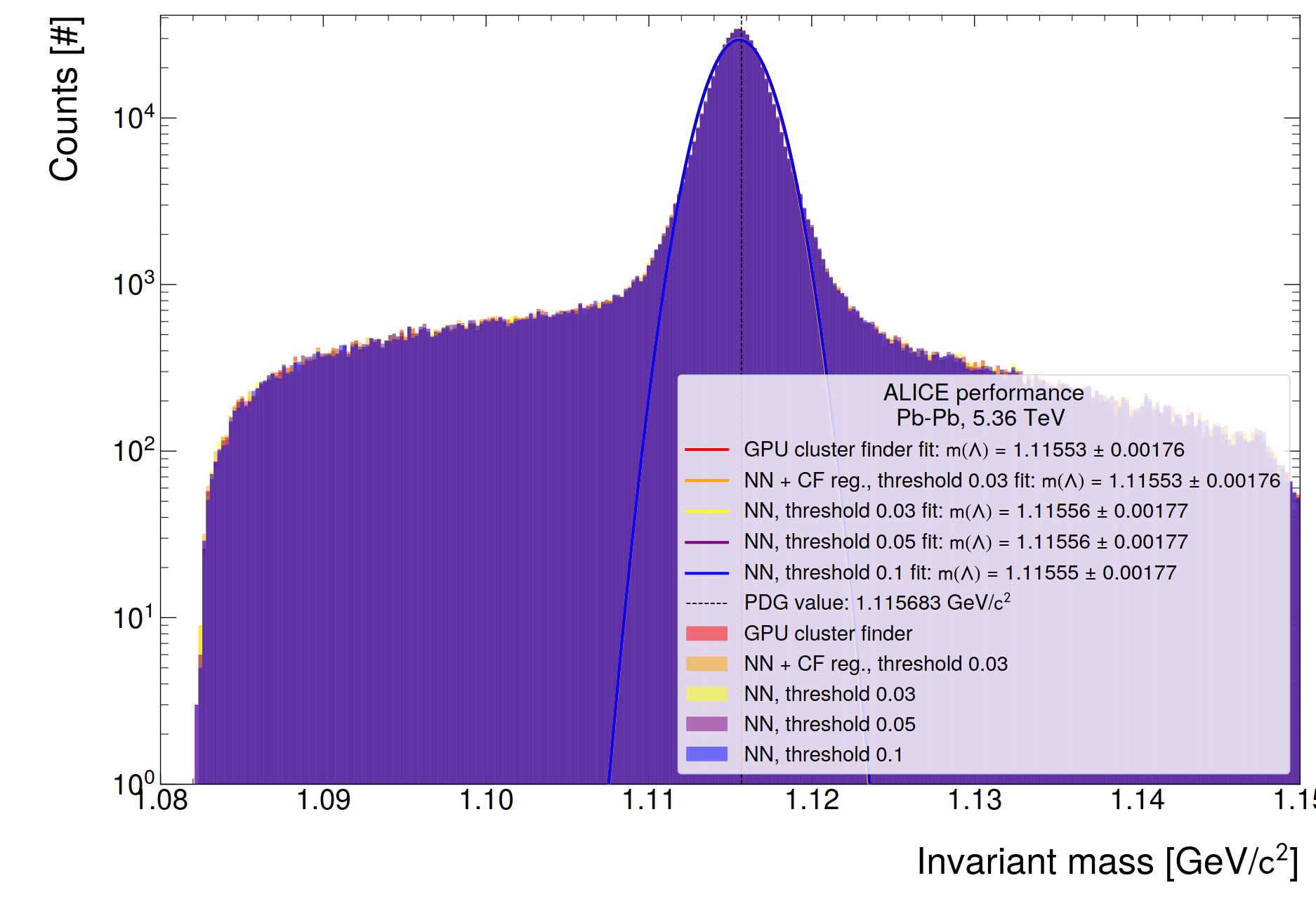

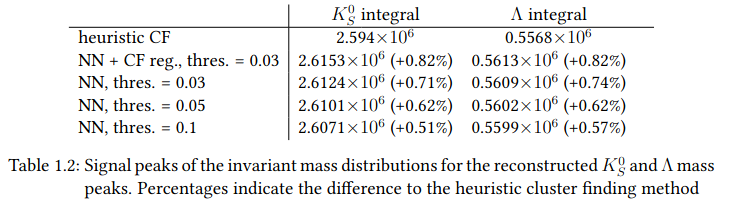

- K0S and Lambda mass peak

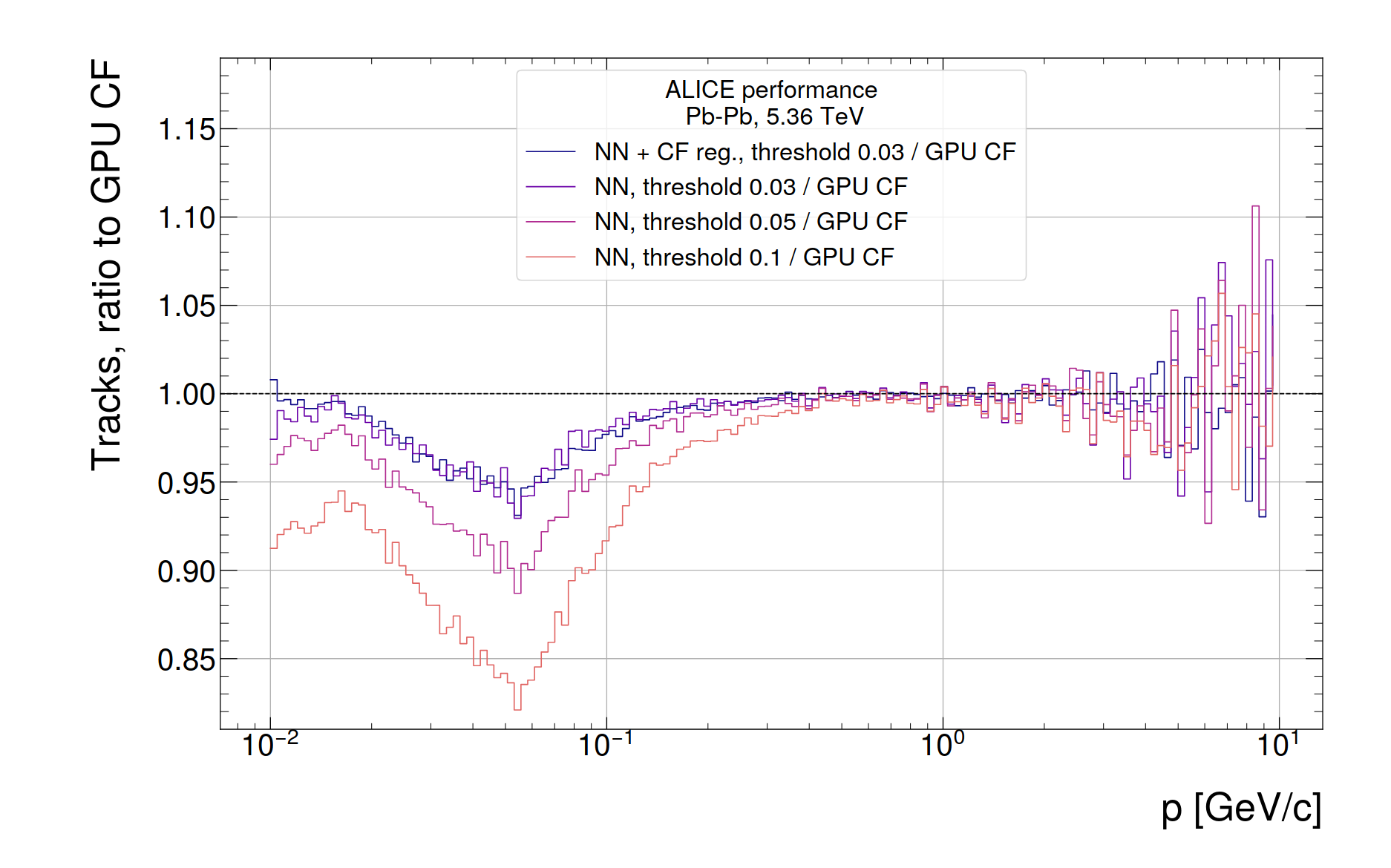

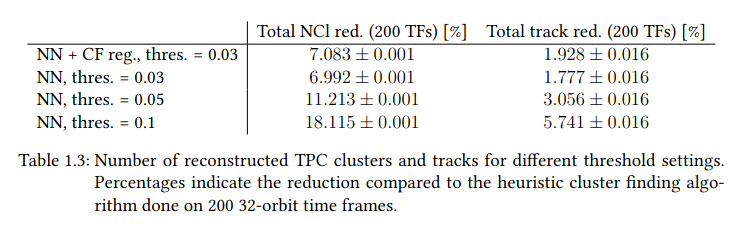

- Ratio of track distribution from 200 TFs

- Reduction until max. 300 MeV, as expected from MC

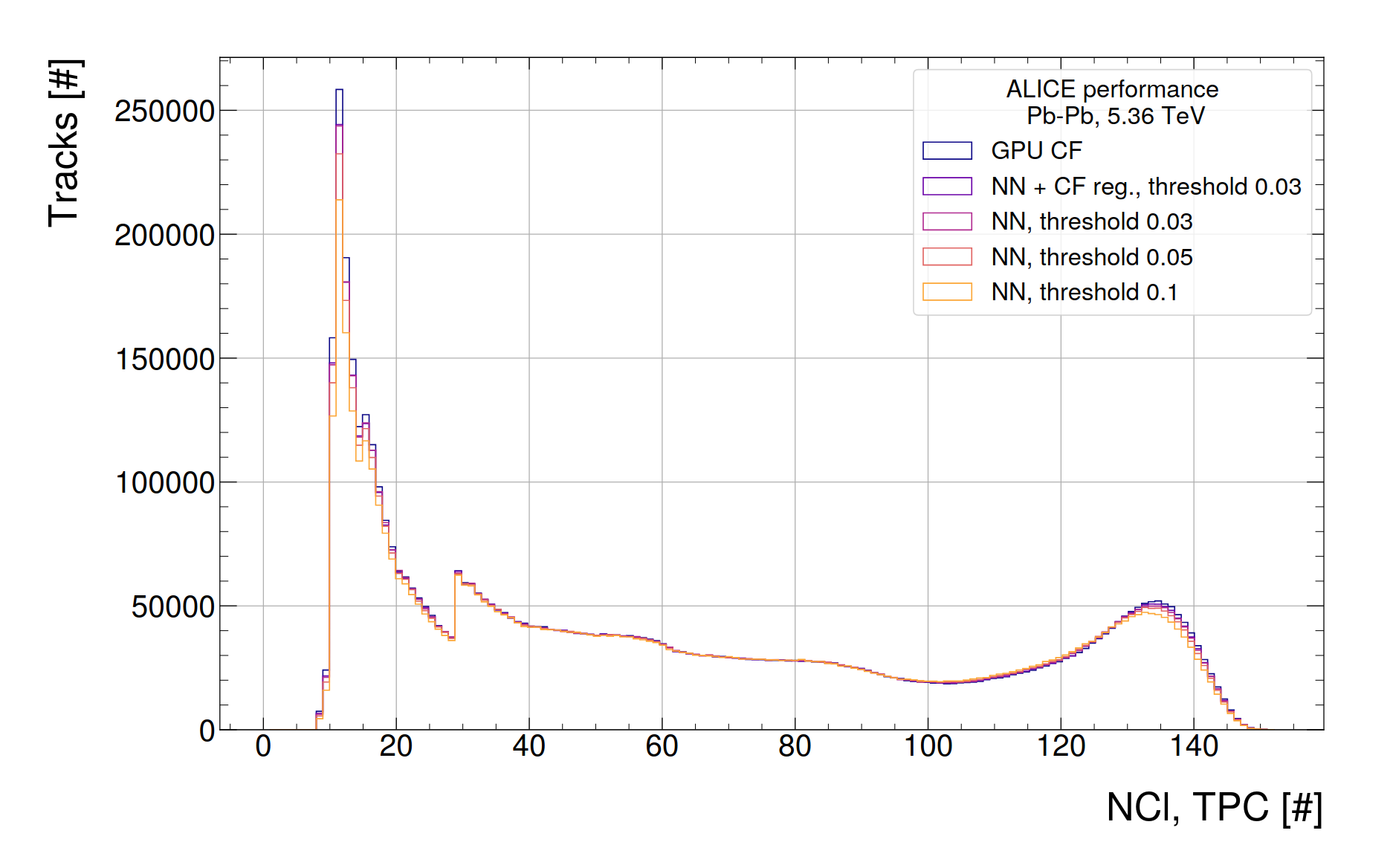

- NCL distribution of tracks from 200 TFs

- Reduction mainly observed for very short tracks

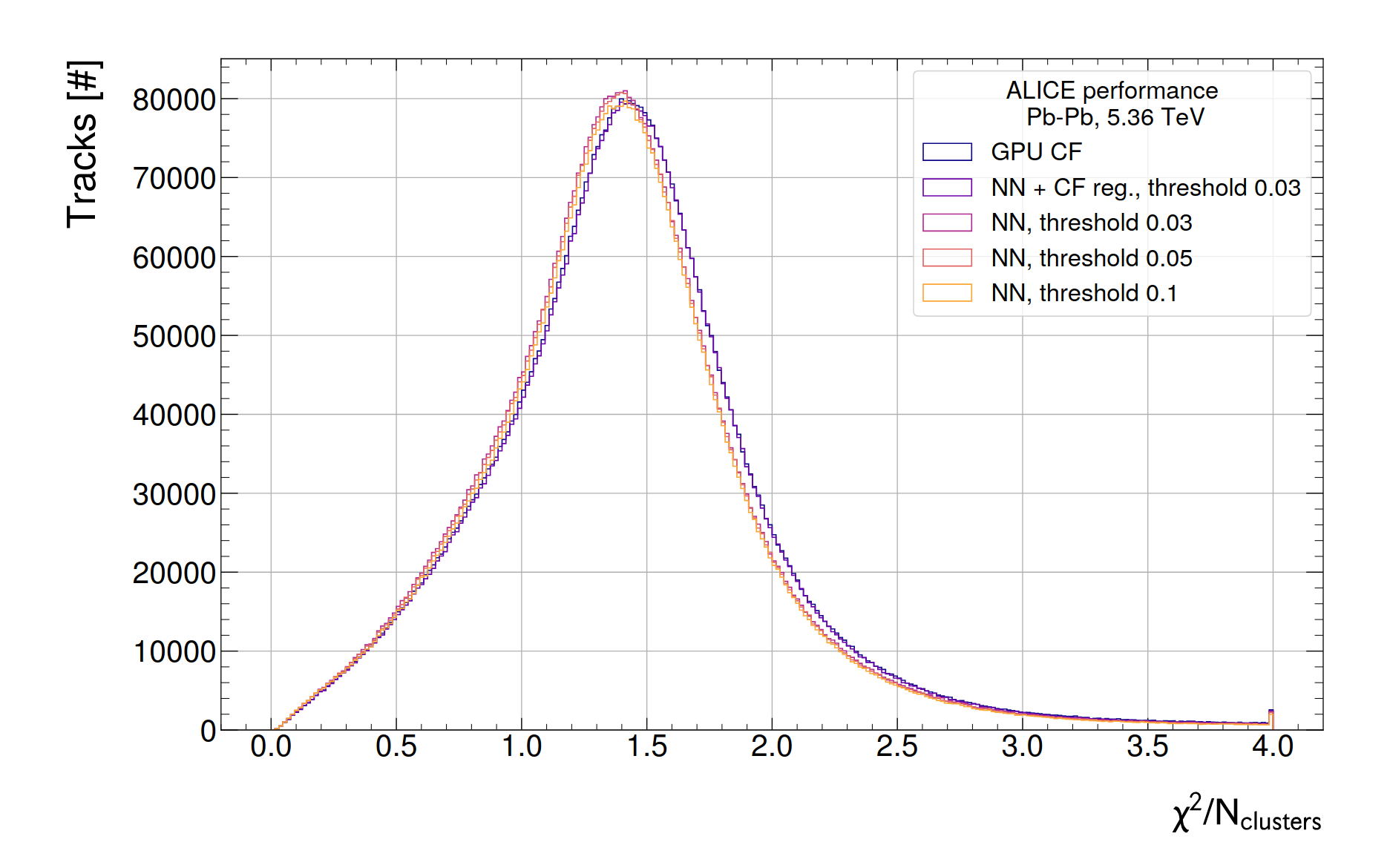

- chi2/NCL (analysis variable) from 200 TFs

- Improvement noticeable for all cases where NN regression is used

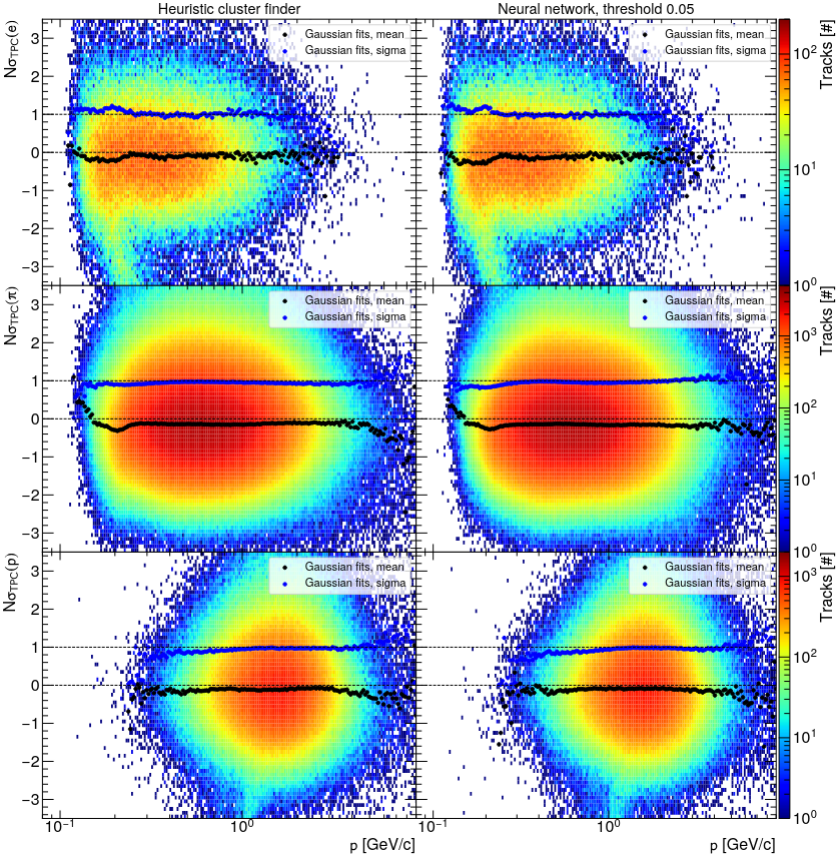

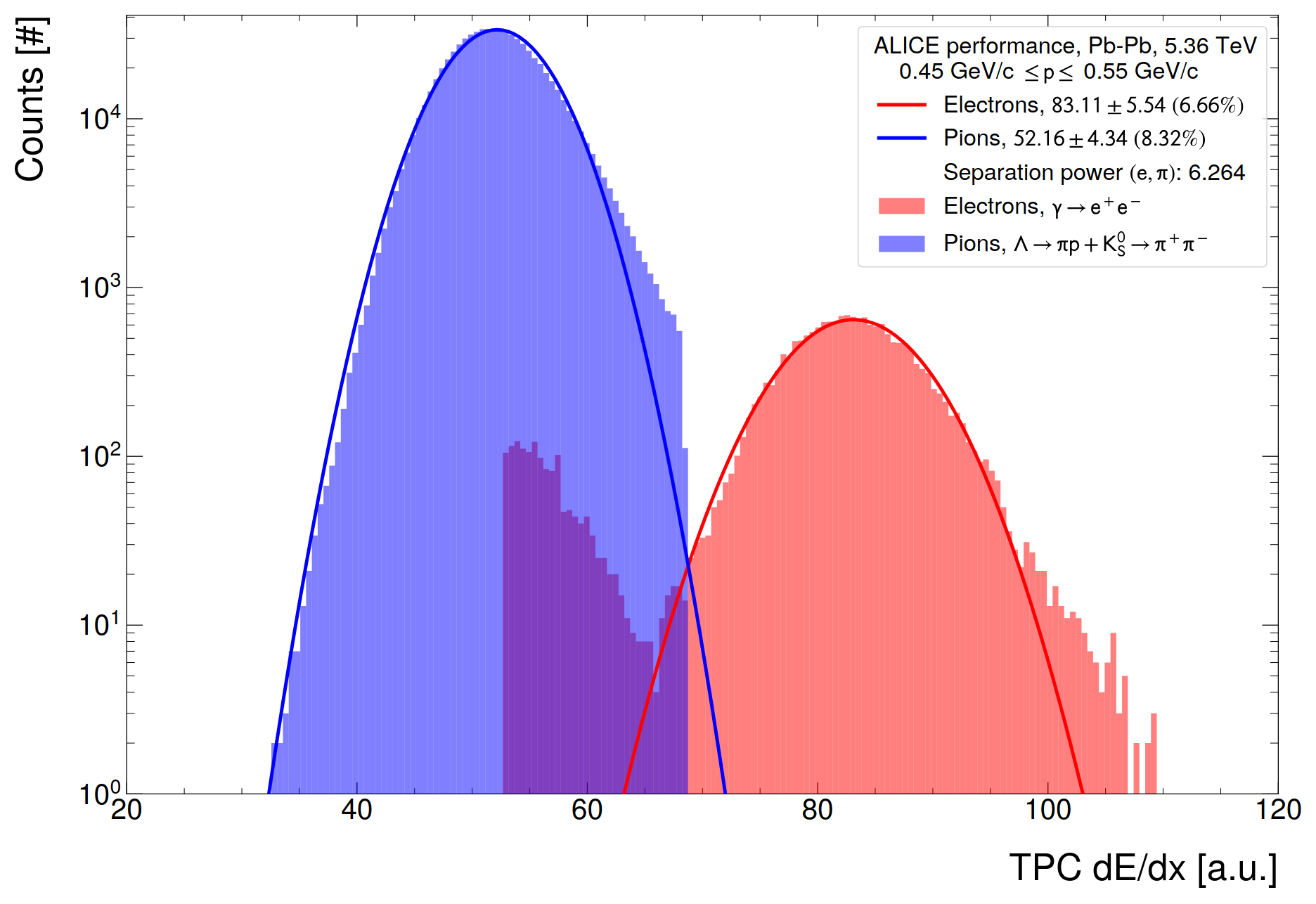

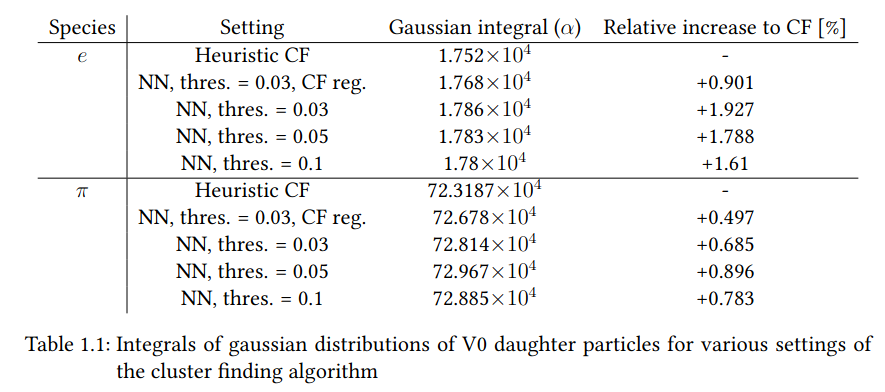

- Separation of pion and electron band from selected V0s

- Showing one figure as example (NN, 0.05)

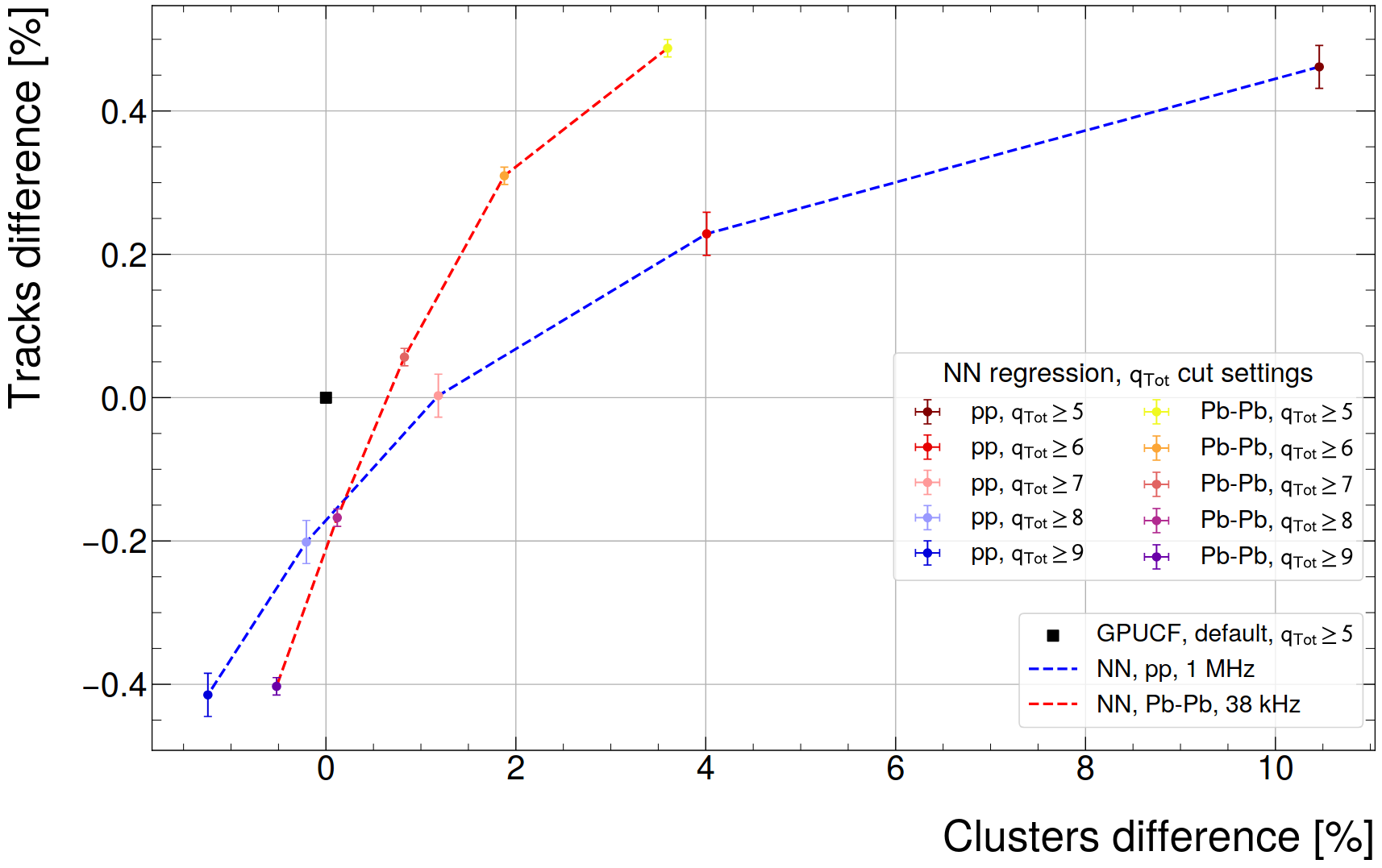

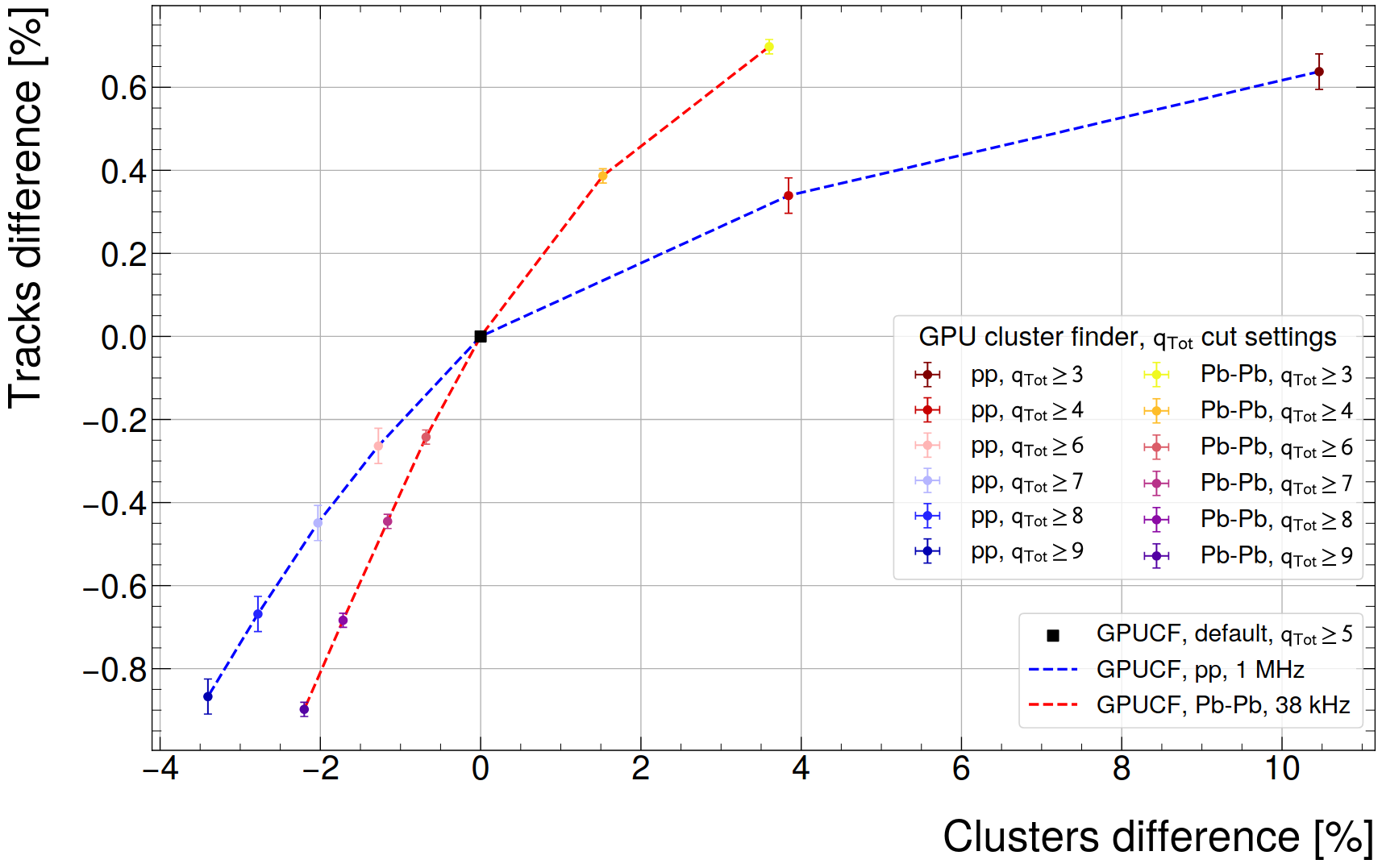

Commissioning runs

- It ran online (03.10.2025) 🥳🥳🥳

- Noise is significantly higher in real data than in MC

- Adjustment needed for qTot threshold

- Criterion: Adjust until number of tracks and number of clusters roughly match default reco, without using any classification by the NN

- Bonus: Is the current qTot threshold a good choice for the default reconstruction?

- Spoiler: Good for pp but could be loosened for Pb-Pb

- Chosen threshold for online run: qTot ≥ 8

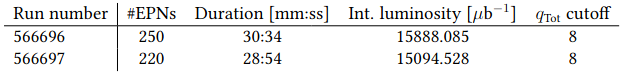

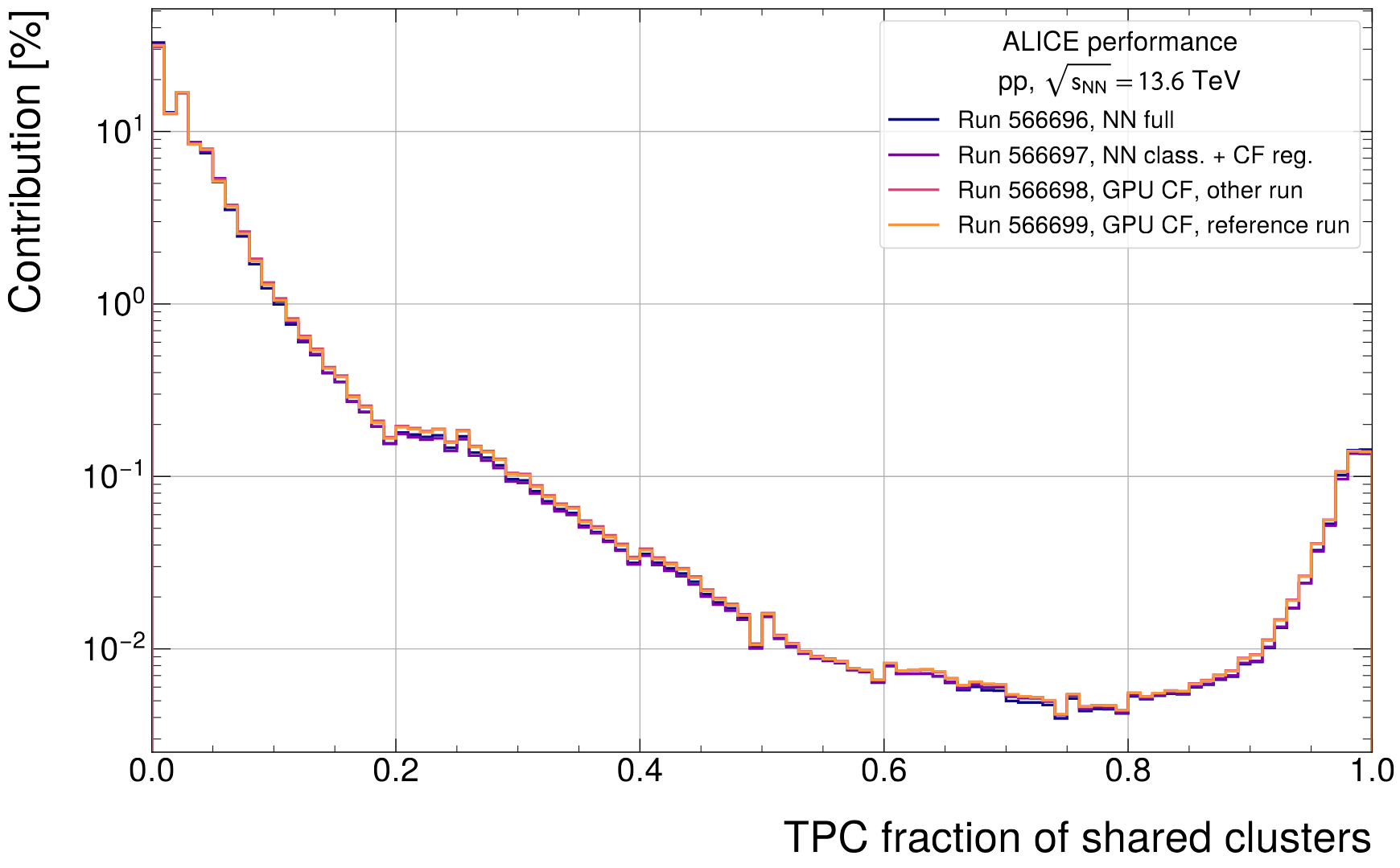

- Actual commissioning runs

- 566696: NN, full configuration, threshold 0.05

- 566697: NN classification, threshold 0.05 + heuristic regression

- Unfortunate mistake: 566697 also has qTot ≥ 8, even though it uses the heuristic regression

- Previous investigation: Makes a difference of 2.8% clusters and 0.7% tracks (pp, 1 MHz)

- Realistic check on CTF size: more like 1.4% clusters, because the run was a pp, 500 kHz
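The step from 2.8% to about 1.4% follows if the cluster difference caused by the qTot cut is taken to scale linearly with the interaction rate. That proportionality is an assumption made for this sketch, not a measured dependence, and the helper name is hypothetical.

```python
def scale_with_rate(percent_at_ref, ref_rate_khz, rate_khz):
    """Scale a relative difference linearly with the interaction rate.

    Linear scaling is an assumption for illustration only.
    """
    return percent_at_ref * rate_khz / ref_rate_khz


# 2.8% cluster difference was found for pp at 1 MHz; the run was pp at 500 kHz.
cluster_diff_500khz = scale_with_rate(2.8, ref_rate_khz=1000, rate_khz=500)
print(f"{cluster_diff_500khz:.1f}% clusters")
```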

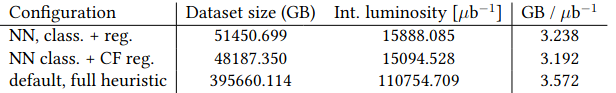

- Data-size reduction: GB / lumi

- Shows expected behavior of 9.4% reduction in total data volume

- Electron-pion separation power at MIP, not V0 but full distribution

- Left: NN, Right: Default reco from another run

- Improves both electrons (1.25%) and pions (5%). Effect is not as strong as in Pb-Pb and not as strong as on V0 sample (because of potential surrounding noise)

- Separation power also improves by 1.3%

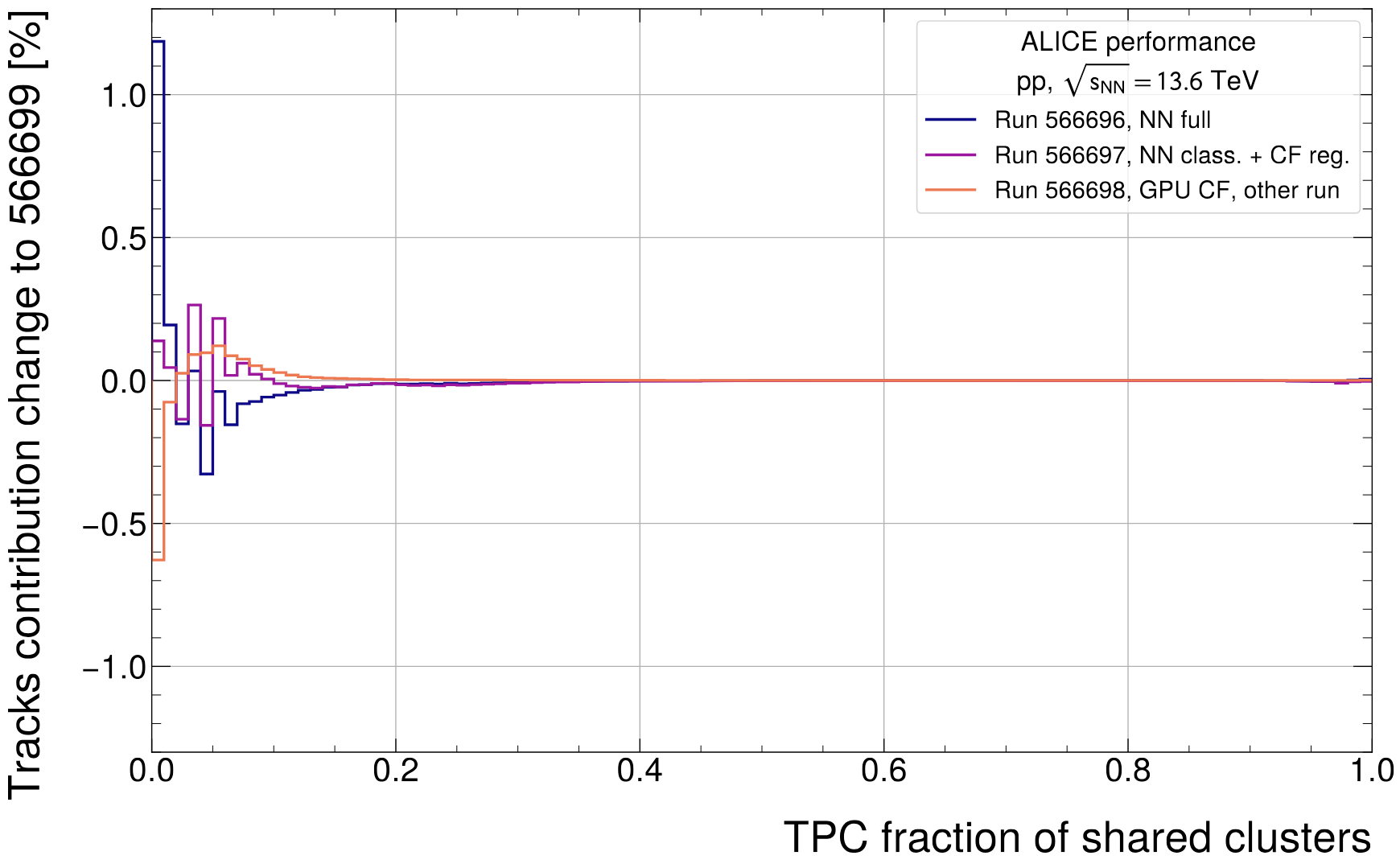

- Shared cluster distribution

- In any case not expected to be critically dominated by shared clusters in low-rate pp, but still good to check

- First: Check absolute counts

- Small, relative improvement close to bin at 0 will have most dominant effect (by orders of magnitude)

- Expected behavior: Increase peak close to 0 (relative to reference run), decrease across the rest of shared clusters

- To be plotted as a ratio to the reference run by strength of relative contribution to absolute track / cluster count

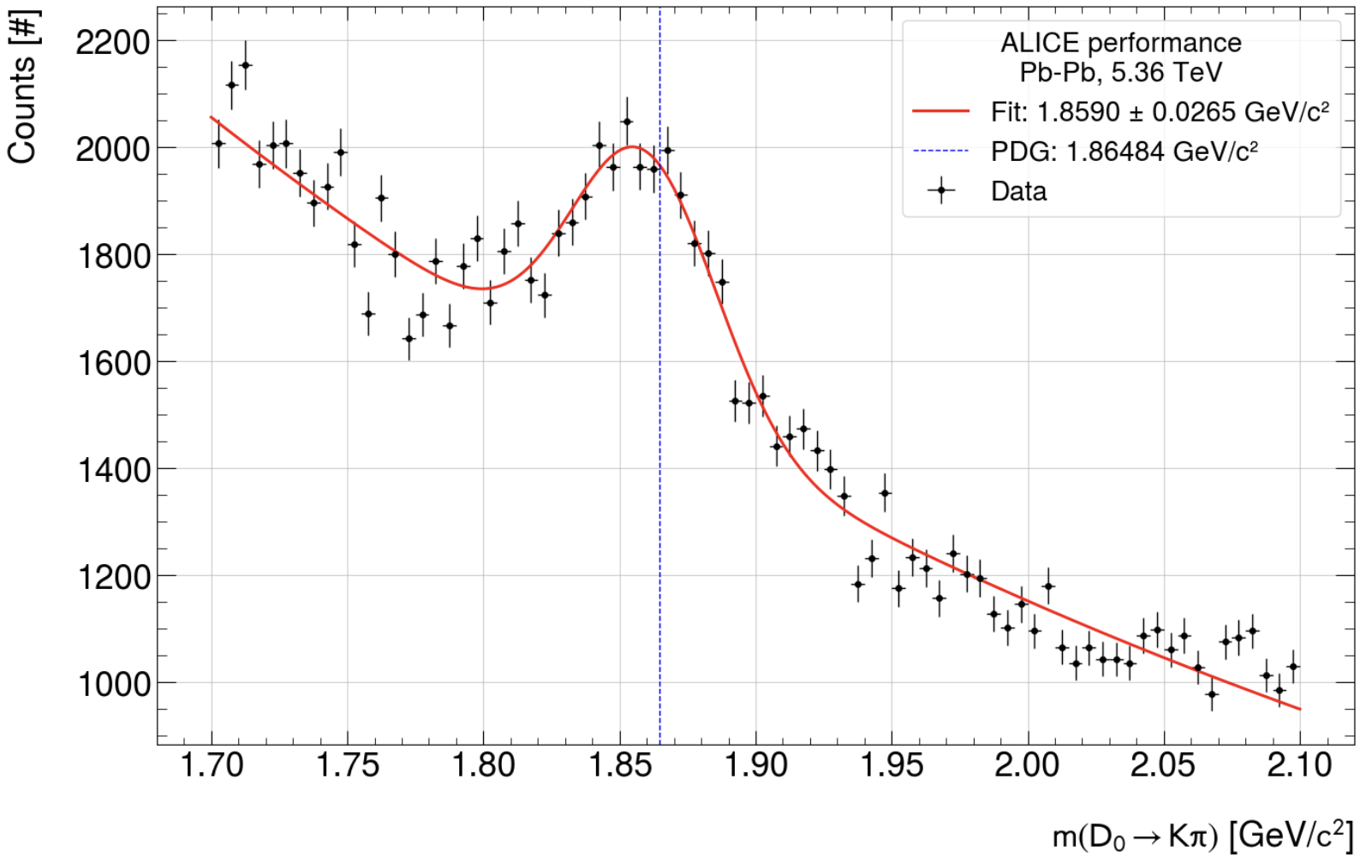

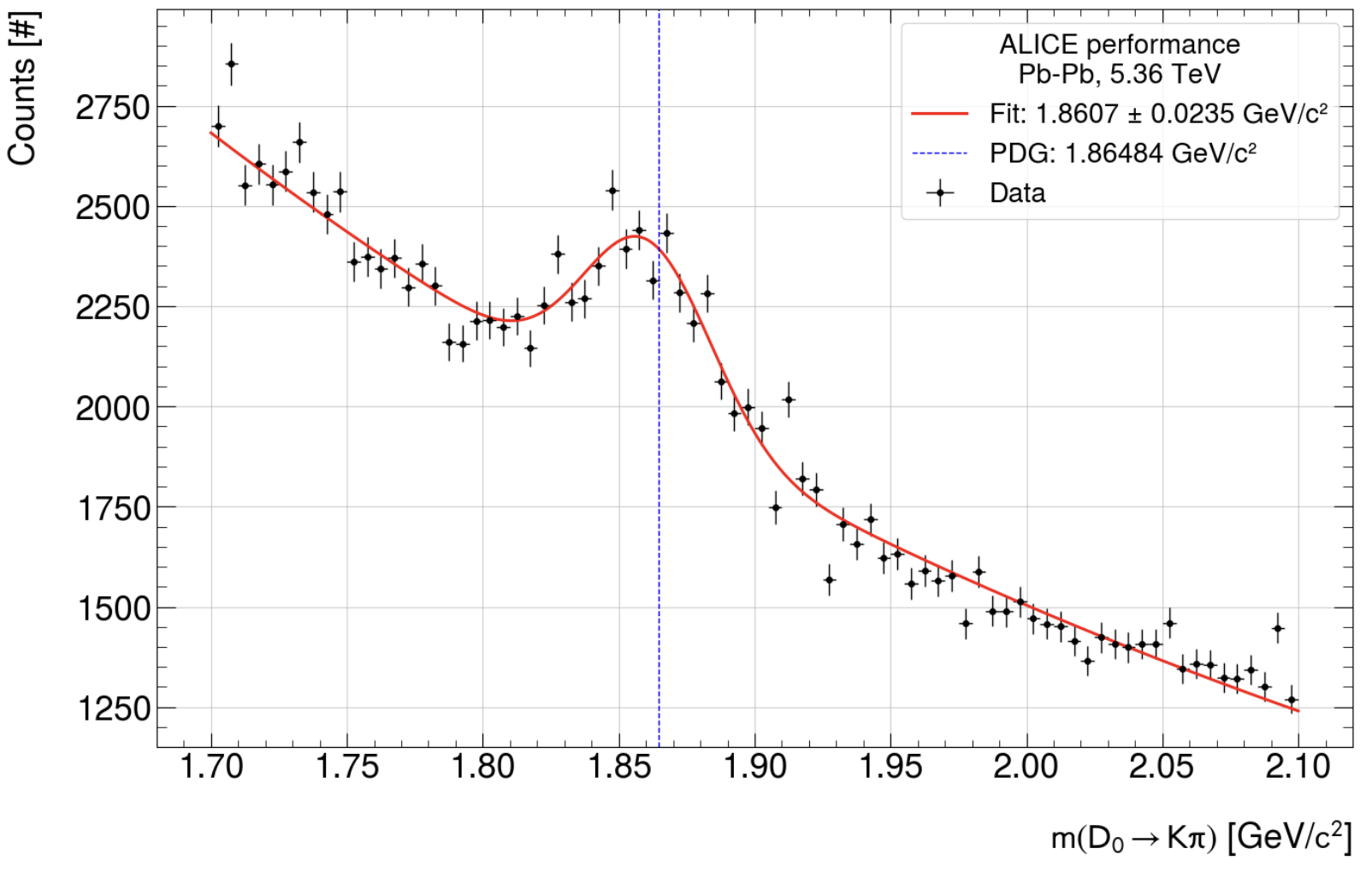

Something at higher level: Reconstruction of D0

Used BDT from Fabrizio Grosa for investigation on two different reconstructions: Default and NN (full) with threshold 0.05

- Wrote an Optuna-based optimization class that optimises the input variables for a given score metric

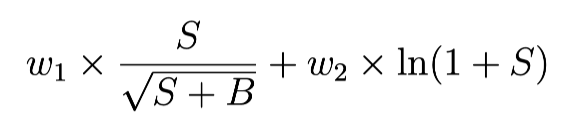

- Chosen score metric:

- w1 = 0.2, w2 = 0.8

- signal: gauss, background: pol3 (tried also exp, both work well)

- Result (same cuts applied to both datasets)

- First: default reco: 'signal': 32.007845773858556, 'sigma': 0.026497547051550507, 'gauss peak': 4.81904342e+02

- Second: NN full, 0.05: 'signal': 26.3774060396624, 'sigma': 0.023521459662659887, 'gauss peak': 4.47381356e+02

So: Default reco is still "better", but BDT was used from default reco in the same way with the same cuts for both reconstructions.

After talking to Fabrizio Grosa: The excess in background and signal could actually be real. This depends heavily on ITS-TPC matching, DCA and other observables, so it does not necessarily point to anything wrong in the reco.

Finally also something cool

3D render of one of the first collisions recorded with the neural network cluster finder in online reco! Full credit to Felix Schlepper

AOB

- CHEP abstract: https://www.overleaf.com/read/vqghvpvzwnks#9b3851

- Timing tests done for MI50 during MD, but MI100 not valid due to serialization issue

- Reran with new tracking on MC, interpretation stays the same

-

10:25

→

10:30

GPU Parameter Optimizations 5m Speaker: Gabriele Cimador (CERN, Università and INFN Torino)

News from GPU parameter tuning

- Tuner now works on AMD and Nvidia GPUs

- Tuned params for 50 kHz PbPb datasets on NGT cluster:

- Gain of 8.83% for AMD Radeon Pro W7900

- Gain of 14.29% for Nvidia H100

- Tuned dataset used by Oliver

- Gain of 14.34% for AMD Radeon Pro W7900

- Currently tuning for H100

- Integrate dynamic param opt in O2?

- Would it be possible to do some replay tests maybe next year?

- E.g. see if with opt params a lower number of EPNs can be used for the same IR?
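A rough back-of-the-envelope sketch of the replay question: if a tuning gain is read as a relative increase in per-node throughput (an assumption, since the notes do not define the gain), the number of nodes needed for the same input rate shrinks by a factor 1/(1 + gain). The farm size of 100 nodes is hypothetical, and gains measured on the W7900 or H100 would have to be re-measured on the GPUs actually installed in the EPNs.

```python
import math


def nodes_needed(baseline_nodes, throughput_gain):
    """Nodes required for the same load if each node becomes faster.

    throughput_gain is a fraction, e.g. 0.1429 for a 14.29% gain,
    interpreted here as a per-node throughput increase (assumption).
    """
    return math.ceil(baseline_nodes / (1.0 + throughput_gain))


# Hypothetical farm of 100 nodes, using the gains quoted in the list above.
for label, gain in [("W7900", 0.0883), ("H100", 0.1429)]:
    print(label, nodes_needed(100, gain))
```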

-

10:30

→

10:35

Efficient Data Structures 5m Speaker: Dr Oliver Gregor Rietmann (CERN)

ALICE O2 CI-Pipelines on NGT Cluster

- A fork of the AliceO2 repo is now in the NextGenTrigggers (NGT) GitHub organization

- It has a GitHub action running the standalone benchmark on NGT GPUs

- Uses the builds in /cvmfs/alice.cern.ch/ of O2 and dependencies (pipeline takes about 7 minutes)

- Different GPUs are tested in parallel on different VMs

- O2 standalone benchmark works on all NGT resources:

- Nvidia H100 188GB NVL

- AMD Instinct MI300X

- AMD Radeon Pro W7900

- Nvidia L40S

- We are now using custom .par files

- Gabriele generated optimized .par files

- Next steps

- Use Gabriele's .par files

- Possible next steps

- Add new architectures to O2 hardcoded ones?

- Store results in csv format and plot them?

- Display the plot in the github web gui?

NextGenTrigger Task 1.7

- Workshop November 19 - 21

- Need to prepare talk about Task 1.7.

- Collected summaries from people working on Task 1.7 last week

- Next Steps:

- Interview task leads

- Create the slides

- Discussing implementation of our template SoA code in MadGraph with Stefan Roiser

- Next Steps:

- Change template approach to also support SoA with AoS access only

- Held a meeting with Jolly and Axel from Task 1.7

- Topic: Merge reflections and template approaches to SoA

- Problems with template approach: Not working with derived classes or private members

- Merged with reflections: https://godbolt.org/z/P1xohnxdv

- Same without, but with boilerplate code: https://godbolt.org/z/ovavdKba7

- Next Steps:

- Implement new ideas in template approach
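The notion of "SoA with AoS access" discussed above can be illustrated with a small, language-neutral sketch. The Python below is not the NGT template or reflection code and does not address the derived-class or private-member problems; it only shows the interface idea of storing each field in its own column while handing out element views that read like a single struct. Class names and field names are hypothetical, and Python lists are used purely for illustration (they are not contiguous typed memory).

```python
class SoAContainer:
    """Stores each field in its own list (SoA) but exposes AoS-style elements."""

    def __init__(self, *field_names):
        self._fields = field_names
        self._columns = {name: [] for name in field_names}

    def append(self, **values):
        # One value per field; each goes to the end of its own column.
        for name in self._fields:
            self._columns[name].append(values[name])

    def __len__(self):
        return len(self._columns[self._fields[0]]) if self._fields else 0

    def __getitem__(self, index):
        return _ElementView(self._columns, index)

    def column(self, name):
        """Direct access to the storage of one field."""
        return self._columns[name]


class _ElementView:
    """Proxy that makes row `index` of the columns look like one struct."""

    def __init__(self, columns, index):
        # Bypass our own __setattr__, which redirects writes to the columns.
        object.__setattr__(self, "_columns", columns)
        object.__setattr__(self, "_index", index)

    def __getattr__(self, name):
        try:
            return self._columns[name][self._index]
        except KeyError:
            raise AttributeError(name) from None

    def __setattr__(self, name, value):
        if name not in self._columns:
            raise AttributeError(name)
        self._columns[name][self._index] = value


tracks = SoAContainer("x", "y", "pt")
tracks.append(x=1.0, y=2.0, pt=0.5)
tracks.append(x=3.0, y=4.0, pt=1.5)
tracks[1].pt = 2.5  # AoS-style write lands in the "pt" column
print(tracks[1].x, tracks.column("pt"))
```

User code written against `tracks[i].field` stays the same whether the storage underneath is row-wise or column-wise, which is the property the template and reflection approaches aim to provide at zero runtime cost in C++.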

Implement NGT SoA Code in O2 standalone benchmark

- Working on this fork of the AliceO2 repo

- Simplified the AoS-SoA wrapper code

- Started to apply our AoS-SoA code to:

- Merger: GPUTPCGMSectorTrack, GPUTPCGMTrackParam

- SectorTracker: GPUTPCBaseTrackParam, GPUTPCTrackParam, GPUTPCTrack

- Started with SectorTracker

- Get same results (same GPU.out files)

- Execution time was the same =(

- Changed more classes to SoA

- Using hardcoded SoA to assure zero overhead

- Almost done, still fixing a problem about template specializations

- Next Steps:

- Fix last bug

- Re-run test

- Re-run benchmark

-

10:35

→

10:40

Following up GPU to-dos 5m Speaker: Dr Vikas Singhal (Department of Atomic Energy (IN))

-

10:40

→

10:45

TPC Clusterization / OpenCL / Highly Ionizing Particles 5m Speaker: Felix Weiglhofer (Goethe University Frankfurt (DE))

-

10:45

→

10:50

ITS Tracking 5m Speakers: Felix Schlepper (CERN, Heidelberg University (DE)), Gabriele Cimador (CERN, Università and INFN Torino), Matteo Concas (CERN)

Felix:

Since last time, added a 'feature' to the cpu-allocator to use the pinned memory from the gpu-framework. All data transferred to the GPU is now prepared in pinned host memory and transferred via DMA (https://github.com/AliceO2Group/AliceO2/pull/14681). Previously, we allocated via the system allocator and then pinned (and later unpinned) the memory ourselves for every TF. I did not measure the impact on the timing, but avoiding the per-TF pinning should clearly be better.

Waiting for recipe to clear parts of the memory in-between iterations. Then repeat test production.

What else is needed to commission ITS GPU tracking for async?

News from ITS vertexing (Gabriele)

- Partial porting of the vertex seeding by Felix

- Will port rest of the algorithm

- After that, I would like to start a campaign of optimization of the vertexing

- Is this the best way to split the work among GPU threads?

- Is this the best way to deliver the data to GPU?

- Is this the best algorithm we can use? Can we find a more GPU-friendly one? (s.t. the CPU version is also optimized, and determinism is not broken)

-

10:50

→

10:55

System Run Coordination Topics 5m Speaker: Ernst Hellbar (CERN)

issue in TPC TimeGain calibration and laser calibration since software update including the TPC GPU tracking improvements

- laser calibration fails, some suspicious negative vdrift correction values quoted in the logs

- will run a laser run with old software

epn-20250905(to get logs for comparison) and rawtf storage (for local reconstructions)

- will run a laser run with old software

- running local reconstruction and TimeGain calibration

- on GPU, CPU

- old software

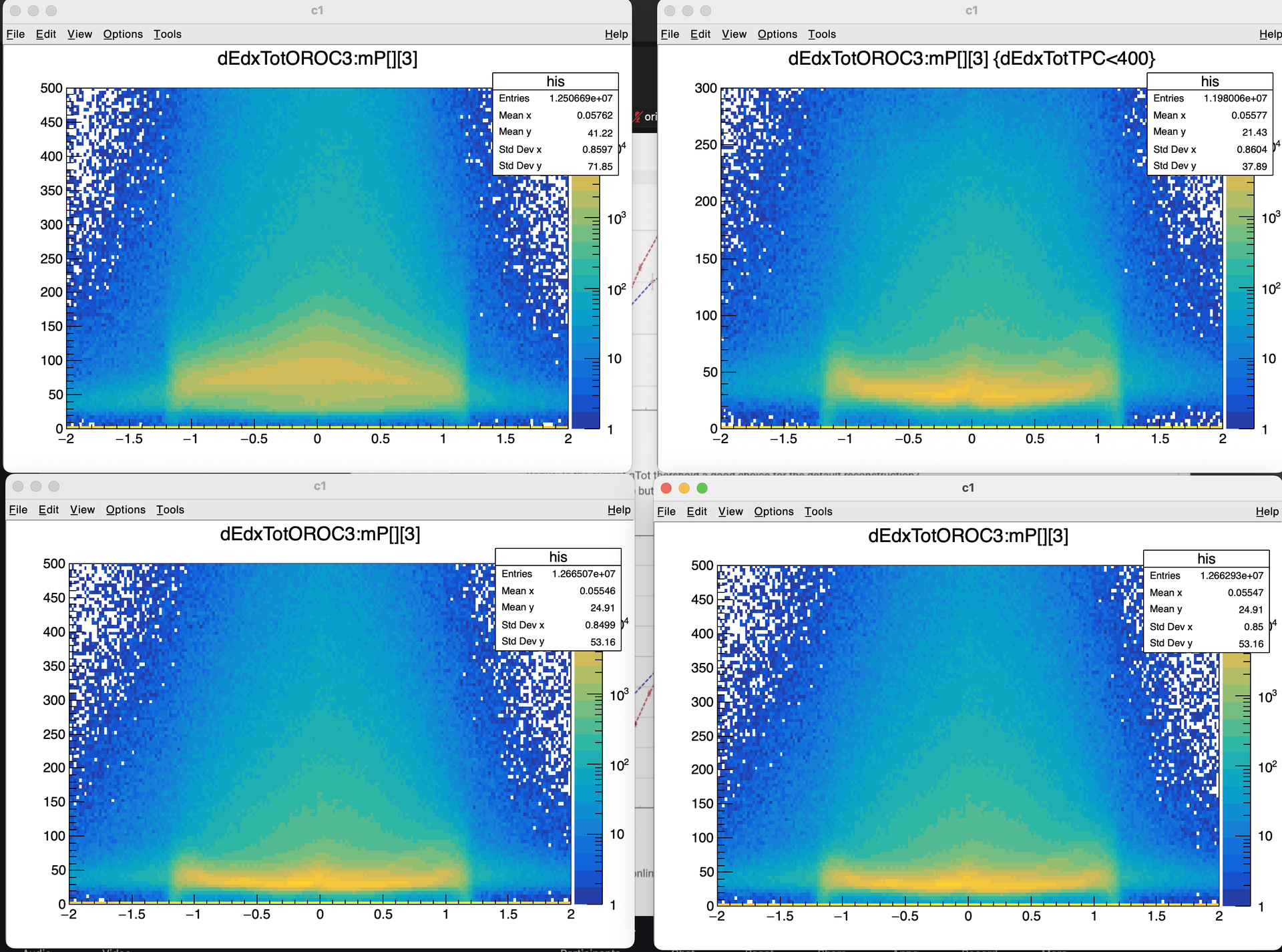

epn-20250901and new softwareepn-20250925.2 - TPC track dEdx and lokal TimeGain calibration look fine for oldSW+GPU/CPU and newSW+CPU, but large difference with newSW+GPU

- TPC track dEdx in OROC3 for 100 TFs of run 566656 which uses an already bad TimeGain object initially

- upper row: new SW, lower row: oldSW

- left: GPU, right: CPU

- laser calibration fails, some suspicious negative vdrift correction values quoted in the logs
