Color code: (critical, news during the meeting: green, news from this week: blue, news from last week: purple, no news: black)

High priority Framework issues:

- Fix dropping lifetime::timeframe for good: Still pending. CCDB objects get lost by DPL, leading to "Dropping lifetime::timeframe"; seen at least once during SW validation.

- Newly spotted bogus message about decreased oldestPossible counter should be suppressed. Status?

- Start / Stop / Start: 2 problems on O2 side left:

- All processes are crashing randomly (usually ~2 out of >10k) when restarting. The stack trace points to FMQ. https://its.cern.ch/jira/browse/O2-4639

- TPC ITS matching QC crashing accessing CCDB objects. Not clear if same problem as above, or a problem in the task itself:

- Stabilize calibration / fix EoS: New scheme: https://its.cern.ch/jira/browse/O2-4308: Status?

- Fix problem with ccdb-populator: no idea yet. Since Ole left, someone else will have to take care of it.

Global calibration topics:

- TPC IDC and SAC workflow issues to be reevaluated with new O2 at restart of data taking. Cannot reproduce the problems any more.

Sync reconstruction

- Software frozen for Pb-Pb, Pippo is discussing if we want to do some more updates of only O2 and QC, to avoid too many cherry-picks. To be seen.

- Waiting for RC to test COSMIC replay data set.

- Some crashes of tpc-tracking yesterday in Pb-Pb REPLAY runs, to be investigated.

- No more crashes in Pb-Pb replay in TPC code, fix seems to work.

- STOP timeout discussion: RC did some tests, which show a significant dependence on the STOP timeout. However, this was only tested in SYNTHETIC and should be repeated in PHYSICS.

- Alice will add number of CTF orbit monitoring to GRID DAQ monitoring website.

- Reported a bug that ECS GUI sends the default string instead of an empty string if a GUI field is empty, fixed by ECS.

- After the EPN SLURM changes, topology generation failed regularly on staging.

- Traced to stdin not reporting as empty while reads from it fail with "bad file descriptor". Fixed by overriding stdin.

- Fix had side-effect on ccdb-populator, which was fixed by Ernst.
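The stdin problem above can be illustrated with a small POSIX sketch. It is not the actual fix applied to the topology scripts: the function names are hypothetical, and redirecting to `/dev/null` is an assumption about what "overriding stdin" could look like. It only shows how an invalid file descriptor 0 can be detected and replaced so that reads return EOF instead of failing with "bad file descriptor".

```cpp
#include <cassert>
#include <cerrno>
#include <fcntl.h>
#include <unistd.h>

// Returns true if file descriptor 0 is not open (fcntl fails with EBADF).
bool stdinIsInvalid()
{
  errno = 0;
  return fcntl(STDIN_FILENO, F_GETFD) == -1 && errno == EBADF;
}

// Replaces stdin with /dev/null so that reads return EOF immediately.
// Assumption: /dev/null is an acceptable stand-in for the missing stdin.
bool overrideStdinWithDevNull()
{
  const int fd = open("/dev/null", O_RDONLY);
  if (fd < 0) {
    return false;
  }
  // If fd 0 was closed, open() already returned 0 and nothing more is needed.
  if (fd != STDIN_FILENO) {
    if (dup2(fd, STDIN_FILENO) < 0) {
      close(fd);
      return false;
    }
    close(fd);
  }
  return true;
}

// Hypothetical helper: repair stdin only when it is actually invalid.
void ensureUsableStdin()
{
  if (stdinIsInvalid()) {
    const bool ok = overrideStdinWithDevNull();
    assert(ok && "could not reopen stdin");
    (void)ok;
  }
}
```

A shell redirect of stdin from `/dev/null` when launching the script would have the same effect without code changes.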

Async reconstruction

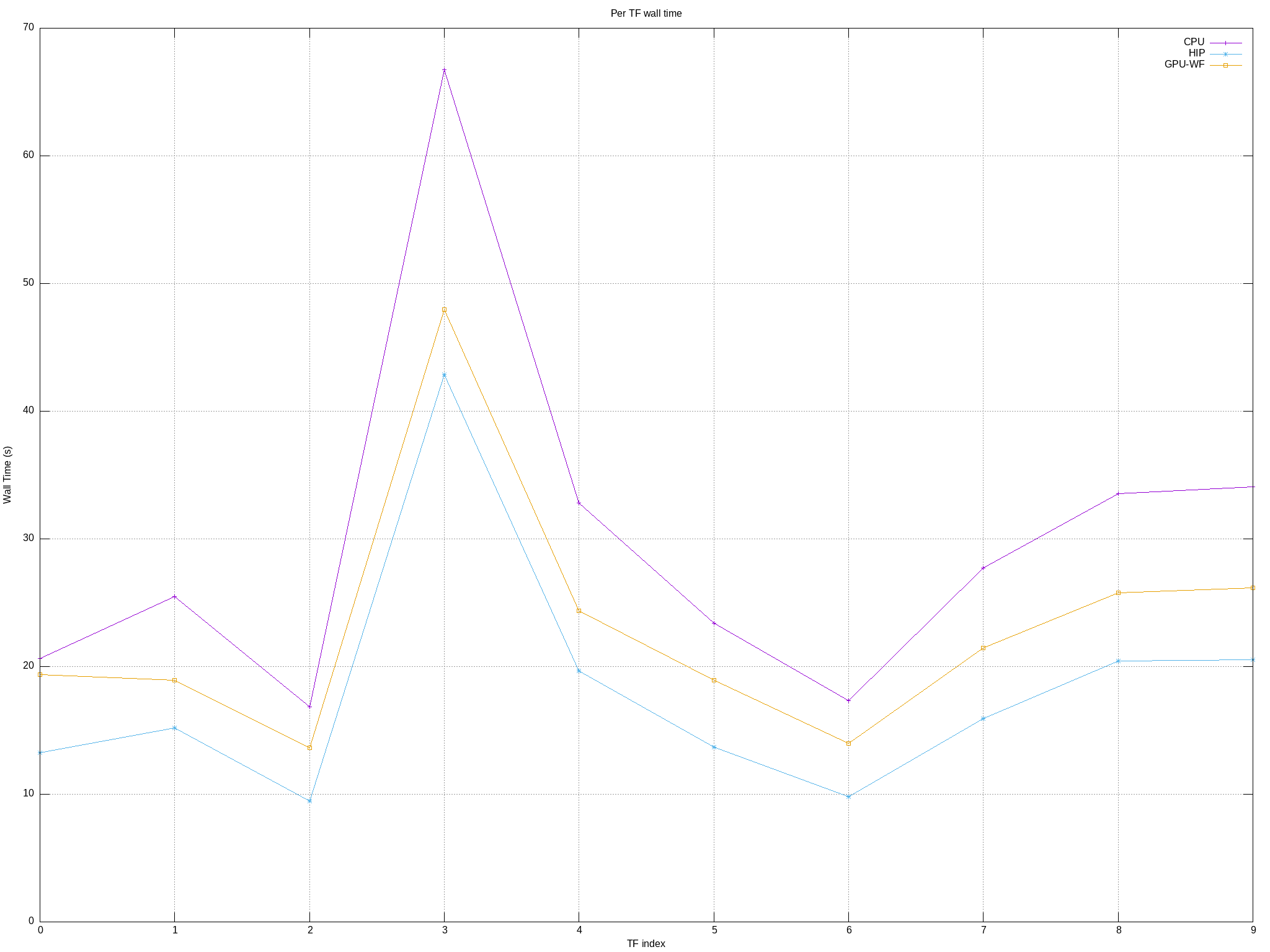

- Remaining oscillation problem: GPUs sometimes get stalled for a long time, up to 2 minutes. Checking 2 things:

- does the situation get better without GPU monitoring? --> Inconclusive

- Increased GPU process priority can be used as a mitigation, but it does not fully fix the issue.

- MI100 GPU stuck problem will only be addressed after AMD has fixed the operation with the latest official ROCm stack.

- Limiting factor for pp workflow is now the TPC time series, which is too slow and creates backpressure (costs ~20% performance on EPNs). Enabled multi-threading as recommended by Matthias; need to check if it works.

- Problem reported by Max/Ruben that analysis fails, since sometimes at EOR we receive TFs with no data and bogus orbit.

- Bogus TFs are now detected in readout-proxy: https://github.com/AliceO2Group/AliceO2/pull/13495.

- Giulio will implement tf-status message sent by readout-proxy.

EPN major topics:

- Fast movement of nodes between async / online without EPN expert intervention.

- 2 goals I would like to set for the final solution:

- It should not be needed to stop the SLURM schedulers when moving nodes, there should be no limitation for ongoing runs at P2 and ongoing async jobs.

- We must not lose which nodes are marked as bad while moving.

- Interface to change SHM memory sizes when no run is ongoing. Otherwise we cannot tune the workflow for both Pb-Pb and pp: https://alice.its.cern.ch/jira/browse/EPN-250

- Lubos to provide interface to query current EPN SHM settings - ETA July 2023, Status?

- Improve DataDistribution file replay performance, currently cannot do faster than 0.8 Hz, cannot test MI100 EPN in Pb-Pb at nominal rate, and cannot test pp workflow for 100 EPNs in FST since DD injects TFs too slowly. https://alice.its.cern.ch/jira/browse/EPN-244 NO ETA

- DataDistribution distributes data round-robin in absence of backpressure, but it would be better to do it based on buffer utilization, and give more data to MI100 nodes. Currently, we are driving the MI50 nodes at 100% capacity with backpressure, and only backpressured TFs go to MI100 nodes. This increases the memory pressure on the MI50 nodes, which is a critical point anyway. https://alice.its.cern.ch/jira/browse/EPN-397

- TfBuilders should stop in ERROR when they lose connection.

- Allow epn user and grid user to set nice level of processes: https://its.cern.ch/jira/browse/EPN-349

- Tentative time for ALMA9 deployment: December 2024.
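As an illustration of the buffer-utilization-based distribution requested above (EPN-397), here is a minimal sketch of a selection rule. It is not DataDistribution code: `EpnNode`, `pickTarget`, and the weight values are hypothetical. The rule picks the node whose projected buffer utilization, scaled by a relative capacity weight, is lowest, and skips nodes where the TF would not fit.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

// Hypothetical per-node state; not the DataDistribution data model.
struct EpnNode {
  std::uint64_t bufferUsed; // bytes currently queued on the node
  std::uint64_t bufferSize; // total buffer capacity in bytes
  double weight;            // relative processing capacity (illustrative values)
};

// Returns the index of the node with the lowest projected utilization per
// unit of capacity, or std::nullopt if the TF fits nowhere (backpressure).
std::optional<std::size_t> pickTarget(const std::vector<EpnNode>& nodes, std::uint64_t tfSize)
{
  std::optional<std::size_t> best;
  double bestScore = 0.0;
  for (std::size_t i = 0; i < nodes.size(); ++i) {
    const EpnNode& n = nodes[i];
    assert(n.bufferSize > 0 && n.weight > 0.0);
    if (n.bufferUsed + tfSize > n.bufferSize) {
      continue; // TF does not fit into this node's buffer
    }
    const double score = static_cast<double>(n.bufferUsed + tfSize) /
                         (static_cast<double>(n.bufferSize) * n.weight);
    if (!best || score < bestScore) {
      best = i;
      bestScore = score;
    }
  }
  return best;
}
```

With equal buffer sizes, a node with a larger weight is preferred while buffers are empty, so faster nodes would tend to receive more TFs before any backpressure builds up. How weights would be chosen for MI50 and MI100 nodes is left open here.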

Other EPN topics:

Full system test issues:

Topology generation:

- 2 occurrences where the git repository in the topology cache was corrupted. It is not clear how this can happen, and it is not reproducible. Solved by wiping the cache. Will add a check to the topology scripts that detects a corrupt repository, deletes it, and checks it out anew.
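The planned check could follow the logic sketched below. This is only an illustration in C++ of the detect-and-wipe step, not the topology scripts themselves: the function names are hypothetical, the cache is assumed to be a normal clone with a `.git` directory, and `git fsck` is assumed to be a sufficient corruption test. The fresh checkout after the wipe is left to the caller.

```cpp
#include <cassert>
#include <cstdlib>
#include <filesystem>
#include <string>

// Returns true if `git fsck` succeeds on the repository under `repo`.
// Assumption: the cache is a regular clone with a .git directory.
bool gitRepoIsHealthy(const std::filesystem::path& repo)
{
  assert(!repo.empty());
  const std::string gitDir = (repo / ".git").string();
  if (gitDir.find('\'') != std::string::npos) {
    return false; // keep the shell quoting below trivially safe
  }
  const std::string cmd =
    "git --git-dir='" + gitDir + "' fsck --no-progress > /dev/null 2>&1";
  return std::system(cmd.c_str()) == 0;
}

// Deletes the cached repository if it exists but fails the health check.
// Returns true if the cache was wiped and a fresh checkout is needed.
bool dropCacheIfCorrupt(const std::filesystem::path& repo)
{
  if (!std::filesystem::exists(repo) || gitRepoIsHealthy(repo)) {
    return false;
  }
  std::filesystem::remove_all(repo);
  return true;
}
```

Note that `git fsck` can be slow on large repositories, so a lighter check may be preferable if it runs on every topology generation.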

AliECS related topics:

- Extra env var field still not multi-line by default.

GPU ROCm / compiler topics:

- Test with ROCm 6.2.2:

- Seems to be stable, but only some short tests were run yesterday so far.

- Some changes in default compile options. Asked AMD whether there is meanwhile a proper way to set amdgpu-function-calls instead of patching the files in /opt/rocm.

- Adding RTC support, had to use some tricks in CMake to get right compile options for creating device binaries. Will check with AMD if there are proper compile flags to get rid of the hacks (like what is available for the CMake CUDA language).

- Will do some more tests, then we'll set up the SLC9-GPU builder as default for FullCI.

- Compilation failure due to missing symbols when compiling with -O0.

- Implemented a fix to make sure that all symbols are always defined.

- Since this has a significant impact on GPU compile time, and the fix is on CMake level, we enable it only for CMAKE_BUILD_TYPE=DEBUG.

- New miscompilation for >ROCm 6.0

- Waiting for AMD to fix the reproducer we provided.

- Try to find a better solution for the problem with __device__ inline functions leaking symbols in the host code.

- Created PR to bump GCC to 14.2 (just to test). Problems in binutils, json-c, AlfFred DIM fixed, mostly clean.

- Fails in ROOT compilation for AliRoot: do we use an old ROOT?

- Fails to compile clang on SLC9 for ARM: is this a new failure?

- Expected failure in FullCI due to CUDA not supporting GCC 14.

TPC GPU Processing

- WIP: Use alignas() or find a better solution to fix alignment of Monte Carlo labels: https://its.cern.ch/jira/browse/O2-5314

- Waiting for TPC to fix bogus TPC transformations for good, then we can revert the workaround.

- Waiting for TPC to check PR which uses full cluster including average charge and occupancy map errors during seeding.

- Want to switch to int8 ... uint64 types instead of char, short, ...

- TPC asked to be able to apply cluster cuts also at CTF decoding, not only during clusterization. Will add this.
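The alignas() and fixed-width type items above can be illustrated together with a short C++17 sketch. The label layout is hypothetical and is not the O2 Monte Carlo label class; the details of O2-5314 are not reproduced here. The sketch shows that `alignas` raises the alignment of a struct without changing its size in this case, and that `<cstdint>` types make widths and signedness explicit, whereas the signedness of plain `char` is implementation-defined.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical label layout using fixed-width types instead of char/short/int.
struct PackedLabel {
  std::uint32_t trackId;
  std::uint16_t eventId;
  std::uint8_t sourceId;
  std::uint8_t flags;
};
static_assert(sizeof(PackedLabel) == 8);

// Same members, but forced to 8-byte alignment so that each element of an
// array can be accessed as one aligned 64-bit word.
struct alignas(8) AlignedLabel {
  std::uint32_t trackId;
  std::uint16_t eventId;
  std::uint8_t sourceId;
  std::uint8_t flags;
};
static_assert(alignof(AlignedLabel) == 8);
static_assert(sizeof(AlignedLabel) == 8);

// Widths are guaranteed by the type names, independent of the platform.
static_assert(sizeof(std::int8_t) == 1);
static_assert(sizeof(std::uint64_t) == 8);

// Runtime helper to check the alignment of an arbitrary pointer.
bool isAligned(const void* p, std::size_t alignment)
{
  assert(alignment != 0);
  return reinterpret_cast<std::uintptr_t>(p) % alignment == 0;
}
```

Whether `alignas` on the type or a different buffer layout is the better fix for the Monte Carlo labels remains the open question from the item above.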

TPC processing performance regression:

- Final solution: merging transformation maps on the fly into a single flat object: Still WIP

General GPU Processing