Alice Weekly Meeting: Software for Hardware Accelerators / PDP-SRC

10:00 → 10:20

Discussion 20m

Speakers: David Rohr (CERN), Giulio Eulisse (CERN)

Color code: (critical, news during the meeting: green, news from this week: blue, news from last week: purple, no news: black)

High priority Framework issues:

- Start / Stop / Start: 2 problems on O2 side left:

- All processes are crashing randomly (usually ~2 out of >10k) when restarting. Stack trace hints to FMQ. https://its.cern.ch/jira/browse/O2-4639

- TPC ITS matching QC crashing accessing CCDB objects. Not clear if same problem as above, or a problem in the task itself:

- Stabilize calibration / fix EoS: New scheme: https://its.cern.ch/jira/browse/O2-4308:

- Discussed with RC to repeat timeout tests in COSMICS and PHYSICS.

- Ernst will verify the processing of calib data after data processing timeout.

- PR with InfoLogger improvements still WIP.

- Processes crashing at shutdown if STOP timeout was short, not clear if related, but should be checked.

- Fix problem with ccdb-populator: no idea yet - since Ole left, someone else will have to take care.

- TF-status message (from https://github.com/AliceO2Group/AliceO2/pull/13495) sent by readout-proxy.

Sync reconstruction

- Waiting for RC to test COSMIC replay data set.

- Waiting for RC to test STOP timeout impact.

- Problem with high CPU load due to DPL metrics. Probably solved by throttling the dummy-sink metric on the FLPs. It is still unclear why a 300 Hz metric causes trouble; this may indicate that something is wrong in the monitoring.

- FLPs fixed their problems with new GRPC, so now we could bump alidist at P2.
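The throttling mentioned above for the dummy-sink metric can be pictured as a minimum-interval filter. The following is only a minimal sketch, not the DPL implementation: the class `MetricThrottle`, the helper `count_forwarded`, and the one-sample-per-second limit are hypothetical names and values chosen for illustration.

```python
class MetricThrottle:
    """Forward a metric sample only if enough time has passed since the last one.

    Hypothetical helper for illustration; not part of DPL or the monitoring library.
    """

    def __init__(self, min_interval):
        self.min_interval = min_interval  # same unit as the timestamps
        self.last_sent = None

    def should_send(self, timestamp):
        # The first sample is always forwarded; later ones only after min_interval.
        if self.last_sent is None or timestamp - self.last_sent >= self.min_interval:
            self.last_sent = timestamp
            return True
        return False


def count_forwarded(timestamps, min_interval):
    """Count how many samples survive the throttle."""
    throttle = MetricThrottle(min_interval)
    return sum(1 for t in timestamps if throttle.should_send(t))


# A 300 Hz metric observed for 3 s, with timestamps in ticks of 1/300 s,
# throttled to at most one sample per second (300 ticks). Assumed numbers.
ticks = range(900)
forwarded = count_forwarded(ticks, min_interval=300)
```

Integer ticks are used instead of floating-point seconds so that the interval comparison is exact.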

Async reconstruction

- Need to investigate short GPU stall problem.

- Limiting factor for pp workflow is now the TPC time series, which is too slow and creates backpressure (costs ~20% performance on EPNs). Enabled multi-threading as recommended by Matthias - need to check if it works.

- Async reco on EPNs was running at very low efficiency of ~20%. Several issues fixed bringing it back to 60-80%. Fixing some more issues will hopefully get us back to 90%.

- Fixed:

- Muon MFT Track QC too slow. For now downscaled, proper improvement will come later.

- Include order change led to different defaults, making the workflow use only 1 GPU for Pb-Pb: fixed.

- AOD producer needs more CPU time now, increased its multiplicity.

- Still under investigation:

- DPL dummy sink consuming constant 100% CPU.

- ITS tracker needs very long (O(30) minutes) for some TFs.

AliECS related topics:

- Extra env var field still not multi-line by default; created https://its.cern.ch/jira/browse/OGUI-1624 to follow this up separately from other tickets.

GPU ROCm / compiler topics:

- List of important issues with AMD:

- Issues that disappeared but not yet understood: random server reboot with alma 9.4, miscompilation with ROCm 6.2, GPU getting stuck when DMA engine turned off, MI100 stalling with ROCm 5.5.

- Problem with building ONNXRuntime with MIGraphX support, to be checked.

- Need to find a way to build ONNXRuntime with support for CUDA and for ROCm.

- Try to find a better solution for the problem with __device__ inline functions leaking symbols in the host code.

- Once we bump arrow (PR opened by Giulio), we can bump LLVM to 19.

- Waiting for ROCm 6.4.1 with the synchronization fix, then we can test the 6.4 version.

TPC / GPU Processing

- WIP: Use alignas() or find a better solution to fix alignment of Monte Carlo labels: https://its.cern.ch/jira/browse/O2-5314

- Waiting for TPC to fix bogus TPC transformations for good, then we can revert the workaround.

- Waiting for TPC to check PR which uses full cluster errors including average charge and occupancy map errors during seeding.

- Final solution: merging transformation maps on the fly into a single flat object: Still WIP

- Pending OpenCL2 issues:

- printf not working due to confirmed bug in clang, fix is being prepared. Prevents further debugging for now.

- Crash in merger, which can be worked around by disabling clang SPIRV optimization. Probably bug in clang, but need to fix printf first to debug.

- Also with optimization disabled, crashing later in TPC merging, need printf to debug.

- Felix debugged the OpenCL clusterization problem to be due to off-by-one offset in NoiseSuppression. Need to check how that can happen only in OpenCL.

- Next high priority topic: Improvements for cluster sharing and cluster attachment at lower TPC pad rows.

- Now almost all GPU constant parameters are available as runtime configuration settings for RTC (some exceptions in TPC clusterization still need to be preprocessor defines during host compilation; need to check if I can fix that).

- Switched many settings from preprocessor define to constexpr, which fixed also some bugs where some settings might not be picked up correctly due to #ifdef typos.

- Need to talk to Gabriele, so that he can include these parameters into his tuning.

- Fixed NN Clusterization compilation with CUDA (this is not tested in the CI, since we can build ONNX only with either ROCm or with CUDA support, so what we build has only ROCm support). Should see if that can be fixed in the future.

- GPU Standalone benchmark can now also compile with ONNX support.

- Will add a GPU Standalone Benchmark build to the FullCI, to avoid breaking it in the future.

- Merged Christian's PR for ONNX stream implementation, would like to check the problem with ONNX external memory allocator.

- Changed last preprocessor define setting in TPC clusterizer to new parameter scheme, now all parameters can be set at runtime for RTC.

- Added option to compile parameter presets per architecture at compile-time, installed with O2, and loadable at runtime. Right now we have (partially outdated) presets for AMD S9000, MI50, MI210, NVIDIA 1080, 2080, 3090.

Other Topics

- Felix is hired, will start 1st of June.

EPN major topics:

- Fast movement of nodes between async / online without EPN expert intervention.

- 2 goals I would like to set for the final solution:

- It should not be needed to stop the SLURM schedulers when moving nodes, there should be no limitation for ongoing runs at P2 and ongoing async jobs.

- We must not lose which nodes are marked as bad while moving.

- Interface to change SHM memory sizes when no run is ongoing. Otherwise we cannot tune the workflow for both Pb-Pb and pp: https://alice.its.cern.ch/jira/browse/EPN-250

- Lubos to provide interface to query current EPN SHM settings - ETA July 2023, Status?

- Improve DataDistribution file replay performance, currently cannot do faster than 0.8 Hz, cannot test MI100 EPN in Pb-Pb at nominal rate, and cannot test pp workflow for 100 EPNs in FST since DD injects TFs too slowly. https://alice.its.cern.ch/jira/browse/EPN-244 NO ETA

- DataDistribution distributes data round-robin in absence of backpressure, but it would be better to do it based on buffer utilization, and give more data to MI100 nodes. Now, we are driving the MI50 nodes at 100% capacity with backpressure, and then only backpressured TFs go on MI100 nodes. This increases the memory pressure on the MI50 nodes, which is anyway a critical point. https://alice.its.cern.ch/jira/browse/EPN-397

- TfBuilders should stop in ERROR when they lose connection.

- Allow epn user and grid user to set nice level of processes: https://its.cern.ch/jira/browse/EPN-349

- Slurm bump, status?
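The difference between round-robin and buffer-utilization-based distribution described above can be illustrated with a toy model. This is only a sketch under stated assumptions, not DataDistribution code: the `Node` class, the policy names, and the 1:2 buffer capacity ratio between the MI50 and MI100 node are hypothetical, and the model only fills buffers without draining them.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    buffer_capacity: int  # hypothetical unit: number of TFs that fit in the buffer
    buffered: int = 0

    @property
    def utilization(self) -> float:
        return self.buffered / self.buffer_capacity


def pick_round_robin(nodes, tf_index):
    """Ignore node state, cycle through the nodes in order."""
    return nodes[tf_index % len(nodes)]


def pick_least_utilized(nodes):
    """Send the next TF to the node with the lowest buffer utilization."""
    return min(nodes, key=lambda n: n.utilization)


def distribute(nodes, n_tfs, policy):
    """Assign n_tfs time frames and return how many each node received."""
    counts = {n.name: 0 for n in nodes}
    for i in range(n_tfs):
        if policy == "round_robin":
            node = pick_round_robin(nodes, i)
        else:
            node = pick_least_utilized(nodes)
        node.buffered += 1
        counts[node.name] += 1
    return counts


def make_nodes():
    # Assumed capacities: the MI100 node gets twice the buffer of the MI50 node.
    return [Node("mi50", buffer_capacity=10), Node("mi100", buffer_capacity=20)]


round_robin_counts = distribute(make_nodes(), 30, "round_robin")
utilization_counts = distribute(make_nodes(), 30, "least_utilized")
```

In this toy setup, round-robin hands both nodes the same number of TFs, while the utilization-based policy shifts load towards the node with the larger buffer.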

Other EPN topics:

- Check NUMA balancing after SHM allocation, sometimes nodes are unbalanced and slow: https://alice.its.cern.ch/jira/browse/EPN-245

- Fix problem with SetProperties string > 1024/1536 bytes: https://alice.its.cern.ch/jira/browse/EPN-134 and https://github.com/FairRootGroup/DDS/issues/440

- After software installation, check whether it succeeded on all online nodes (https://alice.its.cern.ch/jira/browse/EPN-155) and consolidate software deployment scripts in general.

- Improve InfoLogger messages when environment creation fails due to too few EPNs / calib nodes available, ideally report a proper error directly in the ECS GUI: https://alice.its.cern.ch/jira/browse/EPN-65

- Create user for epn2eos experts for debugging: https://alice.its.cern.ch/jira/browse/EPN-383

- EPNs sometimes get in a bad state, with CPU stuck, probably due to AMD driver. To be investigated and reported to AMD.

- Understand different time stamps: https://its.cern.ch/jira/browse/EPN-487

10:20 → 10:25

Following up JIRA tickets 5m

Speaker: Ernst Hellbar (CERN)

Low-priority framework issues https://its.cern.ch/jira/browse/O2-5226

- Grafana metrics: Might want to introduce additional rate metrics that subtract the header overhead to have the pure payload: low priority.

- Merged workflow fails if outputs defined after being used as input

- needs to be implemented by Giulio

- Cannot override options for individual processors in a workflow

- requires development by Giulio first

- Problem with 2 devices of the same name

- Usage of valgrind in external terminal: The testcase is currently causing a segfault, which is an unrelated problem and must be fixed first. Reproduced and investigated by Giulio.

- Run getting stuck when too many TFs are in flight.

- Do not use string comparisons to derive processor type, since DeviceSpec.name is user-defined.

- Support in DPL GUI to send individual START and STOP commands.

- Add additional check on DPL level, to make sure firstOrbit received from all detectors is identical, when creating the TimeFrame first orbit.

- Implement a proper solution to detect whether a device is firstInChain

- Deploy topology with DPL driver

- Automatic creation of CTP/Config/Config by o2-ecs-grp-create call from the ECS

- https://its.cern.ch/jira/browse/O2-5921

- implemented and deployed
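The firstOrbit consistency check listed above can be sketched as follows. This is a minimal illustration, not DPL code: the function name `common_first_orbit`, the input mapping from detector name to reported firstOrbit, and the orbit values in the test are all hypothetical.

```python
from typing import Mapping


def common_first_orbit(first_orbit_by_detector: Mapping[str, int]) -> int:
    """Return the firstOrbit shared by all detectors, or raise if they disagree.

    Hypothetical helper for illustration only.
    """
    if not first_orbit_by_detector:
        raise ValueError("no detector reported a firstOrbit")
    distinct = set(first_orbit_by_detector.values())
    if len(distinct) > 1:
        raise ValueError(
            f"inconsistent firstOrbit values: {dict(first_orbit_by_detector)}"
        )
    return distinct.pop()
```

Raising instead of silently picking one value makes a mismatch visible at the point where the TimeFrame first orbit is created.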

PDP-SRC issues

- Check if we can remove dependencies on /home/epn/odc/files in DPL workflows to remove the dependency on the NFS

- reading / writing already disabled

- remaining checks for file existence?

- check after Pb-Pb by removing files and find remaining dependencies

- logWatcher.sh and logFetcher scripts modified by EPN to remove dependencies on epnlog user

- node access privileges fully determined by e-groups

- new log_access role to allow access in logWatcher mode to retrieve log files, e.g. for on-call shifters

- to be validated on STG

- waiting for EPN for further feedback and modifications of the test setup

- new BEAMTYPE for oxygen period

- https://its.cern.ch/jira/browse/O2-5797

- beam types

- p-O and O-O

- Ne-Ne highly likely

- scripts to be adjusted to set proper workflow parameters

- tune multiplicities using MULTIPLICITY_PROCESS_ extra env var in P2 configurations

- start with pp default multiplicities and tune during data taking if needed

- set ITS pre-scaling pp defaults using CONFIG_EXTRA_PROCESS_ for its-reco-workflow and gpu-reco-workflow

- also use pp default pre-scaling parameters for MFT (in case no ITS)?

- MFT_CONFIG_KEY+="MFTTracking.cutMultClusLow=0;MFTTracking.cutMultClusHigh=3000;"

- a couple of more parameters for MFT and MCH, will discuss with Ruben

- RC asked for a synthetic OO dataset

- new generator config files for OO, pO and NeNe set up by Marco

- default GRPMagField objects for new unanchored MC pO, OO, NeNe timestamps uploaded by Ruben

- O2 code to be checked for pp and PbPb specific variables

10:25 → 10:30

TPC ML Clustering 5m

Speaker: Christian Sonnabend (CERN, Heidelberg University (DE))

10:30 → 10:35

ITS Tracking 5m

Speaker: Matteo Concas (CERN)

ITS GPU tracking

- General priorities:

- F. Schlepper found some rare (more often in PbPb data) deviations in the deterministic mode, investigations restricted to a few blocks of code (findCellsNeighbours, post-CHEP). ==> Fixed: https://github.com/AliceO2Group/AliceO2/pull/14200

- [WIP] Thrust allocator with external memory management: for the moment it compiles but it does not work, needs dedicated discussion.

- Focusing on porting all of what is possible on the device, extending the state of the art, and minimising computing on the host.

- Moving vertexing routines to the externally managed memory system. -> WIP

- Currently optimising the o2::its::timeframeGPU initialisation GPU transfers, trying to maximise data reuse & anticipating loads when it is possible.

- Optimizations:

- Asynchronous parallelisation in the tracklet finding, i.e. Multi-streaming for obvious parallelisations.

- intelligent scheduling and multi-streaming can happen right after.

- Kernel-level optimisations to be investigated.

TODO:

- Reproducer for HIP bug on multi-threaded track fitting: no follow-up yet.

- Fix possible execution issues and known discrepancies when using gpu-reco-workflow: no progress.

DCAFitterGPU

- Deterministic approach via using SMatrixGPU on the host, under particular configuration: no progress.

10:35 → 10:45

TPC Track Model Decoding on GPU 10m

Speaker: Gabriele Cimador (Universita e INFN Torino (TO))

MergerFollowLoopers / CompressionKernels_step0 optimisation

- Run new algorithm on this 4 dimensional step (2 block sizes - 2 grid sizes)

- Best mean time found for pbpb at 50kHz: 1824.58 ms ± 10.85 ms

- When plugging the new parameters in GPUDefParametersDefaults.h and compiling: 2061.96 ms ± 29.28 ms

- There might be a mismatch between RTC and normal compilation?
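To judge whether the two timings above really differ, the gap can be put in relation to the quoted spreads. The sketch below is only illustrative arithmetic: it assumes the ± values are independent one-sigma uncertainties of the means, which the minutes do not state, and the function names are hypothetical.

```python
import math


def relative_slowdown(t_ref_ms, t_new_ms):
    """Fractional increase of t_new with respect to t_ref."""
    return (t_new_ms - t_ref_ms) / t_ref_ms


def difference_in_sigma(t_ref_ms, err_ref_ms, t_new_ms, err_new_ms):
    """Difference of the two means in units of their combined uncertainty.

    Assumes independent uncertainties, combined in quadrature.
    """
    return (t_new_ms - t_ref_ms) / math.hypot(err_ref_ms, err_new_ms)


# (mean, spread) in ms, taken from the numbers quoted above.
tuned = (1824.58, 10.85)     # best mean time found during tuning
compiled = (2061.96, 29.28)  # same parameters compiled into the defaults header

slowdown = relative_slowdown(tuned[0], compiled[0])
sigma = difference_in_sigma(*tuned, *compiled)
print(f"slowdown: {slowdown:.1%}, difference: {sigma:.1f} sigma")
```

Under these assumptions the compiled version is about 13% slower, far outside the quoted spreads, which is consistent with suspecting a systematic difference rather than measurement noise.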

Problems following new commits

- Configurations that worked before have become invalid. For some configurations of the two kernels, this error arises:

:0:rocdevice.cpp :3018: 1129822182886d us: Callback: Queue 0x7f263ea00000 aborting with error : HSA_STATUS_ERROR_MEMORY_APERTURE_VIOLATION: The agent attempted to access memory beyond the largest legal address. code: 0x29
/usr/bin/rocprofv2: line 324: 3728557 Aborted (core dumped) LD_PRELOAD=$LD_PRELOAD "${@}"

10:45 → 10:55

Efficient Data Structures 10m

Speaker: Dr Oliver Gregor Rietmann (CERN)

NextGenTrigger Hardware

- Can be accessed by a Kubernetes cluster

- Thus the code needs to run in a container

- Needs kubectl installed and correctly configured (on personal machine or on lxplus)

How to Run Our Code

Create a file "session.yml" defining a pod. The marked lines you might have to change.

apiVersion: v1
kind: Pod
metadata:
  name: session-1
  labels:
    mount-eos: "true"
    inject-oauth2-token-pipeline: "true"
  annotations:
    sidecar.istio.io/inject: "false"
spec:
  containers:
  - name: session-1
    image: registry.cern.ch/ngt-wp1.7/wp1.7-soa-wrapper:latest
    command: ["sleep", "infinity"]
    resources:
      limits:
        nvidia.com/gpu: 1
    securityContext:
      runAsUser: 0
      runAsGroup: 0

In the terminal, run the following commands to start the pod and enter an interactive session.

kubectl apply -f session.yml
kubectl exec -it session-1 -- /bin/bash

Now you can "git clone" your code and build it.

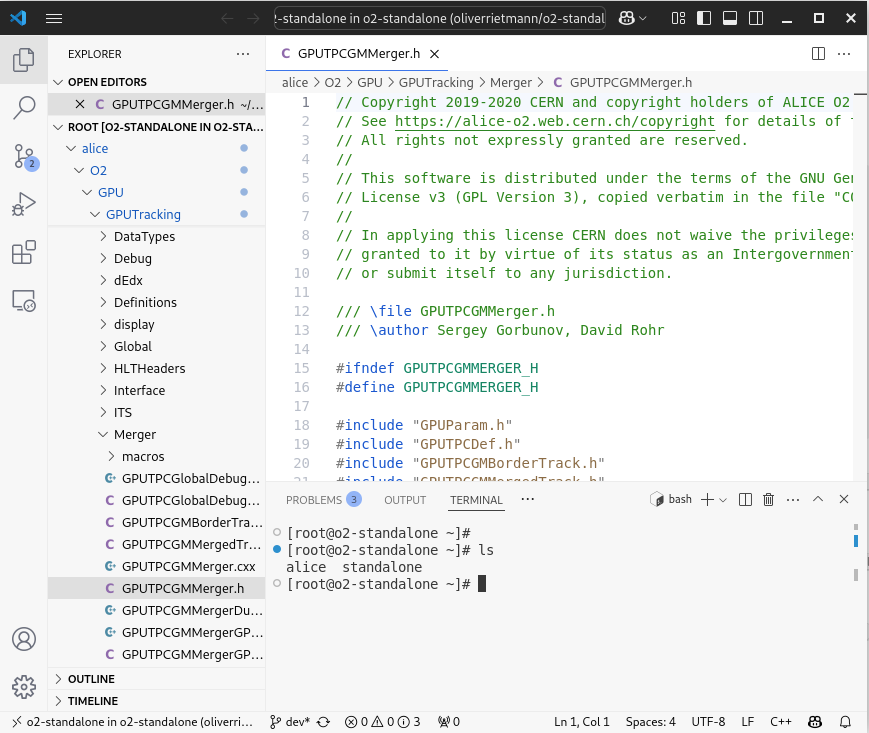

Remote Development (with VS Code)

Remote development with VS Code works as follows.

- Start the container as explained in the previous section.

- Install the Kubernetes and Dev Containers extensions of VS Code.

- Attach to the container you started in Step 1.

After that, we can develop as if we were running everything locally.

Remarks

- We have access to Cernbox under /eos/user/o/orietman, which allows easy file transfer.

- Files in the container can be browsed and edited.

- The terminal is attached to the container, i.e. we can run cmake, git, ...

TODOs

- Persistent volume claim (PVC) to save stuff between sessions

- A secret for the ssh key when we use git in the container

- Merge everything to a YAML file that works out of the box (e.g. with kustomize)

- Find a setting that allows comparable benchmarks (exclusive node access)

10:55 → 11:00

Following up GPU to-dos 5m

Speaker: Dr Vikas Singhal (Department of Atomic Energy (IN))

Following the GPU deterministic mode,

https://github.com/AliceO2Group/AliceO2/blob/dev/GPU/documentation/deterministic-mode.md

Enabled GPUCA_DETERMINISTIC_MODE=GPU in cmake.config and rebuilt.
