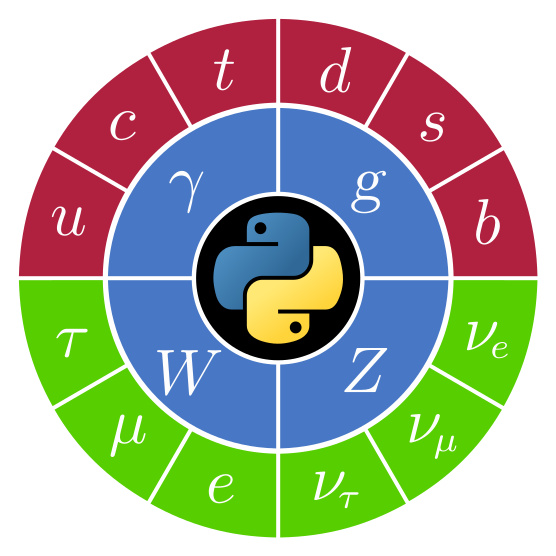

PyHEP 2022 (virtual) Workshop

The PyHEP workshops are a series of workshops initiated and supported by the HEP Software Foundation (HSF) with the aim to provide an environment to discuss and promote the usage of Python in the HEP community at large. Further information is given on the PyHEP Working Group website.

PyHEP 2022 will be a virtual workshop. It will be a forum for the participants and the community at large to discuss developments of Python packages and tools, exchange experiences, and inform the future evolution of community activities. There will be ample time for discussion.

The agenda is composed of plenary sessions:

Organising Committee

Eduardo Rodrigues - University of Liverpool (Chair)

Graeme A. Stewart - CERN

Jim Pivarski - Princeton University

Matthew Feickert - University of Wisconsin-Madison

Nikolai Hartmann - Ludwig-Maximilians-Universität Munich

Oksana Shadura - University of Nebraska-Lincoln

Sponsors

The event is kindly sponsored by

Plenary Session Monday

1

Welcome and workshop overview

Speaker: Eduardo Rodrigues (University of Liverpool (GB))

2

Level Up Your Python

This tutorial covers intermediate Python, dataclasses, errors, decorators, context managers, logging, debugging, profiling, and more. Participants are expected to have introductory Python knowledge, like basic syntax, function definitions, dicts, lists, and variables.

Speaker: Henry Fredrick Schreiner (Princeton University)

3

TheBureaucrat, a package to help you organize your work

In science we deal a lot with data acquisition and analysis. The process until we draw a conclusion is usually very complex, involving the acquisition of several different datasets and the application of many analysis procedures that in turn create new data. Moreover, we often want to repeat this procedure many times. This creates a lot of information that we have to store and keep well organized, which can be a time-consuming and very tedious process that we don't like but, sooner or later, cannot avoid. In this talk I present The Bureaucrat, a simple yet powerful Python package that helps with this organizational task transparently and easily. And, of course, it is cross-platform.

Speaker: Matias Senger (University of Zurich (CH))

4

Histograms as Objects: Tools for Efficient Analysis and Interactivity

Histograms are a pillar of analysis in High Energy Physics. Particle physicists use histograms to find new particles, measure their properties, and understand their data. For example, rare interactions are searched for by accumulating huge amounts of data and fitting bumps in histograms, since their probability of occurrence is low. The Scikit-HEP ecosystem provides a coordinated set of tools for histogramming. This talk discusses these histogramming packages, built on the histogram-as-an-object concept, with a focus on results, techniques, recent updates, and future directions. The boost-histogram library enables fast and efficient histogramming, while Hist adds useful features by using boost-histogram as a backend. A new library, uproot-browser, has been introduced that lets users browse and look inside a ROOT file entirely from the terminal.

Speakers: Aman Goel (University of Delhi), Jay Gohil (IRIS HEP Fellow)
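To make the histogram-as-an-object idea concrete, here is a minimal pure-Python sketch in which the bin edges, counts, and flow bins travel together in one object, so filling and adding histograms cannot silently mix incompatible binnings. The Hist1D class is hypothetical; it is not the boost-histogram or Hist API, which are far faster and more general.

```python
import bisect
from dataclasses import dataclass, field


@dataclass
class Hist1D:
    """Hypothetical 1D histogram: edges, counts and flow bins live in one object."""

    edges: list[float]
    counts: list[float] = field(default_factory=list)
    underflow: float = 0.0
    overflow: float = 0.0

    def __post_init__(self) -> None:
        # Start with empty bins unless counts were supplied explicitly.
        if not self.counts:
            self.counts = [0.0] * (len(self.edges) - 1)

    def fill(self, values, weight: float = 1.0) -> None:
        """Add each value to the bin [lo, hi) that contains it."""
        for x in values:
            i = bisect.bisect_right(self.edges, x) - 1
            if i < 0:
                self.underflow += weight
            elif i >= len(self.counts):  # includes x equal to the last edge
                self.overflow += weight
            else:
                self.counts[i] += weight

    def __add__(self, other: "Hist1D") -> "Hist1D":
        """Bin-by-bin sum; refuses to combine histograms with different binning."""
        if self.edges != other.edges:
            raise ValueError("cannot add histograms with different binning")
        return Hist1D(
            list(self.edges),
            [a + b for a, b in zip(self.counts, other.counts)],
            self.underflow + other.underflow,
            self.overflow + other.overflow,
        )
```

Adding two histograms with different edges raises an error instead of producing meaningless sums; that safety check is the main reason to treat histograms as objects rather than bare arrays of counts.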

5

PyHEP and the Climate Crisis [Cancelled]

What can we really do as high energy physicists in our day-to-day programming lives to reduce HEP carbon emissions? In my lightning talk, I will present the key challenges and potential solutions which would make a real difference to the climate crisis.

Speaker: Hannah Wakeling (McGill University)

16:00

BREAK

6

Uproot, Awkward Array, hist, Vector: from basics to combinatorics

This is an introduction to doing particle physics analysis with Scikit-HEP tools: Uproot, Awkward Array, hist, and Vector.

It starts at a basic level—exploring files, making plots—and ramps up to resolving e⁺e⁻e⁺e⁻, μ⁺μ⁻μ⁺μ⁻, and e⁺e⁻μ⁺μ⁻ final states in Higgs decays.

Speaker: Jim Pivarski (Princeton University)

7

Python in the Belle II experiment

The Belle II software framework, basf2, was newly designed to process the data taken by the Belle II experiment. Each data-processing task is performed by a single basf2 module, and the basf2 modules are configured in an ordered sequence called the basf2 path. All configuration of the basf2 modules and steering of the basf2 path is done via Python. Moreover, Python plugin packages have been developed to automate the calibration of the Belle II detector and to build pipelines of batch jobs. This talk covers the usage of Python in the Belle II experiment.

Speaker: Yo Sato (KEK IPNS)
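The module-and-path design described in this abstract can be illustrated with a toy pipeline in plain Python: each module performs one task on the event data, and the path runs the modules in the order they were added. The Module and Path classes and the calibrate/select functions are hypothetical stand-ins and do not reproduce basf2's actual API.

```python
from typing import Callable


class Module:
    """Hypothetical processing step: one task, configured with keyword parameters."""

    def __init__(self, name: str, func: Callable[[dict, dict], None], **params):
        self.name = name
        self.func = func
        self.params = params

    def event(self, data: dict) -> None:
        # Apply this module's task to one event, in place.
        self.func(data, self.params)


class Path:
    """Hypothetical ordered sequence of modules, executed once per event."""

    def __init__(self) -> None:
        self.modules: list[Module] = []

    def add(self, module: Module) -> "Path":
        self.modules.append(module)
        return self  # allow chaining

    def process(self, events: list[dict]) -> list[dict]:
        for data in events:
            for module in self.modules:  # order of addition = order of execution
                module.event(data)
        return events


def calibrate(data: dict, params: dict) -> None:
    data["energy"] *= params["factor"]


def select(data: dict, params: dict) -> None:
    data["selected"] = data["energy"] > params["threshold"]


path = Path().add(Module("Calibrate", calibrate, factor=2.0)).add(
    Module("Select", select, threshold=3.0)
)
events = path.process([{"energy": 1.0}, {"energy": 2.0}])
```

Because the selection module runs after the calibration module, it sees the calibrated energies; reordering the modules changes the result, which is why the path is an ordered sequence.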

8

Teaching Python the Sustainable Way: Lessons Learned at HSF Training

With thousands of new members joining the HEP community every year, it is of paramount importance to have training strategies that are efficient and sustainable at scale. The HEP Software Foundation (HSF) Training Working Group offers workshops with this goal in mind. We present our goals, technical setup, and experiences with regard to the most recent training events. The talk also aims to further improve the integration of HSF Training with the HEP community as a whole.

Speakers: Alexander Moreno Briceño (Universidad Antonio Nariño), Aman Goel (University of Delhi), Guillermo Antonio Fidalgo Rodriguez (University of Puerto Rico at Mayagüez)

9

Uhepp: Sharing plots in a self-contained format

The uhepp (universal high-energy physics plots) ecosystem defines a self-contained storage format that couples raw histogram data (like a TH1F) with the description of visual histogram stacks, styles, and labels. Since the raw histograms are retained and packaged in a single file, rebinning, recoloring, or merging of processes can be achieved easily at render time in a non-destructive way. The ecosystem is powered by the reference implementation in Python (uhepp on PyPI) that provides utility methods to create, save, and load uhepp plots, and can render plots with Matplotlib. This talk demonstrates how to build, save, and load a histogram. Traditionally, plots are shared in a "plot book" containing many histograms in a graphics format that does not allow extracting numerical values or modifying the binning or the plot composition. This talk introduces the online hub uhepp.org. The hub exposes a REST API to facilitate collaboration and sharing of plots via push and pull operations provided by the reference implementation in Python. The online hub offers an interactive preview of uploaded plots in the browser, removing the need for "plot books." Besides the publicly hosted uhepp.org hub instance, the project allows self-hosted deployments of the hub infrastructure.

Speaker: Frank Sauerburger (Albert Ludwigs Universitaet Freiburg (DE))
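Keeping the raw bin contents is what makes non-destructive rebinning possible at render time. A minimal sketch of merging every k adjacent bins, assuming each bin stores its count and its variance (sum of squared weights); rebin is a hypothetical helper, not part of the uhepp package.

```python
def rebin(edges: list[float], counts: list[float], variances: list[float], k: int):
    """Merge every k adjacent bins; returns new (edges, counts, variances).

    Hypothetical helper: requires the number of bins to be divisible by k.
    Variances (sums of squared weights) add linearly, just like counts.
    """
    nbins = len(counts)
    if nbins % k != 0:
        raise ValueError(f"{nbins} bins cannot be merged in groups of {k}")
    new_edges = edges[::k]  # every k-th edge, which includes the last edge
    new_counts = [sum(counts[i:i + k]) for i in range(0, nbins, k)]
    new_vars = [sum(variances[i:i + k]) for i in range(0, nbins, k)]
    return new_edges, new_counts, new_vars
```

Since the original arrays are left untouched, the renderer can rebin differently for each view without losing information.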

10

jacobi: Error propagation made easy

In HEP, we often use Monte Carlo simulation or bootstrapping to propagate errors in more complicated scenarios. However, standard error propagation could be used in most cases if it were easy to compute the derivatives of the mapping function. Jacobi is a new library that offers a very powerful, fast, easy-to-use, and robust numerical derivative calculator. In contrast to libraries that do error propagation with automatic differentiation, like the popular uncertainties library, Jacobi can compute derivatives for any analytical function, even if the function is opaque and calls into non-Python code. Jacobi is also completely non-intrusive, since it does not require one to replace the number and array types in the analysis with special number or array objects. In the talk, I show how to perform simple and more advanced error propagation with Jacobi.

Speaker: Hans Peter Dembinski (TU Dortmund)
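Standard (linear) error propagation only needs the Jacobian J of the mapping function: the output covariance is C_y = J C_x J^T. The sketch below computes J with plain central finite differences and applies that formula. It is a simplified stand-in for illustration, not Jacobi's own derivative algorithm or API, and the function names are hypothetical.

```python
def numerical_jacobian(f, x, rel_step=1e-6):
    """Central finite-difference Jacobian of f: R^n -> R^m at the point x."""
    y0 = f(x)
    jac = [[0.0] * len(x) for _ in y0]
    for j in range(len(x)):
        h = rel_step * max(abs(x[j]), 1.0)
        xp = list(x)
        xp[j] += h
        xm = list(x)
        xm[j] -= h
        yp, ym = f(xp), f(xm)
        for i in range(len(y0)):
            jac[i][j] = (yp[i] - ym[i]) / (2.0 * h)
    return y0, jac


def propagate(f, x, cov):
    """Linear error propagation: returns f(x) and C_y = J C_x J^T."""
    y, jac = numerical_jacobian(f, x)
    n = len(x)
    cov_y = [
        [
            sum(jac[i][a] * cov[a][b] * jac[k][b] for a in range(n) for b in range(n))
            for k in range(len(y))
        ]
        for i in range(len(y))
    ]
    return y, cov_y
```

For f(x) = x0 * x1 at x = (2, 3) with uncorrelated variances 0.01 and 0.04, the propagated variance is 3^2 * 0.01 + 2^2 * 0.04 = 0.25, i.e. an uncertainty of 0.5. The function f is only ever called as a black box, which mirrors the non-intrusive property described in the abstract.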

Plenary Session Tuesday

11

iminuit: fitting models to data

iminuit is a Pythonic wrapper to the MINUIT2 C++ library, which is part of the ROOT framework, but iminuit does not require ROOT to be installed. iminuit is a rather simple, low-level fitting library compared to zfit or pyhf, but its simplicity also makes it very flexible and easy to learn. The tutorial will cover how to do typical HEP fits with iminuit, typical pitfalls, and how to resolve them. In particular, we will show how to perform template fits with the new BarlowBeestonLite cost function implemented in iminuit.

Speaker: Hans Peter Dembinski (TU Dortmund)

12

Skyhook: Managing Columnar Data Within Storage

With the advent of high-speed network and storage devices like RDMA-enabled networks and NVMe SSDs, the fundamental bottleneck in data management systems has shifted from the I/O layer to the CPU layer, resulting in reduced scalability and performance. This issue is quite prominent in systems reading popular data formats like Parquet and ORC, which involve CPU-intensive tasks like decoding and decompression of data on the client. One solution to this problem is computational storage, where CPU-intensive tasks like decoding, decompression, and filtering are offloaded to, and distributed across, often underutilized storage server CPUs, recovering scalability and accelerating performance. We built Skyhook, a programmable data management system based on Apache Arrow and Ceph that enables offloading of query processing tasks to storage servers. Skyhook requires no modifications to Ceph and does not assume computational storage devices; instead, its unique design embeds query processing in Ceph objects. This approach makes adding query offloading capabilities to storage systems a breeze for practitioners. We use Skyhook to manage HEP datasets within storage, i.e., minimizing the creation of additional copies. The current release is deployed at the University of Nebraska and the University of Chicago and supports offloading of Nano Event filtering and projection queries. Our roadmap includes supporting joins with a distributed query execution framework that partitions Substrait query plans and distributes them for execution on clients, worker nodes, and storage objects. For execution, we plan to use Acero (the Arrow Compute Engine). For generating Substrait query plans, we plan to use Ibis. We are also collaborating with Argonne National Lab to extend Skyhook to other storage systems, such as the Mochi software-defined storage system, using RDMA for data transport to accelerate Skyhook query performance.

Speaker: Jayjeet Chakraborty

13

The Particle & DecayLanguage packages

An overview of the Particle & DecayLanguage packages is given. Particle provides a pythonic interface to the Particle Data Group particle data tables and particle identification codes, with extended particle information and extra goodies. DecayLanguage provides tools to parse so-called .dec decay files, and describe, manipulate and visualise decay chains. DecayLanguage also implements a language to describe and convert particle decays between digital representations, effectively making it possible to interoperate several fitting programs.

Speaker: Eduardo Rodrigues (University of Liverpool (GB))
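Particle identification codes follow the PDG Monte Carlo numbering scheme: the sign distinguishes particles from antiparticles, and for ordinary hadrons the last digit is 2J+1 while the preceding digits hold the quark content. A minimal sketch of decoding those digits by hand, covering only ordinary mesons and baryons (special cases such as the K0L code 130 are not handled); the function names are hypothetical and are not the Particle package's API, which handles the full scheme.

```python
from fractions import Fraction

# Digit names of the PDG scheme, from the least significant digit upwards.
_DIGITS = ("nJ", "nq3", "nq2", "nq1", "nL", "nr", "n")


def pdgid_digits(pdgid: int) -> dict[str, int]:
    """Split |pdgid| into the named digits n nr nL nq1 nq2 nq3 nJ."""
    value = abs(pdgid)  # the sign only marks antiparticles
    digits = {}
    for name in _DIGITS:
        digits[name] = value % 10
        value //= 10
    return digits


def is_meson(pdgid: int) -> bool:
    d = pdgid_digits(pdgid)
    return d["nq1"] == 0 and d["nq2"] != 0 and d["nq3"] != 0 and d["nJ"] != 0


def is_baryon(pdgid: int) -> bool:
    d = pdgid_digits(pdgid)
    return d["nq1"] != 0 and d["nq2"] != 0 and d["nq3"] != 0 and d["nJ"] != 0


def hadron_spin(pdgid: int) -> Fraction:
    """Total spin J from nJ = 2J + 1 (only meaningful for ordinary hadrons)."""
    if not (is_meson(pdgid) or is_baryon(pdgid)):
        raise ValueError(f"{pdgid} is not an ordinary hadron")
    return Fraction(pdgid_digits(pdgid)["nJ"] - 1, 2)
```

For example, 211 (pi+) decodes as a spin-0 meson and 2212 (proton) as a spin-1/2 baryon, with -2212 giving the same quantum numbers for the antiproton.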

14

PhaseSpace + DecayLanguage

PhaseSpace is a Python package used for simulations of n-body decays and uses TensorFlow as the computational backend.

During this lightning talk, I will present a new feature in PhaseSpace: importing and simulating decays created using DecayLanguage, a package used for describing decays of particles. This improvement makes it possible to simulate complex decays in PhaseSpace while simultaneously improving the interface between various packages in the Scikit-HEP and zfit ecosystems.

A brief overview of PhaseSpace and DecayLanguage will be given, as well as a demonstration of the new feature and the customizations that are available.

Speaker: Simon Thor (CERN)
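The elementary step of phase-space generation is a two-body decay in the parent rest frame, where each daughter has momentum p* = sqrt((M^2 - (m1 + m2)^2) * (M^2 - (m1 - m2)^2)) / (2M) and an isotropic direction. A pure-Python sketch of that single step; PhaseSpace itself generates full n-body decays with TensorFlow, and the function names below are hypothetical.

```python
import math
import random
from typing import Optional


def two_body_momentum(M: float, m1: float, m2: float) -> float:
    """Momentum of each daughter in the rest frame of a parent of mass M."""
    if M < m1 + m2:
        raise ValueError("decay is kinematically forbidden")
    return math.sqrt((M**2 - (m1 + m2) ** 2) * (M**2 - (m1 - m2) ** 2)) / (2 * M)


def two_body_decay(M: float, m1: float, m2: float, rng: Optional[random.Random] = None):
    """Return (E, px, py, pz) for both daughters, isotropic in the parent frame."""
    rng = rng if rng is not None else random.Random()
    p = two_body_momentum(M, m1, m2)
    cos_t = rng.uniform(-1.0, 1.0)  # uniform cos(theta) gives an isotropic direction
    sin_t = math.sqrt(1.0 - cos_t**2)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    px, py, pz = p * sin_t * math.cos(phi), p * sin_t * math.sin(phi), p * cos_t
    d1 = (math.hypot(p, m1), px, py, pz)
    d2 = (math.hypot(p, m2), -px, -py, -pz)  # back to back: total momentum is zero
    return d1, d2
```

The masses can be in any consistent unit (for example GeV). An n-body generator chains or generalises this step and boosts the daughters into the lab frame, which this sketch leaves out.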

15

Constructing HEP vectors and analyzing HEP data using Vector

Vector is a Python library for 2D, 3D, and Lorentz vectors, including arrays of vectors, designed to solve common physics problems in a NumPy-like way. Vector currently supports pure Python Object, NumPy, Awkward, and Numba-based (Numba-Object, Numba-Awkward) backends.

This talk will focus on introducing Vector and its backends to the HEP community through a data analysis pipeline. The session will build up from pure Python Object based vectors to Awkward based vectors, ending with a demonstration of Numba support. Furthermore, we will discuss the latest developments in the library's API and showcase some recent enhancements.

Speaker: Saransh Chopra (Cluster Innovation Centre, University of Delhi)
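At the core of such a library is a Lorentz-vector type whose sum and invariant mass behave like the physics quantities. A minimal, hypothetical pure-Python version (not Vector's API, which offers many coordinate systems and array backends) shows the idea for a pair of back-to-back massless leptons.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class LorentzVector:
    """Hypothetical minimal Lorentz vector in (px, py, pz, E) coordinates."""

    px: float
    py: float
    pz: float
    E: float

    def __add__(self, other: "LorentzVector") -> "LorentzVector":
        # Four-momenta add component by component.
        return LorentzVector(
            self.px + other.px, self.py + other.py, self.pz + other.pz, self.E + other.E
        )

    @property
    def pt(self) -> float:
        return math.hypot(self.px, self.py)

    @property
    def mass(self) -> float:
        m2 = self.E**2 - (self.px**2 + self.py**2 + self.pz**2)
        # Clamp tiny negative values caused by floating-point rounding.
        return math.sqrt(max(m2, 0.0))


mu1 = LorentzVector(0.0, 0.0, 45.0, 45.0)
mu2 = LorentzVector(0.0, 0.0, -45.0, 45.0)
dimuon_mass = (mu1 + mu2).mass
```

Two 45 GeV massless leptons moving back to back give an invariant mass of 90 GeV; array backends apply the same formulas to many vectors at once.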

16:00

BREAK

16

Matplotlib with HSF Training

Based on the Matplotlib for HEP workshop developed by HSF Training, we will present a short introduction to matplotlib and perform an HEP analysis using ATLAS and CMS open data in order to get publishable plots. We will also use mplhep, a matplotlib wrapper for easy plotting required in HEP. This tutorial aims to present a subset of the complete matplotlib training module and requires a beginner level understanding of Python. Some knowledge of Scikit-HEP modules is helpful but not essential.

Speaker: Alexander Moreno Briceño (Universidad Antonio Nariño)

17

Lessons learned converting a production-grade Python CMS analysis to distributed RDataFrame

The high-level and lazy programming model offered by RDataFrame has proven to be user-friendly while at the same time providing satisfactory performance for many HEP analysis use cases. With the addition of a Pythonic layer for automatic distribution of workloads to multiple machines, RDataFrame can easily serve as a Swiss Army knife for developing a full production-scale analysis with an ergonomic interface. This talk will present how a full-scale CMS analysis was translated from a traditional iterative approach with PyROOT to RDataFrame, and how this translation made it possible to automatically parallelize the analysis computations and merge their results. The analysis is benchmarked on a next-generation analysis facility developed in the context of INFN R&D activities, which provides users with a JupyterLab session and a built-in connection to HTCondor distributed resources accessible via the Dask Python library.

Speakers: Tommaso Tedeschi (Universita e INFN, Perugia (IT)), Vincenzo Eduardo Padulano (Valencia Polytechnic University (ES))

Social time: Tuesday Meet and Mingle

Get to know the other PyHEP participants better and help to reinforce our community.

We will be using the RemotelyGreen platform. Click the link below to join. Here are some tips to help you join and participate:

- Use a laptop or desktop.

- Join early to sign in and test your camera and mic. Make an account by connecting with LinkedIn or using an email and password (in this case, check for a verification email).

- You can choose your networking topics and set up your business card before the event begins. Choose as many of the event's topics as interest you.

- You can also specify your preferred networking topics at the start of the event and in between encounters. Fill in your business card to make follow-ups easier by clicking on your avatar or the username in the top-right corner of the screen.

- Wait for the session to begin - you'll be shuffled with other participants automatically. If you arrive late, you will be able to join at the next shuffle.

Attached is a short flyer with more details.

Plenary Session Wednesday

18

The SuperNova Early Warning System & Software for Studying Supernova Neutrinos

The SuperNova Early Warning System (SNEWS) connects different neutrino experiments to quickly alert the astronomy community once the next galactic supernova happens. Since 2019, it has been completely redesigned for the new era of multimessenger astronomy.

This talk will give an overview of SNEWS’ fully Python-based toolchain, covering communication of experiments with SNEWS, coincidence detection, and combined analyses like supernova triangulation. I will also introduce physics simulation software that SNEWS is developing for use by the broader supernova neutrino community.

Speaker: Jost Migenda (King’s College London)

19

Python Usage Within the LHCb Experiment

Expanding HEP datasets and analysis challenges continue to drive the development of software within the ecosystem. The LHCb experiment has seen many analysts make the change to Python-based analysis software tools, making use of many of the scikit and Scikit-HEP packages. Additional development of flavour-physics packages is spearheaded by many users within the collaboration. A broad overview of the tools and their specific applications is provided to encourage discussion and growth within the PyHEP community.

Speaker: Nathan Grieser (University of Cincinnati (US))

20

Basic Physics Analyses Implemented Using Apache Spark

Apache Spark is a very successful open-source tool for data processing. This talk will focus on the use of Spark and its DataFrame API in the context of HEP. We will go through a few demos of some simple and outreach-style analyses implemented using Jupyter notebooks and the Spark Python API (PySpark). We will wrap up with a short discussion of the key features in Spark and its ecosystem that can be useful for Physics analysis and what still needs improvements.

Speaker: Luca Canali (CERN)

21

Automatic Resource Management with Coffea and Work Queue for analysis workflows

In this notebook talk we will demonstrate the Coffea Work Queue executor for analysis workflows. Work Queue is a framework for building large-scale manager-worker applications. When used together with Coffea, it can measure the resources, such as cores and memory, that chunks of events need and adapt their allocations to maximize throughput. Further, we will demonstrate how the executor can dynamically modify the size of chunks of events when the available memory is not enough, and adapt it to the desired resource usage. We will introduce its basic use for small local runs, and how it can automatically export the needed Python environments when working in a cluster with no previous setup.

Speaker: Benjamin Tovar Lopez (University of Notre Dame)
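The chunk-resizing behaviour described in this abstract can be pictured with a toy loop: if a chunk's estimated memory exceeds the available budget, the chunk size is halved and the chunk is retried. This is a hypothetical sketch with a fixed per-event memory estimate standing in for measured usage; it is not the Coffea or Work Queue API, which measure resources at run time.

```python
def process_adaptively(
    n_events: int, chunk_size: int, memory_per_event_mb: float, memory_limit_mb: float
) -> list[tuple[int, int]]:
    """Split n_events into (start, stop) chunks that fit in memory_limit_mb.

    memory_per_event_mb is a hypothetical stand-in for measured resource usage.
    """
    done = []
    start = 0
    while start < n_events:
        stop = min(start + chunk_size, n_events)
        needed = (stop - start) * memory_per_event_mb
        if needed > memory_limit_mb:
            if chunk_size == 1:
                raise MemoryError("a single event does not fit in the memory budget")
            chunk_size = max(1, chunk_size // 2)  # retry this chunk with a smaller size
            continue
        done.append((start, stop))  # a real executor would run the chunk here
        start = stop
    return done


chunks = process_adaptively(1000, 400, 1.0, 250.0)
```

With 1 MB per event and a 250 MB budget, the initial 400-event chunks are too large, so the loop settles on 200-event chunks and processes the 1000 events in five pieces.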

16:00

BREAK

22

Data Management Package for the novel data delivery system, ServiceX, and its application to an ATLAS Run-2 Physics Analysis Workflow

Recent developments of HEP software in the Python ecosystem allow novel approaches to physics analysis workflows. The novel data delivery system, ServiceX, can be very effective when accessing large datasets at remote grid sites. ServiceX can deliver user-selected columns with filtering and run at scale. I will introduce the ServiceX data management package, ServiceX DataBinder, for easy manipulations of ServiceX delivery requests and delivered data using a single configuration file. I will also introduce the effort to integrate ServiceX and Coffea into an ongoing ATLAS Run-2 physics analysis.

Speaker: Kyungeon Choi (University of Texas at Austin (US))

23

EOS -- A software for Flavor Physics Phenomenology

EOS is an open-source software package for a variety of computational tasks in flavor physics. Its use cases include theory predictions within and beyond the Standard Model of particle physics, Bayesian inference of theory parameters from experimental and theoretical likelihoods, and simulation of pseudo events for a number of signal processes. EOS ensures high-performance computations through a C++ back-end and ease of use through a Python front-end. To achieve this flexibility, EOS enables the user to select from a variety of implementations of the relevant decay processes and hadronic matrix elements at run time. We describe the general structure of the software framework and provide basic examples. Further details and in-depth interactive examples are provided as part of the EOS online documentation.

Speaker: Danny van Dyk

24

Correctionlib

Correctionlib provides a well-structured JSON data format for a wide variety of ad-hoc correction factors encountered in a typical HEP analysis and a companion evaluation tool suitable for use in C++ and Python programs. The format is designed to be self-documenting and preservable, while the evaluator is designed to have good performance.

Speaker: Nick Smith (Fermi National Accelerator Lab. (US))
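Evaluating a binned correction amounts to a bin lookup in a table that is stored as data rather than code. A minimal sketch with a made-up, much simplified JSON layout: the name, edges, and values fields are assumptions and are not correctionlib's schema, and the scale-factor numbers are placeholders. Real analyses should load correctionlib's own format with its evaluator.

```python
import bisect
import json

# Hypothetical, simplified correction document (not correctionlib's schema).
CORRECTION_JSON = """
{
  "name": "muon_sf_vs_pt",
  "description": "placeholder scale factor binned in muon pT [GeV]",
  "edges": [20.0, 30.0, 50.0, 100.0],
  "values": [0.97, 0.99, 1.01]
}
"""


class BinnedCorrection:
    """Hypothetical 1D binned correction loaded from a JSON document."""

    def __init__(self, doc: dict):
        self.name = doc["name"]
        self.edges = doc["edges"]
        self.values = doc["values"]
        if len(self.values) != len(self.edges) - 1:
            raise ValueError("need exactly one value per bin")

    def evaluate(self, x: float) -> float:
        # Bins are half-open intervals [lo, hi); out-of-range inputs are errors.
        i = bisect.bisect_right(self.edges, x) - 1
        if not 0 <= i < len(self.values):
            raise ValueError(f"{x} is outside [{self.edges[0]}, {self.edges[-1]})")
        return self.values[i]


sf = BinnedCorrection(json.loads(CORRECTION_JSON))
```

Keeping the description next to the numbers is a small-scale version of the self-documenting goal stated in the abstract; a production format also has to define behaviour outside the binned range instead of simply raising.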

25

pyhepmc: a Pythonic interface to HepMC3

pyhepmc is a Pythonic frontend for the HepMC3 library and part of Scikit-HEP. It allows one to read/write HepMC3 records in various formats and to convert any other particle record to HepMC3. pyhepmc was originally proposed to become the official Python interface for HepMC3. HepMC3 eventually got an alternative Python interface which is an automatic translation of the C++ interface, while pyhepmc offers a hand-written interface with a Pythonic feel. Another advantage of pyhepmc is that it is listed on PyPI and can be easily installed with pip, thanks to Scikit-HEP releasing binary wheels for common platforms.

Speaker: Hans Peter Dembinski (TU Dortmund)

26

What's new in Python 3.11

Python 3.11 is coming out in October! We will look through the major features you can expect, such as results from the Faster CPython project, enhanced exceptions, new typing functionality, a very nice new asyncio feature, and several exciting enhancements sprinkled throughout the standard library. A couple of major changes are also coming in future versions, such as the removal of distutils (3.12) and UTF-8 by default (3.15); 3.11 already provides some assistance for those.

Speaker: Henry Fredrick Schreiner (Princeton University)

Social time: Thursday Meet and Mingle

Get to know the other PyHEP participants better and help to reinforce our community.

We will be using the RemotelyGreen platform. Click the link below to join. Here are some tips to help you join and participate:

- Use a laptop or desktop.

- Join early to sign in and test your camera and mic. Make an account by connecting with LinkedIn or using an email and password (in this case, check for a verification email).

- You can choose your networking topics and set up your business card before the event begins. Choose as many of the event's topics as interest you.

- You can also specify your preferred networking topics at the start of the event and in between encounters. Fill in your business card to make follow-ups easier by clicking on your avatar or the username in the top-right corner of the screen.

- Wait for the session to begin - you'll be shuffled with other participants automatically. If you arrive late, you will be able to join at the next shuffle.

Attached is a short flyer with more details.

Plenary Session Thursday

27

Analysis Optimisation with Differentiable Programming

This tutorial will cover how to optimise various aspects of analyses, such as cuts, binning, and learned observables like neural networks, using gradient-based optimisation. This has been made possible by work on the relaxed software package, which offers a set of standard HEP operations that have been made differentiable. In addition to targeting Asimov significance, we will also use the full analysis significance that incorporates systematic uncertainties as an optimisation objective. Finally, we will reproduce the neos method for learning systematic-aware observables, and you'll see how you can modify it for your use case.

Speaker: Mr Nathan Daniel Simpson (Lund University (SE))
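The core trick behind differentiable cuts is to replace a hard cut x > c by a sigmoid weight, so that the soft signal and background yields s and b, and hence the Asimov significance Z = sqrt(2((s + b) ln(1 + s/b) - s)), become differentiable with respect to c. A stdlib sketch of that idea with a hand-derived gradient (using dZ^2/ds = 2 ln(1 + s/b) and dZ^2/db = 2(ln(1 + s/b) - s/b)) rather than an automatic-differentiation framework; the function names and the toy data are hypothetical and do not come from relaxed or neos.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def soft_yield_and_grad(xs, cut, tau):
    """Soft count of events passing x > cut, and its derivative w.r.t. cut."""
    total, grad = 0.0, 0.0
    for x in xs:
        w = sigmoid((x - cut) / tau)
        total += w
        grad -= w * (1.0 - w) / tau  # d/dcut of sigmoid((x - cut) / tau)
    return total, grad


def asimov_z_and_grad(sig, bkg, cut, tau):
    """Asimov significance of the soft selection and its derivative dZ/dcut."""
    s, ds = soft_yield_and_grad(sig, cut, tau)
    b, db = soft_yield_and_grad(bkg, cut, tau)
    z = math.sqrt(2.0 * ((s + b) * math.log1p(s / b) - s))
    dz2_ds = 2.0 * math.log1p(s / b)
    dz2_db = 2.0 * (math.log1p(s / b) - s / b)
    return z, (dz2_ds * ds + dz2_db * db) / (2.0 * z)  # chain rule: dZ = dZ^2 / (2Z)


def optimise_cut(sig, bkg, cut=0.0, tau=0.5, lr=0.05, steps=200):
    """Plain gradient ascent on the soft-cut Asimov significance."""
    for _ in range(steps):
        _, grad = asimov_z_and_grad(sig, bkg, cut, tau)
        cut += lr * grad
    return cut


sig = [i / 10 for i in range(10, 41)]   # toy signal values of a discriminant
bkg = [i / 10 for i in range(-20, 21)]  # toy background values
best_cut = optimise_cut(sig, bkg)
```

A smaller tau makes the soft cut closer to a hard cut, at the price of a gradient that depends only on events very close to the cut value.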

28

Speeding up differentiable programming with a Computer Algebra System

In the ideal world, we describe our models with recognizable mathematical expressions and directly fit those models to large data samples with high performance. It turns out that this can be done by formulating our models with SymPy (a Computer Algebra System) and using its symbolic expression trees as templates for computational back-ends like JAX and TensorFlow. The CAS can in fact further simplify the expression tree, which results in speed-ups in the numerical back-end.

In this talk, we look at amplitude analysis as a case study and use the Python libraries of the ComPWA project to formulate and fit large expressions to unbinned, multidimensional data sets.

Speaker: Mr Remco de Boer (Ruhr University Bochum)

29

The pythia8 python interface

Pythia is one of the most widely used general-purpose Monte Carlo event generators in HEP. It has included a Python interface to the underlying C++ since v8.219, and the interface was redesigned to handle C++11 using pybind11 since v8.301, allowing users to generate a custom Python interface. This talk will showcase the power and flexibility of Pythia's default, simplified Python interface by presenting its basic features and walking through a simple event-generation and analysis workflow.

Speaker: Michael Kent Wilkinson (University of Cincinnati (US))

16:00

BREAK

30

End-to-end physics analysis with Open Data: the Analysis Grand Challenge

The IRIS-HEP Analysis Grand Challenge (AGC) provides an environment for investigating analysis methods in the context of a realistic physics analysis. It features an analysis task that captures all relevant workflow aspects encountered in LHC analyses, ranging from data delivery to statistical inference. By using publicly available Open Data, the AGC allows anyone interested to test different analysis approaches and implementations at scale.

This tutorial showcases a complete Python implementation of the AGC analysis task, making heavy use of Scikit-HEP libraries and coffea. It demonstrates how these libraries provide the required functionality and interfaces for an end-to-end analysis pipeline. This includes the organization of input datasets, columnar data processing, evaluation of systematic uncertainties, histogram creation, statistical model assembly and inference, alongside the relevant visualizations that a physicist running this pipeline requires.

Speaker: Alexander Held (University of Wisconsin Madison (US))

31

Awkward RDataFrame Tutorial

This Jupyter notebook tutorial will cover usage of Awkward Arrays within an RDataFrame.

In Awkward Array version 2, the ak.to_rdataframe function presents a view of an Awkward Array as an RDataFrame source. This view is generated on demand and the data is not copied. The column readers are generated based on the run-time type of the views. The readers are passed to a generated source derived from ROOT::RDF::RDataSource.

The ak.from_rdataframe function converts the selected columns into native Awkward Arrays.

The tutorial demonstrates examples of the column definition, applying user-defined filters written in C++, and plotting or extracting the columnar data as Awkward Arrays.

Speaker: Ianna Osborne (Princeton University)

32

Developing implicitly-parallel Python analysis tools for NOvA

The NOvA collaboration, together with a Dept. of Energy ASCR-supported SciDAC-4 project, has been exploring Python-based analysis workflows for HPC platforms. This research has focused on adapting machine-learning application workflows to highly parallel computing environments for neutrino-nucleon cross section measurements. This work accelerates scientific analysis and lowers the learning barriers to leveraging leadership computing platforms.

Users of these HPC workflows have often been required to have significant experience with parallel computing libraries and principles in addition to dedicated access to accounts and resource allocations at off-site HPC/Supercomputing centers such as NERSC and the Argonne Leadership Computing Facility. With the commissioning of the new Fermilab analysis cluster, which will provide dynamically provisioned pools of HPC resources, we are now exploring ways to improve the efficiency and approachability of Python-based analysis tools. These include data organizations using HDF5 and the PandAna analysis framework, both of which natively support highly data-parallel operations.

Our research enables fully data-parallel exploration, selection, and aggregation of neutrino data, which are the fundamental operations required for neutrino cross section analysis work in NOvA. These operations are executed on the analysis cluster through Jupyter notebook interfaces and have been demonstrated to achieve low execution latencies, which are highly compatible with interactive analysis time scales. We have developed a method for constructing large monolithic HDF5-based files, each of which represents an entire NOvA dataset, and we have demonstrated a speedup of more than a factor of 10 for basic event selection using this data representation, relative to equivalent multi-file composite representations of the datasets. We have developed a complete, implicitly-parallel analysis workflow with basic histogram operations and demonstrated its scalability using a realistic neutrino cross section measurement on the Perlmutter system at NERSC. These tools will enable real-time turnaround of more physics results for the NOvA collaboration and the wider HEP community.

Speaker: Derek Doyle (Colorado State University)

Hackashop

33

Intro talk - how to get your set-up to "hack around"

Speaker: Aman Goel (University of Delhi)

Hackashop

Plenary Session Friday

34

Dask Tutorial

Dask provides a foundation to natively scale Python libraries and applications. Dask collection libraries like dask.array and dask.dataframe mimic the ubiquitous APIs of NumPy and Pandas to parallelize and/or distribute NumPy-like and Pandas-like workflows. The dask.delayed collection supports parallelization of custom algorithms. In this tutorial we will introduce the core Dask collections, the concepts behind them (partitioned objects represented by task graphs), and Dask's distributed execution engine, which is compatible with common HEP batch compute systems. Finally, we will introduce recently developed Dask collections that support partitioned and distributed representations of awkward arrays and boost-histogram objects.

Speaker: Doug Davis
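The task-graph idea behind the Dask collections can be shown with a toy evaluator. It follows the convention of Dask's low-level graphs, a dict mapping keys to either literal values or tasks (tuples whose first element is a callable), but it supports only string keys and flat arguments, runs everything sequentially in one process, and run_graph is a hypothetical helper rather than part of Dask.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+
from operator import add


def _is_task(value) -> bool:
    """A task is a tuple whose first element is callable."""
    return isinstance(value, tuple) and len(value) > 0 and callable(value[0])


def run_graph(graph: dict, target: str):
    """Evaluate a dict-based task graph sequentially and return target's result.

    Task arguments that are strings naming other keys are replaced by those
    keys' results; everything else is passed through as a literal.
    """

    def is_key(arg) -> bool:
        return isinstance(arg, str) and arg in graph

    deps = {
        key: {arg for arg in value[1:] if is_key(arg)} if _is_task(value) else set()
        for key, value in graph.items()
    }
    results = {}
    # static_order() yields each key only after all of its dependencies.
    for key in TopologicalSorter(deps).static_order():
        value = graph[key]
        if _is_task(value):
            func, *args = value
            results[key] = func(*(results[a] if is_key(a) else a for a in args))
        else:
            results[key] = value
    return results[target]


# Two partitions of data, a per-partition reduction, and a final combine step.
graph = {
    "part-0": [1, 2, 3],
    "part-1": [4, 5, 6],
    "sum-0": (sum, "part-0"),
    "sum-1": (sum, "part-1"),
    "total": (add, "sum-0", "sum-1"),
}
total = run_graph(graph, "total")
```

In Dask, the two per-partition sums are independent and could run in parallel on different workers; only the final add has to wait for both.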

35

Uproot + Dask

This lightning talk will focus on introducing the new features in Uproot v5, with most focus on the newly-introduced uproot.dask function. The dask integration will be demoed through example workflows that explore all the new features of the uproot.dask function. Important options of the function's API like delaying the opening of files and variable chunk sizes will be demoed in the Jupyter notebook. The talk will also include updates and brief demos of the dask-awkward and awkward-pandas projects showing how they tie in with the Uproot update.

Speaker: Kush Kothari

36

Enabling Dask Interoperability with XRootD Storage Systems

This lightning talk will introduce fsspec-xrootd, a newly published middleware software package that allows Dask and other popular data analysis packages to directly access XRootD storage systems. These packages use the fsspec API as their storage backend, and fsspec-xrootd adds an XRootD implementation to fsspec. This means that, with fsspec-xrootd, users can access XRootD content within Dask itself without resorting to an external program. The talk will include an explanation of fsspec-xrootd as well as a demonstration in a Jupyter notebook.

Speaker: Scott Demarest (Florida Institute of Technology)

37

3D and VR Industrial Use Cases in Python

Why is Python a good choice for making digital twins for industry and research?

Through several practical use cases, the talk will present our experiences with 3D and Virtual Reality, all implemented in Python with the help of our 3D package "HARFANG 3D":

- Human factor study of a railway station in virtual reality

- Using an aircraft simulation sandbox for AI training

- Tele-operating a humanoid robot in VR

- A 2-way operation robotic digital twin in 3D

Use case #1, Railway station study

Duplicating a railway station in virtual reality to build a behavioral study, all implemented in Python.

- What are the benefits of the language here?

- Let's dive into the production workflow

- What is the protocol needed for a reliable scientific study?

Use case #2, Flight simulator sandbox

Using a Python aircraft simulation sandbox for trajectory visualization and AI reinforcement learning.

- How does the simulation sandbox work?

- A network interface to harness the simulation easily

- Demo

Use case #3, Pollen Robotics Reachy, in VR

The future of industry: tele-operating a humanoid robot in VR.

- Best way to read and decode a URDF file with PyBullet

- Inverse kinematics with PyBullet, again :)

- Teleoperation in VR

- Limitations and challenges

Use case #4, Poppy Ergo Jr, in 3D

How many lines of Python code are needed to create a digital twin? (Spoiler: 150 LOC)

- From the STL files to a realistic realtime 3D digital twin

- Piloting the robot in Python

- Compliance mode driving the 3D digital twin in realtime

Using simple diagrams, code snippets, photos and videos, the talk will demonstrate the inputs and outputs of these experiments, and how relevant Python is when it comes to implementing 3D and VR applications in the industrial and scientific fields.

TLDR;

Most of the talk is about virtual reality and 3D images, so visuals play a major role. We would like to show the audience that Python combined with HARFANG makes an excellent toolkit for building first-class VR experiences.

Speaker: Francois Gutherz -

38

Using C++ From Numba, Fast and Automatic

The scientific community using Python has developed several ways to accelerate Python code. One popular technology is Numba, a just-in-time (JIT) compiler that translates a subset of Python and NumPy code into fast machine code using LLVM. We have extended Numba’s integration with LLVM’s intermediate representation (IR) to enable the use of C++ kernels and connect them to Numba-accelerated code. Such a multilanguage setup is also commonly used to achieve performance or to interface with external bare-metal libraries. In addition, Numba users will be able to write performance-critical code in C++ and use it easily at native speed.

This work relies on the high-performance, dynamic bindings between Python and C++ provided by Cppyy, which is the basis of PyROOT's interfaces to C++ libraries. Cppyy uses Cling, an incremental C++ interpreter, to generate on-demand bindings of required entities and connect them with the Python interpreter. This environment is uniquely positioned to enable the use of C++ from Numba in a fast and automatic way.

In this talk, we demonstrate using C++ from Numba through Cppyy. We show our approach, which extends Cppyy to match the object typing and lowering models of Numba, and the necessary additions to the reflection layers to generate IR from Python objects. The uniform LLVM runtime allows optimizations such as inlining, which can in the future remove the C++ function call overhead. We discuss other optimizations, such as lazily instantiated C++ templates based on input data. The talk also briefly outlines the non-negligible JIT overhead introduced by Numba and possible ways to reduce it. Since this is built as a Cppyy extension, Numba supports all bindings automatically, without any user intervention.

Speaker: Baidyanath Kundu (Princeton University (US)) -

16:00

BREAK


39

zfit - binned fits and histograms

zfit is a scalable, pythonic model fitting library that aims at implementing likelihood fits in HEP. So far, its main functionality has focused on unbinned fits. With zfit 0.10, the concept of binning is introduced, allowing for binned datasets, PDFs and losses such as chi2 or binned likelihoods. All of these elements are interchangeable and convertible to their unbinned counterparts, and allow for an arbitrary mixture of both.

In this talk, we will introduce the binned part of zfit and its integration into the existing Scikit-HEP ecosystem.

Speaker: Jonas Eschle (University of Zurich (CH)) -
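A plain-NumPy sketch of the two kinds of binned loss named above, for observed counts n_i and model expectations nu_i: the Poisson negative log-likelihood sum_i (nu_i - n_i ln nu_i), with the constant ln(n_i!) term dropped, and the Pearson chi2 sum_i (n_i - nu_i)^2 / nu_i. This is not zfit's API; zfit's own binned losses may use a different variance convention or additional terms, and the function names here are hypothetical.

```python
import numpy as np


def binned_poisson_nll(observed, expected):
    """Binned Poisson negative log-likelihood, dropping the constant ln(n_i!) term.

    Assumes every expected count is strictly positive.
    """
    n = np.asarray(observed, dtype=float)
    nu = np.asarray(expected, dtype=float)
    return float(np.sum(nu - n * np.log(nu)))


def pearson_chi2(observed, expected):
    """Pearson chi2, using the model expectation as the per-bin variance."""
    n = np.asarray(observed, dtype=float)
    nu = np.asarray(expected, dtype=float)
    return float(np.sum((n - nu) ** 2 / nu))


# Usage: histogram a uniform sample and compare it with a flat model.
rng = np.random.default_rng(42)
counts, edges = np.histogram(rng.uniform(0.0, 10.0, size=1000), bins=10, range=(0.0, 10.0))
flat_model = np.full(len(counts), counts.sum() / len(counts))
nll_flat = binned_poisson_nll(counts, flat_model)
chi2_flat = pearson_chi2(counts, flat_model)
```

Both losses compare the same histogram to the same model; a fit would minimize either one over the model parameters that determine nu_i.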

40

abcd_pyhf: Likelihood-based ABCD method for background estimation and hypothesis testing with pyhf

The ABCD method is a common background estimation method used by many physics searches in particle collider experiments and involves defining four regions based on two uncorrelated observables. The regions are defined such that there is a search region (where most signal events are expected to be) and three control regions. A likelihood-based version of the ABCD method, also referred to as the "modified ABCD method", can be used even when there may be significant contamination of the control regions by signal events. Code for applying this method in an individual analysis has generally been developed using the RooFit and RooStats packages within the ROOT software framework. abcd_pyhf is a standalone implementation of this method utilizing pyhf, a pure-Python package providing the functionality of the statistical analysis tools available in RooFit/RooStats. This implementation does not make any assumptions about the underlying analysis and can thus be used or adapted in any analysis using the ABCD method. This talk will provide an overview of the likelihood-based ABCD method and present an example of how to use abcd_pyhf to obtain statistical results in such an analysis.

Speaker: Mason Proffitt (University of Washington (US)) -
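For reference, the simple (non-likelihood) ABCD estimate that the likelihood-based method generalizes can be written in a few lines. This sketch labels A as the search region and D as the control region diagonally opposite it (conventions vary between analyses), assumes the two observables are independent for background, and assumes no signal in B, C or D. It is not abcd_pyhf's code; the likelihood-based version replaces this closed form with a simultaneous fit over the four regions so that signal contamination can be accounted for.

```python
import math


def abcd_estimate(n_b, n_c, n_d):
    """Simple ABCD background estimate for the search region A.

    If the two observables are independent for background and the control
    regions contain no signal, N_A / N_B = N_C / N_D, so N_A = N_B * N_C / N_D.
    """
    estimate = n_b * n_c / n_d
    # Poisson uncertainties on the three control-region counts, propagated to first order.
    rel_unc = math.sqrt(1.0 / n_b + 1.0 / n_c + 1.0 / n_d)
    return estimate, estimate * rel_unc


est, unc = abcd_estimate(100, 200, 400)
```

With 100, 200 and 400 events in B, C and D, the predicted background in A is 50 events, with a relative uncertainty of sqrt(1/100 + 1/200 + 1/400), about 13%.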

41

Pyhf to Combine Converter

The use of statistical models to accurately represent and interpret data from high-energy physics experiments is crucial to gaining a better understanding of the results of those experiments. However, researchers use many different methods and models for these representations, and although they often produce results that are useful for everyone in the field of HEP, the results often deviate slightly between models, and that deviation is difficult to interpret. Fortunately, many statistical models use similar frameworks, as well as the same mathematics, so it is quite feasible to convert a model built in one environment to another. This allows results to be replicated more consistently across models, and gives deeper insight into certain differences in results between models. In addition, pyhf and Combine each offer unique tools and nuances within their respective models, and an easy conversion would allow someone who is familiar with one environment to develop the model there and then transfer it to the other in order to take advantage of both sets of tools. The Python script that I have developed performs this conversion successfully, and I have also verified mathematical agreement between the models. Even for complex models, the inferences made by pyhf and Combine agree to within 5% of the relative uncertainty of those inferences. Moreover, even for large models, the conversion takes less than 30 seconds.

Speaker: Mr Petey Ridolfi -
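To make the idea of such a conversion concrete, here is a hypothetical sketch (not the speaker's script) that turns a pyhf workspace dictionary with single-bin channels into a Combine counting-experiment datacard. Only sample yields and normsys modifiers are translated, the latter to asymmetric lnN entries; normfactors are assumed to correspond to Combine's signal strength r, all other modifier types are ignored, and the interpolation schemes of the two tools need not match exactly. The function names and the example workspace are made up.

```python
def _fmt(value):
    return f"{value:g}"


def to_datacard(workspace, signal_sample="signal"):
    """Convert a pyhf workspace dict (single-bin channels only) to a Combine datacard string."""
    channels = workspace["channels"]
    observed = {obs["name"]: obs["data"] for obs in workspace["observations"]}

    columns = []  # one (channel, sample, rate, normsys dict) entry per datacard column
    for channel in channels:
        for sample in channel["samples"]:
            if len(sample["data"]) != 1:
                raise ValueError("this sketch only handles single-bin channels")
            normsys = {m["name"]: m["data"] for m in sample["modifiers"] if m["type"] == "normsys"}
            columns.append((channel["name"], sample["name"], sample["data"][0], normsys))

    # Combine convention: signal processes get index <= 0, backgrounds > 0.
    process_ids, next_bkg = {}, 1
    for _, name, _, _ in columns:
        if name in process_ids:
            continue
        if name == signal_sample:
            process_ids[name] = 0
        else:
            process_ids[name] = next_bkg
            next_bkg += 1

    syst_names = []
    for _, _, _, normsys in columns:
        syst_names += [n for n in normsys if n not in syst_names]

    rule = "-" * 40
    lines = [
        f"imax {len(channels)}",
        f"jmax {len(process_ids) - 1}",
        f"kmax {len(syst_names)}",
        rule,
        "bin " + " ".join(ch["name"] for ch in channels),
        "observation " + " ".join(_fmt(observed[ch["name"]][0]) for ch in channels),
        rule,
        "bin " + " ".join(col[0] for col in columns),
        "process " + " ".join(col[1] for col in columns),
        "process " + " ".join(str(process_ids[col[1]]) for col in columns),
        "rate " + " ".join(_fmt(col[2]) for col in columns),
        rule,
    ]
    for name in syst_names:
        # pyhf normsys lo/hi factors become Combine's asymmetric "down/up" lnN syntax.
        values = [
            f"{_fmt(ns[name]['lo'])}/{_fmt(ns[name]['hi'])}" if name in ns else "-"
            for _, _, _, ns in columns
        ]
        lines.append(f"{name} lnN " + " ".join(values))
    return "\n".join(lines) + "\n"


workspace = {
    "channels": [
        {
            "name": "sr",
            "samples": [
                {"name": "signal", "data": [5.0],
                 "modifiers": [{"name": "mu", "type": "normfactor", "data": None}]},
                {"name": "background", "data": [50.0],
                 "modifiers": [{"name": "bkg_norm", "type": "normsys",
                                "data": {"hi": 1.1, "lo": 0.9}}]},
            ],
        }
    ],
    "observations": [{"name": "sr", "data": [53.0]}],
}
card = to_datacard(workspace)
```

A full converter would also have to handle multi-bin shapes, other modifier types and measurement configuration, which is where most of the real work lies.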

42

Scalable, Sparse IO with larcv

At the intersection of high energy physics, deep learning, and high performance computing there is a challenge: how can sparse and irregular datasets from high energy physics be read and written efficiently, and connected to Python and deep learning frameworks? In this lightning talk we present larcv, an open source tool built on HDF5 that enables parallel, scalable I/O for irregular datasets with simple Python access. This tool is highly optimized for reading sparse and irregular datasets at scales of up to tens of thousands of processes, with latency of milliseconds when combined with GPU computing.

Speaker: Corey Adams -
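The following is not larcv's API or file layout, but a generic NumPy sketch of one common way to store sparse, irregular events compactly: flat index and value arrays plus per-event offsets. Arrays like these map naturally onto HDF5 datasets, the storage layer the abstract says larcv builds on. The helper names and the toy "detector" are hypothetical.

```python
import numpy as np


def pack_sparse(events):
    """Flatten variable-length sparse events into (indices, values, offsets) arrays.

    Each event is an (indices, values) pair listing only its non-zero entries.
    Assumes at least one event.
    """
    counts = np.array([len(idx) for idx, _ in events], dtype=np.int64)
    offsets = np.zeros(len(events) + 1, dtype=np.int64)
    np.cumsum(counts, out=offsets[1:])  # offsets[i]:offsets[i+1] slices event i
    indices = np.concatenate([np.asarray(idx, dtype=np.int64) for idx, _ in events])
    values = np.concatenate([np.asarray(val, dtype=np.float32) for _, val in events])
    return indices, values, offsets


def unpack_dense(indices, values, offsets, i, size):
    """Rebuild event i as a dense array of length `size`, e.g. for a dense model."""
    start, stop = offsets[i], offsets[i + 1]
    dense = np.zeros(size, dtype=values.dtype)
    dense[indices[start:stop]] = values[start:stop]
    return dense


# Three events with 2, 0 and 3 non-zero pixels in a toy 10-pixel "detector".
events = [([1, 7], [0.5, 2.0]), ([], []), ([0, 3, 9], [1.0, 1.5, 4.0])]
indices, values, offsets = pack_sparse(events)
event2 = unpack_dense(indices, values, offsets, 2, size=10)
```

Because each event is a contiguous slice, a reader can fetch a batch of events with two offset lookups, which is what makes this layout convenient for parallel, partitioned reads.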

43

JetNet library for machine learning in high energy physics

Machine learning is becoming ubiquitous in high energy physics for many tasks, including classification, regression, reconstruction, and simulations. To facilitate development in this area, and to make such research more accessible and reproducible, we present the open source Python JetNet library, with easy-to-access, standardised interfaces for particle cloud datasets, implementations of HEP evaluation and loss metrics, and other useful tools for ML in HEP.

Speaker: Raghav Kansal (Univ. of California San Diego (US)) -
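As an illustration in the spirit of the evaluation metrics mentioned above (not JetNet's own code), this sketch computes the invariant mass of a particle-cloud jet from massless constituents given as (pt, eta, phi), and compares the jet-mass distributions of two jet samples with SciPy's 1D Wasserstein-1 distance. The function names and the toy jets are hypothetical.

```python
import numpy as np
from scipy.stats import wasserstein_distance


def jet_mass(pt, eta, phi):
    """Invariant mass of a jet built from massless constituents given (pt, eta, phi)."""
    pt, eta, phi = (np.asarray(a, dtype=float) for a in (pt, eta, phi))
    energy = np.sum(pt * np.cosh(eta))
    px = np.sum(pt * np.cos(phi))
    py = np.sum(pt * np.sin(phi))
    pz = np.sum(pt * np.sinh(eta))
    m2 = energy**2 - px**2 - py**2 - pz**2
    return float(np.sqrt(max(m2, 0.0)))  # clip tiny negative values from rounding


def w1_jet_mass(real_jets, generated_jets):
    """Wasserstein-1 distance between the jet-mass distributions of two jet samples.

    Each jet is a (pt, eta, phi) tuple of per-particle arrays.
    """
    real = [jet_mass(*jet) for jet in real_jets]
    gen = [jet_mass(*jet) for jet in generated_jets]
    return wasserstein_distance(real, gen)


# Toy jets: one single-particle jet (mass 0) and one back-to-back pair (mass 2).
single = ([1.0], [0.0], [0.0])
pair = ([1.0, 1.0], [0.0, 0.0], [0.0, np.pi])
distance = w1_jet_mass([single], [pair])
```

A distance of this kind is zero when the two mass distributions coincide and grows as a generative model's jets drift from the reference sample, which is why such metrics are useful for evaluating fast-simulation models.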

44

Workshop close-out

Speaker: Eduardo Rodrigues (University of Liverpool (GB))
