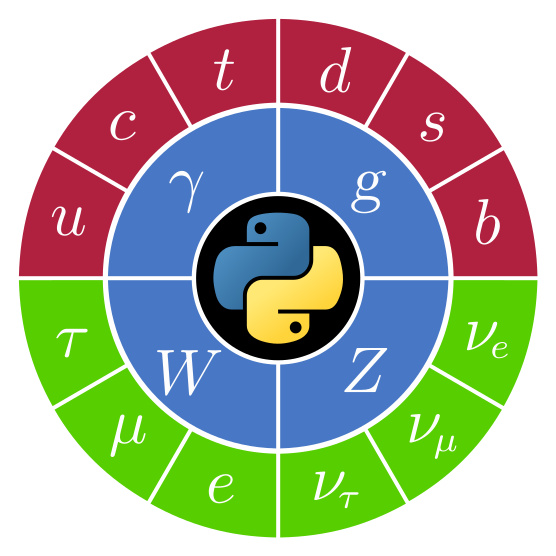

PyHEP 2023 - "Python in HEP" Users Workshop (online)

The PyHEP workshops are a series of workshops initiated and supported by the HEP Software Foundation (HSF) with the aim to provide an environment to discuss and promote the usage of Python in the HEP community at large. Further information is given on the PyHEP Working Group website.

PyHEP 2023 will be an online workshop. It will be a forum for the participants and the community at large to discuss developments of Python packages and tools, exchange experiences, and inform the future evolution of community activities. There will be ample time for discussion.

The agenda is composed of plenary sessions.

SSL BinderHub link: binderhub.ssl-hep.org

Organising Committee

Eduardo Rodrigues - University of Liverpool (Chair)

Graeme A. Stewart - CERN

Jim Pivarski - Princeton University

Matthew Feickert - University of Wisconsin-Madison

Nikolai Hartmann - Ludwig-Maximilians-Universität Munich

Oksana Shadura - University of Nebraska-Lincoln

Sponsors

The event is kindly sponsored by


Plenary Session Monday

Conveners: Eduardo Rodrigues (University of Liverpool (GB)), Oksana Shadura (University of Nebraska Lincoln (US))

1

Welcome and workshop overview

Speaker: Eduardo Rodrigues (University of Liverpool (GB))

2

Feynman diagrams in python: Revamping feynml and pyfeyn

feynml is a project to develop an XML dialect for describing Feynman diagrams as used in quantum field theory calculations. A primary goal is the creation of a clear and definitive XML representation of Feynman diagram structures, serving as a standard that can be effortlessly translated into diverse formats. Similarly to HTML/CSS, the physical/topological content is separated from the stylized representation. That graphical representation can be realized through pyfeyn2, which took the approach of pyfeyn and extended it to a broader range of render engines, namely matplotlib, PyX, TikZ, ASCII, Unicode, feynmp and the DOT language. The package allows for manual or automatic placement of vertices, enabling customization and flexibility from within a notebook.

The main difference from existing Mathematica-based solutions lies in the modular approach. This modularity enables easy interfacing with a range of tools. For instance, LHE and HepMC files can be visualized as Feynman diagrams through pyLHE and pyHEPMC. Additionally, theoretical computations are streamlined through interfaces such as the Python-based UFO standard and qgraf. Further, the transformation of diagrams into amplitudes is supported, which can be further processed with tools like FORM or SymPy. The projects are hosted on GitHub:

https://github.com/APN-Pucky/pyfeyn2

https://github.com/APN-Pucky/feynml

Speaker: Alexander Puck Neuwirth (Institute for Theoretical Physics at the University of Münster)

3

What's new with Vector? First major release is out!

Vector is a Python library for 2D, 3D, and Lorentz vectors, especially arrays of vectors, designed to solve common physics problems in a NumPy-like way. Vector currently supports pure Python Object, NumPy, Awkward, and Numba-based (Numba-Object, Numba-Awkward) backends.

Vector had its first major release shortly after PyHEP 2022, and there have been several new and exciting updates in and since v1.0.0, including, but not limited to, new constructors, better error handling, fewer bugs, and Awkward v2 support.

This lightning talk will cover the recent advancements in Vector and how it continues to integrate seamlessly, and stay up to date, with the rest of the Scikit-HEP ecosystem.

Speaker: Saransh Chopra (Cluster Innovation Centre, University of Delhi)
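As a rough illustration of the kind of arithmetic a Lorentz-vector library performs, the sketch below sums four-momenta and computes the invariant mass, m² = E² − |p|², in plain Python. It does not use Vector's API; the `FourMomentum` class and `invariant_mass` function are hypothetical stand-ins.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class FourMomentum:
    """Hypothetical minimal Lorentz vector in (px, py, pz, E) form, natural units."""
    px: float
    py: float
    pz: float
    E: float

    def __add__(self, other: "FourMomentum") -> "FourMomentum":
        # Four-vectors add component-wise.
        return FourMomentum(self.px + other.px, self.py + other.py,
                            self.pz + other.pz, self.E + other.E)

    @property
    def mass(self) -> float:
        # m^2 = E^2 - |p|^2; clamp tiny negative values from rounding to zero.
        m2 = self.E ** 2 - (self.px ** 2 + self.py ** 2 + self.pz ** 2)
        return math.sqrt(max(m2, 0.0))


def invariant_mass(vectors: List[FourMomentum]) -> float:
    """Invariant mass of the system formed by summing all four-momenta."""
    total = FourMomentum(0.0, 0.0, 0.0, 0.0)
    for v in vectors:
        total = total + v
    return total.mass


# Two back-to-back massless particles with 45.6 GeV each: system mass of about 91.2 GeV.
a = FourMomentum(0.0, 0.0, 45.6, 45.6)
b = FourMomentum(0.0, 0.0, -45.6, 45.6)
print(invariant_mass([a, b]))
```

A library like Vector applies the same formulas to whole arrays of vectors at once rather than looping over Python objects.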

4

A Generic Main Control Software Structure in a Distributed Data Acquisition Platform: D-Matrix

In addition to data acquisition, DAQ systems often incorporate monitoring and control tasks. Rather than developing custom control software for each experiment, a unified, abstract control-software architecture is desirable. The core of the generic control software is developed in Python and primarily comprises core functional groups and executors.

The core functional groups encompass the various functionalities required for system control, including user management, subsystem configuration and system operational control. The group related to system configuration interacts with the database. Because the D-Matrix system software is predominantly developed in C++, the operational control provided by the main controller requires interaction with C++; we have encapsulated and abstracted these interaction interfaces, primarily using ZeroMQ and Boost.Python. The core functional groups remain extensible: new functionality is added by scanning for Python files in specific working directories.

The core executors are of three types: a terminal console, a script executor and a web server. All three use the same command format to invoke functionality: 'group name + command name [+ parameters]'. The terminal console supports interactive command-line sessions or single-command execution. The script executor runs pre-written script files. The web server is developed with Flask and interacts with front-end web pages, which use the same command format to perform operations and retrieve response data. This diversity of executors gives the control software a wide range of application scenarios.

Speaker: Zhengyang Sun (University of Science and Technology of China)
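The uniform 'group name + command name [+ parameters]' format lends itself to a small registry-based dispatcher that a console, a script runner and a web endpoint could all share. The sketch below is a hypothetical illustration of that idea, not D-Matrix code: the `CommandRegistry` class, its `command` decorator and the example `run start` command are assumptions. In the system described above, groups are discovered by scanning Python files in working directories; here they are registered with a decorator for brevity.

```python
import shlex
from typing import Callable, Dict


class CommandRegistry:
    """Hypothetical registry mapping (group, command) to a Python callable."""

    def __init__(self) -> None:
        self._groups: Dict[str, Dict[str, Callable[..., str]]] = {}

    def command(self, group: str, name: str):
        """Decorator that registers a function under a group and command name."""
        def decorator(func: Callable[..., str]) -> Callable[..., str]:
            self._groups.setdefault(group, {})[name] = func
            return func
        return decorator

    def execute(self, line: str) -> str:
        """Parse 'group command [params...]' and invoke the matching function."""
        tokens = shlex.split(line)
        if len(tokens) < 2:
            raise ValueError("expected: <group> <command> [parameters]")
        group, name, *params = tokens
        try:
            func = self._groups[group][name]
        except KeyError:
            raise KeyError(f"unknown command: {group} {name}") from None
        return func(*params)


registry = CommandRegistry()


@registry.command("run", "start")
def start_run(run_number: str = "0") -> str:
    # A real controller would forward this to the C++ side (e.g. over ZeroMQ).
    return f"run {run_number} started"


# The same string could come from a console, a script file, or a web request.
print(registry.execute("run start 42"))
```

A script executor in this design would simply call `execute` on each non-empty line of a file, which is what makes a single command format convenient.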

5

General likelihood fitting with zfit & hepstats

Likelihood fits and inference are an essential part of most analyses in HEP. While some fitting libraries specialize in specific types of fits, zfit is a general model-fitting library.

This tutorial will introduce fitting with zfit and hepstats. The introduction will cover unbinned and binned model building, custom models, simultaneous fits and toy studies, covering most of the use cases in HEP. Furthermore, hepstats will be used for statistical inference: limit setting, significance and sWeights. Other libraries from Scikit-HEP will be touched upon for data loading, plotting, binning and minimization, all in the context of likelihood fits.

Speaker: Jonas Eschle (University of Zurich (CH))
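To make the unbinned/binned distinction concrete, the following plain-Python sketch evaluates both negative log-likelihoods for a Gaussian model. It does not use zfit's API; the function names and toy data are hypothetical, and a library such as zfit would build these losses and hand them to a minimizer. The binned version assumes every bin has a non-zero expected count and normalizes to the observed total.

```python
import math
from typing import Sequence


def gaussian_pdf(x: float, mu: float, sigma: float) -> float:
    """Normalized Gaussian probability density."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def gaussian_cdf(x: float, mu: float, sigma: float) -> float:
    """Gaussian cumulative distribution via the error function."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def unbinned_nll(data: Sequence[float], mu: float, sigma: float) -> float:
    """-sum(log f(x_i)): every event enters the likelihood individually."""
    return -sum(math.log(gaussian_pdf(x, mu, sigma)) for x in data)


def binned_nll(counts: Sequence[int], edges: Sequence[float],
               mu: float, sigma: float) -> float:
    """Poisson NLL summed over bins, dropping the constant log(n!) terms."""
    total = sum(counts)
    nll = 0.0
    for n, lo, hi in zip(counts, edges[:-1], edges[1:]):
        # Expected count = observed total times the model probability in the bin.
        expected = total * (gaussian_cdf(hi, mu, sigma) - gaussian_cdf(lo, mu, sigma))
        nll += expected - n * math.log(expected)
    return nll


data = [-0.3, 0.1, 0.4, -1.2, 0.8, 0.0, 1.1, -0.5]
edges = [-2.0, -1.0, 0.0, 1.0, 2.0]
counts = [1, 2, 4, 1]  # the same data filled into the four bins above

# Both losses prefer a Gaussian centred near the data over a shifted one.
print(unbinned_nll(data, 0.0, 1.0) < unbinned_nll(data, 2.0, 1.0))
print(binned_nll(counts, edges, 0.0, 1.0) < binned_nll(counts, edges, 2.0, 1.0))
```

The unbinned loss scales with the number of events, whereas the binned one scales with the number of bins, which is one reason both flavours are useful in practice.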


16:00

BREAK

Plenary Session Monday

Conveners: Eduardo Rodrigues (University of Liverpool (GB)), Matthew Feickert (University of Wisconsin Madison (US))

6

A Python-based Software Stack for the LEGEND Experiment

The LEGEND experiment is designed to search for the lepton-number-violating neutrinoless double beta decay of $^{76}$Ge. The collaboration has selected Python as the main programming language of its open-source primary software stack, which is used for everything from decoding digitizer data up to the highest-level analysis routines. Numba-based hardware acceleration of digital signal processing algorithms is provided through a package of potentially great interest for analyses of digitized data streams. Moreover, the framework can be of general interest for any kind of tier-based analysis flow, with customizable chains of algorithms (kernels) defined through JSON configuration files. The LEGEND file format is HDF5-based, to ensure compliance with FAIR data principles. Modern development practices for packaging, style checking, CI/CD and documentation have been adopted. In this talk, I will present an overview of the LEGEND software stack and discuss its role within the PyHEP community.

Speaker: Luigi Pertoldi (Technical University of Munich)
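The idea of a customizable chain of processing kernels defined in JSON can be sketched as follows. The configuration keys (`processors`, `name`, `args`) and the two kernels are hypothetical and do not reproduce the LEGEND configuration schema; the point is only that the order and parameters of the chain live in data, not in code.

```python
import json
from typing import Callable, Dict, List

Waveform = List[float]


def baseline_subtract(wf: Waveform, n_baseline: int) -> Waveform:
    """Subtract the mean of the first n_baseline samples."""
    baseline = sum(wf[:n_baseline]) / n_baseline
    return [x - baseline for x in wf]


def moving_average(wf: Waveform, window: int) -> Waveform:
    """Trailing moving average; the first window-1 samples average fewer points."""
    out = []
    running = 0.0
    for i, x in enumerate(wf):
        running += x
        if i >= window:
            running -= wf[i - window]
        out.append(running / min(i + 1, window))
    return out


# Registry of available kernels, looked up by the name used in the config.
KERNELS: Dict[str, Callable[..., Waveform]] = {
    "baseline_subtract": baseline_subtract,
    "moving_average": moving_average,
}


def run_chain(wf: Waveform, config_text: str) -> Waveform:
    """Apply the kernels listed in a JSON config, in order."""
    config = json.loads(config_text)
    for step in config["processors"]:
        wf = KERNELS[step["name"]](wf, **step.get("args", {}))
    return wf


CONFIG = """
{
  "processors": [
    {"name": "baseline_subtract", "args": {"n_baseline": 3}},
    {"name": "moving_average", "args": {"window": 2}}
  ]
}
"""

print(run_chain([10.0, 10.0, 10.0, 14.0, 18.0, 12.0], CONFIG))
# [0.0, 0.0, 0.0, 2.0, 6.0, 5.0]
```

Swapping, reordering or re-parameterizing kernels then only requires editing the JSON file; the Numba acceleration mentioned in the abstract would apply to the kernel bodies themselves.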

7

Tracking with Graph Neural Networks

Recent work has demonstrated that graph neural networks (GNNs) trained for charged particle tracking can match the performance of traditional algorithms while improving scalability. This project uses a learned clustering strategy: GNNs are trained to embed the hits of the same particle close to each other in a latent space, such that they can easily be collected by a clustering algorithm.

The project is fully open source and available at https://github.com/gnn-tracking/gnn_tracking/. In this talk, we will present the basic ideas while demonstrating the execution of our pipeline with a Jupyter notebook. We will also show how participants can plug in their own model.

Speaker: Kilian Adriano Lieret (Princeton University (US))
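The learned-clustering step can be illustrated with a simplified stand-in: once a network has placed hits of the same particle close together in latent space, hits connected by chains of neighbours closer than a radius are grouped into one track candidate. The sketch below implements this single-linkage grouping (comparable to DBSCAN with a minimum cluster size of one) with a union-find structure; the embeddings are hand-written, hypothetical values, and the project's actual clustering algorithm may differ.

```python
import math
from typing import Dict, List, Sequence


def cluster_embeddings(points: Sequence[Sequence[float]], radius: float) -> List[int]:
    """Label points so that neighbours closer than `radius` share a cluster.

    Clusters are the connected components of the radius graph
    (single-linkage clustering). Returns one integer label per point.
    """
    parent = list(range(len(points)))

    def find(i: int) -> int:
        # Path halving keeps the union-find trees shallow.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # O(n^2) pair loop: fine for a sketch, real pipelines use spatial indices.
    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            if math.dist(points[i], points[j]) < radius:
                parent[find(i)] = find(j)

    # Relabel roots as consecutive integers in order of first appearance.
    labels: Dict[int, int] = {}
    return [labels.setdefault(find(i), len(labels)) for i in range(len(points))]


# Hypothetical embeddings: hits 0-2 from one particle, hits 3-4 from another.
hits = [(0.0, 0.0), (0.1, 0.0), (0.2, 0.1), (5.0, 5.0), (5.1, 4.9)]
print(cluster_embeddings(hits, radius=0.5))  # [0, 0, 0, 1, 1]
```

The quality of the result depends almost entirely on the embedding: a well-trained network makes a simple geometric rule like this sufficient.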

8

Python and Fast Imperative Code: Lowering the Barriers

In a typical HEP data analysis process, a physicist explores data by loading large amounts of it into an interactive Python environment and performing various analyses on it. The results of the first analysis tell the physicist what the next steps should be. As a dynamically typed language, Python is ideal for this task. The downside is that Python is not very fast.

C++, as a statically typed language, is fast. It is well suited to writing the performance-critical components that speed things up, while Python is used to arrange and connect these components. Thus, at runtime, the physicist can rearrange these components interactively, without reloading the data.

We will look at a few examples of how to write your own analysis components and connect them via:

* Conversions of Awkward Arrays to and from RDataFrame (C++)

* Standalone cppyy (C++)

* Passing Awkward Arrays to and from Python functions compiled by Numba

* Passing Awkward Arrays to Python functions compiled for GPUs by Numba

* Header-only libraries for populating Awkward Arrays from C++ without any Python dependencies

We will also introduce Awkward Arrays in Julia via the recently developed PyJulia/PyCall.jl-based bridges.

Speaker: Ianna Osborne (Princeton University)


Plenary Session Tuesday

Conveners: Dr Graeme A Stewart (CERN), Oksana Shadura (University of Nebraska Lincoln (US))

9

Pyg4ometry : a python package to manipulate Monte Carlo geometry

Creating, manipulating, editing and validating detector or accelerator geometry for Monte Carlo codes such as Geant4, FLUKA, MCNP and PHITS is a time-consuming and error-prone process. Diverse tools for typical workflows are available, but rarely within a single coherent package. Pyg4ometry is a Python-based code to manipulate geometry, mainly for Geant4 but also for FLUKA and soon MCNP and PHITS. Pyg4ometry allows the conversion of geometry between different codes and CAD files. Pyg4ometry can act as a validator, checking for common geometry issues that prevent MC code operation, e.g. overlaps. Pyg4ometry uses Python as an effective parametric scripting language for the creation or editing of geometry. Pyg4ometry is also an effective compositor, allowing the creation of detectors whose geometry comes from a diversity of sources. Pyg4ometry makes heavy use of the Computational Geometry Algorithms Library (CGAL), the Visualization Toolkit (VTK) and Open CASCADE Technology (OCCT), all accessed in Python via pybind11. Pyg4ometry originated in accelerator background simulation, where there is limited person-power for geometry creation and rapid prototyping is important.

Speaker: Prof. Stewart Takashi Boogert (Royal Holloway, University of London)
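Overlap validation can be illustrated with a much simpler check than a real geometry validator performs. A complete check has to intersect the actual solids; the hypothetical sketch below only flags pairs of daughter volumes whose axis-aligned bounding boxes intersect, the kind of cheap pre-filter that decides which pairs deserve a detailed test. The `Box` type, volume names and dimensions are invented for illustration and are not pyg4ometry's API.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import List, Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class Box:
    """Hypothetical axis-aligned bounding box of a placed volume (units: mm)."""
    name: str
    lo: Point
    hi: Point


def boxes_overlap(a: Box, b: Box, tolerance: float = 0.0) -> bool:
    """True if the boxes intersect by more than `tolerance` along every axis."""
    return all(min(a.hi[k], b.hi[k]) - max(a.lo[k], b.lo[k]) > tolerance
               for k in range(3))


def candidate_overlaps(boxes: List[Box], tolerance: float = 0.0) -> List[Tuple[str, str]]:
    """Pairs of volumes whose bounding boxes intersect and need a detailed check."""
    return [(a.name, b.name) for a, b in combinations(boxes, 2)
            if boxes_overlap(a, b, tolerance)]


daughters = [
    Box("absorber", (0, 0, 0), (100, 100, 10)),
    Box("scintillator", (0, 0, 10), (100, 100, 15)),  # touching faces: no overlap
    Box("support", (90, 90, 12), (120, 120, 20)),     # pokes into the scintillator
]
print(candidate_overlaps(daughters))  # [('scintillator', 'support')]
```

Because bounding boxes enclose their solids, a pair that passes this test can still be overlap-free, but a pair that fails it certainly is.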

10

Executing Analysis Workflows at Scale with Coffea+Dask+TaskVine

During this talk I will present our experiences executing analysis workflows on thousands of cores. We use TaskVine, a general-purpose task scheduler for large-scale, data-intensive, dynamic Python applications, to execute the task graph generated by Coffea+Dask. As task data becomes available, TaskVine adapts the cores and memory it allocates to maximize throughput and minimize retries. Additionally, TaskVine tries to minimize data movement by temporarily and aggressively caching data at the compute nodes. TaskVine executes these workflows without any prior setup on the compute nodes, as it dynamically delivers the dependencies using a conda-based environment file.

Speaker: Benjamin Tovar Lopez (University of Notre Dame)
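Coffea+Dask hands the scheduler a task graph. The toy executor below runs a graph written in the dask-style dict convention (key mapped to `(callable, *args)`, where arguments may name other keys) in dependency order and caches every result, loosely mirroring how a scheduler can reuse data already present on a node instead of recomputing or moving it. It is a hypothetical, single-process illustration; `execute_graph` and `load` are invented names, and this is not TaskVine's scheduling algorithm.

```python
from typing import Any, Dict, Hashable, Optional


def _is_key(graph: Dict[Hashable, Any], obj: Any) -> bool:
    """True if `obj` names a task in the graph (unhashable objects never do)."""
    try:
        return obj in graph
    except TypeError:
        return False


def execute_graph(graph: Dict[Hashable, Any], target: Hashable,
                  cache: Optional[Dict[Hashable, Any]] = None) -> Any:
    """Evaluate `target` in a dask-style task graph, memoizing every result.

    A value is a task if it is a tuple whose first element is callable;
    arguments that are keys of the graph are replaced by their computed values.
    """
    if cache is None:
        cache = {}
    if target in cache:
        return cache[target]  # reuse a cached result instead of recomputing it

    task = graph[target]
    if isinstance(task, tuple) and task and callable(task[0]):
        func, *args = task
        resolved = [execute_graph(graph, a, cache) if _is_key(graph, a) else a
                    for a in args]
        result = func(*resolved)
    else:
        result = task  # literal data stored directly in the graph
    cache[target] = result
    return result


calls = []  # records which (hypothetical) chunks were actually loaded


def load(i: int) -> list:
    """Hypothetical loader standing in for reading one chunk of events."""
    calls.append(i)
    return list(range(i * 3, i * 3 + 3))


graph = {
    "chunk-0": (load, 0),
    "chunk-1": (load, 1),
    "sum-0": (sum, "chunk-0"),
    "sum-1": (sum, "chunk-1"),
    "total": (lambda a, b: a + b, "sum-0", "sum-1"),
}

cache: Dict[Hashable, Any] = {}
print(execute_graph(graph, "total", cache))  # 15
print(execute_graph(graph, "total", cache))  # 15 again, served from the cache
print(calls)  # [0, 1]: each chunk was loaded only once
```

A distributed scheduler adds what this sketch leaves out: running independent tasks concurrently on many workers, sizing resources per task, and deciding where cached outputs live.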

11

PocketCoffea: a configuration layer for CMS analyses with Coffea

A configuration layer for the analysis of CMS data in the NanoAOD format is presented. The framework is based on the columnar analysis of proton-proton collision events with the Coffea Python package and it focuses on configurability and reproducibility of analysis tasks.

All the operations needed to extract the relevant information from events are performed by a Coffea processor object that takes the NanoAOD events as input and returns a set of output histograms or arrays.

PocketCoffea defines a configuration scheme to specify all the parameters and settings of the processor: the dataset definitions, object and event selections, Monte Carlo weights, systematic uncertainties and the characteristics of the output histograms. The configuration layer is user-friendly and speeds up the setup of many common analysis tasks.

A structured processor performing operations that are common among CMS analyses is defined and can be customized with derived processor classes, allowing code sharing between different analysis workflows in a hierarchical structure.

With its configurable structure, PocketCoffea is a suitable tool to perform any CMS analysis in a highly reproducible, computationally efficient and user-friendly way.

Speaker: Matteo Marchegiani (ETH Zurich (CH))


16:00

BREAK

Plenary Session Tuesday

Conveners: Eduardo Rodrigues (University of Liverpool (GB)), Dr Graeme A Stewart (CERN)

12

RDataFrame: interactive analysis at scale by example

With the increasing dataset sizes of current LHC data analysis workflows, and future expectations of even greater computational needs, data analysis software must strive to optimise processing throughput on a single core and to distribute tasks efficiently across multiple cores and computing nodes. RDataFrame, the high-level interface for data analysis offered by ROOT, natively supports distributing Python applications seamlessly across a plethora of computing cluster deployments via the Spark and Dask execution engines. This contribution reports known use cases of distributed RDataFrame at scale on different deployments, including resources at CERN and elsewhere. In particular, it focuses on the user-experience aspect of distributed RDataFrame, showing how notable functionality for interactive workflows is included natively in the tool, for example the possibility to visualise in real time the events processed by the distributed tasks.

Speaker: Dr Vincenzo Eduardo Padulano (CERN)

13

Self-driving Telescope Schedules: A Framework for Training RL Agents based on Telescope Data

Reinforcement Learning (RL) is becoming one of the most effective paradigms of Machine Learning for training autonomous systems.

In the context of astronomical observational campaigns, this paradigm can be used to train autonomous telescopes able to optimize sequential schedules based on a given scientific reward, avoiding manual optimization, which may result in policies with suboptimal performance due to the complexity of the problem.

This work presents a Python software framework, under development, that can be used to train RL algorithms on any offline dataset containing simulated or real interactions between a telescope and the sky. The dataset should contain a discrete set of sky sites to be visited, and the RL algorithms will optimize the schedule of these sites, yielding a telescope able to change its pointing from site to site in order to maximize a cumulative reward based on observation variables included in the dataset.

Given a dataset respecting a predefined format required by the framework, together with some experiment configurations, a training buffer adapts the dataset to act as an environment. Several RL algorithms for discrete action spaces will be supported, all based on the PTAN (PyTorch AgentNet) library.

The framework enables several well-known features, including the holdout method and K-fold cross-validation with splitting strategies adapted for Modified Julian Days, as well as data preprocessing strategies such as normalization, observation-space reduction and missing-value handling.

Rendering options are available to visualize the autonomous telescope's decisions through time, ensuring interpretability, together with visualizations of metrics to monitor performance.

Several other features are embedded in the framework, such as hyperparameter optimization based on the Optuna library, seed control, and multiple parallel training environments.

The framework will enable the monitoring of multiple metrics throughout the training of the RL agent, and a dedicated testing module will use a predefined metric to compare agents and to visualize the transitions within each agent's schedule.

Speaker: Franco Terranova (University of Pisa, Fermi National Accelerator Laboratory)
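One plausible reading of "splitting strategies adapted for Modified Julian Days" is to keep all observations from the same day together, so that an agent is never validated on a day it partly saw during training. The sketch below implements that reading by grouping records on the integer part of the MJD (i.e. the UTC day) and assigning contiguous blocks of days to folds. The `mjd_kfold` function and the timestamps are hypothetical; this is not the framework's implementation.

```python
import math
from typing import Dict, Iterator, List, Sequence, Tuple


def mjd_kfold(mjds: Sequence[float], k: int) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) for k folds grouped by integer MJD.

    All records sharing floor(MJD) land in the same fold, and each fold is a
    contiguous block of days, so no day is split between training and testing.
    """
    days: Dict[int, List[int]] = {}
    for idx, mjd in enumerate(mjds):
        days.setdefault(math.floor(mjd), []).append(idx)

    ordered_days = sorted(days)
    if k < 2 or k > len(ordered_days):
        raise ValueError("k must be between 2 and the number of distinct days")

    # Spread days over folds as evenly as possible (the first folds get any extras).
    base, extra = divmod(len(ordered_days), k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test_days = set(ordered_days[start:start + size])
        start += size
        test = [i for d in ordered_days if d in test_days for i in days[d]]
        train = [i for d in ordered_days if d not in test_days for i in days[d]]
        yield train, test


# Hypothetical observation timestamps covering four days.
observations = [60000.1, 60000.4, 60001.2, 60002.3, 60002.9, 60003.5]
for train, test in mjd_kfold(observations, k=2):
    print("train:", train, "test:", test)
```

Contiguous blocks also limit leakage from slowly varying conditions (weather, moon phase) between neighbouring days; a random assignment of days to folds would be a reasonable alternative design.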

14

pymcabc: A Particle Physics Toy Toolbox for the ABC Model

We present the pymcabc software, a High Energy Physics toy toolkit for the ABC model. The ABC model is a pedagogical model consisting of three scalar particles of arbitrary masses. The only interaction among these particles occurs when all three of them are present together. The pymcabc software can calculate all the leading-order cross-sections as well as decay widths within the ABC model. The software can be used as an event generator to simulate all the scattering processes within the ABC model. Moreover, it simulates the decays associated with heavy-particle final states, leading to $2\rightarrow 3$ or $2\rightarrow 4$ final states within the ABC model. We also apply toy Gaussian detector effects to simulate the response of a toy tracker for three-momentum measurements and a toy calorimeter for energy measurements. Using the results of pymcabc, we also illustrate some well-known physics analysis techniques, such as the analysis of the lineshape of a heavy propagator and recoil mass reconstruction.

Speaker: Aman Desai
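Recoil mass reconstruction can be illustrated in a few lines. In a collision with known centre-of-mass energy √s and zero total momentum, the mass of an unobserved system recoiling against a measured particle with energy E and momentum p satisfies m² = (√s − E)² − |p|². The sketch below applies this formula, together with a toy Gaussian smearing of the momentum in the spirit of the toy tracker described above. The masses, resolution and function names are hypothetical, and this is not pymcabc's code.

```python
import math
import random
from typing import Tuple

Vec3 = Tuple[float, ...]


def recoil_mass(sqrt_s: float, energy: float, momentum: Vec3) -> float:
    """Mass recoiling against one measured particle, in the centre-of-mass frame.

    m_X^2 = (sqrt_s - E)^2 - |p|^2, since the initial state has zero total momentum.
    """
    p2 = sum(c * c for c in momentum)
    m2 = (sqrt_s - energy) ** 2 - p2
    return math.sqrt(max(m2, 0.0))


def smear_momentum(momentum: Vec3, rel_resolution: float, rng: random.Random) -> Vec3:
    """Toy tracker: Gaussian smearing of each component by a relative resolution."""
    return tuple(c * rng.gauss(1.0, rel_resolution) for c in momentum)


# Hypothetical 2 -> 2 event at sqrt(s) = 100: an observed particle of mass 10
# produced together with an unobserved particle of mass 50.
sqrt_s, m_obs, m_rec = 100.0, 10.0, 50.0
# Two-body kinematics fix the observed particle's energy and momentum magnitude.
e_obs = (sqrt_s ** 2 + m_obs ** 2 - m_rec ** 2) / (2 * sqrt_s)
p_obs = math.sqrt(e_obs ** 2 - m_obs ** 2)
momentum = (0.0, 0.0, p_obs)

print(recoil_mass(sqrt_s, e_obs, momentum))  # close to 50.0
rng = random.Random(1)
smeared = smear_momentum(momentum, 0.01, rng)
print(recoil_mass(sqrt_s, e_obs, smeared))   # near 50, shifted by the smearing
```

Repeating the smeared measurement over many events and histogramming the result gives a recoil-mass peak whose width reflects the assumed detector resolution.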

15

Bridging RooFit and SymPy with applications in amplitude analysis

RooFit is a C++ library for statistical modelling and analysis. It is part of the ROOT framework and also provides Python bindings. RooFit provides some basic building blocks to construct probability density functions for data modelling. However, in some application areas, the analytical physics-driven shapes for data modelling have become so complicated that they can't be covered by a relatively low-level tool like RooFit. One of these application areas is partial wave analysis (also known as amplitude analysis), where the model shapes are motivated by quantum field theory. These models are complicated enough to warrant separate frameworks for building them, such as the Python packages maintained by the ComPWA organization. These tools often formulate the models with SymPy, a Python library for symbolic mathematics. Since SymPy is able to export formulae as C++ code, it is natural to use these formulae in the RooFit ecosystem, especially for the well-established statistical analysis procedures implemented in ROOT.

This contribution highlights some recent developments in RooFit to support complex mathematical expressions formulated in SymPy. This includes the evaluation of the corresponding likelihood functions on the GPU, as well as the generation of analytic gradient code with automatic differentiation (AD) tools like Clad. This development is very relevant for RooFit users who also want to use modern amplitude analysis codes written in Python.

Speaker: Jonas Rembser (CERN)

16

A Framework for Data Simulation and Analysis of the BabyCal Electromagnetic Calorimeter

This research introduces an automated system capable of efficiently simulating, translating, and analyzing high-energy physics (HEP) data. By leveraging HEP data simulation software, computer clusters, and cutting-edge machine learning algorithms, such as convolutional neural networks (CNNs) and autoencoders, the system effectively manages a dataset of approximately 10,000 entries.

Using the framework, we generated simulated data of muons and antimuons and implemented CNNs and autoencoders to analyze the data. The experimental results showed that autoencoders were able to reconstruct muons with accuracies of up to 97%. This work is a starting point for a helpful data analysis tool to aid researchers in their investigations.

Speaker: Daniel Hebel (Federico Santa Maria Technical University (CL))


Plenary Session Wednesday

Conveners: Matthew Feickert (University of Wisconsin Madison (US)), Nikolai Hartmann (Ludwig Maximilians Universitat (DE))

17

pyhf tutorial and exploration

As pyhf continues to be developed and its user community has grown significantly, both in users and in subfields of physics, the needs of the user base have begun to expand beyond simple inference tasks. In this tutorial we will cover some recent features added to pyhf as well as give a short tour of possible example use cases across high energy collider physics, flavour physics, particle physics theory, and Bayesian analysis.

Speaker: Matthew Feickert (University of Wisconsin Madison (US))

18

Comparative benchmarks for statistical analysis frameworks in Python

The statistical models used in modern HEP research are independent of their specific implementations. As a consequence, many different tools have been developed to perform statistical analyses in HEP. These implementations differ both in their performance and in their usability. In this scenario, comparative benchmarks are essential to help users choose a library and to support developers in resolving bottlenecks or usability shortcomings.

This contribution showcases a comparison of some of the most widely used tools for statistical analysis. The Python package pyhf focuses on template histogram fits, which are the backbone of many analyses at the ATLAS and CMS experiments. In contrast, zfit mainly focuses on unbinned fits to analytical functions. The third tool, RooFit, is part of the ROOT analysis framework, implemented in C++ but with Python bindings. RooFit can perform both template histogram and unbinned fits and is thus compared to both other packages.

To benchmark pyhf and RooFit, the "Simple Hypothesis Testing" example from the pyhf tutorial is used. The complexity of this example is scaled up by increasing the number of bins and measurement channels. In the benchmark of zfit and RooFit, the same unbinned fits are used as in the original zfit paper.

In all frameworks, the minimizer Minuit2 is used with identical settings, so that the comparison isolates the different likelihood evaluation backends. While working on these benchmarks, we were in contact with developers of all three benchmarked frameworks. This talk will focus on the results of the comparative benchmarks between the different frameworks.

Speaker: Daniel Werner

19

HEP in the browser using JupyterLite and Emscripten-forge

When enumerating the environments in which HEP researchers perform their analyses, the browser may not be the first that comes to mind. Yet recent innovations in the tooling behind conda-forge, Emscripten and WebAssembly have made it easier than ever to deploy complex, multiple-dependency environments to the web.

An introduction will be given to the technologies that make this possible, followed by a demonstration of deploying a HEP tutorial analysis to GitHub Pages. Emphasis will be placed on the importance of porting HEP packages with compiled code so that they can leverage these new features.

Speaker: Angus Hollands (Princeton University)


16:00

BREAK

Plenary Session Wednesday

Conveners: Eduardo Rodrigues (University of Liverpool (GB)), Jim Pivarski (Princeton University)

20

Bayesian and Frequentist Methodologies in Collider Physics with pyhf

Collider physics analyses have historically favored frequentist statistical methodologies, with some exceptions of Bayesian inference in LHC analyses through the Bayesian Analysis Toolkit (BAT). To enable advanced Bayesian methodologies for binned statistical models based on the HistFactory framework, which is often used in high-energy physics, we developed the Python package Bayesian_pyhf. It allows for the evaluation of models built using pyhf, a pure-Python implementation of the HistFactory framework, with the Python library PyMC. Based on Markov chain Monte Carlo (MCMC) methods, PyMC enables Bayesian modeling and, together with the ArviZ library, offers a wide range of Bayesian analysis tools. Using the frequentist analysis methodologies already present in pyhf, we demonstrate how Bayesian_pyhf can be used for the parallel Bayesian and frequentist evaluation of binned statistical models within the same framework.

Speaker: Malin Elisabeth Horstmann (Technische Universitat Munchen (DE))
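As a minimal illustration of what an MCMC sampler does with a HistFactory-style model, the sketch below draws posterior samples of a signal strength μ for a single-bin counting experiment, n ~ Poisson(μs + b), with a flat prior on μ ≥ 0 and b > 0 assumed, using a random-walk Metropolis algorithm. The numbers and function names are hypothetical; PyMC uses more sophisticated samplers (such as NUTS), and Bayesian_pyhf works with full pyhf models rather than a single bin.

```python
import math
import random
from typing import List


def log_posterior(mu: float, n_obs: int, s: float, b: float) -> float:
    """Log posterior up to a constant: Poisson(n_obs | mu*s + b) times a flat prior on mu >= 0."""
    if mu < 0:
        return -math.inf
    lam = mu * s + b
    return n_obs * math.log(lam) - lam


def metropolis(n_obs: int, s: float, b: float, n_samples: int,
               step: float = 0.3, seed: int = 0) -> List[float]:
    """Random-walk Metropolis sampler for the signal strength mu."""
    rng = random.Random(seed)
    mu = 1.0
    current = log_posterior(mu, n_obs, s, b)
    samples: List[float] = []
    for _ in range(n_samples):
        proposal = mu + rng.gauss(0.0, step)
        candidate = log_posterior(proposal, n_obs, s, b)
        # Accept with probability min(1, posterior(proposal) / posterior(current)).
        if rng.random() < math.exp(min(0.0, candidate - current)):
            mu, current = proposal, candidate
        samples.append(mu)
    return samples


# Hypothetical counting experiment: 15 events observed, 10 expected from
# background and 5 from signal at mu = 1.
samples = metropolis(n_obs=15, s=5.0, b=10.0, n_samples=5000)
burned = samples[500:]  # discard burn-in
posterior_mean = sum(burned) / len(burned)
print(f"posterior mean of mu: {posterior_mean:.2f}")
```

The same likelihood, maximized instead of sampled, gives the frequentist best fit, which is the kind of side-by-side comparison the abstract describes.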

21

Awkward Target for Kaitai Struct

Data formats for scientific data often differ across experiments due to hardware design and availability constraints. To interact with these data formats, researchers have to develop, document and maintain specific analysis software, which is often tightly coupled with a particular data format. This proliferation of custom data formats has been a prominent challenge for the Nuclear and High Energy Physics (NHEP) community. Within the Large Hadron Collider (LHC) experiments, this problem has largely been mitigated by the widespread adoption of ROOT.

However, not all experiments in the NHEP community use ROOT for their data formats. Experiments such as the Cryogenic Dark Matter Search (CDMS) continue to use custom data formats to meet specific research needs. Therefore, simplifying the process of converting a unique data format to analysis code still holds immense value for the broader NHEP community. For this purpose, we propose adding Awkward Arrays, a Scikit-HEP library for storing nested, variable-length data in NumPy-like arrays, as a target language for Kaitai Struct.

Kaitai Struct is a declarative language that uses a YAML-like description of a binary data structure to generate code, in any supported language, for reading a raw data file. Researchers can simply describe their data format in the Kaitai Struct YAML (.ksy) language just once. Then this KSY format can be converted into a compiled Python module (C++ files wrapped up in pybind11 and Scikit-Build) which takes the raw data and converts it into Awkward Arrays.

This talk will focus on the recent developments in the Awkward target for Kaitai Struct. It will demonstrate the use of a given KSY description to generate Awkward C++ code with the header-only LayoutBuilder and the Kaitai Struct Compiler.

Speaker: Manasvi Goyal (Princeton University (US))
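The columnar layout that a builder fills for variable-length lists can be illustrated in pure Python: the lists are stored as one flat `content` buffer plus an `offsets` buffer of length n+1, so that list i is `content[offsets[i]:offsets[i+1]]`. The sketch below reads a made-up binary format (each record is a little-endian uint32 count followed by that many float32 values) with the standard `struct` module and fills such buffers. The format and function names are hypothetical; the code generated by the Kaitai Struct Compiler for the Awkward target is C++, not this.

```python
import struct
from typing import List, Tuple


def read_records(raw: bytes) -> Tuple[List[int], List[float]]:
    """Parse records of <uint32 count><count x float32> into offsets/content."""
    offsets = [0]
    content: List[float] = []
    pos = 0
    while pos < len(raw):
        (count,) = struct.unpack_from("<I", raw, pos)
        pos += 4
        values = struct.unpack_from(f"<{count}f", raw, pos)
        pos += 4 * count
        content.extend(values)
        offsets.append(len(content))  # end of this list == start of the next
    return offsets, content


def get_list(offsets: List[int], content: List[float], i: int) -> List[float]:
    """Recover the i-th variable-length list from the columnar buffers."""
    return content[offsets[i]:offsets[i + 1]]


# Build a toy file with three records of lengths 2, 0 and 3.
records = [[1.5, 2.5], [], [0.25, 0.5, 0.75]]
raw = b"".join(struct.pack(f"<I{len(r)}f", len(r), *r) for r in records)

offsets, content = read_records(raw)
print(offsets)                        # [0, 2, 2, 5]
print(get_list(offsets, content, 2))  # [0.25, 0.5, 0.75]
```

Storing one flat buffer plus offsets, rather than one Python object per record, is what lets NumPy-like operations act on all lists at once.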

22

Standalone framework for the emulation of HGCAL firmware trigger primitives in the CMS online trigger system

The fast-approaching High Luminosity LHC phase introduces significant challenges and opportunities for CMS. One of its major detector upgrades, the High Granularity Calorimeter (HGCAL), brings fine segmentation to the endcap regions. It requires a fast online trigger system (12.5 µs latency) to extract interesting information from the ~100 Tb of data produced every second by its custom read-out chips. A fraction of that time is devoted to the generation of trigger primitives (TPs) in the firmware. TPs are the building blocks of the physical quantities used to decide whether an event is worth further inspection.

The emulation of the reconstruction of TPs, so far available through the official CMS software in C++, has been ported to a standalone Python framework and has been fully validated. The emulation can now be run locally, which is ideally suited for fast testing, parameter optimization, and the exploration and development of new algorithms. In parallel, we have implemented a simplified version of the full geometry of HGCAL, used to debug the official C++ geometry and to create event displays to validate, inspect and illustrate the reconstruction. The framework is currently being used by a small team and is expected to become the basis of the remaining optimisation studies on TP reconstruction.

In this talk we cover the framework's structure, conceived to be modular and fast, targeting datasets with high pile-up. We will briefly cover some reconstruction algorithms available in the emulator, as well as the two- and three-dimensional interactive event displays being used. We conclude by discussing different ways to share interactive visualizations, including the deployment of a simple web application using CERN's Platform-as-a-Service.

Speakers: Bruno Alves (Centre National de la Recherche Scientifique (FR)), Marco Chiusi (Centre National de la Recherche Scientifique (FR))


Plenary Session Thursday

Conveners: Eduardo Rodrigues (University of Liverpool (GB)), Matthew Feickert (University of Wisconsin Madison (US))

23

Unified and semantic processing for heterogeneous Monte-Carlo data

This talk presents a comprehensive toolset tailored to high energy physics (HEP) research, comprising graphicle, showerpipe, colliderscope, and heparchy. Each component integrates into a cohesive workflow, addressing distinct stages of HEP data analysis. These tools fill a niche within the HEP software ecosystem in Python by offering unified representations of Monte-Carlo event records. Routines are provided for filtering over all components of the event record, using operations based on the semantics of each particle attribute, e.g. momentum, PDG code, status code, etc. These may be combined in complex queries, leading to powerful high-level analysis.

showerpipe, as the foundational component, provides essential capabilities for simulating particle showers in HEP experiments. It provides a Pythonic interface to Pythia8 event generators and Les Houches files, generating simulated data. PythiaGenerator wraps pythia8 with a standard Python iterator: successive events are generated by simply looping over an instance of this class. The event record is contained in a PythiaEvent, whose properties expose particle data via NumPy arrays. This enables easy downstream analysis and portability in many pipelines. The ancestry of particles within the event is given as a directed acyclic graph (DAG), in coordinate (COO) format.

Building upon showerpipe's data generation capabilities, graphicle provides routines to unify the heterogeneous data record. It offers a sophisticated approach to semantic data querying. With graphicle, researchers can navigate ancestry via the DAG, select specific event regions based on status codes, form clusters, and extract aggregate properties, like mass. This semantic querying of heterogeneous data bridges the gap between data generation and analysis, streamlining the process.

colliderscope complements the toolchain by providing a dynamic visualization layer. It enhances data understanding with interactive HTML displays, including DAGs for event history representation and scatter plots for particle distribution analysis. In addition to visualising individual event records, colliderscope offers histogram tools for statistical analysis of many events. colliderscope may be installed with an optional web interface, which provides real-time data generation, visualisation, clustering, and mass calculations.

To complete the toolset, heparchy handles input and output (IO) operations for the generated data. It enables efficient data storage, retrieval, and management with HDF5 files, standardising the difficult task of efficiently storing and accessing HEP data. Data may be compressed with either LZF or, for wider compatibility outside of Python, GZIP. heparchy may be integrated with dataloaders in ML toolchains to offer convenient and high-performance data retrieval for training.

This talk will demonstrate how these libraries are used together to perform data analysis. Examples will show their use as an exploratory tool, as a workhorse to produce high-performance analysis scripts, or as part of a pre-processing pipeline for machine learning applications. Attendees will gain insights into the symbiotic relationship between data generation, semantic querying, visualization, and data management, resulting in a comprehensive toolset that empowers numerical scientists in HEP research.

Speaker: Jacan Chaplais
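The event-record structures described above can be sketched with plain Python containers: particle attributes as parallel arrays, the ancestry DAG as a COO edge list (one array of parent indices, one of child indices), and a "semantic" query as boolean masks combined with logical operations. All names and the toy event are hypothetical, and the actual showerpipe/graphicle APIs (which use NumPy arrays) are not reproduced here.

```python
from collections import defaultdict, deque
from typing import Dict, List, Sequence, Set

# Hypothetical toy event record: parallel per-particle arrays.
pdg = [23, 11, -11, 22, 11]   # Z -> e- e+, then e- -> e- gamma
status = [2, 2, 1, 1, 1]      # toy status codes loosely following HepMC (1 = final state)

# Ancestry DAG in COO form: edge k goes from src[k] (parent) to dst[k] (child).
src = [0, 0, 1, 1]
dst = [1, 2, 3, 4]


def descendants(src: Sequence[int], dst: Sequence[int], root: int) -> Set[int]:
    """All particles reachable from `root` by following parent -> child edges."""
    children: Dict[int, List[int]] = defaultdict(list)
    for s, d in zip(src, dst):
        children[s].append(d)
    seen: Set[int] = set()
    queue = deque([root])
    while queue:  # breadth-first traversal of the DAG
        for child in children[queue.popleft()]:
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


# Semantic query as boolean masks: final-state electrons/positrons from the Z.
from_z = descendants(src, dst, root=0)
final = [s == 1 for s in status]
is_electron = [abs(p) == 11 for p in pdg]
selected = [i for i in range(len(pdg))
            if final[i] and is_electron[i] and i in from_z]
print(selected)  # [2, 4]
```

With NumPy arrays in place of lists, each mask becomes a single vectorized comparison, which is what makes combining many such queries cheap.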

24

Spey: smooth inference for reinterpretation studies

Statistical models are at the heart of any empirical study for hypothesis testing. We present a new cross-platform Python-based package which employs different likelihood prescriptions through a plug-in system. This framework empowers users to propose, examine, and publish new likelihood prescriptions without developing software infrastructure, ultimately unifying and generalising different ways of constructing likelihoods and employing them for hypothesis testing, all in one place. Within this package, we propose a new simplified likelihood prescription that surpasses its predecessors' approximation accuracy by incorporating asymmetric uncertainties. Furthermore, our package facilitates the inclusion of various likelihood combination routines, thereby broadening the scope of independent studies through a meta-analysis. By remaining agnostic to the source of the likelihood prescription and the signal hypothesis generator, our platform allows for the seamless implementation of packages with different likelihood prescriptions, fostering compatibility and interoperability.

Speaker: Jack Y. Araz (IPPP - Durham University)

25

Python based detector simulation software - RASER

We developed fast simulation software for semiconductor detectors in Python: RASER (RAdiation SEmi-conductoR). RASER aims to estimate both the spatial and timing resolution of semiconductor detectors (currently silicon and SiC), providing predictions for detector design. It relies on Geant4 and DEVSIM. The nonuniform charge deposition is obtained from Geant4, whose Python bindings are provided by g4py. The electric field is solved in DEVSIM by creating a mesh, defining the doping, and incorporating models such as the Shockley-Read-Hall recombination model and the Hatakeyama avalanche model; in DEVSIM, the equations are transformed into integral form and solved. Timing-resolution studies of SiC planar and 3D PiN detectors based on RASER simulations have been published. Work on TCT studies and a telescope framework is ongoing.

Speakers: Dr Suyu Xiao (Shandong Institute of Advanced Technology, Jinan, China), Xin Shi (Chinese Academy of Sciences (CN))

26

Workshop close-out

Speaker: Eduardo Rodrigues (University of Liverpool (GB))
