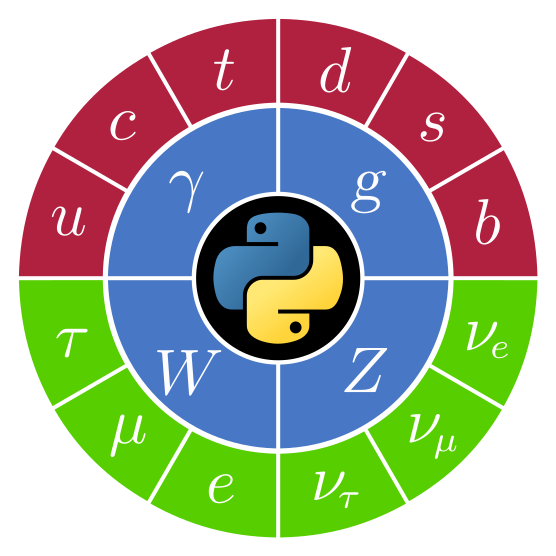

PyHEP 2021 (virtual) Workshop

The PyHEP workshops are a series of workshops initiated and supported by the HEP Software Foundation (HSF) with the aim to provide an environment to discuss and promote the usage of Python in the HEP community at large. Further information is given on the PyHEP Working Group website.


PyHEP 2021 will be a virtual workshop. It will be a forum for the participants and the community at large to discuss developments of Python packages and tools, exchange experiences, and inform the future evolution of community activities. There will be ample time for discussion.

The agenda is composed of plenary sessions:

2) Topical sessions.

Organising Committee

Eduardo Rodrigues - University of Liverpool (Chair)

Ben Krikler - University of Bristol (Co-chair)

Jim Pivarski - Princeton University (Co-chair)

Matthew Feickert - University of Illinois at Urbana-Champaign

Oksana Shadura - University of Nebraska-Lincoln

Philip Grace - The University of Adelaide

Sponsors

The event is kindly sponsored by


Plenary Session Monday

Conveners: Eduardo Rodrigues (University of Liverpool (GB)), Oksana Shadura (University of Nebraska Lincoln (US))

1

Welcome and workshop overview

Speaker: Eduardo Rodrigues (University of Liverpool (GB))

2

Level-up your Python (Part I)

This is a short course in intermediate Python. Attendees will learn about class design patterns, the Python memory model, debugging, profiling, and more.

Speaker: Henry Fredrick Schreiner (Princeton University)

3

Astropy (cancelled)

Speaker: Dr Brigitta Sipocz

4

A Python package for distributed ROOT RDataFrame analysis

The declarative approach to data analysis provides high-level abstractions that let users operate on their datasets in a much more ergonomic fashion than previous imperative interfaces. ROOT offers such a tool with RDataFrame, which has been tested in production environments and used in real-world analyses with very good results. Its programming model builds a computation graph from the operations issued by the user and executes it lazily, only when the final results are queried. It has always been oriented towards parallelisation, with native support for multi-threaded execution on a single machine.
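The lazy, graph-based programming model described above can be illustrated with a toy, pure-Python sketch. The `LazyFrame`/`LazyResult` classes and their method names are hypothetical and only loosely echo RDataFrame's style. This is not the ROOT API. Transformations only record nodes, and booking a result only registers it. The first query of any result runs a single event loop that fills all booked results.

```python
class LazyFrame:
    """Toy lazy dataframe node (hypothetical; not the ROOT RDataFrame API)."""

    def __init__(self, events, _ops=(), _graph=None):
        # The graph is shared by every node derived from the same source.
        if _graph is None:
            _graph = {"events": events, "booked": [], "loops": 0}
        self._graph = _graph
        self._ops = tuple(_ops)

    def define(self, name, func):
        # Returns a new node; nothing is computed here.
        return LazyFrame(None, self._ops + (("define", name, func),), self._graph)

    def filter(self, predicate):
        return LazyFrame(None, self._ops + (("filter", None, predicate),), self._graph)

    def count(self):
        # Booking a result only records it in the graph.
        result = LazyResult(self._graph)
        self._graph["booked"].append((self._ops, result))
        return result

    @property
    def loops_run(self):
        return self._graph["loops"]


class LazyResult:
    def __init__(self, graph):
        self._graph = graph
        self._value = None

    def get_value(self):
        # The first query triggers one event loop that fills every booked result.
        if self._value is None:
            _run_event_loop(self._graph)
        return self._value


def _run_event_loop(graph):
    graph["loops"] += 1
    for _, result in graph["booked"]:
        result._value = 0
    for event in graph["events"]:
        for ops, result in graph["booked"]:
            row = dict(event)
            passed = True
            for kind, name, func in ops:
                if kind == "define":
                    row[name] = func(row)
                elif not func(row):
                    passed = False
                    break
            if passed:
                result._value += 1


if __name__ == "__main__":
    events = [{"pt": 10.0}, {"pt": 25.0}, {"pt": 40.0}]
    df = LazyFrame(events)
    high = df.define("pt2", lambda r: 2 * r["pt"]).filter(lambda r: r["pt2"] > 30)
    n_all, n_high = df.count(), high.count()
    print(df.loops_run)                           # 0: nothing has run yet
    print(n_high.get_value(), n_all.get_value())  # 2 3
    print(df.loops_run)                           # 1: one loop filled both
```

The point of the design is that both counts are filled by a single pass over the data. A distributed layer can then ship that one loop to remote workers.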

Recently, RDataFrame has been extended with a Python layer that is capable of steering and executing the RDataFrame computation graph over a set of distributed resources. In addition, this layer requires minimal code changes for an RDataFrame application to run on distributed resources. The new tool has a modular design, so that it can support multiple backends and exploit the vast ecosystem of distributed computing frameworks with Python bindings.

This work presents Distributed RDataFrame, its programming model and design. It also demonstrates its current compatibility with two different distributed computing frameworks, namely Apache Spark and Dask, with more to come in the future.

Speaker: Vincenzo Eduardo Padulano (Valencia Polytechnic University (ES))

16:10

Break

5

Monolens: view part of your screen in grayscale or simulated color vision deficiency

Monolens is a platform-independent app written in Python that uses the Qt framework (PySide6) to create a window on the screen, which shows the part of the screen under the window in grayscale or simulated color vision deficiency. The purpose of this app is to make it easy to preview how scientific plots would appear in black-and-white print or to a person with color vision deficiency. While there are other ways to obtain the same result, Monolens is particularly easy to switch on and off. Monolens uses NumPy and Numba to perform the color transformation of the pixels on the computer screen in real time and in parallel on several cores.
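Monolens applies a per-pixel color transformation. The simplest such transformation, grayscale conversion, can be sketched in plain Python. The weights below are the ITU-R BT.601 luma coefficients, chosen as an assumption, since the abstract does not say which formula Monolens uses. The app itself also works on NumPy arrays with Numba rather than on Python lists.

```python
# Rec. 601 luma weights: an assumption for illustration, not necessarily
# the transform that Monolens applies.
LUMA_WEIGHTS = (0.299, 0.587, 0.114)


def to_grayscale(pixels):
    """Convert a list of (r, g, b) tuples with 0-255 channels to gray tuples."""
    wr, wg, wb = LUMA_WEIGHTS
    gray = []
    for r, g, b in pixels:
        # Weighted sum approximates perceived brightness; clamp to 0..255.
        y = min(255, max(0, round(wr * r + wg * g + wb * b)))
        gray.append((y, y, y))
    return gray


if __name__ == "__main__":
    print(to_grayscale([(255, 255, 255), (0, 0, 0), (255, 0, 0)]))
    # [(255, 255, 255), (0, 0, 0), (76, 76, 76)]
```

Because every pixel is transformed independently, the loop parallelises trivially. That is what makes a Numba-compiled, multi-core version practical in real time.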

Speaker: Dr Hans Peter Dembinski (TU Dortmund)

6

Easily report the progress of a program to your mobile

We often run automated tasks that take a lot of time (hours, days, weeks), for example a lab measurement that sweeps some parameter and acquires data. Furthermore, no matter how carefully we code, there is always the possibility that some unexpected problem occurs and stops the execution of our program without us noticing it (unless we go and check whether it crashed). To deal with this problem I developed a very simple yet useful package to easily report the progress of loops using a Telegram bot. This package reports in a Telegram conversation (i.e. to your cell phone and/or any PC worldwide) the percentage of progress and the remaining time to complete the execution of the program. By default it sends one update per minute. When the program finishes, it sends a notification stating whether there was an error or not. In this way there is no need to regularly check the status/progress of a task, which is especially useful when it may take days or even weeks to complete. This package is plug-and-play: you install it and it is ready to go. It is cross-platform and requires no special permissions, so it should work anywhere. In this talk I will show how to use it.
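The two ingredients described above can be sketched with the standard library: periodic progress with a remaining-time estimate, and a final success/error notification. This is not the package's API. `report_progress`, `run_with_notification` and the `notify` callbacks are hypothetical names. Delivery to Telegram is deliberately left out, and a callback simply receives the values. The remaining time uses a simple linear extrapolation, which is an assumption.

```python
import time


def report_progress(iterable, total, notify, interval=60.0, clock=time.monotonic):
    """Yield items from `iterable`, calling notify(percent, eta_seconds)
    at most once per `interval` seconds and once more at the end.

    `notify` is a hypothetical stand-in for whatever delivers the message
    (for example a chat bot); it is not the package's API."""
    start = last = clock()
    for done, item in enumerate(iterable, start=1):
        yield item  # the caller processes the item before we resume here
        now = clock()
        if now - last >= interval or done == total:
            elapsed = now - start
            # Linear extrapolation: remaining items are assumed to take
            # as long on average as the ones already processed.
            eta = elapsed / done * (total - done)
            notify(100.0 * done / total, eta)
            last = now


def run_with_notification(func, notify_end):
    """Run func() and report whether it finished cleanly or raised."""
    try:
        func()
    except Exception as exc:
        notify_end(f"finished with error: {exc!r}")
        raise
    notify_end("finished successfully")


if __name__ == "__main__":
    def work():
        show = lambda p, eta: print(f"{p:.0f}% done, about {eta:.1f} s left")
        for _ in report_progress(range(5), 5, show, interval=0.0):
            time.sleep(0.01)

    run_with_notification(work, print)
```

Injecting `clock` keeps the timing logic testable without waiting minutes. Re-raising after the error notification means the program still fails loudly.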

Speaker: Matias Senger (Universitaet Zuerich (CH))

7

Create the shell experience thanks to cmd module

I will present the Python module cmd, which makes it possible to build a shell with dedicated commands, auto-completion, and help.

In a second part, I will present the customizations we made within MG5aMC to provide a fully functional experience, and the various scenarios in which this module is used.
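Below is a minimal sketch of the standard-library `cmd` module:

- Each `do_<name>` method becomes a command.
- Its docstring is shown by the built-in `help <name>`.
- `complete_<name>` supplies tab-completion candidates.
- Returning a true value ends `cmdloop()`.

The `DemoShell` commands and options are made up for illustration and are unrelated to MG5aMC's actual interface.

```python
import cmd


class DemoShell(cmd.Cmd):
    """Minimal cmd.Cmd-based shell; commands and options are hypothetical."""

    intro = "Toy shell. Type help or ? to list commands."
    prompt = "demo> "

    def __init__(self):
        super().__init__()
        self.options = {"nevents": "10000", "seed": "0"}

    def do_set(self, arg):
        """set NAME VALUE: store a run option."""
        name, _, value = arg.partition(" ")
        if name not in self.options:
            print(f"unknown option: {name}")
            return
        self.options[name] = value
        print(f"{name} = {value}")

    def complete_set(self, text, line, begidx, endidx):
        # Offer option names that start with what has been typed so far.
        return [name for name in self.options if name.startswith(text)]

    def do_show(self, arg):
        """show: print all options."""
        for name, value in sorted(self.options.items()):
            print(f"{name} = {value}")

    def do_quit(self, arg):
        """quit: leave the shell."""
        return True  # a true return value stops cmdloop()
```

Running `DemoShell().cmdloop()` starts the interactive shell. `onecmd("set seed 42")` executes a single command programmatically, which is convenient for scripting and testing.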

Speaker: Olivier Mattelaer (UCLouvain)

8

Detector design for HL-LHC in FreeCAD using python scripts

The LHC machine is planning an upgrade program which will smoothly bring the luminosity to about $(5\text{--}7.5)\times10^{34}\,\mathrm{cm^{-2}\,s^{-1}}$, to possibly reach an integrated luminosity of $3000\text{--}4000\,\mathrm{fb^{-1}}$ over about a decade. This High Luminosity LHC scenario, HL-LHC, starting in 2027, will require an upgrade program of the LHC detectors known as Phase-2 upgrade. As part of the HL-LHC detector upgrade programme, the CMS experiment is developing a new Outer Tracker with reduced material budget, higher radiation tolerance, and inbuilt trigger capabilities.

While powerful proprietary CAD (Computer-Aided Design) and CAE (Computer-Aided Engineering) software is traditionally used in the design phase of modern detectors, these tools provide only limited scripting capabilities. FreeCAD, by contrast, is a customizable, open-source parametric 3D CAD modeller built from scratch to be fully controllable through Python scripts.

In this presentation, we will show how Python scripting in FreeCAD has been used to develop, study and validate the design of services for the CMS Outer Tracker Endcaps, and how it can be used to prepare for the assembly of the detector. This approach is shown to provide excellent interoperability with the rest of the HEP ecosystem and an exceptionally quick turnaround during development.

Speaker: Christophe Delaere (Universite Catholique de Louvain (UCL) (BE))

9

Computing tag-and-probe efficiencies with Apache Spark and Apache Parquet

In this talk we demonstrate a new framework developed by the muon physics object group in CMS to compute tag-and-probe (T&P) efficiencies and scale factors by leveraging the power and scalability of Apache Spark clusters. The package, named “spark_tnp”, allows physics analyzers and other users to quickly and seamlessly compute efficiencies for their own custom objects and identification criteria, developed to meet a diverse set of physics goals within the Collaboration. For the backend cluster, we use CERN’s Spark and Hadoop services (“analytix” cluster). The ntuples containing event information are produced separately with ROOT, converted to Apache Parquet format, and then stored in CERN’s Hadoop Distributed File System (HDFS) facility. Combining Spark with Parquet files in HDFS enables a substantial speed-up of T&P computations, with custom scale factors derived in a matter of minutes, compared to days with a previous framework. The tutorial itself will focus on a Jupyter notebook example of a T&P computation, using CERN’s SWAN service for easy access to the analytix cluster within an interactive environment (though the package also supports scripted execution for official production).
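In each bin of the probe kinematics, a tag-and-probe efficiency is the fraction of probes passing the selection, $\varepsilon = N_\mathrm{pass}/(N_\mathrm{pass}+N_\mathrm{fail})$. The scale factor is the ratio $\varepsilon_\mathrm{data}/\varepsilon_\mathrm{MC}$. The sketch below shows only that arithmetic in plain Python, assuming background-free counts and a simple binomial uncertainty. It does not use Spark. Real T&P measurements typically extract $N_\mathrm{pass}$ and $N_\mathrm{fail}$ from fits to the tag-probe invariant mass.

```python
import math


def efficiency(n_pass, n_fail):
    """Efficiency and binomial uncertainty from background-free counts.
    (The simple binomial error vanishes at eff = 0 or 1.)"""
    n_total = n_pass + n_fail
    eff = n_pass / n_total
    err = math.sqrt(eff * (1.0 - eff) / n_total)
    return eff, err


def scale_factor(eff_data, err_data, eff_mc, err_mc):
    """Data/MC ratio with relative uncertainties added in quadrature
    (assumes the two efficiencies are uncorrelated)."""
    sf = eff_data / eff_mc
    err = sf * math.hypot(err_data / eff_data, err_mc / eff_mc)
    return sf, err


if __name__ == "__main__":
    eff_d, err_d = efficiency(900, 100)
    eff_m, err_m = efficiency(950, 50)
    print(scale_factor(eff_d, err_d, eff_m, err_m))
```

In a Spark-based workflow, the pass/fail counts per bin would come from grouped aggregations over the Parquet data. The final ratio step stays this simple.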

Speaker: Andre Frankenthal (Princeton University (US))


Social time: Tuesday Meet and Mingle

Get to know the other PyHEP participants better and help to reinforce our fledgling community.

We'll be using a different platform, RemotelyGreen. To join:

1. Click the link just below

2. Make an account by connecting with LinkedIn or using an email and password (check for a verification email in this case).

3. Choose as many of the event's topics as interest you.

4. Wait for the session to begin: you'll be shuffled with other participants automatically. If you arrive late, you will be able to join at the next shuffle.

Conveners: Benjamin Krikler (University of Bristol (GB)), Eduardo Rodrigues (University of Liverpool (GB)), Jim Pivarski (Princeton University), Matthew Feickert (Univ. Illinois at Urbana Champaign (US)), Oksana Shadura (University of Nebraska Lincoln (US)), Philip Grace (The University of Adelaide)

Plenary Session Tuesday

Conveners: Eduardo Rodrigues (University of Liverpool (GB)), Oksana Shadura (University of Nebraska Lincoln (US))

10

Level-up your Python (Part II)

This is a short course in intermediate Python. Attendees will learn about using packages, decorators, and code acceleration in Numba and pybind11.

Speaker: Henry Fredrick Schreiner (Princeton University)

11

Data visualization with Bokeh

We discuss and justify the application of Python's Bokeh library to non-interactive and interactive visualization. A comparison between Bokeh and some widely used alternatives is made. We include a tutorial covering the key aspects necessary to create virtually any interactive plot needed in HEP, and provide custom examples and code.

Speaker: Bruno Alves (LIP Laboratorio de Instrumentacao e Fisica Experimental de Part)

12

Distributed statistical inference with pyhf

pyhf is a pure-Python implementation of the HistFactory statistical model for multi-bin histogram-based analysis, with interval estimation based on asymptotic formulas. pyhf supports modern computational graph libraries such as JAX, PyTorch, and TensorFlow to leverage features such as auto-differentiation and hardware acceleration on GPUs to reduce the time to inference. pyhf is also well adapted to performing distributed statistical inference across heterogeneous computing resources (clusters, clouds, and supercomputers) and task execution providers (HTCondor, Slurm, Torque, and Kubernetes) when paired with high-performance Function as a Service platforms like funcX, or with highly scalable systems like Google Cloud Platform that allow for resource bursting. In this notebook talk we will give interactive examples of performing statistical inference on public probability models from ATLAS analyses published to HEPData, leveraging these resources to reproduce the analyses' results with wall times of minutes.

Speaker: Matthew Feickert (Univ. Illinois at Urbana Champaign (US))

13

Binned template fits with cabinetry

The cabinetry library provides a Python-based solution for building and steering binned template fits. It implements a declarative approach to construct statistical models. The instructions for building all template histograms required for a statistical model are executed using other libraries in the pythonic HEP ecosystem. Instructions can additionally be injected via custom code, which is automatically executed when applicable at key steps of the workflow. A seamless integration with the pyhf library enables cabinetry to provide interfaces for all common statistical inference tasks. The cabinetry library furthermore contains utilities to study and visualize statistical models and fit results.

This tutorial provides an overview of cabinetry and shows its use in the creation and operation of statistical models. It also demonstrates how to use cabinetry for common tasks required during the design of a statistical analysis model.

Speaker: Alexander Held (New York University (US))

16:00

Break

14

Uproot and Awkward Array tutorial

Uproot provides an easy way to get data from ROOT files into arrays and DataFrames, and Awkward Array lets you manipulate arrays of complex data types. This tutorial starts at the beginning, showing how an Uproot + Awkward Array (+ Hist + Vector) workflow differs from ROOT-based workflows, how to extract objects and arrays from ROOT files, and how to apply cuts and restructure arrays, and it ends with a walk-through of advanced topics: gen-reco matching and resolving combinatorics in H → ZZ → 4μ. Numba, a just-in-time compiler for Python, is used in physics examples involving Lorentz vectors, and I'll talk about best practices for speeding up computations and taming complexity by doing things one step at a time.
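The Lorentz-vector step mentioned above boils down to summing four-momenta and taking $m = \sqrt{E^2 - |\vec{p}|^2}$. The plain-Python sketch below spells out that arithmetic for one candidate, for example the four muons of an H → ZZ → 4μ candidate. It assumes (pt, eta, phi, mass) inputs in GeV. In the tutorial itself this is done on whole Awkward Arrays with Vector or Numba, and these helper names are not those libraries' APIs.

```python
import math


def p4_from_ptetaphim(pt, eta, phi, mass):
    """Convert (pt, eta, phi, m) to Cartesian (E, px, py, pz)."""
    px = pt * math.cos(phi)
    py = pt * math.sin(phi)
    pz = pt * math.sinh(eta)
    e = math.sqrt(px * px + py * py + pz * pz + mass * mass)
    return e, px, py, pz


def invariant_mass(particles):
    """Invariant mass of the summed four-momentum of several particles."""
    e_sum = px_sum = py_sum = pz_sum = 0.0
    for pt, eta, phi, mass in particles:
        e, px, py, pz = p4_from_ptetaphim(pt, eta, phi, mass)
        e_sum += e
        px_sum += px
        py_sum += py
        pz_sum += pz
    m2 = e_sum ** 2 - px_sum ** 2 - py_sum ** 2 - pz_sum ** 2
    return math.sqrt(max(m2, 0.0))  # guard against tiny negative rounding
```

Resolving combinatorics then means evaluating this for each candidate pairing and keeping the preferred one, for instance the pair closest to the Z mass.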

Speaker: Jim Pivarski (Princeton University)

15

Fastjet: Vectorizing Jet Finding

Jet finding is an essential step in jet analysis. The currently available interfaces cannot take multiple events in one function call, which introduces significant overhead. To remedy this problem, we present an interface for FastJet that uses Awkward Arrays to represent multiple events in one array.

The package depends on other Scikit-HEP packages such as Vector, and by leveraging its functionality the user can also perform coordinate transformations on Lorentz vectors. The vectorized, multi-event data handling also makes it modern and compatible with future parallelized implementations of FastJet. It is intended to replace all the Python interfaces to FastJet currently available.
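Representing many events in one array typically means a flat buffer of all particles plus an offsets list marking where each event starts and stops. This is the idea behind Awkward Array's list-offset layout. The plain-Python sketch below shows how one call can then loop over every event. Its helper names are hypothetical and not the Awkward Array or fastjet API. The real package hands such arrays to compiled FastJet code instead of a Python loop.

```python
from itertools import accumulate


def offsets_from_counts(counts):
    """Event i spans content[offsets[i]:offsets[i + 1]]."""
    return list(accumulate(counts, initial=0))


def per_event(content, offsets, func):
    """Apply func to every event's slice of the flat content buffer."""
    return [func(content[start:stop]) for start, stop in zip(offsets[:-1], offsets[1:])]


if __name__ == "__main__":
    pts = [50.0, 30.0, 20.0, 15.0, 40.0, 10.0]   # all particles, all events
    offsets = offsets_from_counts([3, 0, 2, 1])  # [0, 3, 3, 5, 6]
    print(per_event(pts, offsets, sum))          # [100.0, 0, 55.0, 10.0]
```

Empty events cost nothing beyond a repeated offset. That is why this layout suits variable-length particle collections.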

Speaker: Aryan Roy (Manipal Institute of Technology)

16

PyTorch INFERNO

The INFERence-aware Neural Optimisation (INFERNO) algorithm (de Castro and Dorigo, 2018, https://www.sciencedirect.com/science/article/pii/S0010465519301948) allows one to fully optimise neural networks for the task of statistical inference by including the effects of systematic uncertainties in the training. This has significant advantages for work in HEP, where the uncertainties are often only included right at the end of an analysis and spoil the use of classification as a proxy task for statistical inference.

The loss itself, however, can be somewhat difficult to integrate into traditional frameworks due to its requirements to access the model and the data at different points during the optimisation cycle. Including both a lightweight neural-network framework for PyTorch, and the required inference functions, the PyTorch-INFERNO package provides a "drop-in" implementation of the INFERNO loss. The package also aims to serve as a demonstration of how potential users can implement the loss themselves to drop into their framework of choice.

In this lightning talk, I will give a quick overview of both the algorithm and the package, as well as discuss some of the more general requirements for implementing the algorithm as a drop-in loss.

GitHub: https://github.com/GilesStrong/pytorch_inferno

Docs: https://gilesstrong.github.io/pytorch_inferno/

Blog posts (part 1 of 5): https://gilesstrong.github.io/website/statistics/hep/inferno/2020/12/04/inferno-1.html

Speaker: Dr Giles Chatham Strong (Universita e INFN, Padova (IT))

17

dEFT - a pure Python tool for constraining the SMEFT with differential cross sections

The Standard Model Effective Field Theory (SMEFT) extends the SM with higher-dimensional operators, each scaled by a dimensionless Wilson coefficient $c_i$, to model scenarios of new physics at some large scale $\Lambda$. Thus, effects of new physics may be sought by fitting the $c_i$ to appropriate LHC data. Differential cross sections have numerous advantages as inputs to such fits. First, they are abundant and precise, even in extreme regions of phase space where SMEFT effects are maximal. Second, as detector effects have been corrected for, the fits can be performed outside experimental collaborations without access to detailed simulation of the detector response. Third, fits can be continually and easily updated without re-analysis of experimental data as theoretical improvements to SMEFT predictions become available. Fourth, combined fits using data from multiple experiments can be performed with approximations of covariances across experimental data. The differential Effective Field Theory Tool (dEFT) is a pure Python package that aims to automate the fit of the $c_i$ to differential cross section data. The tool generates and validates a multivariate polynomial model of the differential cross section in the $N$ coefficients $c_i$ being considered and numerically estimates the $N$-dimensional likelihood function using the popular emcee package. Bayesian credible intervals in 1-D for each coefficient and 2-D for each pair of coefficients are generated using the corner package. A given fit is entirely defined by a single human-readable JSON file. Preliminary results from a benchmark analysis using an alpha version of dEFT will be presented to demonstrate the structure and philosophy of the dEFT package, and future plans and functionalities towards a first stable release of dEFT will be discussed.
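The following is a hedged illustration of what such a polynomial model can look like. For dimension-6 operators, a common choice is to truncate each bin $k$ of the differential cross section at second order in the $c_i$. This is an assumption here, since the abstract only says "multivariate polynomial". The coefficients $a_{k,i}$ and $b_{k,ij}$ are determined from simulated predictions:

$$
\sigma_k(\mathbf{c}) = \sigma_k^{\mathrm{SM}} + \sum_{i=1}^{N} a_{k,i}\, c_i + \sum_{i=1}^{N} \sum_{j=i}^{N} b_{k,ij}\, c_i c_j
$$

Each bin then has $1 + N + N(N+1)/2$ unknown coefficients, e.g. 6 for $N = 2$, so at least that many independent predictions are needed per bin. Assume Gaussian measurement uncertainties with covariance $V$, again an assumption. The log-likelihood sampled with emcee would then be

$$
\ln L(\mathbf{c}) = -\tfrac{1}{2}\,\big(\mathbf{x} - \boldsymbol{\sigma}(\mathbf{c})\big)^{\mathsf{T}} V^{-1} \big(\mathbf{x} - \boldsymbol{\sigma}(\mathbf{c})\big) + \text{const},
$$

where $\mathbf{x}$ is the vector of measured bin values.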

Speaker: James Michael Keaveney (University of Cape Town (ZA))


Plenary Session Wednesday

Conveners: Jim Pivarski (Princeton University), Matthew Feickert (Univ. Illinois at Urbana Champaign (US))

18

High-Performance Histogramming for HEP Analysis

Recent developments in Scikit-HEP libraries have enabled fast, efficient histogramming powered by boost-histogram. Hist provides useful shortcuts for plotting and profiles based on boost-histogram. This talk aims to discuss these histogramming packages built on the histogram-as-an-object concept.

Attendees will learn how to use these tools to easily make histograms, perform various operations on them, and make their analysis more efficient and simpler. The talk will also discuss some examples and use cases, recent developments, and future plans for these packages.
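In the histogram-as-an-object idea, binning, under/overflow and counts travel together. Operations such as filling and adding are therefore methods on the object rather than loose arrays. The `RegularHist` class below is a hypothetical plain-Python sketch of that concept. It is not the boost-histogram or Hist API, which provide many more axis types, storages and a fast C++ core.

```python
class RegularHist:
    """Hypothetical 1D histogram with a regular axis (illustration only)."""

    def __init__(self, bins, start, stop):
        self.bins, self.start, self.stop = bins, start, stop
        self.counts = [0.0] * bins
        self.underflow = 0.0
        self.overflow = 0.0

    def fill(self, values, weight=1.0):
        width = (self.stop - self.start) / self.bins
        for v in values:
            if v < self.start:
                self.underflow += weight
            elif v >= self.stop:
                self.overflow += weight
            else:
                # min() guards against float rounding just below `stop`.
                idx = min(int((v - self.start) / width), self.bins - 1)
                self.counts[idx] += weight
        return self  # allows chaining: RegularHist(...).fill(data)

    def __add__(self, other):
        # Only histograms with identical axes can be added bin by bin.
        if (self.bins, self.start, self.stop) != (other.bins, other.start, other.stop):
            raise ValueError("incompatible axes")
        out = RegularHist(self.bins, self.start, self.stop)
        out.counts = [a + b for a, b in zip(self.counts, other.counts)]
        out.underflow = self.underflow + other.underflow
        out.overflow = self.overflow + other.overflow
        return out
```

Keeping the axis on the object is what lets `+` refuse mismatched binning instead of silently adding unrelated arrays.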

Speakers: Aman Goel (University of Delhi), Henry Fredrick Schreiner (Princeton University), Shuo Liu

19

MadMiner: a python based tool for simulation-based inference in HEP

MadMiner is a Python-based tool that implements state-of-the-art simulation-based inference strategies for HEP. These techniques can be used to measure the parameters of a theory (e.g. the coefficients of an Effective Field Theory) based on high-dimensional, detector-level data. It interfaces with MadGraph, "mines gold" associated with the differential cross-section at the parton level, and then passes this information through a detector simulation (e.g. Delphes). It then uses PyTorch and recently developed loss functions to learn the likelihood ratio and/or optimal observables. Finally, it can perform basic statistical tests based on the learned likelihood ratio or optimal observables. The package is distributed on PyPI and has pre-built Docker containers for the event generation and learning stages. In addition, there are REANA workflows that implement common analysis use cases for the library.

Speaker: Kyle Stuart Cranmer (New York University (US))

20

Introduction to RAPIDS, GPU-accelerated data science libraries

Data volumes and computational complexity of analysis techniques have increased, but the need to quickly explore data and develop models is more important than ever. One of the key ways to achieve this has been through GPU acceleration. In this talk we introduce RAPIDS, a collection of GPU accelerated data science libraries, and illustrate how to use Dask and RAPIDS to dramatically increase performance for common ETL/ML workloads.

Speaker: Benjamin Zaitlen (NVIDIA)

16:00

Break

21

zfit introduction, minimization and interoperability

zfit is a library for (likelihood) model fitting in pure Python that aims to establish a well-defined API and workflow. zfit provides a high-level interface for advanced model building and fitting, while also being designed to be easily extendable, allowing the usage of custom and cutting-edge developments from the scientific Python ecosystem in a transparent way.

This tutorial is an introduction to zfit with a focus on zfit's newest developments in the area of minimization and on better interoperability with other libraries and pure Python functions.

Speaker: Jonas Eschle (Universitaet Zuerich (CH))

22

Automating Awkward Array testing

The talk will cover how to find weak spots in Awkward Array using software testing methodologies. When dealing with array manipulations, certain inputs sometimes break the process. To find these cases, input values have to be generated automatically, based on the constraints of the various functions, and fed to those functions to find out which inputs cause an error.

This problem can be solved by using the hypothesis testing library to auto-generate test inputs based on the constraints of the kernel functions. Kernel functions form the lowest layer of Awkward Array and are responsible for all sorts of array manipulations. To find out which input creates a corner case in a function, we use different hypothesis strategies, and the talk will cover how to select the best strategy for testing a given function. The reason for using hypothesis over other libraries is that it can automate the testing process, generating input values or even whole test functions without many lines of code. The talk will give an overview of this entire process.
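The property-based idea can be sketched with only the standard library. Generate many inputs that respect a function's constraints, then check invariants that must hold for every input. Here `random` stands in for hypothesis. Hypothesis adds smarter strategies, such as `st.lists(st.integers(min_value=0))`, and shrinking of failing examples. `counts_to_offsets` is a hypothetical kernel-like function, not one of Awkward Array's actual kernels.

```python
import random


def counts_to_offsets(counts):
    """Hypothetical kernel-like function under test."""
    if any(c < 0 for c in counts):
        raise ValueError("counts must be non-negative")
    offsets = [0]
    for c in counts:
        offsets.append(offsets[-1] + c)
    return offsets


def generated_counts(rng, n_cases=200, max_len=20, max_count=5):
    """Random inputs that respect the constraint counts >= 0,
    plus the empty list as an explicit edge case."""
    yield []
    for _ in range(n_cases):
        yield [rng.randint(0, max_count) for _ in range(rng.randint(0, max_len))]


def check_offsets_properties(seed=0):
    """Invariants that must hold for every valid input."""
    rng = random.Random(seed)
    for counts in generated_counts(rng):
        offsets = counts_to_offsets(counts)
        assert len(offsets) == len(counts) + 1
        assert offsets[0] == 0 and offsets[-1] == sum(counts)
        assert [b - a for a, b in zip(offsets, offsets[1:])] == counts
    return True
```

The invariants, rather than hand-picked expected outputs, are what make generated inputs useful. Any input that violates one is a corner case worth reporting.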

Speaker: Santam Roy Choudhury (National Institute of Technology, Durgapur)

23

mplhep

mplhep is a small library on top of matplotlib, designed to simplify making plots that are common in HEP but not necessarily native to matplotlib, as well as to distribute plotting styles and fonts, minimizing the amount of boilerplate code needed and producing consistent results across platforms.

Speaker: Andrzej Novak (RWTH Aachen (DE))

24

pyBumpHunter : A model agnostic bump hunting tool in python

The BumpHunter algorithm is a well-known test statistic designed to find an excess (or a deficit) in a data histogram with respect to a reference model. It computes the local and global p-values associated with the most significant deviation of the data distribution. This algorithm has been used in various High Energy Physics analyses. The pyBumpHunter package [1] proposes a new public implementation of BumpHunter that has recently been accepted into Scikit-HEP. In addition to the usual scan and signal injection test with 1D distributions, pyBumpHunter also provides several features, such as an extension of the algorithm to 2D distributions, multiple-channel combination and side-band normalization.
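The core of the scan can be sketched in plain Python:

- Slide windows of increasing width over the histogram.
- For each window, compute the Poisson probability of observing at least the data count given the reference.
- Keep the smallest such local p-value.

This is a simplified, excess-only, 1D sketch with hypothetical function names. It is not the pyBumpHunter API. The global p-value is left out. The full algorithm obtains it by repeating the scan on pseudo-data drawn from the reference and comparing the test statistic $t = -\ln p_\mathrm{min}$.

```python
import math


def poisson_sf(n, mu):
    """P(N >= n) for N ~ Poisson(mu), summing the upper tail directly so
    that very small p-values are not lost to cancellation."""
    if n <= 0:
        return 1.0
    if mu <= 0:
        return 0.0
    term = math.exp(-mu + n * math.log(mu) - math.lgamma(n + 1))  # P(N = n)
    total, k = 0.0, n
    while term > total * 1e-17:
        total += term
        k += 1
        term *= mu / k  # P(N = k) from P(N = k - 1)
    return min(total, 1.0)


def scan_excess(data, reference, min_width=1, max_width=None):
    """Return (local_p, first_bin, width) of the most significant excess."""
    n_bins = len(data)
    max_width = max_width or n_bins
    best = (1.0, None, None)
    for width in range(min_width, max_width + 1):
        for start in range(n_bins - width + 1):
            d = sum(data[start:start + width])
            b = sum(reference[start:start + width])
            if d <= b:
                continue  # only excesses are searched for in this sketch
            p = poisson_sf(d, b)
            if p < best[0]:
                best = (p, start, width)
    return best
```

Scanning all widths is quadratic in the number of bins. That is cheap for typical histograms but matters when the scan is repeated over many pseudo-experiments.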

This presentation will give an overview of the available features as well as a few practical examples of application.

Speaker: Louis Vaslin (Université Clermont Auvergne (FR))

25

root2gnn: GNN for HEP data

We developed a Python-based package that facilitates the use of graph neural networks (GNNs) on HEP data. It features pre-defined GNN models for edge classification and event classification. It also contains a couple of realistic examples using GNNs to solve HEP problems, for example top tagging (event classification) and boosted boson reconstruction (edge classification). One can import the modules to convert HEP data to different graph types, run the training, monitor the performance, and launch automatic hyperparameter tuning (missing for now). It can be found at https://github.com/xju2/root_gnn (documentation is in development). Below is a selection of its features/wishes:

- Common interface for converting physics events saved in different data formats to (fully-connected) graph structures. To be developed so as to allow different graph types (such as hypergraphs and customized edges).

- Pre-defined GNN models. Ultimately, we would like to cover all GNN models used in physics publications, i.e. a one-stop shop for GNN models.

- PyTorch Lightning-style trainers to ensure an easy training procedure.

- Metrics monitoring.

- Practical examples to get started with GNNs using public datasets.
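Converting an event into a fully connected graph amounts to making every particle a node and every ordered pair of distinct particles a directed edge. The sketch below uses hypothetical names and an illustrative layout: node features plus sender/receiver index lists, with ΔR as an edge feature. It assumes (pt, eta, phi) per particle and is not root_gnn's actual interface.

```python
import math
from itertools import permutations


def delta_r(a, b):
    """Angular distance between two (pt, eta, phi) particles."""
    deta = a[1] - b[1]
    dphi = (a[2] - b[2] + math.pi) % (2 * math.pi) - math.pi  # wrap to [-pi, pi)
    return math.hypot(deta, dphi)


def event_to_graph(particles):
    """Fully connected directed graph: one edge per ordered pair (i, j), i != j."""
    senders, receivers, edges = [], [], []
    for i, j in permutations(range(len(particles)), 2):
        senders.append(i)
        receivers.append(j)
        edges.append([delta_r(particles[i], particles[j])])
    return {
        "nodes": [list(p) for p in particles],
        "edges": edges,
        "senders": senders,
        "receivers": receivers,
    }
```

A fully connected graph has $n(n-1)$ directed edges for $n$ particles. This quadratic growth is one reason customized, sparser edge definitions are listed as a goal.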

Speaker: Xiangyang Ju (Lawrence Berkeley National Lab. (US))

26

Hepynet - a DNN assistant framework for HEP analysis

Hepynet stands for "High energy physics, the python-based, neural-network framework". It was developed to help apply neural networks to high energy physics analysis tasks. Different tasks such as training, tuning and applying models are implemented using packages popular in industry, such as TensorFlow. All jobs are defined by simple config files and the functionalities are collected centrally in the package, which helps keep a good record of the study history and saves time otherwise spent re-implementing code used repeatedly. An auto-tuning functionality is also implemented to help find the best hyperparameter set for model training, which "takes the human out of the loop".

Speaker: Zhe Yang (University of Michigan (US))

27

Introducing NatPy, a simple and convenient Python module for dealing with and converting natural units.

In high energy physics, the standard convention for expressing physical quantities is natural units. The standard paradigm sets c = ℏ = ε₀ = 1 and hence implicitly rescales all physical quantities whose units are derived from these constants.

We introduce NatPy, a simple Python module that leverages astropy.units.core.Unit and astropy.units.quantity.Quantity objects to define user-friendly unit objects that can be used and converted within any predefined system of units.

In this talk, we will give an overview of the algebraic methods used by the NatPy module, as well as some worked examples to demonstrate how NatPy can seamlessly integrate into any Pythonic particle physics workflow.
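With $\hbar = c = 1$, energies, inverse lengths and inverse times share one unit. Converting back to SI-like units means reinserting factors of $\hbar c \approx 0.1973\ \mathrm{GeV\,fm}$ or $\hbar \approx 6.582\times10^{-25}\ \mathrm{GeV\,s}$. The plain-Python sketch below hard-codes those two constants. The function names are hypothetical, and NatPy itself does this through astropy unit objects rather than bare floats.

```python
# CODATA 2018 values; plain floats, not NatPy or astropy objects.
HBAR_C_GEV_FM = 0.1973269804   # hbar * c in GeV * fm
HBAR_GEV_S = 6.582119569e-25   # hbar in GeV * s


def inverse_gev_to_fm(length_inv_gev):
    """Length given in GeV^-1 (with hbar = c = 1) expressed in fm."""
    return length_inv_gev * HBAR_C_GEV_FM


def inverse_gev_to_s(time_inv_gev):
    """Time given in GeV^-1 (with hbar = 1) expressed in seconds."""
    return time_inv_gev * HBAR_GEV_S


def lifetime_from_width(width_gev):
    """Mean lifetime tau = hbar / Gamma for a total decay width in GeV."""
    return HBAR_GEV_S / width_gev


if __name__ == "__main__":
    print(inverse_gev_to_fm(1.0))    # 0.1973269804
    print(lifetime_from_width(1.0))  # 6.582119569e-25
```

A unit-aware library replaces these bare multiplications with objects that carry their units. Mistakes such as using $\hbar$ where $\hbar c$ is needed then surface as unit errors instead of wrong numbers.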

Speaker: Andre Scaffidi (INFN)


Plenary Session Thursday

Conveners: Benjamin Krikler (University of Bristol (GB)), Jim Pivarski (Princeton University)

28

CUDA and Python with Numba

Numba is a Just-in-Time (JIT) compiler for making Python code run faster on CPUs and NVIDIA GPUs. This talk gives an introduction to Numba, the CUDA programming model, and hardware. The aim is to provide a brief overview of:

- What Numba is,

- Who uses it,

- Whether you should use it in your application,

- How to use it, and

- Some different ways to get access to CUDA hardware.

Speaker: Dr Graham Markall (NVIDIA)

29

Powerful Python Packaging for Scientific Codes

This talk will cover the best practices for making a highly compatible and installable Python package based on the Scikit-HEP developer guidelines and scikit-hep/cookie. There will be a strong focus on compiled extensions. The latest developments in key libraries, like pybind11, cibuildwheel, and build, will be covered, along with potential upcoming advancements in Scikit-Build + CMake.

Speaker: Henry Fredrick Schreiner (Princeton University)

30

Active Learning for Level Set Estimation

Excursion is a Python package that efficiently estimates level sets of computationally expensive black-box functions. It is a confluence of Active Learning and Gaussian Process Regression. The difference between Level Set Estimation and Bayesian Optimization is that the latter focuses on finding maxima and minima, while the former aims to find the regions of parameter space where the function takes a specified value, like a contour.
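Level set estimation must decide where to evaluate the expensive function next. One common way is the straddle heuristic, which favours points whose Gaussian-process posterior is both uncertain and close to the target value. Points whose confidence band lies entirely above or below the threshold are already classified. The plain-Python sketch below takes the posterior mean and standard deviation as given; in Excursion they would come from a GPyTorch model. The heuristic, the 1.96 band width and the function names are assumptions for illustration, not necessarily Excursion's acquisition function.

```python
def straddle(mean, std, threshold, beta=1.96):
    """Large when the posterior is uncertain AND near the threshold."""
    return beta * std - abs(mean - threshold)


def classify(mean, std, threshold, beta=1.96):
    """'above'/'below' if the whole band mean +- beta*std is on one side."""
    if mean - beta * std > threshold:
        return "above"
    if mean + beta * std < threshold:
        return "below"
    return "uncertain"


def next_point(candidates, threshold):
    """candidates: (x, posterior_mean, posterior_std) triples."""
    return max(candidates, key=lambda c: straddle(c[1], c[2], threshold))[0]


if __name__ == "__main__":
    cands = [(0.0, 0.1, 0.05), (1.0, 0.5, 0.3), (2.0, 0.9, 0.01)]
    print(next_point(cands, threshold=0.5))            # 1.0
    print([classify(m, s, 0.5) for _, m, s in cands])  # ['below', 'uncertain', 'above']
```

Unlike Bayesian optimisation, the goal is not a single optimum. The loop keeps sampling until few candidates remain "uncertain".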

Excursion uses GPyTorch with state-of-the-art fast posterior fitting techniques and takes advantage of GPUs to scale computations to a higher-dimensional input space of the black-box function than traditional approaches.

Speaker: Irina Espejo Morales (New York University (US))

31

Gallifray: A Geometric Modelling and Parameter Estimation Framework for Black hole images using Bayesian Techniques

Recent EHT observations of the center of the M87 galaxy have opened up a whole new era for testing general relativity using BH (black hole) images generated from VLBI. While different theories have their own versions of BH solutions, there are also ‘geometric models’ that can be used to approximate the image of a BH and to understand the geometric properties of the radio source, such as ring size, width, etc. To incorporate and implement such a framework, different methods and techniques need to be explored for this kind of model comparison. We present ‘Gallifray’ [1], an open-source Python-based framework for geometric modeling and estimation/extraction of parameters. We employ Bayesian techniques for the analysis and extraction of parameters. In my presentation, I will talk about the workflow, the preliminary results obtained, and applications of the library for image/model comparison. I will also talk about the scope of the library in testing black hole images for any possible deviation from the Kerr spacetime.

References:

[1] https://github.com/Relativist1/Gallifray/

Speaker: Saurabh Saurabh (University of Delhi)

32

Ray/Dask

Speaker: Mr Clark Zinzow (AnyScale)

16:10

Break

33

Python for XENON1T

Over the last decades, noble liquid time projection chambers (TPCs) have become one of the foremost technologies in the search for WIMP dark matter. As detectors increase in size, so do the amount of data and the data rates, leading to higher demands on the analysis software.

The "streaming analysis for xenon experiments" (strax) is a software package developed by the XENON collaboration for the upcoming XENONnT experiment. It provides a framework for signal processing, data storage and reduction, as well as corrections handling, for noble liquid TPCs. The software is written in Python and relies heavily on the SciPy stack. The data itself is organized in a tabular format using a combination of NumPy structured arrays and Numba for high performance. This approach allows live online processing of the data with a throughput of 60 MB raw / sec / core. Strax is an open-source project and is also used by a couple of smaller liquid xenon TPCs such as XAMS and XEBRA.

In this talk we will explain the working principle and infrastructure provided by strax. We will show how a complex processing chain can be built up via so-called strax plugins, based on the XENON collaboration's open-source package straxen.

Speaker: Joran Angevaare (Nikhef)

34

Liquid Argon Reconstruction Done in pythON : LARDON

Liquid Argon Time Projection Chambers (LArTPC) are widely used as detectors in current and future neutrino experiments.

One of the advantages of LArTPCs is the possibility to collect data from a very large active volume (up to tens of kilotons in the case of the DUNE experiment) with mm³-scale resolution. LArTPCs of different designs are currently being tested in large-scale prototypes at CERN by collecting cosmic-ray and beam-test data. One of the prototypes collected cosmic data at a 10 Hz rate, with each event 115 MB in size; hence a fast online reconstruction tool is mandatory.
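A quick back-of-the-envelope estimate makes the need for fast reconstruction concrete. It assumes the 10 Hz rate were sustained, and uses decimal units:

$$
10\ \mathrm{Hz} \times 115\ \mathrm{MB/event} = 1150\ \mathrm{MB/s} \approx 1.15\ \mathrm{GB/s}, \qquad 1.15\ \mathrm{GB/s} \times 86\,400\ \mathrm{s/day} \approx 99\ \mathrm{TB/day}.
$$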

The reconstruction of LArTPC events relies on combining several 2D projections (along time and space) in order to retrieve the 3D and calorimetric information. This can be done in many ways: with pattern recognition, using particle-flow techniques, or by fitting tracks as lines in each projection and then matching them in 3D.

The latter approach is the one chosen for the LARDON pipeline. By exploiting the array structure of numpy, together with other optimized libraries such as numba, rtree and scikit-learn, LARDON reconstructs an event in 3D in 20 s, about 60 times faster than the collaboration's official code, with comparable performance. A large fraction of the LARDON code is devoted to noise handling; the noise is currently reduced by a factor of 10. In view of future cosmic-ray data-taking runs in fall 2021, LARDON is foreseen as the online reconstruction code for one of the DUNE prototypes.
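As a generic illustration of one common noise-filtering step in multi-channel readouts, noise that is coherent across a group of channels can be estimated at each time tick by the median over channels and then subtracted. This is an assumption about a typical technique, not a description of LARDON's actual noise filters; the function name and the toy noise amplitudes are hypothetical.

```python
import numpy as np

def remove_coherent_noise(adc):
    """Subtract, at each time tick, the median over all channels in the group.

    adc: array of shape (n_channels, n_ticks) with pedestal-subtracted samples.
    """
    common_mode = np.median(adc, axis=0)    # shape (n_ticks,)
    return adc - common_mode[np.newaxis, :]

rng = np.random.default_rng(2)
n_channels, n_ticks = 64, 1000
intrinsic = rng.normal(0.0, 1.0, (n_channels, n_ticks))        # uncorrelated per-channel noise
coherent = 5.0 * np.sin(np.linspace(0, 40 * np.pi, n_ticks))   # noise seen by every channel
raw = intrinsic + coherent

cleaned = remove_coherent_noise(raw)
print(raw.std(), cleaned.std())
```

The median is used rather than the mean so that a genuine track signal on a few channels does not pull the noise estimate.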

In this talk, I will first explain the specific features of LArTPC events. Using a notebook, I will show how the different types of noise are handled and removed. The hit finder and track-fitting methods will also be shown. The remaining issues and possible improvements of the code will then be discussed.

Speaker: Laura Amelie Zambelli (Centre National de la Recherche Scientifique (FR)) -

35

Python-based tools and frameworks for KM3NeT

The KM3NeT collaboration builds, operates and maintains two water Cherenkov detectors for neutrino astronomy and physics studies, which are currently under construction in the Mediterranean Sea. Operating the detector infrastructure and scientifically exploiting the recorded data requires a sophisticated software environment. For several tasks, e.g. detector monitoring, Python-based solutions are available. The main data acquisition software is implemented in C++, and the data are written out in a customized ROOT file format. To provide access to these data within a Pythonic environment, the km3io project uses the uproot package. Data processing can be handled with km3pipe, a pipeline workflow framework based on the thepipe project that adds many specialized KM3NeT functionalities. For the simulation of neutrino events, the GiBUU neutrino generator is adapted to the KM3NeT detector environment via the km3buu Python package. Besides a general overview of the Python tools and frameworks developed and used in KM3NeT, the talk focuses on these three packages.
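The pipeline workflow mentioned above can be pictured as a chain of modules, each receiving a data container (a "blob") from the previous one, modifying or filtering it, and passing it on. The sketch below is a hypothetical, dependency-free illustration of that pattern only; the class and method names (`Module`, `Pipeline.add`, `Pipeline.run`) are assumptions and are not the km3pipe or thepipe API.

```python
class Module:
    """Hypothetical pipeline stage: process() receives a dict and returns it, or None to drop it."""
    def process(self, blob):
        return blob

class Pipeline:
    def __init__(self):
        self.modules = []

    def add(self, module):
        self.modules.append(module)
        return self                      # allow chaining

    def run(self, source):
        """Push every blob from `source` through all modules and collect the survivors."""
        results = []
        for blob in source:
            for module in self.modules:
                blob = module.process(blob)
                if blob is None:         # a module filtered this event out
                    break
            else:
                results.append(blob)
        return results

class CountHits(Module):
    def process(self, blob):
        blob["n_hits"] = len(blob["hits"])
        return blob

class MinHits(Module):
    def __init__(self, threshold):
        self.threshold = threshold

    def process(self, blob):
        return blob if blob["n_hits"] >= self.threshold else None

events = [{"hits": [1, 2, 3]}, {"hits": [4]}, {"hits": [5, 6]}]
pipe = Pipeline().add(CountHits()).add(MinHits(2))
print(pipe.run(events))
```

Each module only needs to know the keys it reads and writes, which is what makes it easy to add experiment-specific stages to a generic pipeline.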

Speaker: Johannes Schumann (University of Erlangen) -

36

Quantum machine learning for jet tagging at LHCb

At LHCb, $b$-jets are tagged using several methods, some with high efficiency but low purity, others with high purity but low efficiency. In particular, our aim is to distinguish jets produced by $b$ and $\bar{b}$ quarks, which is fundamental for important physics measurements, e.g. of the $b\bar{b}$ charge asymmetry, which could be sensitive to New Physics processes. Since this is a classification task, Machine Learning (ML) algorithms can be used to achieve a good separation between $b$- and $\bar{b}$-jets: classical ML algorithms such as Deep Neural Networks have been shown to be far more efficient than standard methods, since they exploit the whole jet substructure. In this work, we present a new approach to $b$-tagging based on Quantum Machine Learning (QML), which uses the QML Python library PennyLane integrated with the classical ML frameworks PyTorch and TensorFlow. Official LHCb simulated $b\bar{b}$ di-jet events are studied, and different quantum circuit geometries are considered. The performance of the QML algorithms is compared with standard tagging methods and classical ML algorithms; preliminary results show that QML performs almost as well as classical ML, despite using fewer events due to time and computational constraints.
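To make the idea of a variational quantum classifier concrete, here is a minimal single-qubit example simulated directly with numpy rather than PennyLane: a feature $x$ is encoded as a rotation $R_Y(x)$, a trainable rotation $R_Y(\theta)$ follows, and the expectation value $\langle Z\rangle$ is the classifier output. The one-qubit circuit, the toy data and the training loop (gradient descent with the parameter-shift rule) are illustrative assumptions and are far simpler than the circuit geometries studied in the talk.

```python
import numpy as np

Z = np.diag([1.0, -1.0])

def ry(angle):
    """Single-qubit rotation about the Y axis (real 2x2 matrix)."""
    c, s = np.cos(angle / 2), np.sin(angle / 2)
    return np.array([[c, -s], [s, c]])

def circuit(x, theta):
    """Encode feature x, apply trainable rotation theta, return <Z> in [-1, 1]."""
    state = ry(theta) @ ry(x) @ np.array([1.0, 0.0])   # start in |0>
    return state @ Z @ state                            # amplitudes are real, no conjugate needed

def loss(theta, xs, labels):
    preds = np.array([circuit(x, theta) for x in xs])
    return np.mean((preds - labels) ** 2)

def grad(theta, xs, labels):
    """Mean-squared-error gradient using the parameter-shift rule for d<Z>/dtheta."""
    preds = np.array([circuit(x, theta) for x in xs])
    shifts = np.array([(circuit(x, theta + np.pi / 2) - circuit(x, theta - np.pi / 2)) / 2
                       for x in xs])
    return np.mean(2 * (preds - labels) * shifts)

# Toy data: label +1 for small x, -1 for x near pi (stand-ins for the two jet classes).
xs = np.array([0.1, 0.3, 0.2, 2.9, 3.0, 3.1])
labels = np.array([1, 1, 1, -1, -1, -1])

theta = 1.0
for _ in range(100):
    theta -= 0.5 * grad(theta, xs, labels)

preds = np.sign([circuit(x, theta) for x in xs])
print(theta, preds)
```

The parameter-shift rule evaluates the same circuit at shifted parameters instead of differentiating through the simulator, which is why it also applies to real quantum hardware.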

Speakers: Davide Nicotra (Universita e INFN, Padova (IT)), Davide Zuliani (Universita e INFN, Padova (IT))

-


Plenary Session Friday

Convener: Philip Grace (The University of Adelaide)

-

37

Python for HEP outreach in Rio de Janeiro, Brazil

This presentation will highlight the socio-economic aspects of Python. Given the social inequalities in big cities like Rio de Janeiro, learning Python offers new opportunities to underprivileged groups. We will show how we combine Python with CMS Open Data to encourage high-school students to follow their curiosity in STEM, and how we foster this interest through undergraduate projects at a later stage of their academic path.

Speaker: Helena Brandao Malbouisson (Universidade do Estado do Rio de Janeiro (BR)) -

38

Using Python, coffea, and ServiceX to Rediscover the Higgs. Twice.

CERN's Open Data Portal hosts thousands of datasets in many formats. The ServiceX project reads experiment data files of various formats and translates them into columnar formats. This talk will demonstrate taking the CMS and ATLAS Higgs demonstration samples from the CERN Open Data Portal and using ServiceX, coffea and other Python tools, such as Awkward Array, to rediscover the Higgs boson in the data of both experiments. Differences between working with data from the two experiments will be used to illustrate how the tool chain works together to produce the final plots.
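The final step of such a Higgs rediscovery is typically a histogram of the invariant mass of the selected leptons, $m^2 = (\sum E)^2 - |\sum \vec{p}|^2$. A minimal numpy sketch of that computation, assuming each lepton is given as $(E, p_x, p_y, p_z)$ in GeV and leaving out the ServiceX, coffea and Awkward Array machinery that would deliver the columns, is shown below; the helper names are hypothetical.

```python
import numpy as np

def invariant_mass(four_vectors):
    """Invariant mass of the summed four-momenta in each event.

    four_vectors: array of shape (n_events, n_particles, 4) holding (E, px, py, pz).
    """
    total = four_vectors.sum(axis=1)                 # sum over particles in each event
    e, px, py, pz = total.T
    m2 = e**2 - px**2 - py**2 - pz**2
    return np.sqrt(np.clip(m2, 0.0, None))           # guard against tiny negative rounding

def massless_lepton(pt, eta, phi):
    """Build (E, px, py, pz) for a massless particle from pt, eta, phi."""
    px, py, pz = pt * np.cos(phi), pt * np.sin(phi), pt * np.sinh(eta)
    return np.array([pt * np.cosh(eta), px, py, pz])

# Toy event: four leptons with pt = 31.25 GeV at eta = 0, spread evenly in phi,
# so the momenta cancel and the four-lepton mass equals the summed energy, 125 GeV.
phis = [0.0, np.pi / 2, np.pi, 3 * np.pi / 2]
event = np.array([[massless_lepton(31.25, 0.0, phi) for phi in phis]])
print(invariant_mass(event))
```

In a columnar analysis the same expression is evaluated on whole arrays of events at once, which is what lets the tool chain fill the final mass histogram without an explicit event loop.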

Speakers: Mr Baidyanath Kundu, Gordon Watts (University of Washington (US)) -

39

FuncADL: Functional Analysis Description Language

There is an increasing demand for declarative analysis interfaces that allow users to avoid writing event loops. This simplifies code and enables performance improvements via vectorized columnar operations. A new analysis description language (ADL) inspired by functional programming, FuncADL, was developed using Python as a host language. In addition to providing a declarative, functional interface for transforming data, FuncADL borrows design concepts from the field of database query languages to decouple the interface from the underlying physical and logical schemas. In this way, the same query can easily be adapted to select data from very different data sources and formats. In this talk, I will demonstrate the FuncADL query language interface by implementing the example analysis tasks designed by HSF and IRIS-HEP to benchmark the functionality of ADLs.
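The declarative, functional style can be illustrated with a toy query object that records `Select` and `Where` steps lazily and only executes them when the result is requested, so the same recorded query could in principle be handed to a different back end. This pure-Python sketch only mimics the general idea; the `Query` class and its `value()` method are hypothetical and are not the func_adl package's API.

```python
class Query:
    """Hypothetical lazy query: operations are recorded, not executed, until value()."""
    def __init__(self, source, ops=()):
        self.source = source
        self.ops = tuple(ops)

    def Select(self, func):
        return Query(self.source, self.ops + (("select", func),))

    def Where(self, predicate):
        return Query(self.source, self.ops + (("where", predicate),))

    def value(self):
        """Execute the recorded operations against an in-memory list (one possible 'back end')."""
        items = iter(self.source)
        for kind, func in self.ops:
            items = map(func, items) if kind == "select" else filter(func, items)
        return list(items)

events = [
    {"jets_pt": [45.0, 20.0, 12.0]},
    {"jets_pt": [80.0]},
    {"jets_pt": []},
]

# Per event: keep jets above 30 GeV, then keep only events with at least one such jet.
query = (Query(events)
         .Select(lambda e: [pt for pt in e["jets_pt"] if pt > 30.0])
         .Where(lambda jets: len(jets) > 0))
print(query.value())   # [[45.0], [80.0]]
```

Because the query is just a recorded sequence of operations, a different back end could translate it into, for example, columnar array operations instead of iterating over Python objects.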

Speaker: Mason Proffitt (University of Washington (US)) -

40

An Introduction to LUMIN: A deep learning and data science ecosystem for high-energy physics

LUMIN is a deep-learning and data-analysis ecosystem for High-Energy Physics. Similar to Keras and fastai, it is a wrapper framework for a graph computation library (PyTorch), but it includes many useful functions to handle domain-specific requirements and problems. It also aims to provide easy access to state-of-the-art methods while remaining flexible enough for users to inherit from base classes and override methods to meet their own needs.

It has already been used in a diverse range of applications, e.g. HEP searches with DNNs, industry predictive analytics using feature filtering and interpretation, and final-state reconstruction with 3D CNNs and GNNs.

This notebook tutorial aims to introduce new users to approaching and solving typical supervised classification problems with the package, and to describe a few of its more advanced features.

GitHub: https://github.com/GilesStrong/lumin

Docs: https://lumin.readthedocs.io/en/stable/?badge=stable

Speaker: Dr Giles Chatham Strong (Universita e INFN, Padova (IT)) -

16:00

BREAK

-


Plenary - Julia in HEP & Python

Convener: Eduardo Rodrigues (University of Liverpool (GB))

-

41

Julia in HEP

The Julia programming language was created in 2009, and version 1.0 with its promise of API stability was released in 2018. Its support for interactive programming, for parallel and concurrent paradigms, and its good interoperability with C++ make it a compelling option for high energy and nuclear physics.

In this talk we give a brief overview of the language, and demonstrate how to solve different HEP-specific problems in Julia.

Speakers: Jan Strube (PNNL), Marcel Stanitzki (Deutsches Elektronen-Synchrotron (DE)) -

42

Julia, a HEP dream comes true

Execution speed is critical for code developed for high energy physics (HEP) research. HEP experiments are typically highly demanding in terms of computing power. The LHC experiments use a computing grid, the Worldwide LHC Computing Grid, with one million computer cores to process their data. In this talk we will investigate the potential of the Julia programming language for HEP data analysis. Julia is a high-level, high-performance programming language that provides at the same time ease of code development similar to Python and runtime performance similar to C, C++ and Fortran. It offers the same level of abstraction as Python, an interpreter-like experience based on a technique similar to that of the ROOT interpreter, and a Jupyter notebook kernel. Results of performance measurements for HEP-specific applications, compared with Python and C++, will also be presented.

Speaker: Philippe Gras (Université Paris-Saclay (FR)) -

43

-

44

Workshop close-out

Speaker: Eduardo Rodrigues (University of Liverpool (GB))

-
