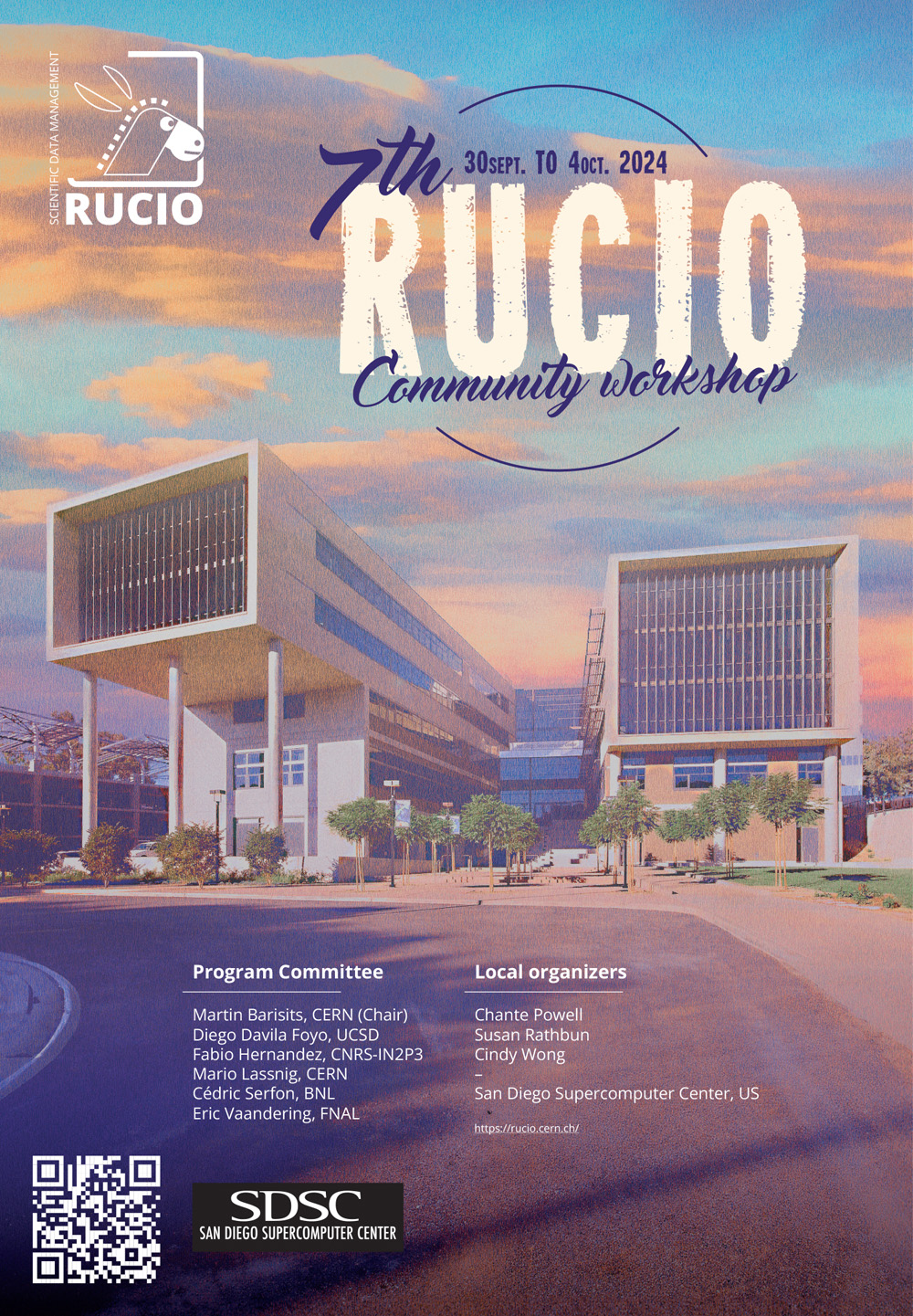

7th Rucio Community Workshop

San Diego Supercomputer Center, US

Rucio is a software framework that provides functionality to organize, manage, and access large volumes of scientific data using customisable policies. The data can be spread across globally distributed locations and across heterogeneous data centers, uniting different storage and network technologies as a single federated entity. Rucio offers advanced features such as distributed data recovery and adaptive replication, and is highly scalable, modular, and extensible. Rucio was originally developed to meet the requirements of the high-energy physics experiment ATLAS, and is continuously being extended to support the LHC experiments and other diverse scientific communities.

For this 7th edition of our workshop we will meet at the San Diego Supercomputer Center, US (Sep 30 - Oct 4).

We have a mailing list and a Mattermost channel that you can subscribe to; we will use them to send more details about the program.

Remote participation in the workshop via Zoom is also supported. Please register to receive the connection details.

Monday, 30 September

08:00 → 09:00 Registration (1h)

09:00 → 10:30 Welcome and Introduction

- 09:00

- 09:05 Logistics (5m)

Speaker: Susan Rathbun

- 09:10 Introduction to SDSC and more (35m)

Speaker: Frank Wuerthwein (Univ. of California San Diego (US))

- 09:45

10:30 → 11:00 Coffee break (30m)

11:00 → 12:30 Community talks

Convener: Diego Davila Foyo (Univ. of California San Diego (US))

- 11:00 Selection of Rucio to manage the SKA data lake (20m)

In this talk, we will summarise the process by which the SKA formally selected Rucio as the Distributed Data Management solution for the first version (0.1) of the SKA Regional Centre network (SRCNet) stack. Presented with two possible solutions for managing the archive of SKA Observatory data products, we set out to objectively select the tool which best matched the SRCNet architecture, non-functional requirements and use cases. Here we will present an overview of this process and highlight the features of Rucio that made it the more suitable candidate, as well as where work remains to be done.

Speakers: Dr James Collinson (SKAO), Dr Rosie Bolton (SKAO)

- 11:20 CMS Rucio Community Report (20m)

This presentation seeks to provide an overview of the operational and infrastructural aspects of Rucio as utilised by the CMS Experiment.

We will discuss the current status of Rucio within CMS, highlighting key operational metrics, system performance, and the challenges encountered. Additionally, we will explore ongoing developments and efforts to support the data management needs of the CMS Experiment.

Speaker: Rahul Chauhan (CERN)

- 11:40 Creating a Rucio service for KM3NeT [remote] (20m)

Once the detector is completed, the KM3NeT experiment will generate about 0.5 PB of raw data and 2 PB of simulation data per year. To manage these data, the Netherlands eScience Center has been asked to set up a Rucio server. In this presentation we want to show how we set up Rucio on Kubernetes, using Flux CD and a custom Helm chart that deploys the official Rucio Helm charts.

Speaker: Victor Azizi

- 12:00 DUNE Community Report (20m)

In this presentation, we will delve into the Rucio experience with the DUNE experiment, focusing on several key aspects. We will review the DUNE data module and share insights from our 2024 big data tests, including the WLCG Data Challenge, collaboration with LHC experiments, and our dress rehearsal. Additionally, we will discuss findings from the ongoing ProtoDUNE data taking with beam at CERN. We will outline our plans for the upcoming year, with a particular emphasis on the integration of CILogon tokens. Finally, we will offer recommendations for the Rucio development team based on our experiences and findings.

Speaker: Yuyi Guo (Fermi National Accelerator Lab. (US))

12:30 → 14:00 Lunch (1h 30m)

14:00 → 15:30 Community talks

Convener: Cedric Serfon (Brookhaven National Laboratory (US))

- 14:00 DaFab: Extending Rucio for Enhanced Earth Observation Data Management (20m)

The DaFab project introduces a significant application and extension of Rucio in the domain of Earth Observation (EO) data management. This initiative aims to enhance Copernicus data exploitation by leveraging and expanding Rucio's catalog capabilities, alongside advanced AI and High-Performance Computing technologies. We outline how DaFab plans to use Rucio's existing metadata management features as a foundation for addressing the unique challenges of EO data. Central to the project's vision is the expansion of Rucio's metadata capabilities, including the development of a unified, searchable catalogue of interlinked EO metadata. These enhancements are designed to facilitate improved discovery, access, and utilization of EO data. By exploring these proposed developments, we illustrate how the DaFab project not only aims to benefit from Rucio's existing strengths in cataloging but also intends to contribute back to the Rucio community, expanding its applicability in other data-intensive scientific domains.

Speaker: Dimitrios Xenakis (CERN)

- 14:20 Adopting Rucio for the Italian datalake: National experience and perspectives (20m)

With four national laboratories and twenty divisions, the National Institute for Nuclear Physics (INFN) is one of the largest research institutions in Italy. For more than 20 years INFN has been running a distributed computing infrastructure (the WLCG Tier-1 at Bologna-CNAF plus 9 Tier-2 centers) which currently offers about 200k CPU cores, 250 PB of enterprise-level disk space and 200 PB of tape storage, serving more than 100 international scientific collaborations. Some of the resources provided by each INFN computing center are integrated into worldwide Grid infrastructures, while other resources are exposed through Cloud interfaces.

Thanks also to the "Italian National Centre on HPC, Big Data and Quantum Computing" and the TeRABIT projects (both funded in the context of the Italian "National Recovery and Resilience Plan"), INFN is now revising and expanding its infrastructure to tackle the computing challenges of the next decades and to harmonize the management of the grid and cloud stacks. This process also involves the federation of national computing centers such as CINECA, with its HPC resources and the pre-exascale Leonardo machine.

In this context, Rucio and its ancillary services have been identified as a key component of the national datalake architecture.

This talk will first present our operational experience with Rucio, from deployment to the federation of heterogeneous backends, including the onboarding of the first scientific communities. In addition, we will show how we addressed a few specific requirements such as authorization models, user registration and support for external metadata catalogs. Finally, we will provide insight into how to extend the adoption of a Rucio-based data management infrastructure at the national level.

Speaker: Antonino Troja

- 14:40 Challenges and pitfalls of setting up Rucio for Small and Medium Experiments (20m)

The CERN IT department provides support for the needs of major LHC experiments and also small and medium experiments (SMEs).

In this talk we'll explain the deployments performed by the CERN IT community and the challenges and pitfalls we faced during the commissioning of Rucio prototype clusters for SMEs.

Speaker: Hugo Gonzalez Labrador (CERN)

- 15:00 Rucio Status at IHEP [remote] (20m)

With the development of large-scale data-intensive experiments in China, the Computing Center of the Institute of High Energy Physics (IHEP) started to use Rucio to manage Grid data distribution among multiple storage sites. At present, the HERD, JUNO and CEPC experiments at IHEP are using, or have decided to use, Rucio as their distributed data management system. This report will share our use cases for HERD, JUNO and CEPC, including the data management design and policies for each experiment, the development of experiment-oriented APIs and plugins, and our experience deploying and maintaining Rucio. It will also discuss plans and designs for a Rucio-based data management system for future large-scale scientific facilities at IHEP.

Speaker: Xuantong Zhang (Chinese Academy of Sciences (CN))

15:30 → 16:00 Coffee break (30m)

16:00 → 17:30 Technology talks

Convener: Eric Vaandering (Fermi National Accelerator Lab. (US))

- 16:00 Disk and Tape evolution in the Exabyte-scale era (30m)

Disk and tape technologies are continuously evolving. Rucio relies on multiple storage technologies to accommodate the data deluge from different scientific endeavors. In this talk, we'll take a deep dive into two storage technologies developed at CERN, EOS (disk media) and CTA (tape media), and give an outlook on the current trends behind these media and on what the market looks like.

Speaker: Hugo Gonzalez Labrador (CERN)

- 16:30 Rucio WebUI (20m)

This presentation will cover the latest advancements in the Rucio WebUI, offering a comprehensive overview of its current features and capabilities. In addition, we will outline the roadmap for future enhancements and planned improvements. The talk will also highlight key aspects of the interface designed to enhance the user experience. Furthermore, we will discuss how to deploy and get started with the Rucio WebUI, providing valuable insights for new users.

Speaker: Mayank Sharma (University of Michigan (US))

- 16:50 Data Challenge 2024 Retrospective (20m)

The Data Challenges are major orchestrated tests in preparation for the High-Luminosity LHC (an increase by a factor of ten), with the participation of all stakeholders (multiple experiments and their data-management services, sites, networks). The second Data Challenge (DC24) was conducted in February 2024. This talk will offer a summary of DC24, its goals and its achievements, with a focus on the takeaways for Rucio.

Speaker: Dimitrios Christidis (CERN)

17:30 → 20:30 Welcome reception (3h)

Venue: 15th floor, 7th College West

We will walk together from SDSC to the venue.

Tuesday, 1 October

09:00 → 10:30 Community talks

Convener: Eric Vaandering (Fermi National Accelerator Lab. (US))

- 09:00 Rucio at PIC [remote] (20m)

In this talk I will give an overview of the current state of Rucio at Port d'Informació Científica (PIC). I will review the projects that are currently using it and those for which we are considering its use. I will also provide an explanation of the developments we have made for its deployment and integration.

Speaker: Francesc Torradeflot

- 09:20 Rucio at LSST/Rubin (20m)

In this presentation, we will explore our experience with Rucio at the Rubin Observatory. Our discussion will cover several key areas:

- Scalability tests: insights into the performance and scalability evaluations of Rucio in the context of Rubin’s data needs.

- Integration with Rubin’s Data Butler: an overview of integrating Rucio with Rubin’s Data Butler using Hermes-K, which passes messages through Kafka.

- Monitoring and support: the current status of Rucio and PostgreSQL monitoring, and of Rucio deployment and support within the Rubin environment.

- Tape RSE implementation: how we deal with an order of magnitude more files going to tape than in HEP.

- Future needs: an examination of Rubin’s evolving requirements for Rucio services and how we plan to address them.

Speaker: Yuyi Guo (Fermi National Accelerator Lab. (US))

- 09:40 Cherenkov Telescope Array Observatory (CTAO) report [remote] (20m)

For the Cherenkov Telescope Array Observatory (CTAO), Rucio has been selected as the core technology for managing and archiving raw data collected from the La Palma and Chile telescope sites. It is a key component of the Bulk Data Management System (BDMS) within the Data Processing and Preservation System (DPPS), providing efficient storage, transfer, and replication. In addition to raw data and auxiliary data from the Cherenkov cameras, Rucio handles higher-level data and simulated data from DPPS's workload and pipelines, with combined data volumes projected to reach several petabytes per year.

In this talk, we share our experience deploying and operating Rucio for BDMS prototyping in Docker and Kubernetes. We highlight the successful implementation of data transfers and replication to grid-based storage elements (RSEs), using Rucio's conveyor daemons, an FTS server, X.509 certificates, and proxies. Through unit and integration tests in a GitLab CI environment, we validated Rucio's functionalities, and we outline future plans for deploying Rucio to DPPS data centers to meet the evolving needs of CTAO's BDMS.

Speakers: Georgios Zacharis (INAF - OAR), Syed Anwar Ul Hasan (ETH Zurich)

- 10:00 Rucio and the International Gravitational Wave Network (20m)

Instrumental observations from the International Gravitational Wave Network (IGWN) are aggregated into bulk data files for access in distributed computing environments via the OSDF and for long-term archival storage. I will discuss how Rucio is used to manage these data products in LIGO and Virgo, focusing particularly on the LIGO deployment architecture and client tooling used to exploit the rich features of Rucio as a comprehensive bulk data management solution in the gravitational wave community.

Speaker: James Clark (LIGO Laboratory, California Institute of Technology)

10:30 → 11:00 Coffee break (30m)

11:00 → 11:45 Technology talks

Convener: Martin Barisits (CERN)

- 11:00 Rucio JupyterLab extension status [remote] (20m)

In this talk, we will provide an overview of the Rucio JupyterLab extension, explaining its architecture and functionalities. We'll also discuss the latest developments and outline the roadmap for the future.

Speaker: Francesc Torradeflot

- 11:20 Data management services for the SRCNet in v0.1 (20m)

Following a software downselect, SKAO has chosen Rucio to form the backbone of its data management solution for the global network of regional centres in SRCNet v0.1.

In this presentation I will discuss the development of complementary services that integrate with the Rucio ecosystem to deliver the essential functionalities for SRCNet, focusing on data ingestion, astronomical data discovery, and data access.

Speaker: Rob Barnsley

11:45 → 12:30 Keynote: ESnet, title TBD

Convener: Inder Monga (Lawrence Berkeley National Laboratory)

12:30 → 14:00 Lunch (1h 30m)

14:00 → 15:00 Community talks

Convener: Diego Davila Foyo (Univ. of California San Diego (US))

- 14:00 The XENONnT data handling with Rucio [remote] (20m)

We present how the XENONnT experiment handles its data, acquired at a rate of ~1 PB/year, using Rucio as its data management tool. We focus mainly on the data structure and its distribution across the RSEs, and then on the strategy adopted to reprocess an entire Science Run. We will highlight a few issues that affect our performance.

Speaker: Luca Scotto Lavina

- 14:20 Integrating Rucio for Advanced Data Management at the ePIC Experiment [remote] (20m)

The science program of the Electron-Ion Collider (EIC) is both broad and compelling, and has found worldwide endorsement, reflected in the rapidly growing ePIC collaboration, which aims to realize the primary experiment at the EIC. The collaboration has over 850 members from more than 173 institutions around the world. Over the last two years, there has been excellent progress in the design of the experiment, and the collaboration is working jointly with the EIC Project on the Technical Design Report of the detector. To help facilitate the design process, ePIC runs monthly simulation campaigns on the Open Science Grid (OSG). This cadence produces many different types of simulations, amounting to many terabytes spread across thousands of files. With all of this data, the collaboration sought a way to manage its simulation data. The data must be easily accessible and discoverable for the collaboration, and the storage backend should be transparent to the user. With these constraints in mind, Rucio was chosen for scientific data management at ePIC. To facilitate the transition to Rucio, a test instance was set up and successfully tested at Jefferson Lab. This talk will describe the use case for Rucio in the ePIC collaboration and the efforts taken to incorporate it. Additionally, a roadmap for Rucio in ePIC will be presented, including current challenges in adopting Rucio.

Speaker: Thomas Britton

- 14:40 Rucio in Belle II (20m)

The Belle II experiment is a B-factory experiment located at the SuperKEKB accelerator complex at KEK in Japan. Since January 2021, Rucio has been responsible for managing all the data produced across the data centers used by Belle II over their full lifecycle. In particular, it ensures the proper replication of all the data according to the Belle II replication policies. In this talk we will give an overview of the usage of Rucio in Belle II, with a focus on the recent decision to use Rucio as the metadata service for Belle II.

Speaker: Cedric Serfon (Brookhaven National Laboratory (US))

15:00 → 15:30 Coffee break (30m)

15:30 → 15:50 Technology talks

Convener: Cedric Serfon (Brookhaven National Laboratory (US))

- 15:30 What’s Next for Tokens in Rucio (20m)

The Data Challenge 2024 was the first large-scale use of the new OAuth 2.0 token implementation in Rucio. Though it was declared a success, numerous concerns and open questions were voiced. This talk will offer a quick summary of the token design, then cover the lessons learned, the current efforts to refine the third-party-copy workflow, and the development of the client workflows.

Speaker: Dimitrios Christidis (CERN)

15:50 → 16:30 Discussions: Token discussion

Convener: Cedric Serfon (Brookhaven National Laboratory (US))

Wednesday, 2 October

08:55 → 10:30 OSDF Session

Convener: Diego Davila Foyo (Univ. of California San Diego (US))

- 08:55 Welcome and Introduction (5m)

Speaker: Diego Davila Foyo (Univ. of California San Diego (US))

- 09:00 Overview of the Pelican Platform (25m)

Speaker: Justin Hiemstra

- 09:25 Open Science Data Federation - operation and monitoring (20m)

Extensive data processing is becoming commonplace in many fields of science. Distributing data to processing sites and providing methods to share the data with collaborators efficiently has become essential. The Open Science Data Federation (OSDF) builds upon the successful StashCache project to create a global data access network. The OSDF expands the StashCache project to add new data origins and caches, access methods, monitoring, and accounting mechanisms. Additionally, the OSDF has become an integral part of the U.S. national cyberinfrastructure landscape due to the sharing requirements of recent NSF solicitations, which the OSDF is uniquely positioned to enable. The OSDF continues to be used by many research collaborations and individual users, who pull the data into many research infrastructures and projects.

Speaker: Fabio Andrijauskas (Univ. of California San Diego (US))

- 09:45

- 10:10 Integrations between XCache and Rucio (20m)

Speaker: Wei Yang (SLAC National Accelerator Laboratory (US))

10:30 → 11:00 Coffee break (30m)

11:00 → 12:30 OSDF Session

Convener: Cedric Serfon (Brookhaven National Laboratory (US))

- 11:00 Software-Defined Network for End-to-end Networked Science at Exascale (25m)

Science domains often view the network as an opaque infrastructure, lacking real-time interaction capabilities for control, status information, or performance negotiation. The Software-Defined Network for End-to-end Networked Science at Exascale (SENSE) system is motivated by a vision for a new smart network and smart application ecosystem that will provide a more deterministic and interactive environment for science domains. SENSE's model-based architecture enables automated end-to-end network service instantiation across multiple administrative domains, allowing applications to express high-level service requirements through an intent-based interface.

SENSE aims to empower National Labs and Universities to request and provision intelligent network services for their workflows. This comprehensive approach includes deploying SDN infrastructure across multiple labs and WANs, focusing on usability, performance, and resilience. Key features include policy-guided orchestration, auto-provisioning, scheduling of network devices, and Site Resources. Additionally, real-time full life-cycle network monitoring, measurement, and feedback provide resilience and efficiency for science domains.

In this talk, we will cover SENSE and its key components, including the Orchestrator, Network Resource Manager (NetworkRM), and Site Resource Manager (SiteRM). We will discuss the capabilities of these components and explore several use cases, including NRP Kubernetes Operator, QoS, Real-Time monitoring and debugging, and L2/L3VPN. These examples will illustrate how SENSE can enable intelligent, end-to-end network services, enhancing the performance and efficiency of science applications.

Speakers: Justas Balcas (California Institute of Technology (US)), Xi Yang (LBNL)

- 11:25 Integration between Rucio and SENSE (25m)

Speaker: Diego Davila Foyo (Univ. of California San Diego (US))

- 11:50 XRootd & XCache - news from the frontier (25m)

- Recent developments in XRootd, XrdCl and XCache

- XRootd & XCache in the Pelican project

- Plans for XRootd-6

Speaker: Matevz Tadel (Univ. of California San Diego (US))

12:30 → 14:00 Lunch (1h 30m)

14:00 → 15:00 Technology talks

Convener: Mario Lassnig (CERN)

- 14:00 PostgreSQL operation for the Rucio service at BNL (20m)

The Belle II experiment at KEK, Japan, has for several years been using Rucio as its data management service, maintained at BNL. It is currently the second-largest Rucio service, behind that of the ATLAS experiment at CERN, in terms of the number of files stored in its catalog. Rucio uses a relational database underneath to store information about the data it manages. While ATLAS uses Oracle as its relational database, Belle II uses PostgreSQL. Although both databases have similar capabilities, they differ considerably in how they are operated. Since Rucio is developed at CERN and has been used by experiments at CERN, the operational knowledge of using Oracle as the backend relational database is well known and documented. For PostgreSQL, on the other hand, information about configuring and operating it for a high-performance Rucio service is not as well documented. In this presentation, we will describe the experience of running the PostgreSQL database for Belle II Rucio at BNL, highlighting operations and settings in PostgreSQL that will help administrators of Rucio PostgreSQL services in production environments.

Speaker: Hironori Ito (Brookhaven National Laboratory (US))

- 14:20 Streamlining open data policies in the Rucio data management platform (20m)

Rucio is used to manage data by ATLAS and CMS, among other scientific experiments. Because Rucio does not recognise open data as a type, open data is managed outside the system and is therefore likely duplicated; today this volume is 10 petabytes (85% of CERN open data), and it is growing. CERN experiments are mandated by the CERN Open Science Policy to release internal data as open data, and similar policies are implemented by other scientific institutions.

There is an opportunity to optimize storage costs and streamline open data policies by relying on Rucio to provide secure access to experimental open data artifacts without incurring extra storage costs, while preserving the safeguards for long-term data preservation and adhering to FAIR principles.

In this talk we'll present the rationale behind this project proposal and its implementation roadmap, funded by the OSCARS EU project.

Speaker: Hugo Gonzalez Labrador (CERN)

- 14:40 Decentralized Storage with Rucio: Seal Storage and the ATLAS Project at CERN (20m)

With the seemingly exponential growth in the volume of data in recent years, the challenges for data engineering teams in operationalizing their big data workloads (e.g. AI/ML) while ensuring access and integrity have grown increasingly complex. More often than not, these challenges have to be surmounted with limited budgets, which can be swiftly consumed depending on the cloud storage provider used. Solving these challenges effectively requires a radical departure from the status quo, putting data providers back in control of their data. Fortunately, recent developments in blockchain technology have produced novel solutions to these challenges in the form of decentralized storage networks. Seal Storage has been, and continues to be, at the forefront of the nascent ecosystem of such networks. Their decentralized storage platform is a secure, sustainable and high-performance solution for easily storing, synchronizing, and searching large datasets at scale, at a fraction of the cost of traditional cloud storage. By leveraging decentralized storage protocols such as Filecoin and IPFS, it bolsters the concept of No Single Point of Failure (SPOF), increasing data resiliency at zero cost for data providers. By providing APIs and associated toolchains using common protocols such as Simple Storage Service (S3), onboarding data can be achieved with minimal effort on the part of data providers. All content on Seal’s storage platform is indexed with a unique fingerprint (cryptographic hash) called a Content Identifier (CID), which can be used at any time to verify the integrity of data on the blockchain. In this presentation we will highlight Seal’s storage platform and its principles in depth, and how the ATLAS project at CERN has leveraged the platform for the long-term 10 PiB R&D project. Finally, we will briefly cover our roadmap for future plans.

Speaker: Mr Matt Nicholls (SEAL Storage)

15:00 → 15:30 Meet the developers / Q&A

15:30 → 16:00 Coffee break (30m)

16:00 → 17:30 Meet the developers / Q&A

16:00 → 17:30 Tutorials

- 16:00 Rucio tutorial for newcomers (1h 30m)

Speakers: Mario Lassnig (CERN), Riccardo Di Maio (CERN)

Thursday, 3 October

09:00 → 09:45 Keynote: Bridging the Data Gaps to Democratize AI in Science, Education and Society

Convener: Dr Ilkay Altintas (SDSC)

09:45 → 10:30 Community talks

Convener: Martin Barisits (CERN)

- 09:45 Distributed Data Management with Rucio for the Einstein Telescope [remote] (20m)

Modern physics experiments are often led by large collaborations including scientists and institutions from different parts of the world. To cope with ever-increasing computing and storage demands, computing resources are nowadays offered as part of a distributed infrastructure. Einstein Telescope (ET) is a future third-generation interferometer for gravitational wave (GW) detection, and is currently in the process of defining a computing model to sustain its physics goals. A critical challenge for present and future experiments is an efficient and reliable data distribution and access system. Rucio is a framework for data management, access and distribution. It was originally developed by the ATLAS experiment and has been adopted by several collaborations within the high energy physics domain (CMS, Belle II, DUNE) and outside it (ESCAPE, SKA, CTA). In the GW community Rucio is used by the second-generation interferometers LIGO and Virgo, and is currently being evaluated for ET. ET will observe a volume of the Universe about one thousand times larger than LIGO and Virgo, which will translate into a higher data acquisition rate. In this contribution, we briefly describe Rucio usage in current GW experiments, and outline the ongoing R&D activities for integrating Rucio within the ET computing infrastructure, which include the setup of an ET Data Lake based on Rucio for future Mock Data Challenges. We discuss the customization of Rucio features for the GW community: in particular, we describe the implementation of RucioFS, a POSIX-like filesystem view that provides the user with a more familiar structure of the Rucio data catalogue, and the integration of the ET Data Lake with mock Data Lakes belonging to other experiments within the astrophysics and GW communities. This is a critical feature for astronomers and GW data analysts, since they often require access to open data from other experiments for sky localisation and multi-messenger analysis.

Speakers: Federica Legger (Universita e INFN Torino (IT)), Lia Lavezzi (INFN Torino)

- 10:05 Rucio framework in the Bulk Data Management System for the CTA Archive – Release 0 [remote] (20m)

In this talk we are going to describe the operational and conceptual design of the bulk archive management system involved in the prototyping activities of the Cherenkov Telescope Array Observatory (CTAO) Bulk Archive. This particular archive in the CTA Observatory takes care of the storage and management of the lower-level data products coming from the Cherenkov telescopes, including their cameras, auxiliary subsystems and simulations. Scientific raw data produced at the two CTAO telescope sites, one in the Northern hemisphere and the other in the Southern, will be transferred to four off-site data centers, where they will be accessed and automatically reduced to higher-level data products. This Archive system will provide a set of tools based on the OAIS (Open Archival Information System) standard, including a data transfer system, a general replicated catalog that can be queried, an easy interface to retrieve and access data, and a customized, versatile data organization depending on user requirements.

We have already developed the first version of the user interface, based on the Rucio package, which performs three basic functions according to the CTA Observatory requirements: file ingestion, search and retrieval. This version has been deployed and tested successfully on a DESY (Zeuthen) test cluster with a pre-installed Kubernetes framework:

- a newly recorded data file is ingested as a replica into the Rucio cluster using a JSON schema file as input, and automatically acquires a unique Physical File Name (PFN) provided by Rucio; a second replica can be created on demand as a backup for safety reasons

- an already ingested file can be searched for through its DID («scope»:«filename») or its unique PFN

- finally, an already ingested file can be retrieved and saved locally using the same interface, again only through its filename

Currently, we are working on a database Kubernetes module to store all relevant metadata scanned during ingestion in a dedicated external non-relational database. This module will extend the Release 0 BDMS functionalities and allow archived data to be searched and retrieved by their physical information. The database technology currently used for this purpose is a replicated and sharded RethinkDB cluster. We are about to build it on a K8s instance in order to test the developed interfaces in the coming weeks.

Speaker: Georgios Zacharis (INAF, Astronomical Observatory of Rome)

10:30 → 11:00 Coffee break (30m)

11:00 → 11:50 Technology talks

Convener: Eric Vaandering (Fermi National Accelerator Lab. (US))

- 11:00 FTS 2024: Current and Future [remote] (40m)

A short overview of the FTS project throughout 2024, including development highlights, thoughts on the Data Challenge 2024, the token transition work, and conclusions from the FTS & XRootd Workshop 2024.

Finally, the presentation addresses the future direction of the FTS software, presenting the main scalability and scheduling problems and how (some of) them are being addressed.

Speaker: Mihai Patrascoiu (CERN)

11:50 → 12:30 Discussions: Development roadmap - community discussion

Convener: Eric Vaandering (Fermi National Accelerator Lab. (US))

12:30 → 14:00 Lunch (1h 30m)

14:00 → 15:30 Meet the developers / Q&A

14:00 → 15:30 Tutorials

- 14:00

15:30 → 16:00 Coffee break (30m)

16:00 → 17:30 Datacenter tour

16:00 → 17:30 Meet the developers / Q&A

18:00 → 21:00 Workshop dinner (3h)

Ballast Point Brewery, 9045 Carroll Way, San Diego, CA 92121

Bus transportation will be provided between SDSC and the Ballast Point Brewery.

Friday, 4 October

09:00 → 09:30 Community talks

Convener: Martin Barisits (CERN)

- 09:00 Rucio for ATLAS Computing: Special Topics (20m)


Speaker: Mario Lassnig (CERN)

09:30 → 10:30 Technology talks

Convener: Mario Lassnig (CERN)

- 09:30 Conditions payload data management with Rucio [remote] (20m)

The HSF Conditions Data management schema design separates metadata management from the conditions data payloads themselves. Rucio would be a natural solution for managing replicas of those conditions data payload files. Discuss!

Speaker: Paul James Laycock (Universite de Geneve (CH))

- 09:50 Rucio and Cloud Storage Providers: ATLAS updates (20m)

In this presentation, we explore the integration of cloud storage solutions within the ATLAS experiment using Rucio and FTS, with a focus on the SEAL case study. Cloud storage providers offer compelling use cases for the scientific community, such as on-demand scaling, multi-cloud Kubernetes clusters, and long-term archival options. We will discuss SEAL's current infrastructure, including performance metrics like transfer efficiency and throughput, as well as ongoing improvements with ESnet integration and storage consistency checking.

Additionally, we introduce the Rucio Cloud Cost Management Daemon, designed to optimize cloud usage and reduce operational costs.

Speaker: Mayank Sharma (University of Michigan (US))

- 10:10 Monitoring Rucio (20m)

Monitoring of Rucio has been a desired feature brought up by many communities over the years. I have developed and deployed a simplified monitoring solution that utilises Prometheus, Hermes, and Logstash to provide dashboards for communities.

Speaker: Timothy John Noble (Science and Technology Facilities Council STFC (GB))

10:30 → 11:00 Coffee break (30m)

11:00 → 12:00 Conclusion & Closing

- 11:00
