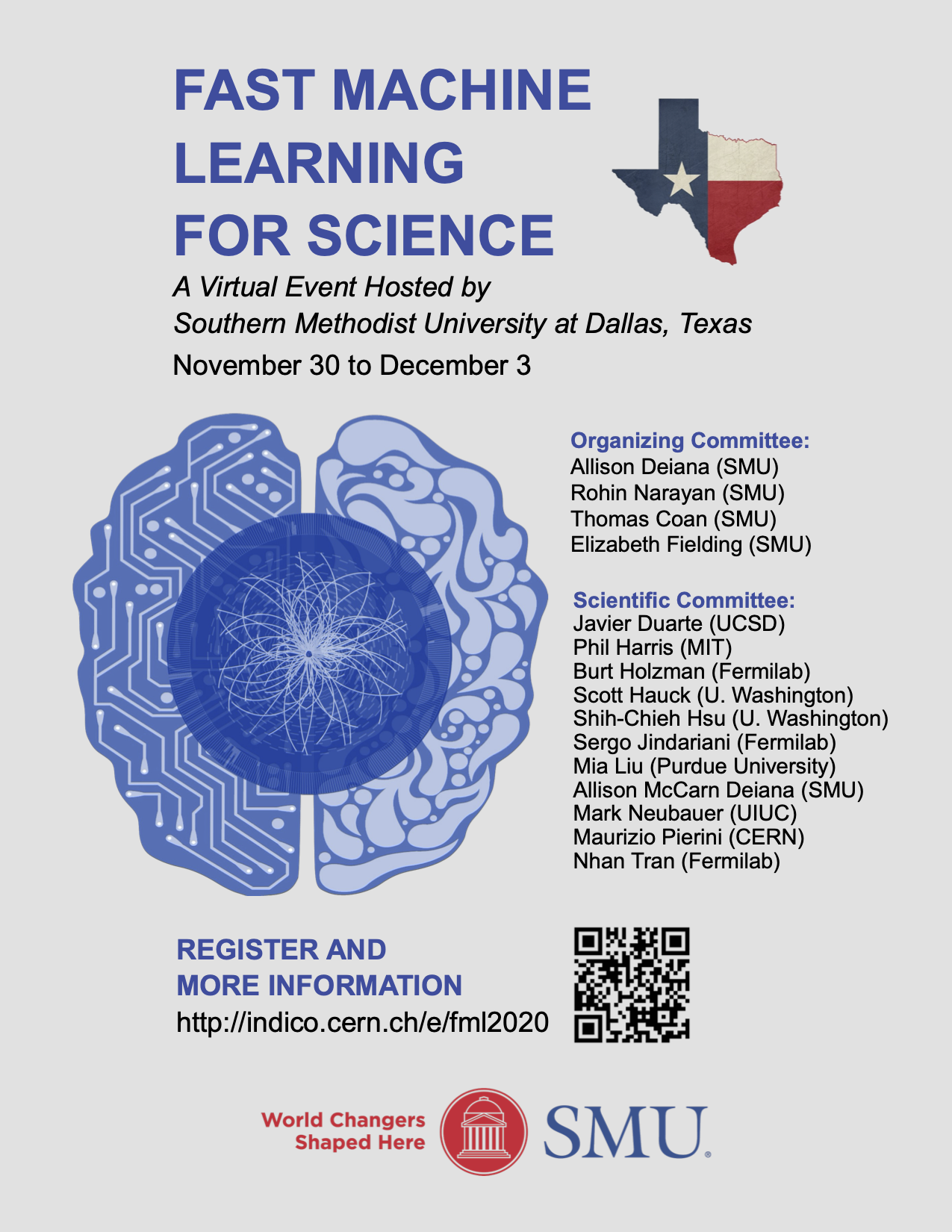

Fast Machine Learning for Science Workshop

Southern Methodist University

We are pleased to announce a four-day event, "Fast Machine Learning for Science", which will be hosted virtually by Southern Methodist University from November 30 to December 3. The first three days (November 30 to December 2) will be workshop-style, with invited and contributed talks. The last day will be dedicated to technical demonstrations and coding tutorials.

As advances in experimental methods produce ever-larger datasets and higher-resolution, more complex measurements, machine learning (ML) is rapidly becoming a major tool for analyzing complex data across many disciplines. Following the rapid rise of ML through deep learning algorithms, the investigation of processing technologies and strategies to accelerate deep learning and inference is well underway. We envision that this will revolutionize experimental design and data processing as part of the scientific method, greatly accelerating discovery. This workshop focuses on current and emerging methods and scientific applications for deep learning and inference acceleration, including novel methods of efficient ML algorithm design, ultrafast on-detector inference and real-time systems, acceleration as a service, hardware platforms, coprocessor technologies, distributed learning, and hyperparameter optimization.

Abstract submission deadline: October 30, 2020 3:59PM CDT

Organizing Committee:

Allison Deiana (Southern Methodist University)

Rohin Narayan (Southern Methodist University)

Thomas Coan (Southern Methodist University)

Elizabeth Fielding (Southern Methodist University)

Scientific Committee:

Javier Duarte (UCSD)

Phil Harris (MIT)

Burt Holzman (Fermilab)

Scott Hauck (U. Washington)

Shih-Chieh Hsu (U. Washington)

Sergo Jindariani (Fermilab)

Mia Liu (Purdue University)

Allison McCarn Deiana (Southern Methodist University)

Mark Neubauer (U. Illinois Urbana-Champaign)

Maurizio Pierini (CERN)

Nhan Tran (Fermilab)

- 10:00 → 10:05

Welcome and Orientation 5m

Speaker: Allison McCarn Deiana (Southern Methodist University (US))

- 10:05 → 10:10

Welcome from SMU 5m

Speaker: Dr Karisa Cloward (Southern Methodist University)

- 10:10 → 10:15

- 10:20 → 10:50

- 11:00 → 11:15

Coffee Break 15m

- 11:15 → 11:45

Efficient Neural Network Training and Inference 30m

Speaker: Amir Gholami

- 11:55 → 12:55

Lunch 1h

- 12:55 → 13:25

- 13:35 → 14:05

- 14:15 → 14:30

Coffee Break 15m

- 14:30 → 14:38

Quantifying DNA Damage in Comet Assay images using Neural Networks 8m

Proton therapy for cancer treatment is a rapidly growing field, and increasing evidence suggests that it induces more complex DNA damage than photon therapy. Accurate comparison between the two treatments requires quantification of the damage caused; one method for this is the comet assay. The program outlined here is based on a neural network architecture and aims to speed up the analysis of comet assay images and to provide an accurate, quantified assessment of the DNA damage levels apparent in them.

The comet assay is an established technique in which DNA fragments are spread out under the influence of an electric field, producing a comet-like object. The elongation and intensity of the comet tail (consisting of DNA fragments) indicate the level of damage incurred. Many methods to measure this damage exist, using a variety of algorithms. These can be time-consuming, so often only a small fraction of the comets in an image are analysed. The automatic analysis presented here aims to improve on this.

Object detection and localisation, implemented with a Mask R-CNN neural network, are used to perform instance segmentation of the comets. The identified comet instances are saved as masks which, when overlaid onto the original image, provide the pixel coordinates of each identified comet. The model has achieved a minimum accuracy of 90% in identifying comets in an image. It was trained via transfer learning from weights pre-trained on Microsoft's COCO dataset, which contains over 200,000 labelled images. This significantly reduced both the training time and the number of images required for training (fewer than 70 images were used here).

To supplement the training and testing of the network, a Monte Carlo model is being developed to create simulated comet assay images.
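The masks are what turn detection into measurement. As an illustration only, the sketch below takes one comet's binary mask and the original image and computes two widely used comet-assay quantities: the percentage of DNA in the tail and the tail moment (tail length multiplied by the fraction of DNA in the tail). The function name `comet_metrics`, the left-to-right orientation, and the known head/tail boundary `tail_start_col` are assumptions; the abstract does not state which damage metrics the program reports.

```python
import numpy as np

def comet_metrics(image, mask, tail_start_col):
    """Compute simple damage metrics for one comet from its instance mask.

    Assumes (for illustration) that the head is on the left, the tail extends
    to the right, and tail_start_col (first column of the tail) is known.
    Returns (pixel_coords, percent_dna_in_tail, tail_moment).
    """
    mask = mask.astype(bool)
    coords = np.argwhere(mask)                # (row, col) of every comet pixel
    intensity = np.where(mask, image, 0.0)    # keep only this comet's pixels
    total = intensity.sum()
    tail = intensity[:, tail_start_col:].sum()
    percent_tail = 100.0 * tail / total
    # Tail length: from the start of the tail to the right-most comet pixel.
    tail_length = coords[:, 1].max() - tail_start_col + 1
    tail_moment = tail_length * percent_tail / 100.0
    return coords, percent_tail, tail_moment

# Toy 3x6 image of uniform intensity with a comet covering columns 0-4.
image = np.ones((3, 6))
mask = np.zeros((3, 6), dtype=bool)
mask[:, :5] = True
coords, percent_tail, tail_moment = comet_metrics(image, mask, tail_start_col=3)
print(len(coords), percent_tail, tail_moment)
```

In a real pipeline the head/tail boundary would itself have to be estimated, for example from the intensity profile along the comet axis.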

Speaker: Selina Dhinsey

- 14:40 → 14:46

Autoencoders for anomaly detection in real-time at the LHC 6m

At the LHC, data are collected at 40 MHz, but only 1 kHz of data can be stored for physics studies. A typical LHC experiment therefore operates a real-time selection system that must decide whether each event should be stored or discarded. The first stage of this system, the Level-1 (L1) trigger, runs on custom electronic boards equipped with FPGAs, and an L1 algorithm must operate within a latency of O(1 μs). Within this system, we aim to run an unsupervised algorithm designed to identify outliers, possibly highlighting the occurrence of new phenomena in LHC collisions. To this end, we design an autoencoder that processes particle four-momenta, and we use hls4ml to deploy the model on an FPGA and evaluate its resource consumption and latency in various configurations.
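The selection logic of an autoencoder-based anomaly trigger can be sketched compactly: learn to reconstruct ordinary events, then flag events whose reconstruction error exceeds a threshold set by the acceptable rate. To stay dependency-free, this sketch replaces the neural network with a linear autoencoder obtained from an SVD (equivalent to PCA); the toy data, the 12 input features, the latent size, and the 0.1% acceptance are illustrative assumptions, far looser than the 1 kHz out of 40 MHz budget quoted above.

```python
import numpy as np

rng = np.random.default_rng(0)

def fit_linear_autoencoder(x, latent_dim):
    """Fit a linear autoencoder: the encoder is the top principal directions."""
    mean = x.mean(axis=0)
    _, _, vt = np.linalg.svd(x - mean, full_matrices=False)
    w = vt[:latent_dim]                       # shape (latent_dim, n_features)
    return mean, w

def anomaly_score(x, mean, w):
    """Mean squared reconstruction error per event."""
    z = (x - mean) @ w.T                      # encode to the latent space
    x_hat = z @ w + mean                      # decode back to input space
    return np.mean((x - x_hat) ** 2, axis=1)

# Toy "background": 12 features (e.g. flattened four-momenta) driven by 3 factors.
n_features, latent_dim = 12, 3
mixing = rng.normal(size=(latent_dim, n_features))
background = (rng.normal(size=(10_000, latent_dim)) @ mixing
              + 0.05 * rng.normal(size=(10_000, n_features)))

mean, w = fit_linear_autoencoder(background, latent_dim)

# Threshold chosen so that about 0.1% of background events are accepted.
threshold = np.quantile(anomaly_score(background, mean, w), 0.999)

# A toy event that does not follow the background correlations.
anomaly = rng.normal(scale=3.0, size=(1, n_features))
print(anomaly_score(anomaly, mean, w)[0] > threshold)
```

On the FPGA only the encode/decode arithmetic and the comparison with a precomputed threshold would run per event; fitting and threshold setting happen offline.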

Speaker: Katya Govorkova (CERN)

- 14:48 → 14:56

Design of a reconfigurable autoencoder algorithm for detector front-end ASICs 8m

The next generation of particle detectors will feature unprecedented readout rates and will require optimized lossy data compression and transmission from front-end application-specific integrated circuits (ASICs) to the off-detector trigger processing logic. Typically, channel aggregation and thresholding are applied, removing information useful for particle reconstruction in the process. A new approach to this challenge is to embed machine learning (ML) algorithms directly in ASICs on the detector front end, allowing intelligent data compression before transmission. We present an algorithm optimized for the High-Granularity Endcap Calorimeter (HGCal) of the CMS experiment, which will be installed for the high-luminosity upgrade of the Large Hadron Collider. We trained a neural network (NN) autoencoder to achieve optimal compression fidelity for physics reconstruction while respecting hardware constraints on internal parameter precision, computational (circuit) complexity, and area footprint. The autoencoder improves over non-ML algorithms in reconstructing low-energy signals in high-occupancy environments. Quantization-aware training is performed using QKeras, and the network is implemented in RTL using the hls4ml compiler tool. Finally, we discuss the flexibility of our solution, in which sensors may be individually tuned to optimize performance across the full detector and over the range of run conditions expected during the detector's lifetime.
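Quantization-aware training rests on a simple forward operation: rounding every value onto a fixed-point grid with a given total bit width and number of integer bits, saturating at the ends of the range. The NumPy sketch below mimics the `ap_fixed<W, I>` convention used by HLS tools (the sign bit counted among the integer bits); it illustrates the idea only and is not QKeras's `quantized_bits`, whose bit-counting and rounding conventions may differ in detail.

```python
import numpy as np

def quantize_fixed(x, total_bits, integer_bits):
    """Round x onto a signed fixed-point grid, saturating out-of-range values.

    Follows the ap_fixed<W, I> convention: W = total_bits includes the sign
    bit, and I = integer_bits also counts the sign bit.
    """
    frac_bits = total_bits - integer_bits
    step = 2.0 ** -frac_bits                     # value of one LSB
    min_val = -(2.0 ** (integer_bits - 1))
    max_val = 2.0 ** (integer_bits - 1) - step
    # np.round rounds halves to even; hardware rounding modes may differ.
    return np.clip(np.round(x / step) * step, min_val, max_val)

weights = np.array([0.3, -1.7, 5.0])
print(quantize_fixed(weights, total_bits=6, integer_bits=2))
```

With 6 bits of which 2 are integer bits, the grid step is 1/16, so 0.3 becomes 0.3125, -1.7 becomes -1.6875, and 5.0 saturates at 1.9375. During training, gradients are usually passed straight through this rounding step.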

Speaker: Giuseppe Di Guglielmo (Columbia University)

- 14:58 → 15:04

Large and compressed Convolutional Neural Networks on FPGAs with hls4ml 6m

We present ultra-low-latency deep neural networks with large convolutional layers on FPGAs using the hls4ml library. Taking benchmark models trained on public datasets, we discuss various options to reduce the model size and, consequently, the FPGA resource consumption: pruning, quantization to fixed-point precision, and extreme quantization down to binary or ternary precision. We demonstrate how inference latencies of O(10) microseconds can be obtained while maintaining high accuracy.
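Extreme quantization replaces each weight with one of two or three values. A common recipe, assumed here because the abstract does not specify the scheme, is sign binarization with a per-layer scale and ternarization with a magnitude threshold; the 0.7 × mean(|w|) threshold is the heuristic popularized by Ternary Weight Networks. Because the per-layer scale can be applied once per output, each multiplication reduces to a sign flip or a skip.

```python
import numpy as np

def binarize(w):
    """Map weights to {-a, +a} with a = mean(|w|) (sign binarization)."""
    scale = np.mean(np.abs(w))
    return np.where(w >= 0, scale, -scale)

def ternarize(w, threshold_factor=0.7):
    """Map weights to {-a, 0, +a}; small-magnitude weights become exact zeros."""
    delta = threshold_factor * np.mean(np.abs(w))
    keep = np.abs(w) > delta
    scale = np.mean(np.abs(w[keep])) if keep.any() else 0.0
    return np.where(keep, np.sign(w) * scale, 0.0)

w = np.array([0.9, -0.05, 0.4, -0.6, 0.02, -0.3])
print(binarize(w))
print(ternarize(w))
```

Ternary weights also introduce exact zeros, so ternarization doubles as a form of pruning.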

Speaker: Vladimir Loncar (CERN)

- 15:06 → 15:12

Convolutional Neural Network Fast Inference Deployment on FPGAs 6m

From self-driving cars to particle physics, convolutional neural networks (CNNs) have many applications. To greatly decrease inference latency, CNNs and other deep learning architectures can be deployed on hardware compute platforms such as field-programmable gate arrays (FPGAs). The open-source package hls4ml is used for model conversion and RTL synthesis. The work presented here describes methods by which the generated Verilog/VHDL can be optimized further to reduce latency and hardware resource requirements.

Speaker: Andrew Harmon Reis (Southern Methodist University (US))

- 15:14 → 15:20

Convolutional Neural Networks for real-time processing of ATLAS Liquid-Argon Calorimeter signals with FPGAs 6m

Physicists use the Large Hadron Collider (LHC) at CERN in Geneva to produce proton-proton (pp) collisions and study rare particle-physics processes at high energies. Within the Phase-II upgrade, the LHC and the particle detectors will be prepared for high-luminosity operation, starting in 2027. One challenge is the high level of signal pile-up caused by up to 200 simultaneous pp collisions. Moreover, in the Liquid-Argon (LAr) calorimeters of the ATLAS detector, the signals of up to 25 consecutive collisions overlap, which makes it even harder to reconstruct the energy deposited in the detector.

To cope with this, the readout electronics of the ATLAS LAr calorimeters will be upgraded to allow real-time processing of the full sequence of digitized pulses sampled at 40 MHz. Conventional signal processing applies an optimal filter to reconstruct the energy of the detector hits. However, the high level of pile-up and a new trigger scheme require a more advanced signal reconstruction method.

We have developed a dilated convolutional neural network (CNN) that improves the efficiency of identifying significant energy deposits above a given noise threshold and reduces the number of incorrectly identified hits compared to an optimal filter. Since the CNN is to be implemented on a field-programmable gate array (FPGA), the number of parameters and mathematical operations is kept under tight control. A second network structure aims to reconstruct the hit energy, using information from the hit identification network. The CNN training data are generated by a dedicated simulation program, called AREUS, which provides realistic signal sequences including all noise sources.
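The appeal of dilation for pulse sequences is easy to see in a plain implementation: a kernel of size k with dilation d spans (k − 1)·d + 1 consecutive samples, so a few layers can cover a long pulse with very few weights. The causal, single-channel NumPy sketch below is illustrative only; the kernel sizes, dilations, and weights of the actual network are not given in the abstract.

```python
import numpy as np

def causal_dilated_conv1d(x, kernel, dilation):
    """Single-channel causal dilated convolution.

    y[t] = sum_j kernel[j] * x[t - j * dilation], with x treated as zero
    before the first sample, so y[t] depends only on current and past samples.
    """
    k = len(kernel)
    pad = (k - 1) * dilation
    x_padded = np.concatenate([np.zeros(pad), x])
    y = np.zeros(len(x))
    for t in range(len(x)):
        for j in range(k):
            y[t] += kernel[j] * x_padded[pad + t - j * dilation]
    return y

def receptive_field(kernel_sizes, dilations):
    """Number of input samples seen by one output of a stack of layers."""
    return 1 + sum((k - 1) * d for k, d in zip(kernel_sizes, dilations))

# Impulse response: the kernel taps appear every `dilation` samples.
impulse = np.zeros(10)
impulse[0] = 1.0
print(causal_dilated_conv1d(impulse, kernel=[1.0, 2.0, 3.0], dilation=2))
print(receptive_field([3, 3, 3], [1, 2, 4]))
```

Three layers of kernel size 3 with dilations 1, 2, and 4 see 15 samples while using only 9 weights, which is the kind of economy that matters when every multiplier is an FPGA resource.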

Moreover, we implemented the CNN structure in firmware in an automated way, translating the CNN training output file into VHDL targeting an Intel Stratix 10 FPGA. Linearized sigmoid activation functions are tested and compared with the full-precision calculation, and very good agreement between the FPGA and computer-based calculations is observed. We also analyzed the FPGA resource usage and the maximum frequency at which the algorithm can be executed.
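A piecewise-linear ("hard") sigmoid is one common way to linearize the activation for firmware: it needs only a multiply, an add, and a clamp instead of an exponential. The slope of 0.25 (the sigmoid's derivative at zero) and the clamp to [0, 1] are assumptions, not necessarily the variant tested on the Stratix 10; the sketch simply measures the worst-case deviation from the exact function on a grid.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def linear_sigmoid(x, slope=0.25):
    """Piecewise-linear sigmoid: 0.5 + slope * x, clamped to [0, 1]."""
    return np.clip(0.5 + slope * x, 0.0, 1.0)

x = np.linspace(-8.0, 8.0, 1601)
max_abs_error = np.max(np.abs(sigmoid(x) - linear_sigmoid(x)))
print(f"max |sigmoid - linear_sigmoid| on [-8, 8]: {max_abs_error:.3f}")
```

With this slope the largest deviation occurs where the line saturates (x = ±2), at 1 − sigmoid(2) ≈ 0.12; finer piecewise approximations trade more logic for a smaller error.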

The presentation will summarize the latest performance results obtained with the CNN approach and the most recent prototype implementations in FPGA firmware.

Speakers: Anne-Sophie Berthold (Technische Universitaet Dresden (DE)), Nick Fritzsche (Technische Universitaet Dresden (DE))

- 15:22 → 15:28

FastCaloGAN: a tool for fast simulation of the ATLAS calorimeter system with Generative Adversarial Networks 6m

Building on the recent success of deep learning algorithms, Generative Adversarial Networks (GANs) are exploited to model the response of the ATLAS calorimeter to different particle types, simulating calorimeter showers for photons, electrons, and pions over a range of energies (between 256 MeV and 4 TeV) across the full detector η range. The properties of showers in single-particle events and of jets in di-jet events are compared with full detector simulation performed by GEANT4. The good performance of FastCaloGAN demonstrates the potential of GANs to perform fast calorimeter simulation for the ATLAS experiment.

Speaker: Michele Faucci Giannelli (INFN e Universita Roma Tor Vergata (IT))

- 15:30 → 15:36

A OneAPI backend of hls4ml to speed up Neural Network inference on CPUs 6m

A recent effort to explore neural network inference on FPGAs using high-level synthesis (HLS), focusing on low-latency applications in the trigger systems of the LHC, resulted in a framework called hls4ml. Deep learning models converted to HLS with hls4ml can also be executed on CPUs, but with subpar performance. We present an extension of hls4ml that uses the new Intel oneAPI toolkit to convert deep learning models into high-performance Data Parallel C++ optimized for Intel x86 CPUs. We show that inference on Intel CPUs is hundreds of times faster than with the previous HLS-based implementation, and several times faster than with unmodified Keras/TensorFlow.

Speaker: Vladimir Loncar (CERN)

- 15:38 → 15:44

A Quartus backend for hls4ml: deploying low-latency Neural Networks on Intel FPGAs 6m

We describe the new Quartus backend of hls4ml, designed to deploy neural networks on Intel FPGAs. We list the supported network components and layer architectures (dense, binary/ternary, and convolutional neural networks) and evaluate its performance on a benchmark problem previously used to develop the Vivado backend of hls4ml. We also introduce support for recurrent layers and a new asynchronous calling model that increases performance for larger models. Finally, we demonstrate the use of this new model to optimize large sparse networks.

Speaker: Hamza Javed (Pakistan Institute of Engin. and (PK))

- 10:00 → 10:05

- 10:00 → 10:30

- 10:40 → 11:10

Health Sensing, Detection, and Monitoring 30m

Speakers: Nabil Alshurafa (Northwestern University), Sougata Sen (Northwestern University)

- 11:20 → 11:35

Coffee Break 15m

- 11:35 → 12:05

- 12:15 → 13:15

Lunch 1h

- 13:15 → 13:45

Deep Learning Acceleration of Progress in Fusion Energy Research 30m

Accelerated progress in delivering accurate predictions in science and industry has been accomplished by engaging advanced statistical methods featuring artificial intelligence, deep learning, and machine learning (AI/DL/ML). Associated techniques have enabled new avenues of data-driven discovery in key scientific application areas such as the quest to deliver fusion energy, identified by the 2015 CNN televised series "Moonshots for the 21st Century" as one of five prominent grand challenges for the world today. An especially time-urgent and challenging problem facing the development of a fusion energy reactor is the need to reliably predict and avoid large-scale major disruptions in magnetically confined tokamak systems, such as the EUROfusion Joint European Torus (JET) today and the burning-plasma ITER device in the near future. ITER is a ground-breaking $25B international burning plasma experiment with the potential to exceed "breakeven" fusion power by a factor of 10 or more, with "first plasma" targeted for 2026 in France. A key challenge is to deliver significantly improved prediction methods, with better than 95% predictive accuracy, to provide advance warning so that disruption avoidance and mitigation strategies can be applied effectively before critical damage is done to ITER.

This presentation describes advances in the deployment of deep learning recurrent and convolutional neural networks in Princeton's deep learning code, "FRNN", that have enabled the rapid analysis of large, complex datasets on supercomputing systems and accelerated progress in predicting tokamak disruptions with unprecedented accuracy and speed (Ref. Nature, April 26, 2019). This was the first adaptable predictive DL software trained on leadership-class systems to deliver accurate disruption predictions across different tokamak devices (DIII-D in the US and JET in the UK), with the unique capability to carry out efficient "transfer learning": training on a large database from one experiment (DIII-D) and accurately predicting disruption onset on an unseen device (JET). Moreover, the FRNN inference engine has recently been deployed in a real-time plasma control system on the DIII-D tokamak facility in San Diego, CA. This opens up exciting avenues for moving from passive disruption prediction to active real-time control, with subsequent optimization for reactor scenarios.

Speaker: Bill Tang (Princeton University)

- 13:55 → 14:10

Coffee Break 15m

- 14:10 → 14:18

Making ML easier at CERN with Kubeflow 8m

Different groups at CERN have been focusing on changing existing workflows and processes to rely on machine learning, covering trigger farms, fast simulation, anomaly detection, reinforcement learning, etc.

To help end users with these tasks, a service must hide the complexity of the underlying infrastructure, integrate well with existing identity and storage services, and ease tasks such as data preparation, model training, and model serving.

In this talk, we present a new solution available at CERN based on Kubeflow, the ML platform that runs on top of Kubernetes. We describe how the underlying resources (CPUs and GPUs) are offered to end users while hiding the complex details that allow the service to scale horizontally, and how these resources are shared to optimize their usage. We also present how existing on-premise capacity can be extended to external resources (public clouds) transparently to users, for use cases where on-demand usage is cost-effective, such as covering peak periods.

In the second part of the talk, we cover the complete ML lifecycle. Examples include quick code development and iteration using notebooks; submission of analysis pipelines that allow workloads to scale out easily, including direct conversion of a notebook into a pipeline; distributed model training, submitted through either a web interface or an API; hyperparameter tuning with multiple search algorithms; and, finally, model storage and serving.

Speaker: Dejan Golubovic (CERN)

- 14:20 → 14:26

Using an Optical Processing Unit for tracking and calorimetry at the LHC 6m

Experiments at the HL-LHC and beyond will have ever-higher readout rates, so it is essential to explore new hardware paradigms for large-scale computations. We have considered the Optical Processing Unit (OPU) from LightOn (https://lighton.ai), an analog device that multiplies a binary one-megapixel image by a fixed 10^6 × 10^6 random matrix, producing a megapixel image, at a rate of 2 kHz. It could be used for the whole branch of machine learning that relies on random matrices, in particular for dimensionality reduction. In this talk, we explore the potential of the OPU for two typical HEP use cases:

1) "Tracking": high-energy proton collisions at the LHC yield billions of records, each typically containing 100,000 3D points corresponding to the trajectories of 10,000 particles. Using two datasets from previous tracking challenges, we investigate the OPU's potential to solve similar or related problems in high-energy physics, covering dimensionality reduction, data representation, and preliminary results.

2) "Calorimeter event classification": high-energy proton collisions at the Large Hadron Collider have been simulated, each collision being recorded as an image representing the energy flux in the detector. The task is to train a classifier to separate signal from background. The OPU allows fast end-to-end classification without building intermediate objects (such as jets). This technique is presented and compared with more classical particle physics approaches.
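For prototyping at small sizes, the optical operation can be emulated numerically. The sketch assumes the commonly described OPU model, in which a binary input is multiplied by a fixed complex Gaussian random matrix and the camera records the squared modulus of the result. The input size, output size, seeding, and binarization threshold are illustrative assumptions, and this is not LightOn's software API.

```python
import numpy as np

def make_random_features(n_in, n_out, seed=0):
    """Return a function emulating an optical random projection y = |R x|^2."""
    rng = np.random.default_rng(seed)
    # Fixed complex Gaussian matrix, drawn once, playing the role of the optical medium.
    r = (rng.normal(size=(n_out, n_in))
         + 1j * rng.normal(size=(n_out, n_in))) / np.sqrt(2 * n_in)

    def transform(x_binary):
        # x_binary: (n_samples, n_in) array of 0/1 values
        return np.abs(x_binary @ r.T) ** 2

    return transform

# Toy calorimeter "images": 32x32 energy maps, binarized, reduced to 64 features.
rng = np.random.default_rng(1)
images = rng.exponential(scale=1.0, size=(5, 32 * 32))
x_binary = (images > 1.0).astype(np.float64)
opu = make_random_features(n_in=32 * 32, n_out=64)
features = opu(x_binary)
print(features.shape)
```

A simple linear classifier trained on such features is the usual way to exploit random projections; on the real device the projection itself costs no CPU time.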

Speaker: David Rousseau (IJCLab-Orsay)

- 14:28 → 14:34

Level 1 trigger track quality machine learning models on FPGAs for the Phase 2 upgrade of the CMS experiment 6m

In 2026, the LHC will be upgraded to the HL-LHC, which will provide up to 10 times as many proton-proton collisions per bunch crossing. To keep up with the increased data rates, the CMS collaboration is updating its Level-1 Trigger system to run particle selection and reconstruction algorithms on FPGAs in real time with the data collection system. One such algorithm measures the quality of reconstructed tracks to classify them as "real" or "fake". In this work, we develop supervised machine learning algorithms for track quality classification and test these models on simulated FPGAs using the hls4ml and Conifer open-source packages.

Speaker: Claire Savard (University of Colorado Boulder (US))

- 14:36 → 14:42

Adversarial mixture density network for particle reconstruction: a case study in collider simulation 6m

An adversarial mixture density network (AMDN) with Gaussian kernels is used to simulate muon reconstruction in collider detectors. The network is trained on events generated using MadGraph5, Pythia8, and the Delphes3 fast detector simulation configured for the Compact Muon Solenoid (CMS). The network reproduces relevant kinematic distributions with a very good level of agreement, as well as the underlying correlations between reconstructed variables. Without prior collider-specific constraints, the trained network also learns the azimuthal symmetry, a key feature of CMS simulation. While popular generative models, such as generative adversarial networks (GANs), have demonstrated wide success in various research areas, our work shows that an alternative algorithmic approach, more specific to Monte Carlo simulation in collider physics, can be favourable and can help tackle the increasing computing demands of simulation in collider experiments.
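The density part of a mixture density network is compact: for each input, the network outputs mixture weights π_k, means μ_k, and widths σ_k, and training minimizes the negative log-likelihood of the observed values under that Gaussian mixture. The NumPy sketch below shows the loss and the sampling step for a one-dimensional target, with the network outputs supplied directly; the adversarial term that makes the model an AMDN is omitted, and all numbers are illustrative.

```python
import numpy as np

def mixture_nll(y, pi, mu, sigma):
    """Mean negative log-likelihood of y under per-event 1-D Gaussian mixtures.

    y: shape (n,); pi, mu, sigma: shape (n, k) mixture weights, means, widths,
    as a network head would typically output them (pi via softmax, sigma via exp).
    """
    z = (y[:, None] - mu) / sigma
    log_component = -0.5 * z**2 - np.log(sigma) - 0.5 * np.log(2.0 * np.pi)
    a = np.log(pi) + log_component
    a_max = a.max(axis=1, keepdims=True)          # log-sum-exp for stability
    log_likelihood = a_max[:, 0] + np.log(np.exp(a - a_max).sum(axis=1))
    return -log_likelihood.mean()

def sample_mixture(pi, mu, sigma, rng):
    """Draw one value per event: pick a component by weight, then sample it."""
    n, k = pi.shape
    component = np.array([rng.choice(k, p=p) for p in pi])
    rows = np.arange(n)
    return rng.normal(mu[rows, component], sigma[rows, component])

# Toy example: a resolution model with a narrow core and a wide tail.
rng = np.random.default_rng(0)
n = 2000
pi = np.tile([0.8, 0.2], (n, 1))
mu = np.tile([50.0, 48.0], (n, 1))
sigma = np.tile([1.0, 5.0], (n, 1))
reco = sample_mixture(pi, mu, sigma, rng)
print(f"sample mean {reco.mean():.2f}, NLL {mixture_nll(reco, pi, mu, sigma):.3f}")
```

At simulation time only `sample_mixture` is needed, which is what makes this family of models fast compared with full detector simulation.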

Speaker: Kin Ho Lo (University of Florida (US))

- 14:44 → 14:50

Application of a neural network based technique for track identification in Nuclear Track Detectors (NTD) 6m

Nuclear Track Detectors (NTDs) have been in use for decades, mainly as detectors of heavily ionizing particles. Their natural detection thresholds make them an ideal choice in the search for rare, heavily ionizing hypothesized particles (e.g., monopoles and strangelets) against a large low-Z background, both in cosmic rays and at particle accelerators. However, identifying particle tracks in NTDs is a significant challenge: conventional image analysis software falls short, requiring the intervention of human experts. This makes scanning NTDs a painstakingly slow process, prone to human error. In recent years, machine learning techniques have opened up new possibilities in image analysis. In this work, we have taken a technique we previously developed, which combines sequential application of convolution and de-convolution, and further upgraded it with an artificial neural network. This has further reduced the need for manual intervention, produces better results than commercially available software, and promises to dramatically speed up the scanning process, thereby facilitating more widespread adoption of NTDs.

Speaker: Dr Kanik Palodhi (University of Calcutta, Kolkata)

- 14:52 → 15:00

Ultra Low-latency, Low-area Inference Accelerators using Heterogeneous Deep Quantization with QKeras and hls4ml 8m

While the quest for more accurate solutions is pushing deep learning research towards larger and more complex algorithms, edge devices demand efficient inference, i.e., reductions in model size, latency, and energy consumption. One technique to limit model size is quantization, i.e., using fewer bits to represent weights and biases; such an approach usually results in a decline in performance. In this CERN-Google collaboration, we introduce a novel method for designing optimally heterogeneously quantized versions of deep neural network models for minimum-energy, high-accuracy, nanosecond inference and fully automated on-chip deployment. With a per-layer, per-parameter-type automatic quantization procedure that samples from a large set of quantizers, model energy consumption and size are minimized while high accuracy is maintained. This is crucial for the event selection procedure in proton-proton collisions at the CERN Large Hadron Collider, where resources are limited and a latency of O(1) μs is required. When implemented on FPGA hardware, the resulting models achieve nanosecond inference with resource consumption reduced by a factor of 50.
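The payoff of heterogeneous quantization is easiest to see in the weight-memory budget: each layer's storage is its parameter count times its chosen bit width. The layer sizes and bit widths below are hypothetical, chosen only to show the bookkeeping; the reported factor of 50 refers to FPGA resources in the authors' study, not to this toy.

```python
# Hypothetical per-layer parameter counts and bit widths for a small dense network.
layers = [
    # (name, number of weights + biases, bits chosen by the per-layer search)
    ("dense_1", 16 * 64 + 64, 6),
    ("dense_2", 64 * 32 + 32, 4),
    ("dense_3", 32 * 32 + 32, 4),
    ("output", 32 * 5 + 5, 8),
]

def weight_memory_bits(layers, bits_override=None):
    """Total weight storage in bits; bits_override forces one uniform bit width."""
    return sum(n * (bits_override or bits) for _, n, bits in layers)

baseline = weight_memory_bits(layers, bits_override=16)
heterogeneous = weight_memory_bits(layers)
print(f"16-bit baseline: {baseline} bits, heterogeneous: {heterogeneous} bits, "
      f"ratio {baseline / heterogeneous:.1f}x")
```

A per-layer search explores exactly this trade-off, keeping more bits where accuracy is sensitive (here, hypothetically, the first and last layers) and fewer elsewhere.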

Speaker: Thea Aarrestad (CERN)

- 15:02 → 15:08

Matrix Element Regression with Deep Neural Networks -- breaking the CPU barrier 6m

The Matrix Element Method (MEM) is a powerful method for extracting information from measured events at collider experiments. Compared to multivariate techniques built on large sets of experimental data, the MEM does not rely on an example-based learning phase but directly exploits our knowledge of the physics processes. This comes at a price, both in terms of complexity and computing time, since the required multi-dimensional integral of a rapidly varying function must be evaluated for every event and every physics process considered. This can be mitigated by optimizing the integration, as is done in the MoMEMta package, but the computing time remains a concern and often makes the use of the MEM in a full-scale analysis impractical or impossible. We investigate the use of a deep neural network (DNN) trained by regression of the MEM integral as an ansatz for analysis, especially in searches for new physics.

Speaker: Florian Bury (UCLouvain - CP3)

- 15:10 → 15:18

An Early Exploration into the Interplay between Quantization and Pruning of Neural Networks 8m

Machine learning (ML) is already a powerful tool in high energy physics, but the typically high computational cost of running ML workloads is often a bottleneck in data processing pipelines. Even on high-performance hardware such as field-programmable gate arrays (FPGAs) and application-specific integrated circuits (ASICs), the speed and size of these models are often heavily constrained by the available hardware resources. Model optimization techniques such as pruning and quantization have been used to reduce these costs, but often not together, or without a full understanding of how the two techniques interact. We explore this interplay between quantization and pruning, targeting FPGAs and ASICs, to determine how to obtain the best performance using both. In this presentation, we apply these techniques to the hls4ml three-hidden-layer jet substructure tagging model and find that we can optimize the model down to 3-5% of its original size while retaining performance comparable to the original network. Finally, we discuss next steps toward understanding how the different optimization techniques affect the model internally, beyond standard performance metrics.
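A minimal way to combine the two techniques is to prune first (zeroing the smallest-magnitude weights) and then quantize the survivors, counting storage as non-zero weights times bit width. This NumPy sketch assumes unstructured magnitude pruning and uniform symmetric quantization on a single random weight matrix; the authors' exact procedure (for example, iterative pruning with retraining, or quantization-aware training) is not spelled out in the abstract, and the index overhead of sparse storage is ignored.

```python
import numpy as np

def magnitude_prune(w, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest |w|."""
    k = int(round(sparsity * w.size))
    if k == 0:
        return w.copy()
    threshold = np.sort(np.abs(w), axis=None)[k - 1]
    return np.where(np.abs(w) > threshold, w, 0.0)

def quantize_symmetric(w, bits):
    """Uniform symmetric quantization: signed integers times a common scale."""
    max_abs = np.max(np.abs(w))
    if max_abs == 0:
        return w.copy()
    scale = max_abs / (2 ** (bits - 1) - 1)
    return np.round(w / scale) * scale

rng = np.random.default_rng(0)
w = rng.normal(size=(64, 32))

w_opt = quantize_symmetric(magnitude_prune(w, sparsity=0.8), bits=6)
size_fraction = (np.count_nonzero(w_opt) * 6) / (w.size * 32)
print(f"storage relative to dense float32: {size_fraction:.1%}")
```

The ordering matters: pruning first means the quantization scale is set by the surviving large weights, whereas quantizing first can round small weights to zero and act as an implicit pruning step.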

Speaker: Mr Benjamin Hawks (Fermi National Accelerator Laboratory)

- 15:20 → 15:26

Real-time Artificial Intelligence for Accelerator Control: A Study at the Fermilab Booster 6m

We describe a method for precisely regulating the gradient magnet power supply (GMPS) at the Fermilab Booster accelerator complex using a neural network (NN). We demonstrate preliminary results by training a surrogate machine-learning model on real accelerator data, and using the surrogate model in turn to train the NN for its regulation task. We additionally show how the neural networks that will be deployed for control purposes may be compiled to execute on field-programmable gate arrays (FPGAs). This capability is important for operational stability in complicated environments such as an accelerator facility.

Speaker: Christian Herwig (Fermi National Accelerator Lab. (US))

- 15:28 → 15:36

Building the tools to run large scale machine learning with FPGAs with two new approaches: AIGEAN and FAAST 8m

FPGA programming is becoming easier as vendors begin to provide environments, such as for machine learning (ML), that enable programming at higher levels of abstraction. The vendor platforms target FPGAs in a single host server; scaling to larger systems of FPGAs requires communication through the hosts, which has a significant impact on performance. We demonstrate the deployment of ML algorithms on single FPGAs through FAAST, a newly developed FPGA-based infrastructure framework. We also present a new framework, AIgean, for heterogeneous systems of multiple FPGAs and CPUs that can leverage direct FPGA-to-FPGA communication links. AIgean and FAAST take as input an ML algorithm created with a standard ML framework and a specification of the available FPGA and CPU resources. The outputs are software and hardware cores that can compute one or more ML layers, and these layers can be distributed across a heterogeneous cluster of CPUs and FPGAs for execution. As part of this work, we present an optimized FPGA implementation of CNNs. We show that in some cases FPGAs can exceed the performance of other accelerators, including GPUs.
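Distributing a network over several FPGAs ultimately requires deciding which consecutive layers go on which device. The greedy sketch below packs layers in network order onto devices until a per-device resource budget (a single abstract number, such as a DSP count) would be exceeded. The cost model, the budget, and the function name `partition_layers` are hypothetical, and AIgean's actual partitioning strategy may differ.

```python
def partition_layers(layer_costs, device_budget):
    """Greedily assign consecutive layers to devices without exceeding a budget.

    layer_costs: list of (layer_name, cost) in network order.
    Returns a list of lists of layer names, one list per device.
    """
    devices, current, used = [], [], 0
    for name, cost in layer_costs:
        if cost > device_budget:
            raise ValueError(f"layer {name} does not fit on a single device")
        if used + cost > device_budget:
            devices.append(current)           # close the current device
            current, used = [], 0
        current.append(name)
        used += cost
    if current:
        devices.append(current)
    return devices

# Hypothetical per-layer DSP estimates for a small CNN and a 2,000-DSP budget per FPGA.
layers = [("conv1", 900), ("conv2", 1200), ("conv3", 700), ("dense1", 600), ("dense2", 100)]
print(partition_layers(layers, device_budget=2000))
```

Keeping layers contiguous means each device boundary corresponds to one activation stream, which is what direct FPGA-to-FPGA links would carry; a real partitioner would also weigh link bandwidth and pipeline balance.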

Speaker: Naif Tarafdar (University of Toronto)

- 15:38 → 15:44

Muon detection using deep learning, applied to CONNIE events 6m

CONNIE (Coherent Neutrino-Nucleus Interaction Experiment) is a collaboration of groups from several countries in South America, the United States, and Switzerland. The data collected by CONNIE can be used to search for periodic and stochastic time variations in the particles arriving at the detectors. The experiment uses 12 high-resistivity CCDs (charge-coupled devices) placed near the Angra dos Reis nuclear reactor (Planta Almirante Alvaro Alberto, Rio de Janeiro, Brazil) to detect the antineutrinos generated in the reactor by measuring low-energy recoils from coherent elastic neutrino-nucleus scattering (CEνNS). The sensors have recorded images of particles over the last 2 years in 3-hour exposures; most particles in these images are muons and beta particles, which are considered background. This work uses a deep learning algorithm to classify and detect muons in the images, in order to remove them for neutrino studies and to build a time series that can serve as a stability monitor of the detection system.

Speaker: Mr Javier Bernal (Facultad De Ingenieria UNA)

- 15:46 → 15:52

GPU-accelerated machine learning inference as a service for computing in neutrino experiments 6m

Machine learning algorithms are becoming increasingly prevalent and performant in the reconstruction of events in accelerator-based neutrino experiments. These sophisticated algorithms can be computationally expensive. At the same time, the data volumes of such experiments are rapidly increasing. The demand to process billions of neutrino events with many machine learning algorithm inferences creates a computing challenge. We explore a computing model in which heterogeneous computing with GPU coprocessors is made available as a web service. The coprocessors can be efficiently and elastically deployed to provide the right amount of computing for a given processing task. With our approach, Services for Optimized Network Inference on Coprocessors (SONIC), we integrate GPU acceleration specifically for the ProtoDUNE-SP reconstruction chain without disrupting the native computing workflow. With our integrated framework, we accelerate the most time-consuming task, track and particle shower hit identification, by a factor of 17. This results in a factor of 2.7 reduction in the total processing time when compared with CPU-only production. For this particular task, only 1 GPU is required for every 68 CPU threads, providing a cost-effective solution.
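The two speedup figures are related by Amdahl's law. Assuming, for illustration, that the only change is the 17× acceleration of the hit-identification step and that no other overhead is added, the overall factor of 2.7 implies that this step accounted for roughly two thirds of the CPU-only processing time. The calculation below inverts Amdahl's law to recover that fraction; any added overhead (data transfer, network latency) would imply a somewhat larger fraction.

```python
def overall_speedup(fraction, local_speedup):
    """Amdahl's law: total speedup when `fraction` of the runtime is sped up."""
    return 1.0 / ((1.0 - fraction) + fraction / local_speedup)

def accelerated_fraction(total_speedup, local_speedup):
    """Invert Amdahl's law for the fraction of runtime that was accelerated."""
    return (1.0 - 1.0 / total_speedup) / (1.0 - 1.0 / local_speedup)

f = accelerated_fraction(total_speedup=2.7, local_speedup=17.0)
print(f"implied fraction of CPU time in hit identification: {f:.2f}")
```

The same formula shows the ceiling: even with infinitely fast inference, a step taking about 67% of the runtime can yield at most about a 3× overall gain.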

Speaker: Mike Wang

- 10:00 → 10:30

Accelerator-based Neutrinos 30m

Speaker: Jeremy Edmund Hewes (University of Cincinnati (US))

- 10:40 → 11:10

Neutrino Astrophysics 30m

Speaker: Kate Scholberg (Duke University)

- 11:18 → 11:20

Acknowledgments 2m

Speaker: Philip Coleman Harris (Massachusetts Inst. of Technology (US))

- 11:20 → 11:35

Coffee Break 15m

- 11:35 → 12:05

- 12:15 → 13:15

Lunch 1h

- 13:15 → 13:45

ASICs and Circuits 30m

Speaker: Tobi Delbruck (ETH Zurich)

- 13:55 → 14:25

-

14:35

→

14:50

Coffee Break 15m

-

14:50

→

14:58

Development of ML FPGA filter for particle identification with transition radiation detector 8m

Transition Radiation Detectors (TRDs) can separate particles by their Lorentz factor (γ). A new TRD based on GEM technology is being developed as an R&D project for the future Electron Ion Collider (EIC) and for an upgrade of the GlueX experiment. This detector combines a high-precision GEM tracker with TRD functionality and is optimized for electron identification.
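The reason a TRD separates electrons from hadrons is the large difference in Lorentz factor at equal momentum. The sketch below computes γ = E/(mc²) for an electron and a charged pion at an assumed momentum of 5 GeV/c, which is an illustrative value rather than one taken from the talk. Transition radiation becomes significant only at γ of roughly order 10³ and above, so at this momentum the electron (γ of about 9800) radiates while the pion (γ of about 36) essentially does not.

```python
import math

ELECTRON_MASS_GEV = 0.000511  # electron rest mass, GeV/c^2
PION_MASS_GEV = 0.13957       # charged-pion rest mass, GeV/c^2

def lorentz_gamma(momentum_gev, mass_gev):
    """Lorentz factor gamma = E / (m c^2), with E = sqrt(p^2 + m^2) in natural units."""
    energy_gev = math.sqrt(momentum_gev ** 2 + mass_gev ** 2)
    return energy_gev / mass_gev

p = 5.0  # hypothetical track momentum, GeV/c
for name, mass in (("electron", ELECTRON_MASS_GEV), ("pion", PION_MASS_GEV)):
    print(f"{name}: gamma = {lorentz_gamma(p, mass):.0f}")
```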

Modern trigger-less readout and data-streaming concepts will produce very large data volumes from the detectors. From a resource standpoint, it is strongly advantageous to perform both data pre-processing and data reduction at early stages of data acquisition. Following this trend, we began developing an FPGA-based machine learning algorithm for real-time particle identification with the GEMTRD. This research is important for the streaming readout systems now being developed at JLab for the EIC. The report will describe the first steps in the development of an ML-FPGA filter for the GEMTRD.

Speaker: Sergey Furletov (Jefferson Lab)
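FPGA implementations of neural networks typically replace floating-point arithmetic with fixed-point integers (for example, Xilinx `ap_fixed` types), which map efficiently onto on-chip multipliers. The sketch below emulates that idea in plain Python for a tiny multilayer perceptron and compares it with a floating-point reference. The network size, the weights, the 10 fractional bits, and the input features are all hypothetical; this is not the authors' GEMTRD network or firmware.

```python
FRAC_BITS = 10                 # hypothetical: 10 fractional bits
SCALE = 1 << FRAC_BITS

def to_fixed(x):
    """Quantize a real number to an integer with FRAC_BITS fractional bits."""
    return int(round(x * SCALE))

def dense_fixed(x_q, w_q, b_q, relu):
    """Integer-only dense layer, emulating fixed-point multiply-accumulate."""
    out = []
    for row, b in zip(w_q, b_q):
        acc = sum(xi * wi for xi, wi in zip(x_q, row))  # product has 2*FRAC_BITS fractional bits
        acc = (acc >> FRAC_BITS) + b                     # shift back to FRAC_BITS
        out.append(max(acc, 0) if relu else acc)
    return out

def dense_float(x, w, b, relu):
    """Floating-point reference for the same layer."""
    out = []
    for row, bias in zip(w, b):
        acc = sum(xi * wi for xi, wi in zip(x, row)) + bias
        out.append(max(acc, 0.0) if relu else acc)
    return out

# Hypothetical weights for a 3-input, 2-hidden, 1-output network
W1 = [[0.5, -0.25, 0.75], [-0.5, 1.0, 0.25]]
B1 = [0.1, -0.2]
W2 = [[1.5, -1.0]]
B2 = [0.05]

def quantize(matrix):
    return [[to_fixed(v) for v in row] for row in matrix]

def mlp_fixed(x):
    h = dense_fixed([to_fixed(v) for v in x], quantize(W1), [to_fixed(b) for b in B1], relu=True)
    out = dense_fixed(h, quantize(W2), [to_fixed(b) for b in B2], relu=False)
    return out[0] / SCALE  # back to a real number for comparison

def mlp_float(x):
    return dense_float(dense_float(x, W1, B1, relu=True), W2, B2, relu=False)[0]

x = [0.8, 0.3, 0.6]  # hypothetical per-track TRD features
print(mlp_float(x), mlp_fixed(x))
```

The small difference between the two outputs is the quantization error; choosing the number of bits is a trade-off between this error and FPGA resource usage.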

15:00

→

15:08

AI-assisted Tracking Algorithm 8m

In this work we describe the development of machine learning models to assist the CLAS12 detector tracking algorithm. Several networks were implemented to help the tracking algorithm overcome drift-chamber inefficiencies, using auto-encoders to de-noise wire-chamber signals and to detect corrupted data. A classifier network was used to identify track candidates among numerous combinatorial segments, using several types of models, including Convolutional Neural Networks (CNN), Multi-Layer Perceptrons (MLP), and Extremely Randomized Trees (ERT). The final implementation achieved an accuracy above 99%. Integrating AI-assisted tracking into the CLAS12 reconstruction workflow provided a code speedup of up to a factor of 4.
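The speedup comes from avoiding full track fits on every combination of segments. The sketch below shows the combinatorial structure: one segment is chosen per superlayer, every combination becomes a candidate, and a classifier keeps only the plausible ones. The six-superlayer layout mirrors a CLAS12 drift-chamber sector, but the segment positions, the threshold, and especially `toy_score` (a smoothness heuristic standing in for the trained CNN, MLP, or ERT) are hypothetical.

```python
from itertools import product

def build_candidates(segments_per_superlayer):
    """Enumerate every combination of one segment per superlayer."""
    return list(product(*segments_per_superlayer))

def toy_score(candidate):
    """Hypothetical stand-in for a trained classifier (CNN, MLP, or ERT).

    Scores a candidate higher when its segment positions change smoothly
    from one superlayer to the next.
    """
    jumps = [abs(b - a) for a, b in zip(candidate, candidate[1:])]
    return 1.0 / (1.0 + sum(jumps))

def select_tracks(segments_per_superlayer, threshold=0.1):
    """Keep only candidates the classifier accepts."""
    return [c for c in build_candidates(segments_per_superlayer)
            if toy_score(c) > threshold]

# Hypothetical segments: one average wire position per segment, six superlayers
segments = [
    [10.0, 40.0],
    [11.0, 55.0],
    [12.0, 30.0],
    [13.0],
    [14.0, 70.0],
    [15.0, 22.0],
]
print(len(build_candidates(segments)))  # → 32
print(select_tracks(segments))          # → [(10.0, 11.0, 12.0, 13.0, 14.0, 15.0)]
```

The number of candidates is the product of the segment counts per superlayer, so it grows quickly with detector occupancy; a fast classifier lets the expensive fit run only on the few candidates that survive.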

Speaker: Gagik Gavalian (Jefferson Lab)

15:10

→

15:18

Anomaly Detection with Spiking Neural Networks on Neuromorphic Chips 8m

We describe anomaly detection applications on neuromorphic chips, exploiting Spiking Neural Networks on the Intel Loihi chip. We describe different workflows to train models directly on Loihi or to convert conventional Neural Networks into Spiking Neural Networks. As a benchmark, we consider the problem of Gravitational Wave detection without a priori assumptions about the wave profile. We discuss baseline models and compare their reach to that of Spiking Neural Networks.
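The basic unit of a Spiking Neural Network is a spiking neuron. The sketch below simulates a textbook discrete-time leaky integrate-and-fire (LIF) neuron and shows rate coding: a stronger input produces more spikes, which is the property that conversion from a conventional network relies on, since firing rates stand in for activations. Loihi implements its own current-based LIF variant with integer state; this floating-point model, its parameters, and the function name are illustrative assumptions, not Loihi's model or SDK.

```python
import math

def lif_spike_count(input_current, tau=20.0, v_threshold=1.0, v_reset=0.0, dt=1.0):
    """Simulate a discrete-time leaky integrate-and-fire (LIF) neuron.

    The membrane potential decays by exp(-dt/tau) each step, integrates the
    input, and emits a spike (then resets) when it crosses v_threshold.
    Returns the number of spikes.
    """
    decay = math.exp(-dt / tau)
    v = v_reset
    spikes = 0
    for i_t in input_current:
        v = decay * v + i_t
        if v >= v_threshold:
            spikes += 1
            v = v_reset
    return spikes

# Rate coding: a stronger constant input drives a higher firing rate.
steps = 200
for level in (0.02, 0.1, 0.3):
    print(level, lif_spike_count([level] * steps))
```

The weakest input never reaches threshold because the leak balances it below 1.0, so, like a ReLU with a bias, the neuron stays silent for small inputs.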

Speaker: Bartlomiej Pawel Borzyszkowski

15:20

→

15:28

Deep Learning based acceleration of Gravitational Waves 8m

In gravitational-wave detectors, regression techniques are applied to remove noise artifacts in order to improve the ability to observe and extract information from astrophysical signals. We present a deep-learning-based noise regression method called DeepClean that can subtract both linear and non-linear noise from gravitational-wave data recorded by the Advanced LIGO detectors. We also discuss our work toward a new computing model for gravitational-wave data analysis in which GPU and FPGA acceleration of machine learning inference is deployed on an as-a-service basis. We use DeepClean as a use case for exploring such computing models, in order to achieve real-time capability and the overall flexibility that these models provide.
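To make "noise regression" concrete, the sketch below shows the classic linear version: fit coefficients that predict the noise in a target channel from auxiliary (witness) channels by least squares, then subtract the prediction. DeepClean replaces this linear map with a neural network so it can also remove non-linear couplings; the code is only the linear baseline, and all data, channel names, and coefficients are synthetic assumptions.

```python
import math

def fit_linear_coupling(witnesses, target):
    """Least-squares c minimizing sum_t (target[t] - sum_k c_k * w_k[t])^2."""
    k = len(witnesses)
    # Normal equations A c = b, with A_ij = <w_i, w_j> and b_i = <w_i, target>
    A = [[sum(a * b for a, b in zip(witnesses[i], witnesses[j])) for j in range(k)]
         for i in range(k)]
    b = [sum(a * y for a, y in zip(witnesses[i], target)) for i in range(k)]
    # Gaussian elimination with partial pivoting
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, k):
            factor = A[r][col] / A[col][col]
            for c in range(col, k):
                A[r][c] -= factor * A[col][c]
            b[r] -= factor * b[col]
    # Back substitution
    coeffs = [0.0] * k
    for i in reversed(range(k)):
        coeffs[i] = (b[i] - sum(A[i][j] * coeffs[j] for j in range(i + 1, k))) / A[i][i]
    return coeffs

def subtract_noise(witnesses, target):
    """Remove the linearly predicted noise from the target channel."""
    coeffs = fit_linear_coupling(witnesses, target)
    return [y - sum(c * w[t] for c, w in zip(coeffs, witnesses))
            for t, y in enumerate(target)]

# Synthetic example: a weak "signal" plus noise coupling in from two witnesses
n = 1000
signal = [0.1 * math.sin(0.05 * t) for t in range(n)]
w1 = [math.sin(0.3 * t) for t in range(n)]
w2 = [math.cos(0.7 * t) for t in range(n)]
strain = [s + 2.0 * a - 0.5 * b for s, a, b in zip(signal, w1, w2)]

coeffs = fit_linear_coupling([w1, w2], strain)
cleaned = subtract_noise([w1, w2], strain)
print([round(c, 2) for c in coeffs])  # close to [2.0, -0.5]
```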

Speaker: Alec Gunny

15:30

→

15:38

Accelerating Graph Neural Networks on FPGAs for Particle Track Reconstruction using OpenCL and hls4ml 8m

Current charged-particle tracking algorithms at the CERN Large Hadron Collider (LHC) scale quadratically or worse with the number of overlapping proton-proton collisions in an event (pileup). As the LHC moves into its high-luminosity phase, pileup is expected to increase to an average of 200 overlapping collisions, highlighting the need for new algorithmic strategies. Recent work has shown that graph neural networks (GNNs) are well suited to classifying track segments. The real-time data filter at the LHC (the Level-1, or L1, trigger) requires sub-microsecond latencies that can only be met by devices such as field-programmable gate arrays (FPGAs). Accelerating neural networks on FPGAs enables energy-efficient processing of large datasets with execution times that meet the L1 trigger latency requirements.

In this talk, we present two complementary FPGA implementations of an interaction network, a type of GNN: one using OpenCL, an open standard for writing programs that execute across heterogeneous acceleration platforms, and one using hls4ml, an open-source compiler of machine learning models into firmware. The OpenCL implementation adopts a CPU-plus-FPGA coprocessing approach in which the CPU host program manages the application, while all computational operations are accelerated by dedicated kernels deployed to the FPGA that exploit its hardware architecture to parallelize operations. The hls4ml implementation uses Xilinx high-level synthesis tools to convert the GNN model into FPGA firmware, making it suitable for both FPGA-only and coprocessing applications. We will compare the two implementations in terms of resource usage, latency, and tracking performance on the publicly available TrackML benchmark dataset.

Speaker: Aneesh Heintz (Cornell University (US))
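For readers unfamiliar with interaction networks, the sketch below shows the structure of one forward pass for edge classification in plain Python: a relational model computes an "effect" for each edge from its two endpoint hits, effects are summed at each receiving node, an object model updates the node features, and an edge classifier outputs the probability that each edge is a true track segment. All weights, the two-feature hit representation, and the function names are hypothetical; this is neither the authors' OpenCL kernels nor their hls4ml model, although FPGA implementations would map operations of this kind onto hardware.

```python
import math

def relu(v):
    return [max(0.0, x) for x in v]

def linear(weights, bias, x):
    """Dense layer: weights is a list of rows, one row per output."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def interaction_network(nodes, edges, params):
    """One interaction-network step followed by edge classification."""
    if not edges:
        return []
    # 1) Relational model: an effect vector per edge from its endpoint features
    effects = [relu(linear(*params["relational"], nodes[s] + nodes[r])) for s, r in edges]
    # 2) Sum the effects arriving at each receiving node
    dim = len(effects[0])
    aggregated = [[0.0] * dim for _ in nodes]
    for (_, r), e in zip(edges, effects):
        aggregated[r] = [a + b for a, b in zip(aggregated[r], e)]
    # 3) Object model: update each node from its features and aggregated effects
    updated = [relu(linear(*params["object"], x + agg)) for x, agg in zip(nodes, aggregated)]
    # 4) Edge classifier: sigmoid score for "this edge is a true track segment"
    logits = [linear(*params["classifier"], updated[s] + updated[r])[0] for s, r in edges]
    return [1.0 / (1.0 + math.exp(-z)) for z in logits]

# Hypothetical weights: 2 features per hit, 2-dimensional effects
params = {
    "relational": ([[0.5, -0.2, 0.1, 0.3], [-0.4, 0.6, 0.2, -0.1]], [0.0, 0.1]),
    "object": ([[1.0, 0.0, 0.5, -0.5], [0.0, 1.0, -0.3, 0.4]], [0.0, 0.0]),
    "classifier": ([[0.7, -0.3, 0.2, 0.9]], [-0.5]),
}
nodes = [[0.1, 0.2], [0.3, 0.25], [0.5, 0.3], [0.45, 0.9]]  # hypothetical hit features
edges = [(0, 1), (1, 2), (1, 3)]                               # (sender, receiver) pairs
print(interaction_network(nodes, edges, params))
```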

15:40

→

15:46

SONIC: Coprocessors as a service for deep learning inference in high energy physics 6m

In the next decade, the computing demands of large scientific experiments are expected to grow tremendously. Over the same period, increases in CPU performance will be limited. At the CERN Large Hadron Collider (LHC), these two trends will collide as the collider is upgraded for high-luminosity running. Alternative processors such as graphics processing units (GPUs) can resolve this tension, provided that algorithms can be sufficiently accelerated. In many cases, the largest algorithmic speedups come from adopting deep learning algorithms. We present a comprehensive exploration of GPU-based hardware acceleration for deep learning inference within the data reconstruction workflow of high energy physics. We present several realistic examples and discuss a strategy for seamlessly integrating coprocessors so that the LHC can maintain, if not exceed, its current performance throughout its running.
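One reason "coprocessors as a service" can be efficient is that a shared server can group many small requests from independent CPU clients into larger batches, which GPUs process much more efficiently. The in-process sketch below illustrates that batching pattern with the standard library only. The class names, the batch size, the wait time, and the doubling "model" are hypothetical, and the sketch does not model network transport or any particular inference server's API.

```python
import queue
import threading

class PendingResult:
    """Handle a client waits on until the server fills in its result."""
    def __init__(self):
        self._done = threading.Event()
        self._value = None

    def set(self, value):
        self._value = value
        self._done.set()

    def result(self, timeout=None):
        if not self._done.wait(timeout):
            raise TimeoutError("inference request timed out")
        return self._value

class BatchingInferenceServer:
    """Toy server: collects single requests into batches, one model call per batch."""
    def __init__(self, model, max_batch_size=8, wait_s=0.01):
        self.model = model
        self.max_batch_size = max_batch_size
        self.wait_s = wait_s
        self.batch_sizes = []  # how many requests each model call served
        self._requests = queue.Queue()
        threading.Thread(target=self._serve, daemon=True).start()

    def submit(self, x):
        pending = PendingResult()
        self._requests.put((x, pending))
        return pending

    def _serve(self):
        while True:
            batch = [self._requests.get()]  # block until at least one request
            while len(batch) < self.max_batch_size:
                try:
                    batch.append(self._requests.get(timeout=self.wait_s))
                except queue.Empty:
                    break  # no more requests arrived in time; run what we have
            outputs = self.model([x for x, _ in batch])
            self.batch_sizes.append(len(batch))
            for (_, pending), y in zip(batch, outputs):
                pending.set(y)

server = BatchingInferenceServer(model=lambda xs: [2 * x for x in xs])
handles = [server.submit(i) for i in range(20)]
results = [h.result(timeout=5) for h in handles]
print(results[:5], server.batch_sizes)
```

The `wait_s` parameter trades latency for throughput: waiting longer fills larger batches but delays each individual request.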

Speaker: Dylan Sheldon Rankin (Massachusetts Inst. of Technology (US))

-

10:00

→

10:30

-

-

09:00

→

12:00

Tutorial Session

-

09:00

hls4ml Tutorial 3h

Speaker: Sioni Paris Summers (CERN)

-

12:00

→

13:00

Lunch 1h

-

13:00

→

16:00

Tutorial Session

-

13:00

hls4ml Tutorial 3h

Speaker: Sioni Paris Summers (CERN)

-

09:00

→

12:00