20th Real Time Conference

Europe/Rome

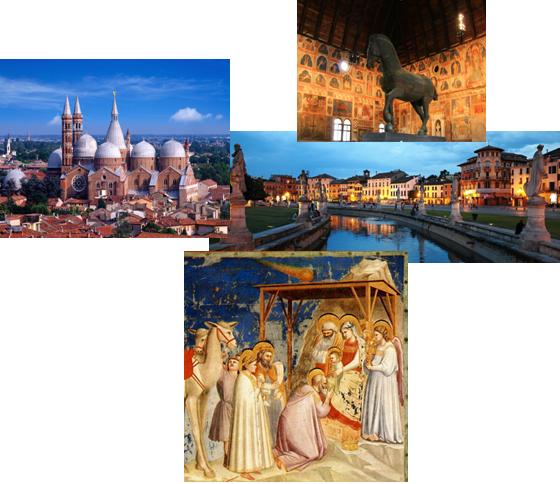

Padova, Italy

<a href="https://goo.gl/maps/vWFxL">Centro Congressi A. Luciani

Via Forcellini, 170/A

Padova

ITALY</a>

Adriano Luchetta

(Consorzio RFX),

Dora Merelli

(IEEE),

Gianluca Moro,

Martin Grossmann,

Martin Lothar Purschke

(Brookhaven National Laboratory (US)),

Patrick Le Dû,

Rejean Fontaine

(Université de Sherbrooke),

Sascha Schmeling

(CERN),

Stefan Ritt

(Paul Scherrer Institute),

Zhen-An Liu

(IHEP,Chinese Academy of Sciences (CN))

Description

Welcome to Padova!

We invite you to the Centro Congressi “A. Luciani” in Padova for the 2016 Real Time Conference (RT2016). It will take place from Monday 6 through Friday 10 June 2016, with optional pre-conference tutorials on Sunday 5 June.

Like the previous editions, RT2016 will be a multidisciplinary conference devoted to the latest developments in real-time techniques in the fields of plasma and nuclear fusion, particle physics, nuclear physics and astrophysics, space science, accelerators, medical physics, nuclear power instrumentation and other radiation instrumentation.

Important Dates:

- June 5, 2016 - Short Courses

- June 6-10, 2016 - Conference

Surveys

Bus Transportation to/from Conference Venue

-

-

Short Course - Real-time data visualization and control using modern Web technologies

Caffe Pedrocchi, Padova

-

1

Real-time data visualization and control using modern Web technologies 1

Caffe Pedrocchi, Via VIII Febbraio, 15, 35122 Padova (PD), Italy

Speaker: Stefan Ritt (Paul Scherrer Institute)

11:00

Coffee Break

Caffe Pedrocchi, Padova

-

2

Real-time data visualization and control using modern Web technologies 2

Caffe Pedrocchi, Padova

-

1

-

09:35

Registration

Caffe Pedrocchi, Padova

-

Break: Lunch

-

Short Course - Real-time data acquisition and processing applications using NIRIO FPGAs-based technology and NVIDIA GPUs

Caffe Pedrocchi, Padova, Italy

-

3

Real-time data acquisition and processing applications using NIRIO FPGAs-based technology and NVIDIA GPUs 1

Caffe Pedrocchi, Via VIII Febbraio, 15, 35122 Padova (PD), Italy

Speaker: Mariano Ruiz (Technical University of Madrid)

15:30

Coffee Break

Caffe Pedrocchi, Padova, Italy

-

4

Real-time data acquisition and processing applications using NIRIO FPGAs-based technology and NVIDIA GPUs 2

Caffe Pedrocchi, Padova, Italy

-

3

-

-

-

Bus Transfer to Conference Venue

-

Registration

Centro Congressi, Padova

-

Opening Session 1

Centro Congressi, Padova

Conveners: Adriano Francesco Luchetta (Consorzio RFX), Martin Lothar Purschke (Brookhaven National Laboratory (US))

5

Welcome words and conference information

Speakers: Adriano Francesco Luchetta (Consorzio RFX), Martin Lothar Purschke (Brookhaven National Laboratory (US)), Rejean Fontaine (Université de Sherbrooke), Dr Sascha Schmeling (CERN)

-

6

ITER DIAGNOSTICS DEVELOPMENT

ITER is the largest and most technically advanced magnetic fusion device to date and is under construction in France. It is also a nuclear installation. As a result, monitoring and controlling this device using diagnostics is crucial for successful operation. Design, construction and planning for operation of these diagnostics are now well underway, with some buildings complete and several more under construction. A sufficient diagnostic set is needed to cover reliable routine operation, advanced operation and physics exploitation. This involves many boundary penetrations and, in general, interfaces with many of the ITER major components. These diagnostics also have to be fully operational in many diverse scenarios, with managed redundancy as needed in critical areas. Demonstration of the success of ITER will come through the diagnostics. To facilitate this, a set of 50 diagnostics will be deployed, each one with its own set of specific requirements. These diagnostics are divided into categories including magnetics, neutrons, bolometers, optical, microwave and operational systems. The latter include pressure gauges, infrared systems and a range of observation systems for tritium and dust. Incorporation of all these systems provides a very large matrix of interfaces across virtually the whole of the device, from inside to outside. These interfaces also include the control system. Managing these interfaces is a complex task. It is further complicated by the fact that many teams (more than 60) are working on the systems, and these are stationed around the world in the partner and supplier laboratories as well as at the construction site. From the control and operations perspective, the systems will need to be tightly managed to ensure that the whole system is built up in a coherent way. This will ensure that all the hard and soft interfaces are integrated.

The systems also have to cope with neutrons, activation, maintenance and ultra-high vacuum. Together, these constraints make for a complex design path, with components, and hence the diagnostics, being designed to be secure, cost-effective and reliable. This talk will focus on the approaches and the challenges of implementing a full suite of diagnostics on ITER.

Speaker: Dr Michael Walsh (ITER)

-

7

Real Time Control Of Suspended Test Masses In Advanced Virgo Laser Interferometer

The Virgo seismic isolation system is composed of 10 complex mechanical structures named “Superattenuators”, or simply “Suspensions”, that isolate the optical elements of the Virgo interferometer from seismic noise at frequencies above a few Hz. Each structure can be described by a model with 80 vibrational modes and is controlled by 24 coil-magnet pair actuators. The suspension status is observed using 20 local sensors plus 3 global sensors that are available when the Virgo interferometer is locked, that is, when all optical lengths are controlled.

From the very beginning we have made extensive use of digital control techniques implemented on custom Digital Signal Processor boards and software tools designed and developed within our group. With Advanced Virgo we are now at the third generation of Suspension Control Systems, and our data conditioning, conversion and processing boards are developed in accordance with a custom variation of MicroTCA.4.

Results of our design and development efforts will be presented, focusing mainly on real-time issues and the key performance figures of the overall system.

Speaker: Dr Alberto Gennai (INFN)

-

5

-

Break: Coffee

-

Opening Session 2

Centro Congressi, Padova

Conveners: David Abbott (Jefferson Lab), Stefan Ritt (Paul Scherrer Institut (CH))

8

Tide control on Venice Lagoon

The lagoon of Venice is the largest in the Mediterranean Sea and faces the North Adriatic Sea. It is about 55 km² in area and 1.5 m deep on average. The maximum astronomical tide excursion is about ± 50 cm around the mean sea level. Tides flush water through the lagoon twice a day, carrying in oxygenated water and expelling de-oxygenated water. This “breath” is essential to biological life and to the connected ecosystem services. However, in some meteorological conditions the sea level can be substantially higher (the maximum registered was + 2 m over the mean value, in 1966). On these occasions the historical city of Venice, located in the centre of the lagoon, is flooded. Climate change and local anthropic pressures caused an intensification of flooding events in the last century. They can occur more than 20 times in a year, with different severity levels. Meteorological conditions can be forecast with a precision that rises in the few hours before the event. The municipality operates an alert system and provides services such as raised walkways.

However, the only way to protect Venice from any flooding, including the most severe events, is to temporarily close the entrance of sea water into the lagoon, when needed and for as long as necessary, i.e. until the sea level is back to a “safe” height.

For this reason, a complex system of mobile barriers at the lagoon inlets (MOSE) is being constructed, funded by the Italian State and controlled by the Ministry of Infrastructure and Transport. Work began in 2003 and is continuing in parallel at the Lido, Malamocco and Chioggia inlets. The worksites are now in the final stage. The first barrier (North Lido) has been completed with the installation of the housing caissons and of the 21 gates. At the other barriers (South Lido, Malamocco and Chioggia) works will be concluded by 2018. At that point, the lagoon of Venice will become the first “regulated lagoon” in the world.

Speaker: Dr Pierpaolo Campostrini (MOSE)

-

8

-

RT simulation and RT safety and security

Centro Congressi, Padova

Conveners: Martin Lothar Purschke (Brookhaven National Laboratory (US)), Satoru Yamada (KEK)

9

Design and Implementation of EAST Data Visualization in VEAST System

The Experimental Advanced Superconducting Tokamak (EAST) device began operation in 2006. EAST’s inner structure is very complicated and contains many subsystems with a variety of different functions. In order to facilitate the understanding of the device and of experimental information, and to promote the development of the experiment, the virtual EAST (VEAST) system has established an EAST virtual reality scene in which the user can roam and access information by interacting with the system. However, experiment-related parameter information, diagnostic information and magnetic measurement information are displayed in the form of charts, figures, tables and two-dimensional graphics. In order to express the experimental results directly, three-dimensional data visualization results are created using computer graphics technology. Data visualization is the process of visualizing data based on the characteristics of the data, the selection of the appropriate data structure and the proper sequence of the visualization pipeline. We use the Visualization Toolkit (VTK) to realize the data visualization in the VEAST system and give the detailed steps of data visualization for the plasma column, the electron cyclotron emission diagnostic and the plasma magnetic field. In addition, a general format is defined for users to organize their data so that they can visualize it in our system.

Speaker: Prof. Xiao Bingjia (Hefei Institutes of Physical Science, Chinese Academy of Sciences)

-

10

Model based fast protection system for High Power RF tube amplifiers used at European XFEL accelerator

The driving engines of the superconducting accelerator of the European X-ray Free-Electron Laser (XFEL) are 27 Radio Frequency (RF) stations. Each underground RF station consists of a multi-beam horizontal klystron which can provide up to 10 MW of power at 1.3 GHz. Klystrons are sensitive devices with limited lifetime and high mean time between failures. In real operation the lifetime of the tube can be significantly reduced by failures. To minimize the influence of service conditions on the klystron lifetime, a special fast protection system named the Klystron Lifetime Management System (KLM) has been developed. The main task of this system is to detect, as quickly as possible, all events which can destroy the tube and to switch off the driving signal. Detection of events is based on a comparison of a model of the high-power RF amplifier with real signals. All algorithms are implemented in a Field Programmable Gate Array (FPGA). For the XFEL, the implementation of KLM is based on the standard Low Level RF (LLRF) MicroTCA technology (MTCA.4 or xTCA). This article focuses on the klystron model estimation for the protection system and on the implementation of KLM in FPGA on the MTCA.4 architecture.

Speaker: Łukasz Butkowski (DESY)
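The model-versus-measurement comparison described in this abstract can be illustrated with a minimal software sketch. It is not the KLM firmware: the linear gain model, the absolute tolerance, the consecutive-violation counter and all names (`ModelProtection`, `step`) are assumptions chosen only to show the principle of tripping when the real signal departs from the model.

```python
from dataclasses import dataclass


@dataclass
class ModelProtection:
    """Trip when measurements disagree with a (hypothetical) amplifier model."""

    gain: float          # assumed linear model: expected output = gain * drive
    tolerance: float     # allowed absolute deviation between model and measurement
    max_violations: int  # consecutive out-of-tolerance samples before tripping
    _count: int = 0
    tripped: bool = False

    def step(self, drive: float, measured: float) -> bool:
        """Process one sample; return True while the drive may stay on."""
        if self.tripped:
            return False  # latched: stays off until re-armed externally
        expected = self.gain * drive
        if abs(measured - expected) > self.tolerance:
            self._count += 1
        else:
            self._count = 0  # a good sample clears the violation counter
        if self._count >= self.max_violations:
            self.tripped = True
        return not self.tripped
```

Requiring several consecutive violations is one simple way to trade detection speed against false trips from single noisy samples; a real system would choose this trade-off from the tube's damage time scales.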

-

9

-

Break: Lunch

-

Bus Transfer to Palazzo Bo

-

14:05

Welcome words

-

Upgrades 1

Palazzo Bo, Padova

Conveners: Martin Grossmann, Dr Sascha Schmeling (CERN)

11

The ALICE C-RORC GBT card, a prototype read-out solution for the ALICE upgrade.

ALICE (A Large Ion Collider Experiment) is the detector system at the LHC (Large Hadron Collider) optimized for the study of heavy-ion collisions at interaction rates up to 50 kHz and data rates beyond 1 TB/s. Its main aim is to study the behavior of strongly interacting matter and the quark gluon plasma. ALICE is preparing a major upgrade and, starting from 2021, it will collect data with several upgraded sub-detectors (TPC, ITS, Muon Tracker and Chamber, TRD and TOF). The ALICE DAQ read-out system will be upgraded as well, with a new read-out link called GBT (GigaBit Transceiver) with a maximum speed of 4.48 Gb/s and a new PCIe Gen3 x16 interface card called CRU (Common Read-out Unit). Several test beams have been scheduled for the test and characterization of the prototypes or parts of new detectors. The test beams usually last for a short period of one or two weeks, and it is therefore very important to use a stable read-out system to optimize the data-taking period and to collect as much statistics as possible. The ALICE DAQ and CRU teams proposed a data acquisition chain based on the current ALICE DAQ framework in order to provide a reliable read-out system. The new GBT link, transferring data from the front-end electronics, will be directly connected to the C-RORC, the current read-out PCIe card used in the ALICE experiment. The ALICE DATE software is a stable solution that has been in production for more than 10 years. Moreover, most of the ALICE detector developers are already familiar with the software and its different analysis tools. This setup will allow the detector teams to focus on the test of their detectors and electronics, without worrying about the stability of the data acquisition system. An additional development has been carried out with a C-RORC-based Detector Data Generator (DDG). The DDG has been designed to be a realistic data source for the GBT.

It generates simulated events in a continuous mode and sends them to the DAQ system through the optical fibers, at a maximum of 4.48 Gb/s per GBT link. This hardware tool will be used to test and verify the correct behavior of the new DAQ read-out card, the CRU, once it becomes available to the developers. Indeed, the CRU team will not have real detector electronics with which to perform communication and performance tests, so it is vital during the test and commissioning phase to have a data generator able to simulate the FEE behavior. This contribution will describe the firmware and software features of the proposed read-out system, and it will explain how the read-out chain will be used in future tests and how it can help the development of the new ALICE DAQ software.

Speaker: Filippo Costa (CERN)

-

12

The new Global Muon Trigger of the CMS experiment

For the 2016 physics data runs the L1 trigger system of the CMS experiment is undergoing a major upgrade to cope with the increasing instantaneous luminosity of the CERN LHC whilst maintaining a high event selection efficiency for the CMS physics program. Most subsystem-specific trigger processor boards are being exchanged for powerful general-purpose processor boards, conforming to the MicroTCA standard, whose tasks are performed by firmware on an FPGA of the Xilinx Virtex 7 family. Furthermore, the muon trigger system is changing from a subsystem-centred approach, where each of the three muon detector systems provides muon candidates to the global muon trigger, to a region-based system, where muon track finders combine information from the subsystems to generate muon candidates in three detector regions that are then sent to the upgraded global muon trigger. The upgraded global muon trigger receives up to 108 muons from the sector processors of the muon track finders in the barrel, overlap, and endcap detector regions. The muons are sorted and duplicates are identified for removal in two steps. The first step treats muons from different sector processors of a track finder in one detector region. Muons from track finders in different detector regions are compared in the second step. With energy sums from the calorimeter trigger an isolation variable is calculated and added to each muon, before the best eight are sent to the upgraded global trigger where the final trigger decision is taken. The upgraded global muon trigger algorithm is implemented on one of the general-purpose processor boards, which uses about 70 optical links at 10 Gb/s to receive the input data from the muon track finders and the calorimeter energy sums, and to send the selected muon candidates to the upgraded global trigger.

The design of the upgraded global muon trigger in the context of the CMS L1 trigger upgrade, and experience from commissioning and data taking with the new system, are presented herein.

Speaker: Thomas Reis (CERN)
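The two-step duplicate removal and final selection described in this abstract can be sketched in software as follows. This is an illustration only: the real algorithm runs in FPGA firmware, and the `Muon` fields, the `rank` sort key and the matching window in η and φ are hypothetical choices, not values from the abstract.

```python
import math
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Muon:
    region: str  # "barrel", "overlap" or "endcap"
    sector: int  # sector processor that produced the candidate
    eta: float
    phi: float
    rank: int    # hypothetical sort key, higher is better


def _is_duplicate(a, b, max_deta=0.1, max_dphi=0.1):
    """Assumed matching rule: both candidates fall in a small eta-phi window."""
    dphi = abs(a.phi - b.phi)
    dphi = min(dphi, 2 * math.pi - dphi)  # handle wrap-around in phi
    return abs(a.eta - b.eta) < max_deta and dphi < max_dphi


def cancel_out(muons, pair_filter):
    """Drop the lower-ranked muon of every matching pair accepted by pair_filter."""
    removed = set()
    for a, b in combinations(muons, 2):
        if a in removed or b in removed:
            continue
        if pair_filter(a, b) and _is_duplicate(a, b):
            removed.add(a if a.rank < b.rank else b)
    return [m for m in muons if m not in removed]


def select_best(muons, n_out=8):
    # Step 1: different sector processors within the same detector region.
    step1 = cancel_out(muons, lambda a, b: a.region == b.region and a.sector != b.sector)
    # Step 2: track finders in different detector regions.
    step2 = cancel_out(step1, lambda a, b: a.region != b.region)
    # Final sort: keep the best n_out candidates.
    return sorted(step2, key=lambda m: m.rank, reverse=True)[:n_out]
```

The pairwise loop is quadratic in the number of candidates, which is acceptable for a sketch with at most 108 inputs; firmware would perform the comparisons in parallel.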

-

11

-

Break: Coffee

-

Upgrades 2

Palazzo Bo, Padova

Upgrade 2

Conveners: Masaharu Nomachi, Wolfgang Kuehn (Justus-Liebig-Universitaet Giessen (DE))

13

The development of the Global Feature Extractor for the LHC Run-3 upgrade of the ATLAS L1 Calorimeter trigger system

The Global Feature Extractor (gFEX) is one of several modules in the LHC Run-3 upgrade of the Level 1 Calorimeter (L1Calo) trigger system of the ATLAS experiment. It is a single Advanced Telecommunications Computing Architecture (ATCA) module for large-area jet identification, with three Xilinx UltraScale FPGAs for data processing and a system-on-chip (SoC) FPGA for control and monitoring. A pre-prototype board has been designed to verify all functionalities. The performance of this pre-prototype has been tested and evaluated. As a major achievement, the high-speed links in the FPGAs are stable at 12.8 Gb/s with a Bit Error Ratio (BER) < 10^-15 (no error detected). The low-latency parallel GPIO (General Purpose I/O) buses for communication between FPGAs are stable at 960 Mb/s. In addition, the peripheral components of the SoC FPGA have been verified. After laboratory tests, a link speed test with the LAr (Liquid Argon Calorimeter) Digital Processing Blade (LDPB) AMC card was carried out at CERN to determine the link speed to be used between the LAr and L1Calo systems. The links from the LDPB AMC card to gFEX run properly at both 6.4 Gb/s and 11.2 Gb/s. Test results of the pre-prototype board validate the gFEX technologies and architecture. The prototype board design with three UltraScale FPGAs is now under way, and the status of development will be presented.

Speaker: Weihao Wu (Brookhaven National Laboratory (US))
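To give a feeling for what a claim of BER < 10^-15 with no error detected implies, the sketch below applies the standard zero-error bound: if N bits are received without error, the BER is below -ln(1 - CL) / N at confidence level CL. The abstract states neither the confidence level nor the test duration, so the 95 % default and the function names are assumptions for illustration.

```python
import math


def bits_for_ber_limit(ber_limit, confidence=0.95):
    """Error-free bits needed to claim BER < ber_limit at the given confidence."""
    return -math.log(1.0 - confidence) / ber_limit


def hours_needed(ber_limit, line_rate_bps, confidence=0.95):
    """Error-free running time, in hours, on one link at line_rate_bps."""
    return bits_for_ber_limit(ber_limit, confidence) / line_rate_bps / 3600.0


if __name__ == "__main__":
    # One 12.8 Gb/s link, target BER 1e-15, assumed 95 % confidence level.
    print(f"{bits_for_ber_limit(1e-15):.3e} bits")
    print(f"{hours_needed(1e-15, 12.8e9):.1f} hours")
```

Under these assumptions a single link has to run error-free for roughly 65 hours; testing several links in parallel shortens the wall-clock time for a combined limit.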

-

14

SWATCH: Common software for controlling and monitoring the upgraded CMS Level-1 trigger

The Large Hadron Collider at CERN restarted in 2015 with a higher centre-of-mass energy of 13 TeV. The instantaneous luminosity is expected to increase significantly in the coming years. An upgraded Level-1 trigger system is being deployed in the CMS experiment in order to maintain the same efficiencies for searches and precision measurements as those achieved in the previous run. This system must be controlled and monitored coherently through software, with high operational efficiency. The legacy system is composed of approximately 4000 data processor boards, of several custom application-specific designs. These boards have been controlled and monitored by a medium-sized distributed system of over 40 computers and 200 processes. The legacy trigger was organised into several subsystems; each subsystem received data from different detector systems (calorimeters, barrel/endcap muon detectors), or with differing granularity. Only a small fraction of the control and monitoring software was common between the different subsystems; the configuration data was stored in a database, with a different schema for each subsystem. This large proportion of subsystem-specific software resulted in high long-term maintenance costs, and a high risk of losing critical knowledge through the turnover of software developers in the Level-1 trigger project. The upgraded system is composed of a set of general-purpose boards that follow the MicroTCA specification and transmit data over optical links, resulting in a more homogeneous system. This system will contain on the order of 100 boards connected by 3000 optical links, which must be controlled and monitored coherently. The associated software is based on generic C++ classes corresponding to the firmware blocks that are shared across different cards, regardless of the role that the card plays in the system.

A common database schema will also be used to describe the hardware composition and configuration data. Whilst providing a generic description of the upgrade hardware, its monitoring data, and control interface, this software framework (SWATCH) must also have the flexibility to allow each subsystem to specify different configuration sequences and monitoring data depending on its role. By increasing the proportion of common software, the upgrade system's software will require less manpower for development and maintenance. By defining a generic hardware description of significantly finer granularity, the SWATCH framework will be able to provide a more uniform graphical interface across the different subsystems compared with the legacy system, simplifying the training of the shift crew, on-call experts, and other operation personnel. We present here the design of the control software for the upgraded Level-1 trigger, and experience from using this software to commission the upgraded system.

Speaker: Tom Williams (STFC - Rutherford Appleton Lab. (GB))

-

15

Unified Communication Framework (UCF)

UCF is a unified network protocol and FPGA firmware for high-speed serial interfaces employed in data acquisition systems. It provides up to 64 different communication channels via a single serial link. One channel is reserved for timing and trigger information, whereas the other channels can be used for slow control interfaces and data transmission. All channels are bidirectional and share network bandwidth according to assigned priority. The timing channel distributes messages with fixed and deterministic latency in one direction. From this point of view the protocol implementation is asymmetrical. The precision of the timing channel is defined by the jitter of the recovered clock and is typically on the order of 10-20 ps RMS. The timing channel has the highest priority, and a slow control interface should use the second-highest priority channel in order to avoid long delays due to high traffic on other channels. The framework supports point-to-point connections and star-like 1:n topologies, the latter only for optical networks with a passive splitter. It always employs one of the connection parties as a master and the others as slaves. The star-like topology can be used for front-ends with low data rates or for pure time distribution systems. In this case the master broadcasts information according to assigned priority, whereas the slaves communicate with the master in a time-sharing manner. Within the OSI layer model, UCF can be assigned to layers one to three: the physical, data link and network layers. The framework can be used in trigger and fast data processes as well as slow data or monitoring processes. It can be applied to every kind of experiment, be it the neutron lifetime experiment PENeLOPE in Munich, the Belle II experiment at KEK or the COMPASS experiment at CERN. In all these experiments UCF is currently being implemented and tested.

The project is supported by the Maier-Leibnitz-Laboratorium (Garching), the Deutsche Forschungsgemeinschaft and the Excellence Cluster "Origin and Structure of the Universe".

Speaker: Dominic Maximilian Gaisbauer (Technische Universitaet Muenchen (DE))
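The idea of several channels sharing one link according to assigned priority can be sketched with a strict-priority queue. This is a software analogy, not the UCF firmware: the class name `PriorityLink`, the convention that priority 0 is highest, the FIFO order within one priority and the channel assignments in the usage below are all assumptions. In particular, it does not model the fixed, deterministic latency of the timing channel.

```python
import heapq
from itertools import count


class PriorityLink:
    """Strict-priority multiplexer for frames from up to n_channels channels."""

    def __init__(self, n_channels=64):
        self.n_channels = n_channels
        self._queue = []     # heap of (priority, sequence, channel, payload)
        self._seq = count()  # tie-breaker: FIFO order within one priority

    def send(self, channel, priority, payload):
        """Queue a frame; a lower priority number is served first (assumption)."""
        if not 0 <= channel < self.n_channels:
            raise ValueError(f"channel {channel} out of range")
        heapq.heappush(self._queue, (priority, next(self._seq), channel, payload))

    def next_frame(self):
        """Return (channel, payload) of the next frame to transmit, or None."""
        if not self._queue:
            return None
        _, _, channel, payload = heapq.heappop(self._queue)
        return channel, payload
```

With channel 0 used for timing at priority 0 and a slow control channel at priority 1, queued data frames at lower priority are always sent after pending timing and slow control frames, which mirrors the recommendation in the abstract to give slow control the second-highest priority.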

-

13

-

Fast data Transfer links and networks

Palazzo Bo, Padova

Conveners: Stefan Ritt (Paul Scherrer Institut (CH)), Prof. Zhen-An Liu (IHEP,Chinese Academy of Sciences (CN))

16

MicroTCA.4 based data acquisition system for KSTAR Tokamak

The Korea Superconducting Tokamak Advanced Research (KSTAR) control system comprises various heterogeneous hardware platforms. This diversity of platforms raises a maintenance issue. In addition, the limited data throughput rate and the need for higher quality data drive us to find the next-generation control platform. Investigation shows that many leading experiments in the field of high energy physics are seriously pursuing modern, high-performance, open-architecture-based modular designs. An extension of the Micro Telecommunications Computing Architecture (MTCA) initiated by the physics community, MTCA.4, provides a modular structure of high-speed links and allows flexible reconfiguration of system functionality. For the systematic standardization of real-time control at KSTAR, we developed a new functional digital controller based on the MTCA.4 standard. The KSTAR Multifunction Control Unit (KMCU, K-Z35) is realized using the Xilinx System-on-Chip (SoC) architecture. The KMCU development is the result of a successful international collaboration. The KMCU features a Xilinx ZYNQ7000 SoC with ARM processor, FPGA fabric with multi-gigabit transceivers, 1 GB of DDR-3 memory, as well as a single VITA-57 FMC site reserved for future functional expansion. The KMCU is matched with a dedicated Rear Transition Module (RTM) with sites for two FMC-like analog Data Acquisition (DAQ) modules. The RTM pinout is compatible with the DESY standard Zone-3 interface, D1.0. The first DAQ system to be implemented is the Motional Stark Effect (MSE) diagnostic. The MSE DAQ system uses two analog modules, each with 16-channel simultaneous ADC sampling at 2 MSPS with programmable gain. By using a single RTM with many compatible high-performance analog modules, the programmable and reconfigurable KMCU takes advantage of the modular design concept. An internal EPICS IOC easily manages the selected hardware configuration.

Another novel function of the KMCU is simultaneous two-point streaming data transmission. Some parts of the Plasma Control System (PCS) at KSTAR demand data from diagnostics for 3D reconstruction or specific data processing. The KMCU duplicates input data from a rear module and simultaneously transmits it to the CPU and to an external host system through the MTCA backplane and the front SFP+ interface. We are now developing a new functionally optimized KMCU series. The paper presents the complete data acquisition system and commissioning results of the MSE diagnostic based on the MTCA.4 standard. We also introduce a conceptual design for a real-time processing node for the plasma control system based on the KMCU.

Speaker: Dr Woong-ryol Lee (National Fusion Research Institute)

-

17

The readout system upgrade for the LHCb experiment

The LHCb experiment is designed to study differences between particles and anti-particles as well as very rare decays in the charm and beauty sector at the LHC. The detector will be upgraded in 2019, and a new trigger-less readout system has to be implemented in order to significantly increase its efficiency. In the new scheme, event building and event selection are carried out in software and the event filter farm receives all data from every LHC bunch crossing. Another feature of the system is that data coming from the front-end electronics is delivered directly into the event builder's memory through a specially designed PCIe card called PCIe40. The PCIe40 board handles the data acquisition flow as well as the distribution of fast and slow controls to the detector front-end electronics. It embeds one of the most powerful FPGAs currently available on the market, with 1.2 million logic cells. The board has a bandwidth of up to 490 Gbit/s in both input and output over optical links and up to 100 Gbit/s over the PCI Express bus to the CPU. We will present how data flows through the board and to its associated server during event building. We will focus on specific issues regarding the design of the different firmware versions being developed for the FPGA, showing how to manage flows of 100 Gbit/s, and on the techniques put in place when different firmware versions are developed by distributed teams of sub-detector experts.

Speaker: Paolo Durante (CERN)

-

18

Performance of the new DAQ system of the CMS experiment for run-2.

The data acquisition system (DAQ) of the CMS experiment at the CERN Large Hadron Collider assembles events at a rate of 100 kHz, transporting event data at an aggregate throughput of 100 GByte/s to the high-level trigger (HLT) farm. The HLT farm selects and classifies interesting events for storage and offline analysis at a rate of around 1 kHz. The DAQ system was redesigned during the accelerator shutdown in 2013/14. The motivation was twofold: firstly, the compute nodes, networking and storage infrastructure had reached the end of their lifetime. Secondly, in order to handle higher LHC luminosities and event pileup, a number of sub-detectors are being upgraded, increasing the number of readout channels and replacing the off-detector readout electronics with a μTCA implementation. The new DAQ architecture takes advantage of the latest developments in the computing industry. For data concentration, 10/40 Gbit Ethernet technologies are used, as well as an implementation of a reduced TCP/IP in FPGA for reliable transport between custom electronics and commercial computing hardware. A 56 Gbps Infiniband FDR CLOS network has been chosen for the event builder, with a throughput of ~4 Tbps. The HLT processing is entirely file-based. This allows the DAQ and HLT systems to be independent, and to use the same framework for the HLT as for the offline processing. The fully built events are sent to the HLT with 1/10/40 Gbit Ethernet via network file systems. A hierarchical collection of HLT-accepted events and monitoring meta-data is stored in a global file system. The monitoring of the HLT farm is done with the Elasticsearch analytics tool. This paper presents the requirements, implementation, and performance of the system. Experience is reported on the first year of operation in the LHC pp runs as well as in the heavy-ion Pb-Pb runs in 2015.

Speaker: Jeroen Hegeman (CERN)
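The rates quoted in this abstract imply a mean event size and an HLT rejection factor that are easy to work out. The short sketch below does the arithmetic; the function names are illustrative, and the storage estimate assumes that accepted events keep the same mean size, which the abstract does not state.

```python
def mean_event_size_bytes(throughput_bytes_per_s, event_rate_hz):
    """Average event size implied by an aggregate throughput and an event rate."""
    return throughput_bytes_per_s / event_rate_hz


def rejection_factor(input_rate_hz, output_rate_hz):
    """Factor by which the HLT reduces the event rate."""
    return input_rate_hz / output_rate_hz


if __name__ == "__main__":
    size = mean_event_size_bytes(100e9, 100e3)  # 100 GByte/s at 100 kHz
    factor = rejection_factor(100e3, 1e3)       # 100 kHz in, about 1 kHz out
    # Assumption: accepted events have the same mean size as built events.
    storage_rate = size * 1e3
    print(f"mean event size: {size / 1e6:.1f} MB")
    print(f"HLT rejection factor: {factor:.0f}")
    print(f"rate to storage: {storage_rate / 1e9:.1f} GB/s")
```

With the quoted figures this gives about 1 MB per event, a rejection factor of about 100 and, under the stated assumption, on the order of 1 GB/s to storage.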

-

19

RTM RF Backplane Extensions for MicroTCA.4 Crates – Concept and Performance Measurements

The idea of the Rear Transition Module (RTM) Backplane was originally created to simplify cable management of a MicroTCA.4-based LLRF control system for the European XFEL project. The first RTM backplane (called an RF Backplane) was designed to distribute about a dozen precise RF and clock signals to µRTM cards. It was quickly found that this backplane offers very powerful extension possibilities for the MTCA.4 standard and can be used more widely than for RF applications only. Nowadays, the RTM Backplane is compliant with the PICMG standard and is an optional crate extension. The RTM Backplane provides multiple links for high-precision clock and RF signals (DC to 6 GHz) to analog µRTM cards, together with distribution of a low-noise managed power supply and data transmission to RTM cards. In addition, the RTM backplane offers the possibility to add so-called extended RTMs (eRTM) and RTM Power Modules (RTM-PM) to a 12-slot MicroTCA crate. Up to three 6 HE wide eRTMs and two RTM-PMs can be installed behind the front PM and MCH modules. An eRTM attached to the MCH via the Zone 3 connector is used for analog signal management on the RTM backplane. This eRTM also allows installing a powerful CPU to extend the processing capacity of the MTCA.4 crate. Three additional eRTMs provide significant space extensions of the MTCA.4 crate that can be used, e.g., for analog electronics designed to supply RF signals to the µRTMs. The RTM-PMs deliver a managed low-noise (separated from the front crate PMs) analog bipolar power supply (+VV, -VV) for the µRTMs and a unipolar power supply for the eRTMs. This extends the functionality of the MicroTCA.4 crate and offers a unique performance improvement for analog front-end electronics.

This paper covers a new concept of the RTM Backplane, a new implementation for the real-time LLRF control system and a performance evaluation of the designed prototype, including precise measurements of RF loss, impedance matching and crosstalk.

Speaker: Krzysztof Czuba (Warsaw University of Technology)

-

-

Welcome Reception: Caffé Pedrocchi, in front of Palazzo Bo - University Caffe Pedrocchi (Padova)

-

-

-

Bus Transfer to Conference Venue

-

DAQ 1 / Front End Electronics Centro Congressi (Padova)

Conveners: Denis Calvet (CEA/IRFU,Centre d'etude de Saclay Gif-sur-Yvette (FR)), Dr Marco Bellato (INFN - Padova)

20

Register-Like Block RAM: Implementation, Testing in FPGA and Applications for High Energy Physics Trigger Systems

In high energy physics experiment trigger systems, block memories are utilized for various purposes, especially in indexed searching algorithms. It is often necessary to globally reset all memory locations between events, a feature not supported in regular block memories. Another common demand is to be able to update the contents of any memory location in a single clock cycle. These two demands can be fulfilled with registers, but the cost of using registers for large memories is unaffordable. In this paper, a register-like block memory design scheme is presented, which allows updating memory locations in a single clock cycle and effectively resetting the entire memory within a single clock cycle. The implementation and test results are also discussed.

Speaker: Jinyuan Wu (Fermi National Accelerator Lab. (US))
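The abstract does not say how the single-cycle global reset is achieved. One generic way to get that behaviour from an ordinary memory is to store an epoch tag next to each word, so that a reset only changes one counter. The Python model below is a sketch of that general idea, not the author's scheme; the class name, `tag_bits` parameter and the scrub-on-wraparound policy are all assumptions made for illustration.

```python
class EpochTaggedMemory:
    """Software model of a memory with a cheap 'global reset'.

    Each word carries an epoch tag. A word is valid only if its tag equals
    the current epoch, so a reset just bumps the epoch counter instead of
    touching every location. This is a generic technique, assumed here for
    illustration; it is not taken from the paper.
    """

    def __init__(self, depth, tag_bits=8):
        self.depth = depth
        self.tag_modulus = 1 << tag_bits
        self.data = [0] * depth
        self.tags = [0] * depth
        self.epoch = 1  # tags start at 0, so every word initially reads as cleared

    def reset(self):
        """Invalidate all words by moving to a new epoch."""
        self.epoch += 1
        if self.epoch == self.tag_modulus:
            # Rare slow path: tags would alias after wraparound, so scrub.
            self.data = [0] * self.depth
            self.tags = [0] * self.depth
            self.epoch = 1

    def write(self, addr, value):
        self.data[addr] = value
        self.tags[addr] = self.epoch

    def read(self, addr):
        # A stale tag means the word was written before the last reset.
        return self.data[addr] if self.tags[addr] == self.epoch else 0


mem = EpochTaggedMemory(depth=16, tag_bits=2)
mem.write(3, 42)
before_reset = mem.read(3)
mem.reset()
after_reset = mem.read(3)
print(before_reset, after_reset)
```

In hardware the tag comparison would sit in the read path, and the wraparound case would need a background scrub or a wider tag; both are design choices outside what the abstract describes.
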

-

21

A 3.9 ps RMS Resolution Time-to-Digital Convertor Using Dual-sampling Method on Kintex UltraScale FPGA

The principle of tapped-delay line (TDL) style field programmable gate array (FPGA)-based time-to-digital converters (TDC) requires finer delay granularity for higher time resolution. Given a tapped delay line constructed with carry chains in an FPGA, it is desirable to find a way of subdividing the intrinsic delay elements further, so that the TDC can achieve a time resolution beyond its cell delay. In this paper, after exploring the available logic resources in the Xilinx Kintex UltraScale FPGA, we propose a dual-sampling method in which the TDL status is sampled twice. The effect of the new method is equivalent to doubling the number of taps in the delay line, so a significant improvement in time resolution is expected. Two TDC channels have been implemented in a Kintex UltraScale FPGA and the effectiveness of the new method is investigated. For fixed time intervals in the range from 0 to 440 ns, the average time resolutions measured by the two TDC channels are 3.9 ps with the dual-sampling method and 5.8 ps with the conventional single-sampling method. In addition, the TDC design maintains the advantages of multichannel capability and high measurement throughput of our previous design. Every part of the TDC, including dual-sampling, code conversion and on-line calibration, can run at a 500 MHz clock frequency.

Speakers: Dr Chong Liu (University of Science and Technology of China), Prof. Yonggang Wang (University of Science and Technology of China)

-

22

Timing distribution and Data Flow for the ATLAS Tile Calorimeter Phase II Upgrade

The Hadronic Tile Calorimeter (TileCal) detector is one of the several subsystems composing the ATLAS experiment at the Large Hadron Collider (LHC). The LHC upgrade program plans an increase of about five times the LHC nominal instantaneous luminosity, culminating in the High Luminosity LHC (HL-LHC). In order to adapt the detector to the new HL-LHC parameters, the TileCal read out electronics is being redesigned, introducing a new read out strategy with a fully digital trigger system. In the new read out architecture, the front-end electronics hosts the MainBoards and the DaughterBoards. The MainBoard digitizes the analog signals coming from the PhotoMultiplier Tubes (PMTs), provides integrated data for minimum bias monitoring and includes electronics for PMT calibration. The DaughterBoard receives and distributes Detector Control System (DCS) commands, clock and timing commands to the rest of the elements of the front-end electronics, and collects and transmits the digitized data to the back-end electronics at the LHC bunch-crossing frequency (every ~25 ns). The TileCal PreProcessor (TilePPr) is the first element of the back-end electronics. It receives and stores the digitized data from the DaughterBoards in pipeline memories to cope with the latencies and rates specified in the new ATLAS DAQ architecture. The TilePPr interfaces between the data acquisition, trigger and control systems and the front-end electronics. In addition, the TilePPr distributes the clock and timing commands to the front-end electronics for synchronization with the LHC clock with fixed and deterministic latency. The complete new read out architecture is being evaluated in a Demonstrator system in several Test Beam campaigns during 2015 and 2016. At the end of this year, a complete TileCal module with the upgraded electronics will be inserted in the ATLAS detector.

This contribution gives a detailed description of the timing distribution and data flow in the new read out architecture for the TileCal Phase II Upgrade and presents the status of the hardware and firmware developments of the upgraded front-end and back-end electronics, together with preliminary results of the TileCal demonstrator program.

Speaker: Fernando Carrio Argos (Instituto de Fisica Corpuscular (ES))

-

23

The TOTEM precision clock distribution system.

To further extend the measurement capabilities of the experiment at luminosities where pile-up and multiple tracks in the proton detectors make it difficult to identify and disentangle real diffractive events from other event topologies, TOTEM has proposed to add a timing measurement capability to measure the time-of-flight difference between the two outgoing protons. Such precise timing measurements need a clock distribution system that delivers time information at spatially separate points with picosecond-range precision. For the clock distribution task, TOTEM will adopt an adaptation of the Universal Picosecond Timing System, developed for the FAIR (Facility for Antiproton and Ion Research) facility at GSI and currently installed as the BUTIS system. In this system an optical network, using the dense wavelength division multiplexing (DWDM) technique, is used to transmit two reference clock signals from the counting room to a grid of receivers in the tunnel. To these clocks another signal is added that is reflected back and used to continuously measure the delays of every optical transmission line; these delay measurements will be used to correct the time information generated at the detector location. The use of DWDM makes it possible to transmit multiple signals, generated with different wavelengths, over a common single-mode fiber. Moreover, it allows the use of standard telecommunication modules that conform to international standards such as those of the ITU (International Telecommunications Union). The prototype of this system showed that the influence of the transmission system on the jitter is negligible and that the total jitter of the clock transmission is practically due to the inherent jitter of the clock sources and the end-user electronics. By the time of the Conference, the system will be commissioned in the interaction point 5 (IP5) TOTEM control room.

Details of the system design, tests and characterization will be given in this contribution.

Speakers: Francesco Cafagna (Universita e INFN, Bari (IT)), Michele Quinto (Universita e INFN-Bari (IT))

-

24

A Programmable Read-out chain for Multichannel Analog front-end ASICs

In this contribution we introduce an innovative multiplexed ASIC read-out system based on 32 analog channels sampling at 40 MHz with 12-bit resolution and 96 digital I/O with selectable voltage standard, ranging from differential signaling to 1.8 or 3.3 V CMOS. The ADC and ASIC read-out is managed by a Kintex-7 FPGA, and the communication with the host computer relies on the fast USB 3 communication protocol. The main feature of this system is a new approach to FPGA firmware development based on an easy-to-use graphical interface, which can carry out all the functions requested of an ASIC read-out system (for instance state machines, triggers, counters, time delays, and so on) without the need to write any hardware description code. Furthermore, we also introduce a cloud compiling service, which spares the user from installing the FPGA development environment in order to create a measurement setup based on this read-out system.

Speaker: Mr Francesco Caponio (Nuclear Instruments)

-

-

Break: Coffee

-

Upgrades 3 Centro Congressi (Padova)

Conveners: Christian Bohm (Stockholm University (SE)), Lorne Levinson (Weizmann Institute of Science (IL))

25

FELIX: the new detector readout system for the ATLAS experiment

From the ATLAS Phase-I upgrade onward, new or upgraded detectors and trigger systems will be interfaced to the data acquisition, detector control and timing (TTC) systems by the Front-End Link eXchange (FELIX). FELIX is the core of the new ATLAS Trigger/DAQ architecture. Functioning as a router between custom serial links and a commodity network, FELIX is implemented by server PCs with commodity network interfaces and PCIe cards with large FPGAs and many high speed serial fiber transceivers. By separating data transport from data manipulation, the latter can be done by software in commodity servers attached to the network. By replacing traditional point-to-point links between Front-end components and the DAQ system with a switched network, FELIX provides scaling, flexibility, uniformity and upgradability. Different Front-end data types or different data sources can be routed to different network endpoints that handle that data type or source: e.g. event data, configuration, calibration, detector control, monitoring, etc. This reduces the diversity of custom hardware solutions in favour of software. Front-end connections can be either high bandwidth serial connections from FPGAs (e.g. 10 Gb/s) or those from the radiation tolerant CERN GBTx ASIC, which aggregates many slower serial links onto one 5 Gb/s high speed link. Already in the Phase 1 Upgrade there will be about 2000 fiber connections. In addition to connections to a commodity network and Front-ends, FELIX receives Timing, Trigger and Control (TTC) information and distributes it with fixed latency to the GBTx connections. As part of the FELIX implementation, the firmware and Linux software for a high efficiency PCIe DMA engine have been developed.

The system architecture of FELIX will be described; the results of the demonstrator program and the first prototype, along with future plans, will be presented.

Speaker: Julia Narevicius (Weizmann Institute of Science (IL))

-

26

Upgrade of the TOTEM data acquisition system for the LHC's Run Two

The TOTEM (TOTal cross section, Elastic scattering and diffraction dissociation Measurement at the LHC) experiment at the LHC has been designed to measure the total proton-proton cross-section with a luminosity-independent method, based on the optical theorem, and to study elastic and diffractive scattering at the LHC energy. To cope with the increased intensity of the LHC run 2 phase, and the increase in statistics required by the extension of the TOTEM physics program approved for the 2016 run campaign, the previous VME based DAQ has been replaced by a new one based on the Scalable Readout System (SRS). The system is composed of 16 SRS-FECs and one SRS-SRU; it features a throughput of ~120 MB/s per FEC, saturating the SRS-FEC 1 Gb/s link, for an overall 2 GB/s data transfer rate into the online PC farm. This guarantees a baseline maximum trigger rate of ~24 kHz, compared with the 1 kHz of the previous VME based system. This trigger rate will be further improved, up to 100 kHz, by implementing a second-level trigger algorithm in the SRS-SRU. The new system design fulfills the requirements for increased efficiency, providing higher bandwidth and increasing the purity of the recorded data by supporting both a zero suppression algorithm and a second-level trigger based on pattern recognition algorithms implemented in hardware. Moreover, full compatibility with the legacy front-end hardware has been guaranteed, as well as the interface with the CMS experiment DAQ and the LHC Timing Trigger and Control (TTC) system. A complete re-design of the firmware, leveraging industrial-strength firmware technologies, has been undertaken to provide a set of common interfaces and services between the standard system modules and the modules specific to the user's application.

This allows efficient development and easier insertion of different zero suppression and second-level trigger algorithms, and the sharing of firmware blocks between different SRS components. Furthermore, to avoid packet losses and improve the reliability of the UDP data transmission, a solution has been adopted that uses Ethernet flow control and a New API (NAPI) mode driver, featuring a ticketing algorithm at the application layer. In this contribution we will describe in detail the full system and its performance during the commissioning phase at the LHC Interaction Point 5 (IP5).

Speaker: Michele Quinto (Universita e INFN-Bari (IT))
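The throughput figures in this abstract can be cross-checked with a few lines of Python. The sketch below uses only the numbers quoted above; the implied event size is an inference that assumes the full aggregate bandwidth is used at the baseline trigger rate, and the variable names are illustrative.

```python
N_FEC = 16                 # number of SRS-FEC cards
FEC_RATE_MB_S = 120.0      # measured throughput per FEC, ~120 MB/s
LINK_RATE_GBIT_S = 1.0     # SRS-FEC link speed, 1 Gb/s
TRIGGER_RATE_HZ = 24e3     # baseline maximum trigger rate, ~24 kHz
OLD_TRIGGER_RATE_HZ = 1e3  # previous VME based system, 1 kHz

# Aggregate throughput into the online PC farm.
aggregate_mb_s = N_FEC * FEC_RATE_MB_S

# Raw capacity of a 1 Gb/s link in MB/s (8 bits per byte, no overheads).
link_capacity_mb_s = LINK_RATE_GBIT_S * 1000.0 / 8.0
link_utilisation = FEC_RATE_MB_S / link_capacity_mb_s

# Assumption: all of the bandwidth is consumed at the baseline trigger rate.
implied_event_size_kb = aggregate_mb_s * 1e3 / TRIGGER_RATE_HZ

rate_gain = TRIGGER_RATE_HZ / OLD_TRIGGER_RATE_HZ

print(f"aggregate throughput: {aggregate_mb_s:.0f} MB/s")
print(f"link utilisation: {link_utilisation:.0%}")
print(f"implied event size: {implied_event_size_kb:.0f} kB")
print(f"trigger rate gain over VME system: {rate_gain:.0f}x")
```

The 16 x 120 MB/s product is what the abstract rounds to an overall 2 GB/s.
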

-

27

Phase-I Trigger Readout Electronics Upgrade for the ATLAS Liquid-Argon Calorimeters

For the Phase-I luminosity upgrade of the LHC, a higher granularity trigger readout of the ATLAS LAr Calorimeters is foreseen in order to enhance the trigger feature extraction and background rejection. The new readout system digitizes the detector signals, which are grouped into 34000 so-called Super Cells, with 12-bit precision at 40 MHz. The data is transferred via optical links to a digital processing system which extracts the Super Cell energies. A demonstrator version of the complete system has now been installed and operated on the ATLAS detector. The talk will give an overview of the Phase-I Upgrade of the ATLAS LAr Calorimeter readout and present the custom-developed hardware, including its role in real-time data processing and fast data transfer. This contribution will also report on the performance of the newly developed ASICs, including their radiation tolerance, and on the performance of the prototype boards in the demonstrator system, based on various measurements with the 13 TeV collision data. Results of the high speed link test with the prototype of the LAr Digital Processing Boards will also be reported.

Speaker: Nicolas Chevillot (Centre National de la Recherche Scientifique (FR))
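To give a feel for the data volume behind the optical links, the following Python sketch multiplies out the digitization parameters quoted in the abstract. It counts raw ADC payload only; link encoding, headers and any data reduction are not described in the abstract and are ignored here.

```python
N_SUPER_CELLS = 34000      # Super Cells read out
ADC_BITS = 12              # precision per sample
SAMPLING_RATE_HZ = 40e6    # 40 MHz digitization

# Raw payload produced by the digitizers, before any encoding overhead.
raw_bits_per_s = N_SUPER_CELLS * ADC_BITS * SAMPLING_RATE_HZ
raw_tbit_per_s = raw_bits_per_s / 1e12

print(f"raw digitized payload: {raw_tbit_per_s:.2f} Tb/s")
```
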

-

28

Large-scale DAQ tests for the LHCb upgrade

The Data Acquisition (DAQ) of the LHCb experiment will be upgraded in 2020 to a high-bandwidth triggerless readout system. In the new DAQ, event fragments will be forwarded to the Event Builder (EB) computing farm at 40 MHz. The front-end boards will therefore be connected directly to the EB farm through optical links and PCI Express based interface cards. The EB is required to provide a total network capacity of 32 Tb/s, exploiting about 500 nodes. In order to reach the required network capacity we are testing various technologies and network protocols on large scale clusters. For this purpose we developed an Event Builder implementation designed for an InfiniBand interconnect infrastructure. We present the results of the measurements performed to evaluate throughput and scalability on HPC-scale facilities.

Speaker: Antonio Falabella (Universita e INFN, Bologna (IT))
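The per-node bandwidth implied by these figures follows from a simple division, sketched below in Python. The even split across nodes is an assumption made for the estimate, and the per-event size is likewise an inference from the quoted totals rather than a number given in the abstract.

```python
TOTAL_CAPACITY_TBIT_S = 32.0   # required EB network capacity
N_NODES = 500                  # approximate number of EB nodes
EVENT_RATE_HZ = 40e6           # fragments forwarded at 40 MHz

# Assumption: traffic is spread evenly over all nodes.
per_node_gbit_s = TOTAL_CAPACITY_TBIT_S * 1000.0 / N_NODES

# Inferred average event size if the full capacity carries event data.
event_size_kbyte = TOTAL_CAPACITY_TBIT_S * 1e12 / EVENT_RATE_HZ / 8.0 / 1e3

print(f"per-node capacity: {per_node_gbit_s:.0f} Gb/s")
print(f"implied average event size: {event_size_kbyte:.0f} kB")
```
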

-

-

Conference Photo

-

Break: Lunch Centro Congressi (Padova)

-

Mini Oral 1 Centro Congressi (Padova)

Conveners: Christian Bohm (Stockholm University (SE)), Rejean Fontaine (Université de Sherbrooke)

29

085 TAWARA_RTM: A complete platform for a real time monitoring of contamination events of drinking water

Speaker: Sandra Moretto (University of Padova)

-

30

060 Development of ATLAS Liquid Argon Calorimeters Readout Electronics for HL-LHC

Speaker: Kai Chen (Brookhaven National Laboratory (US))

-

31

084 Software Integrity Analysis Applied to IRIO EPICS Device Support Based On FPGA Real-Time DAQ Systems

Speaker: Diego Sanz

-

32

018 Fuzzy-PID based heating control system

Speaker: Jian Wang (Univ. of Sci. & Tech. of China)

-

33

012 High Speed Ethernet Application for the Trigger Electronics of the New Small Wheel

Speaker: Kun Hu (University of Science and Technology of China)

-

34

232 NaNet: FPGA-based Network Interface Cards Implementing Real-time Data Transport for HEP Experiments

Speaker: Michele Martinelli (INFN)

-

35

004 A hardware implementation of the Levinson routine in a radio detector of cosmic rays to improve a suppression of the non-stationary RFI

Speaker: Zbigniew Szadkowski (University of Lodz)

-

36

021 Adaptive IIR-notch filter for RFI suppression in a radio detection of cosmic rays

Speaker: Zbigniew Szadkowski (University of Lodz)

-

37

119 DCT trigger in a high-resolution test platform for a detection of very inclined showers in the Pierre Auger surface detectors

Speaker: Zbigniew Szadkowski (University of Lodz)

-

38

040 Implementation of ITER Fast Plant Interlock System using FPGAs with cRIO

Speaker: Mariano Ruiz (Technical University of Madrid)

-

39

150 FPGA online tracking algorithm for the PANDA straw tube tracker

Speaker: Yutie Liang

-

40

095 Design and test of a GBTx based board for the upgrade of the ALICE TOF readout electronics

Speaker: Davide Falchieri (Universita e INFN, Bologna (IT))

-

41

104 Exploring RapidIO technology within a DAQ system event building network

Speaker: Simaolhoda Baymani (CERN)

-

42

076 Online calibration of the TRB3 FPGA TDC with DABC software

Speaker: Joern Adamczewski-Musch (GSI)

-

43

039 FPGA Implementation of Toeplitz Hashing Extractor for Real Time Post-processing of Raw Random Numbers

Speaker: xiaoguang zhang

-

44

062 New LLRF control system at LNL

Speaker: Stefano Pavinato (INFN - National Institute for Nuclear Physics)

-

45

011 An Extensible Induced Position Encoding Readout Method for Micro-pattern Gas Detectors

Speaker: Binxiang Qi (University of Science and Technology of China)

-

46

066 An I/O Controller for Real Time Distributed Tasks in Particle Accelerators

Speaker: Davide Pedretti (Universita e INFN, Legnaro (IT))

-

47

005 Design and Testing of the Bunch-by-Bunch Beam Transverse Feedback Electronics for SSRF

Speaker: Mr Jinxin Liu (University of Science and Technology of China)

-

48

054 Control system optimization techniques for real-time applications in fusion plasmas: the RFX-mod experience

Speaker: Leonardo Pigatto (Consorzio RFX)

-

49

061 High-speed continuous DAQ system for reading out the ALICE SAMPA ASIC

Speaker: Ganesh Jagannath Tambave (University of Bergen (NO))

-

50

046 Brain Emulation for Image Processing

Speaker: Pierluigi Luciano (Universita di Pisa & INFN (IT))

-

51

176 Readout electronics for Belle II imaging Time of Propagation detector

Speaker: Dr Dmitri Kotchetkov

-

52

010 An α/γ Discrimination Method for BaF2 Detector by FPGA-based Linear Neural Network

Speaker: Chenfei Yang

-

53

007 Design of the Readout Electronics Prototype for LHAASO WCDA

Speaker: Ma Cong (University of Science and Technology of China)

-

54

053 A Calculation Software Based on Pipe-and-Filter Architecture for the 4πβ-γ Digital Coincidence Counting Equipment

Speaker: Mr Zhiguo Ding

-

55

229 Evaluation of 100 Gb/s LAN networks for the LHCb DAQ upgrade

Speaker: Balazs Voneki (CERN)

-

56

031 TaskRouter: A newly designed online data processing framework

Speaker: Minhao Gu (IHEP)

-

57

114 A High Frame Rate Test System for The HEPS-BPIX based on NI-sbRIO Board

Speaker: Jingzi Gu

-

58

192 Particle identification on an FPGA accelerated compute platform for the LHCb Upgrade.

Speaker: Christian Faerber (CERN)

-

59

074 Field Waveform Digitizer for BaF2 Detector Array at CSNS-WNS

Speaker: qi wang

-

60

109 Signal Processing Scheme for a Low Cost LiF:ZnS(Ag) Neutron Detector with Silicon Photomultiplier

Speaker: Mr Kevin Pritchard (NCNR)

-

61

213 Design of a Compact Hough Transform for a new L1 Trigger Primitives Generator for the upgrade of the CMS Drift Tubes muon detector at the HL-LHC

Speaker: Nicola Pozzobon (Universita e INFN, Padova (IT))

-

62

248 White Rabbit based sub-nsec time synchronization, time stamping and triggering in distributed large scale astroparticle physics experiments

Speaker: Martin Brückner (Paul Scherrer Institut)

-

63

086 Enabling Real Time Reconstruction for High Resolution SPECT Systems

Speaker: Mélanie Bernard

-

64

135 Modular Software for MicroTCA.4 Based Control Applications

Speaker: Nadeem Shehzad (DESY Hamburg)

-

-

Break: Coffee

-

Poster session 1 Centro Congressi (Padova)

-

65

A Calculation Software Based on Pipe-and-Filter Architecture for the 4πβ-γ Digital Coincidence Counting Equipment

The 4πβ-γ coincidence efficiency extrapolation method is the most popular method for the absolute determination of radioactivity. In this paper, a calculation software based on pipe-and-filter architecture for the 4πβ-γ digital coincidence counting (DCC) equipment is presented. The equipment can handle four 500 MSPS 8-bit channels and four 62.5 MSPS 16-bit channels with ±1 ns synchronization accuracy. However, digitizing pulse trains at high speed and high resolution brings challenges in control and processing. At the same time, it allows the DCC system to digitize and process the pre-amplifier pulses themselves, which avoids the loss of information inherent in the operation of pulse shaping amplifiers. To meet these new challenges and demands, the software is designed in a pipe-and-filter architecture, which supports reuse and concurrent execution. Results indicate that this design is effective and easy to implement and extend for real-time acquisition control and off-line processing.

Speaker: Zhiguo Ding (University of Science and Technology of China, Department of Modern Physics)
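As a rough illustration of the pipe-and-filter style mentioned in this abstract, the Python sketch below chains generator-based filters into a small coincidence counting pipeline. It is not the authors' software: the `Pulse` type, the filter names, the threshold and the coincidence window are all hypothetical, and the input is assumed to be ordered in time.

```python
from typing import Iterable, Iterator, NamedTuple, Tuple


class Pulse(NamedTuple):
    channel: str      # "beta" or "gamma"
    time_ns: float    # timestamp of the pulse
    amplitude: float  # pulse height in arbitrary units


def threshold_filter(pulses: Iterable[Pulse], min_amplitude: float) -> Iterator[Pulse]:
    """Filter stage: drop pulses below an amplitude threshold."""
    for pulse in pulses:
        if pulse.amplitude >= min_amplitude:
            yield pulse


def coincidence_filter(pulses: Iterable[Pulse], window_ns: float) -> Iterator[Tuple[Pulse, Pulse]]:
    """Filter stage: pair beta and gamma pulses closer than window_ns.

    Assumes time-ordered input; each pulse is used in at most one pair.
    """
    last = {}
    for pulse in pulses:
        other = "gamma" if pulse.channel == "beta" else "beta"
        partner = last.get(other)
        if partner is not None and pulse.time_ns - partner.time_ns <= window_ns:
            last.pop(other)
            # Always emit pairs as (beta, gamma).
            yield (partner, pulse) if other == "beta" else (pulse, partner)
        else:
            last[pulse.channel] = pulse


def count(items: Iterable) -> int:
    """Sink stage: count whatever reaches the end of the pipe."""
    return sum(1 for _ in items)


stream = [
    Pulse("beta", 100.0, 1.0),
    Pulse("gamma", 105.0, 0.9),
    Pulse("beta", 300.0, 0.05),   # below threshold, removed by the first filter
    Pulse("gamma", 305.0, 0.8),
    Pulse("gamma", 900.0, 0.7),
    Pulse("beta", 908.0, 1.1),
]

# Pipes are expressed by nesting the generators.
n_coincidences = count(coincidence_filter(threshold_filter(stream, 0.1), window_ns=10.0))
print(n_coincidences)
```

Because each stage only consumes an iterable and yields results, stages can be reused in other pipelines or moved into separate threads or processes, which is the reuse and concurrency benefit the abstract refers to.
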

-

66

A coprocessor for the Fast Tracker Simulation

The Fast Tracker (FTK) executes real time tracking for online event selection in the ATLAS experiment. Data processing speed is achieved by exploiting pipelining and parallel processing. Track reconstruction is executed on a 2-level pipelined architecture. The first stage, implemented on custom ASICs called Associative Memory (AM) Chips, performs Track Candidate (road) recognition in low resolution. The second stage, implemented on FPGAs (Field Programmable Gate Arrays), builds on the track candidate recognition, performing Track Fitting in full resolution. The use of such parallelized architectures for real time event selection opens up a huge new computing problem related to the analysis of the acquired samples. For each type of implemented trigger, millions of events have to be simulated to determine, within a small statistical margin of error, the efficiency and the bias of that trigger. The AM chip emulation is a particularly complicated task. This paper proposes the use of a hardware co-processor, in place of its simulation, to solve the problem. We report on the implementation and performance of all the functions complementary to the pattern matching in a modern, compact embedded system for track reconstruction. That system is a miniaturization of the complex FTK processing unit, and is also well suited for powering applications outside the realm of High Energy Physics.

Speaker: Christos Gentsos (Aristotle Univ. of Thessaloniki (GR))

-

67

A Digital On-line Implementation of a Pulse-Shape Analysis Algorithm for Neutron-gamma Discrimination in the NEDA Detector

Modern nuclear physics experiments involving fusion-evaporation reactions frequently require the detection of particles (alphas, protons, neutrons) which provide crucial information about the nucleus under study. Some reaction channels involving neutron detection have a very low cross-section and require the use of large scintillator arrays, which are also sensitive to gamma-rays, meaning that neutron-gamma discrimination (NGD) techniques must be applied. Moreover, because of the high counting rates at which the experiments are carried out and the need to use digital electronics, the NGD is expected to be implemented digitally in the early stages in order to decrease the total data throughput, which would otherwise be mostly produced by the gamma-rays. NGD has been widely used in a large assortment of neutron detectors (Neutron Wall) using analog electronics employing pulse-shape analysis (PSA) techniques such as the zero cross-over (ZCO) and charge-comparison (CC) methods. Because of the inherent limitations of analog electronics, an effort is being made to move these PSA methods for NGD to the digital domain using programmable devices such as FPGAs, so that a higher degree of flexibility and integration can be achieved without losing discrimination performance. In this paper we analyze the performance, complexity and resources of two widely used PSA algorithms (Zero-CrossOver and Charge Comparison) in order to implement them using digital electronics based on FPGAs. The chosen algorithm will be implemented in the new digital electronics of the NEDA (Neutron Detector Array) detector, currently in a development stage.

By employing this algorithm in an FPGA, we expect to provide a simple mechanism to discard a large number of gamma-rays while preserving the flexibility and robustness that digital systems offer.

Speaker: Francisco Javier Egea Canet
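The charge-comparison method named in this abstract can be sketched in a few lines: integrate the baseline-subtracted pulse over its full length and over its tail only, and use the ratio as the discriminating variable. The Python below is a minimal software illustration on synthetic pulses, not the NEDA firmware; the pulse model, time constants, integration gates and threshold are made-up values chosen only to make the example run.

```python
import math


def tail_to_total(samples, baseline, start, tail_start, end):
    """Charge comparison: ratio of tail charge to total charge."""
    corrected = [s - baseline for s in samples[start:end]]
    total = sum(corrected)
    tail = sum(corrected[tail_start - start:])
    return tail / total if total > 0 else 0.0


def classify(ratio, threshold):
    """Pulses with a larger slow component are labelled as neutrons."""
    return "neutron" if ratio > threshold else "gamma"


def synthetic_pulse(slow_fraction, n_samples=200, tau_fast=5.0, tau_slow=50.0, baseline=10.0):
    """Toy scintillator pulse: sum of a fast and a slow exponential decay."""
    return [
        baseline
        + (1.0 - slow_fraction) * math.exp(-i / tau_fast)
        + slow_fraction * math.exp(-i / tau_slow)
        for i in range(n_samples)
    ]


# Illustrative values only: neutron-like pulses get a larger slow fraction.
gamma_pulse = synthetic_pulse(slow_fraction=0.05)
neutron_pulse = synthetic_pulse(slow_fraction=0.20)

gamma_ratio = tail_to_total(gamma_pulse, baseline=10.0, start=0, tail_start=20, end=200)
neutron_ratio = tail_to_total(neutron_pulse, baseline=10.0, start=0, tail_start=20, end=200)

print(classify(gamma_ratio, threshold=0.35), classify(neutron_ratio, threshold=0.35))
```

The two running sums map naturally onto FPGA accumulators, which is one reason this method is a common candidate for digital on-line implementation.
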

-

68

A hardware implementation of the Levinson routine in a radio detector of cosmic rays to improve a suppression of the non-stationary RFI

The radio detector of ultra-high-energy cosmic rays at the Pierre Auger Observatory operates in the frequency range 30-80 MHz, which is often contaminated by human-made RFI. Several filters have been used to suppress the RFI: filters based on the FFT, an IIR notch filter, and an FIR filter based on linear prediction. The latter refreshes the FIR coefficients, which are calculated either in an external ARM processor, in an internal soft-core NIOS processor implemented inside the FPGA, or in the hard-core embedded processor system (HPS) that is a silicon part of the FPGA chip. Refresh times depend significantly on the type of calculation process used. For stationary RFI the FIR coefficients can be refreshed once a minute or less often. However, efficient suppression of non-stationary short-term contamination requires a much faster response. Calculating the FIR coefficients takes several seconds on an external ARM and on the order of hundreds of milliseconds on the NIOS. The HPS allows a reduction of the refresh time to ~20 ms (for a 32-stage FIR filter). This is still not too long. The symmetry of the covariance matrix allows the use of the much faster Levinson procedure instead of the typical Gauss routine for solving a set of linear equations. Even in the HPS, the Levinson procedure takes a relatively long time. A hardware implementation of this procedure inside the FPGA fabric, as a specialized microprocessor, requires only ~40 000 clock cycles. With the 200 MHz ADC and global FPGA clock, this corresponds to ~200 µs, two orders of magnitude less than for the HPS. We tested this algorithm in practice on the radio-detector Front-End Board and compared it with the previous approaches: FFT, IIR, NIOS and HPS. The signal source was the Butterfly antenna with the LNA used in the Auger Engineering Radio Array.

The code has been implemented in several different chips to compare speed and resource occupancy; the target, however, is the Cyclone V E FPGA 5CEFA9F31I7 used in the Front-End Board for the Pierre Auger radio detector. The FIR filter should operate on the fly, that is, with the same clock as the ADCs. In order to avoid aliasing, according to the Nyquist rule the sampling frequency should be at least twice the highest frequency in the signal spectrum. The spectrum is limited by the band-pass filters to 30-80 MHz. The sampling frequency selected for the radio detectors is 200 MHz. The hardware Levinson procedure does not need to operate with the same clock as the ADCs; however, this is recommended in order to avoid temporary memories. The 200 MHz speed has been achieved in the StratixIII FPGAs (speed grade - 2). The Cyclone V (speed grade - 7) needs some more optimizations and probably additional pipeline stages. Nevertheless, an algorithm operating with a clock lower than 200 MHz can also be used in the FIR filter. The 180 MHz obtained at present in the Cyclone V lengthens the refresh time by only ~10%. We plan to test the algorithm in real radio stations in the Argentinean pampas.

Speaker: Zbigniew Szadkowski (University of Lodz)
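For readers unfamiliar with the Levinson procedure, the Python sketch below shows the textbook Levinson-Durbin recursion for linear-prediction coefficients, together with the clock-cycle arithmetic quoted in the abstract. It is a plain software reference, not the FPGA implementation described by the author; the function name and the example autocorrelation values are illustrative assumptions.

```python
def levinson_durbin(r, order):
    """Solve the symmetric Toeplitz normal equations of linear prediction.

    r     : autocorrelation values r[0] .. r[order]
    order : number of predictor (FIR) coefficients
    Returns (a, err) where a[k-1] multiplies x[n-k] in the prediction of x[n]
    and err is the final prediction error power. Cost is O(order**2) instead
    of the O(order**3) of Gaussian elimination.
    """
    a = [0.0] * (order + 1)  # a[0] is unused
    err = r[0]
    for m in range(1, order + 1):
        acc = r[m] - sum(a[k] * r[m - k] for k in range(1, m))
        k_m = acc / err  # reflection coefficient of stage m
        new_a = a[:]
        new_a[m] = k_m
        for k in range(1, m):
            new_a[k] = a[k] - k_m * a[m - k]
        a = new_a
        err *= 1.0 - k_m * k_m
    return a[1:], err


# Example: autocorrelation of a first-order process, r[k] = 0.5**k.
coeffs, residual = levinson_durbin([1.0, 0.5, 0.25], order=2)
print(coeffs, residual)

# Timing figures quoted in the abstract.
CLOCK_CYCLES = 40_000
refresh_200mhz_us = CLOCK_CYCLES / 200e6 * 1e6   # 200 MHz clock
refresh_180mhz_us = CLOCK_CYCLES / 180e6 * 1e6   # 180 MHz clock
slowdown = refresh_180mhz_us / refresh_200mhz_us - 1.0
print(f"{refresh_200mhz_us:.0f} us at 200 MHz, {slowdown:.0%} longer at 180 MHz")
```

The last lines reproduce the ~200 µs refresh time and show that running at 180 MHz costs about 11%, consistent with the "~10%" stated in the abstract.
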

-

69

A High Frame Rate Test System for The HEPS-BPIX based on NI-sbRIO Board

HEPS-BPIX is a pixel detector designed for the High Energy Photon Source (HEPS) in China. As a hybrid pixel detector, it consists of a silicon sensor and a readout chip which is bump-bonded to the sensor with indium. The detector contains an array of 104×72 pixels, and each pixel measures 150 μm×150 μm. Each pixel of the readout chip comprises a preamplifier, a discriminator and a counter. Aimed at X-ray imaging, HEPS-BPIX works in single photon counting mode, and the counting depth of every pixel is 20 bits. The test system of the detector, which implements all the control, calibration, readout and real-time imaging, has been developed based on the NI-sbRIO board (sbRIO-9626). The field programmable gate array (FPGA) of the NI-sbRIO board deserializes the data from the pixel array and transmits the clock as well as the serial configuration data to the detector. The FPGA firmware and the simple data acquisition (DAQ) system have been designed in the LabVIEW environment to shorten development time. Using the LabVIEW-programmed DAQ software, the test system can control the signal generator over Ethernet to calibrate the detector automatically. Meanwhile, it can monitor the real-time image and change the configuration data to make debugging much easier. The test system has been used for the X-ray test and the beam line test of the detector. A series of X-ray images have been taken and a high frame rate of 1.2 kHz has been achieved. This paper will give the details of the test system and present results on the performance of the HEPS-BPIX.

Speakers: Jie Zhang (Institute of High Energy Physics, Chinese Academy of Sciences), Jingzi Gu (Institute of High Energy Physics, Chinese Academy of Sciences)
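The pixel count, counter depth and frame rate given in this abstract determine the raw readout bandwidth. The Python sketch below multiplies them out; it assumes that every 20-bit counter is read out in full for every frame, with no packing overhead or compression, which the abstract does not specify.

```python
ROWS, COLS = 104, 72        # pixel array
COUNTER_BITS = 20           # counting depth per pixel
FRAME_RATE_HZ = 1200        # achieved frame rate: 1.2 kHz

pixels = ROWS * COLS
bits_per_frame = pixels * COUNTER_BITS

# Assumption: full counters are shipped for every frame, without overhead.
payload_mbit_s = bits_per_frame * FRAME_RATE_HZ / 1e6

print(f"{pixels} pixels, {bits_per_frame / 8:.0f} bytes per frame")
print(f"raw payload: {payload_mbit_s:.1f} Mb/s")
```
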

-

70

A Hybrid Analog-digital Integrator for EAST Device

A hybrid analog-digital integrator has been developed to be compatible with the long pulse plasma discharges on the Experimental Advanced Superconducting Tokamak (EAST). A pair of analog integrators is used to integrate the input signal alternately, to reduce the error caused by the leakage of the integration capacitors, and the outputs of the two integrators are combined into a continuous integration signal by a Field Programmable Gate Array (FPGA) built into the digitizer. The integration drift is almost linear and stable at controlled temperature, so a period of typically 50 s is used to determine the effective drift slope, which is used to correct the integration signal in real time. The data integrated in the internal FPGA can be transferred directly into the reflective memory installed in the same PCI eXtensions for Instrumentation (PXI) chassis. The test results show that the processed integration drift is less than 200 µVs during 1000 s of integration, which will meet the accuracy requirements of magnetic diagnostics in EAST experimental campaigns.

Speaker: Dr Yong Wang (Institute of Plasma Physics, Chinese Academy of Sciences)
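The drift correction described in this abstract, measuring a linear drift slope over a calibration period and subtracting it in real time, can be sketched as follows in Python. This is an illustrative software model only: the least-squares fit, the function names and the synthetic drift value are assumptions, and the abstract does not say how the slope is actually estimated in the FPGA.

```python
def estimate_drift_slope(times_s, values_vs):
    """Least-squares slope of integrator output versus time (units: Vs per s).

    Intended for a calibration window with no input signal, so that any
    change of the integrator output is drift.
    """
    n = len(times_s)
    mean_t = sum(times_s) / n
    mean_v = sum(values_vs) / n
    num = sum((t - mean_t) * (v - mean_v) for t, v in zip(times_s, values_vs))
    den = sum((t - mean_t) ** 2 for t in times_s)
    return num / den


def correct_drift(t_s, raw_vs, slope, t0_s=0.0):
    """Remove the linear drift accumulated since t0_s from a raw sample."""
    return raw_vs - slope * (t_s - t0_s)


# Synthetic calibration window of 50 s with a made-up drift of 2e-6 Vs/s.
TRUE_SLOPE = 2e-6
cal_times = [float(t) for t in range(51)]
cal_values = [TRUE_SLOPE * t for t in cal_times]
slope = estimate_drift_slope(cal_times, cal_values)

# Later sample: a true flux of 0.5 Vs plus drift accumulated over 1000 s.
raw_sample = 0.5 + TRUE_SLOPE * 1000.0
corrected = correct_drift(1000.0, raw_sample, slope)
print(f"estimated slope: {slope:.2e} Vs/s, corrected value: {corrected:.6f} Vs")

# Residual drift rate implied by the quoted result (200 uVs over 1000 s).
residual_rate_uvs_per_s = 200.0 / 1000.0
```
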

-

71

A new electronic board to drive the Laser calibration system of the ATLAS hadron calorimeter

The LASER calibration system of the ATLAS hadron calorimeter aims at monitoring the ~10000 PMTs of the TileCal. The LASER light injected into the PMTs is measured by sets of photodiodes at several stages of the optical path. The photodiodes are monitored by a redundant internal calibration system using an LED, a radioactive source, and a charge injection system. The LASer Calibration Rod (LASCAR) electronics card is a major component of the LASER calibration scheme. Housed in a VME crate, its main components include a charge ADC, a TTCRx, a HOLA part, an interface to control the LASER, and a charge injection system. The 13-bit ADC is a 2000 pC full-scale converter that processes up to 16 signals stemming from 11 photodiodes, 2 PMTs, and 3 charge injection channels. Two gains (x1 and x4) are used to increase the dynamic range and avoid saturation of the LASER signal at high intensities. The TTCRx chip (designed by CERN) retrieves LHC signals to synchronize the LASCAR card with the collider. The HOLA mezzanine (also designed by CERN) transmits LASER data fragments (e.g. the digitized signals from the photodiodes) to the DAQ of ATLAS. The interface part is used during pp collisions, when the LASER is flashed in empty bunch-crossings. A time correction may then be performed, depending on the LASER intensity requested. The charge injection part aims at monitoring the linearity of the photodiode preamplifiers by injecting a signal of 5 V maximum with a 16-bit dynamic range. All these features are managed by a field-programmable gate array (FPGA Cyclone V) and a microcontroller (Microchip pic32) equipped with an Ethernet interface to the Detector Control System (DCS) of ATLAS.

Speaker: Philippe Gris (Univ. Blaise Pascal Clermont-Fe. II (FR))

-

72

A Time-to-Digital Converter Based on a Digitally Controlled Oscillator

Time measurements play a crucial role in the trigger and data acquisition (TDAQ) systems of High Energy Physics (HEP) experiments, where calibration, synchronization between signals and accurate phase measurements are often required. Although the various elements of a time measurement system are typically designed using a classical mixed-signal approach, state-of-the-art research is also focusing on all-digital architectures. The mixed-signal approach can reach better performance, but it requires more development time than a fully digital design. Moreover, porting analog IP blocks to a new technology typically requires a significant design effort compared with digital IPs. In this work, we present a fully digital time-to-digital converter (TDC) application based on a synthesizable digitally controlled oscillator (DCO), in which the TDC measures the phase relationship between a timing signal and a 40 MHz reference clock. The DCO design is technology-independent: it is described in a hardware description language and can be placed and routed with automatic tools. We present the TDC architecture, the DCO performance and the results of a preliminary implementation on a 130 nm ASIC prototype, in terms of output jitter, power consumption, frequency range, resolution, linearity and differential non-linearity. The TDC will be used in the new readout chip under development for the upgrade of the muon detector electronics of the LHCb experiment at CERN. Its fundamental task is to measure the phase difference between the 40 MHz LHC machine clock and a digital signal coming from the muon detector, so that the detector signal can be phase-shifted according to the resolution required by the experiment.

Speaker: Luigi Casu (Universita e INFN (IT))

-

73

Adaptive IIR-notch filter for RFI suppression in a radio detection of cosmic rays

Radio stations can observe radio signals caused by coherent emissions due to geomagnetic radiation and charge excess processes. The Auger Engineering Radio Array (AERA) observes the frequency band from 30 to 80 MHz. This range is highly contaminated by human-made RFI. To improve the signal-to-noise ratio, AERA uses RFI filters to suppress this contamination. AERA uses IIR notch filters that operate with fixed parameters and suppress four narrow bands. They do not adapt to new sources of RFI such as walkie-talkies, mobile communicators and other human-made transmitters. To increase the efficiency of the self-trigger, the signal should be cleaned of RFI. One source of RFI is narrow-band transmitters. This type of RFI can be significantly suppressed by digital filters after the signal is digitized in the ADCs. IIR filters are potentially unstable because of feedback; however, they are much shorter and more power-efficient than FIR filters. We implemented a NIOS virtual processor that calculates new sets of IIR filter coefficients, which are reloaded dynamically on the fly. The spectrum analysis of the 30-80 MHz band is supported by the Altera FFT IP Core. The NIOS adjusts the new coefficients so that the poles of the filter lie inside the unit circle in the complex plane (the stability condition), and it also tunes the width of the notch. The practical implementation was tested in the laboratory with signal and pattern generators as well as with an LPDA antenna and LNA, the set used in real AERA radio stations.

Speaker: Zbigniew Szadkowski (University of Lodz)
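The stability condition mentioned in the AERA abstract can be illustrated with a textbook second-order IIR notch, whose zeros sit on the unit circle at the notch frequency and whose poles sit at radius r < 1 at the same angle. This is a generic sketch, not the coefficient algorithm running on the NIOS processor; the sampling frequency, notch frequency and pole radius below are hypothetical values chosen for the example.

```python
import math


def notch_coefficients(f0_hz, fs_hz, pole_radius):
    """Second-order notch at f0_hz; pole_radius < 1 keeps the poles inside
    the unit circle, and values closer to 1 give a narrower notch."""
    if not 0.0 <= pole_radius < 1.0:
        raise ValueError("pole radius must satisfy 0 <= r < 1 for stability")
    w0 = 2.0 * math.pi * f0_hz / fs_hz
    b = (1.0, -2.0 * math.cos(w0), 1.0)                               # zeros at exp(+-j*w0)
    a = (1.0, -2.0 * pole_radius * math.cos(w0), pole_radius ** 2)    # poles at r*exp(+-j*w0)
    return b, a


def iir_filter(b, a, samples):
    """Direct-form I biquad: y[n] = b0*x[n] + b1*x[n-1] + b2*x[n-2] - a1*y[n-1] - a2*y[n-2]."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x0 in samples:
        y0 = b[0] * x0 + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        out.append(y0)
        x2, x1 = x1, x0
        y2, y1 = y1, y0
    return out


FS = 200e6      # hypothetical ADC sampling rate
F_RFI = 55e6    # hypothetical narrow-band transmitter inside 30-80 MHz
b, a = notch_coefficients(F_RFI, FS, pole_radius=0.95)

rfi = [math.sin(2.0 * math.pi * F_RFI * n / FS) for n in range(2000)]
passband = [math.sin(2.0 * math.pi * 40e6 * n / FS) for n in range(2000)]
rfi_out = iir_filter(b, a, rfi)
passband_out = iir_filter(b, a, passband)
print(max(abs(v) for v in rfi_out[-500:]), max(abs(v) for v in passband_out[-500:]))
```

An adaptive scheme would recompute `b` and `a` whenever the spectrum analysis finds a new narrow-band peak, rejecting any coefficient set whose pole radius reaches 1.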

-

74

An Extensible Induced Position Encoding Readout Method for Micro-pattern Gas Detectors

The large number of electronics channels required has become an obstacle to further applications of Micro-pattern Gas Detectors (MPGDs), and poses a big challenge for integration, power consumption, cooling and cost. The induced position encoding readout technique provides an attractive way to significantly reduce the number of readout channels. In this paper, we present an extensible induced position encoding readout method for MPGDs. The method is demonstrated using the Eulerian path from graph theory. A standard encoding rule is provided, and a general formula for encoding and decoding with n channels is derived. Based on this method, a one-dimensional induced position encoding readout prototype board is designed for a 5×5 cm² Thick Gas Electron Multiplier (THGEM), in which 47 anode strips are read out by 15 encoded multiplexing channels. Verification tests are carried out with an 8 keV Cu X-ray source and a 100 μm slit. The test results show that the method is feasible and robust, with good spatial resolution and linearity in its position response. The method can dramatically reduce the number of readout channels, has the potential to be used in large-area detectors, and can be easily adapted to other detectors similar to MPGDs.

Speakers: Mr Guangyuan YUAN (State Key Laboratory of Particle Detection and Electronics, University of Science and Technology of China, Hefei 230026, China; Department of Modern Physics, University of Science and Technology of China, Hefei 230026, China), Mr Siyuan MA (State Key Laboratory of Particle Detection and Electronics, University of Science and Technology of China, Hefei 230026, China; Department of Modern Physics, University of Science and Technology of China, Hefei 230026, China)
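The idea of an Eulerian-path encoding can be sketched as follows: if the channel assigned to each strip follows an Eulerian walk on the complete graph of n channels, every pair of neighbouring strips maps to a distinct pair of channels, so a hit shared between two adjacent strips identifies its position uniquely. The code below is a generic illustration under that assumption, using Hierholzer's algorithm; it is not the encoding rule or formula derived in the paper, and the function names are hypothetical.

```python
def eulerian_strip_sequence(n_channels):
    """Channel index for each strip, following an Eulerian circuit on the
    complete graph K_n (n odd, so every vertex has even degree)."""
    if n_channels < 3 or n_channels % 2 == 0:
        raise ValueError("this sketch needs an odd number of channels >= 3")
    unused = {v: set(range(n_channels)) - {v} for v in range(n_channels)}
    stack, circuit = [0], []
    while stack:                       # iterative Hierholzer algorithm
        v = stack[-1]
        if unused[v]:
            u = min(unused[v])         # deterministic choice of next edge
            unused[v].remove(u)
            unused[u].remove(v)
            stack.append(u)
        else:
            circuit.append(stack.pop())
    return circuit[::-1]


def build_decoder(strip_channels):
    """Map each unordered pair of channels on adjacent strips to the index
    of the gap between those strips."""
    return {frozenset(pair): i
            for i, pair in enumerate(zip(strip_channels, strip_channels[1:]))}


full = eulerian_strip_sequence(15)     # 15 channels, as in the prototype
strips = full[:47]                     # first 47 strips of the walk
decoder = build_decoder(strips)
print(len(full), len(decoder))
```

With 15 channels the full circuit covers 105 channel pairs, so the 47 strips of the prototype use only part of the available code space in this sketch.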

-

75

An I/O Controller for Real Time Distributed Tasks in Particle Accelerators

SPES is a second-generation ISOL radioactive ion beam facility under construction at the INFN National Laboratories of Legnaro (LNL). Its distributed control system embeds custom control in almost all instruments or clusters of homogeneous devices. Nevertheless, standardization is an important issue that affects modularity and long-term maintainability for a facility with a life span of at least twenty years. In this context, the research project presented in this paper focuses on the design of a custom IOC (Input Output Controller) that acts as a local intelligent node in the distributed control network and is generic enough to perform several different tasks, ranging from security and surveillance operations to beam diagnostics, data acquisition and data logging, real-time processing and trigger generation. The IOC exploits the COM (Computer On Module) Express standard, which is available in different form factors and with different processors, fulfilling the computational power requirements of varied applications. The Intel x86-64 architecture makes software development straightforward and eases portability. The result is a custom motherboard with several application-specific features and generic PC functionalities. The design is modular to a certain extent, thanks to a hardware abstraction layer, and allows the development of soft and hard real-time applications by means of a real-time operating system and an on-board FPGA closely coupled to the CPU. Three PCIe slots, an FPGA Mezzanine Card (FMC) connector and several general-purpose digital/analog inputs/outputs enable functionality extensions. An optical fiber link connected to the FPGA provides a high-speed interface for high-throughput data acquisition or timing-sensitive applications. The power distribution complies with the AT standard, and the whole board can be supplied via Power over Ethernet (PoE+, IEEE 802.3at standard). Networking and device-to-cloud connectivity are guaranteed via a gigabit Ethernet link. The design, the performance of the prototypes and the intended usage will be presented.

Speakers: Dr Davide Pedretti (Universita e INFN, Legnaro (IT)), Dr Stefano Pavinato (INFN - National Institute for Nuclear Physics)

-

76

An α/γ Discrimination Method for BaF2 Detector by FPGA-based Linear Neural Network

A pulse shape discrimination (PSD) method based on a linear neural network is proposed for separating α- and γ-induced events in a BaF2 crystal.

An artificial linear neural network was designed to identify α- and γ-induced events in the BaF2 crystal. Its inputs are several pulse features: pedestal, amplitude, gradient, long/short amplitude integral, and the number of samples over threshold. The network output, a two-element vector (Y1, Y2), indicates which of three types the input pulse belongs to: α-induced, γ-induced or noise. The linear neural network is trained in Matlab using 40000 BaF2 detector pulses, and the desired optimality is achieved. We then implement the network in a Spartan-6 FPGA using the weight matrix of the neurons.
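A linear network of this kind reduces to one matrix-vector product, which is what makes it convenient to implement in an FPGA. The sketch below shows the forward pass and one possible way to turn the two-element output into one of three classes. The target codes, the nearest-target decision rule, and the weights and feature values are all hypothetical; the abstract does not specify how (Y1, Y2) encodes the three pulse types.

```python
# Hypothetical target codes for the two-element output vector (Y1, Y2).
TARGETS = {
    "alpha": (1.0, 0.0),
    "gamma": (0.0, 1.0),
    "noise": (0.0, 0.0),
}


def linear_layer(weights, bias, features):
    """Y = W * x + b for a single linear layer (one row of W per output)."""
    return tuple(
        sum(w * f for w, f in zip(row, features)) + b_i
        for row, b_i in zip(weights, bias)
    )


def classify(output):
    """Pick the class whose (hypothetical) target code is nearest to the output."""
    def squared_distance(target):
        return sum((o - t) ** 2 for o, t in zip(output, target))
    return min(TARGETS, key=lambda name: squared_distance(TARGETS[name]))


# Toy weights for two features only; a real network would use the trained
# weight matrix and all the pulse features listed in the abstract.
W = [[1.0, 0.0],
     [0.0, 1.0]]
B = (0.0, 0.0)

y = linear_layer(W, B, (0.9, 0.1))
print(y, classify(y))
```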

We built a signal digitization and real-time discrimination system based on this method. A 1 GSPS ADC samples the BaF2 detector signal. Once triggered, a pulse sequence of 2K sampling points is sent to a data processing module, and the pulse features are extracted in the FPGA as inputs to the linear neural network. The output vector is then transferred to a PC, and a graphical analysis shows that the α-induced events, γ-induced events and noise are well separated.

To evaluate the performance of this system, a coincidence test was carried out. A LaBr3 detector provides a second input to the digitization system, and a collimated 22Na γ-source is placed between the BaF2 and LaBr3 crystals. Owing to the two-photon radiation of 22Na, a self-triggered event in the BaF2 detector in coincidence with a γ-ray in the LaBr3 crystal should also be γ-induced, except for accidental coincidences. Among 5000 two-detector coincidence events, 28 BaF2 events are identified as α-induced by the system; the false coverage rate is below 0.5% once the chance of accidental coincidence is taken into account. The test verifies the effectiveness and feasibility of this system.

Speaker: Mr Chenfei Yang (1. State Key Laboratory of Particle Detection and Electronics, University of Science and Technology of China, Hefei 230026, China; 2. Department of Modern Physics, University of Science and Technology of China, Hefei 230026, China)

-

77