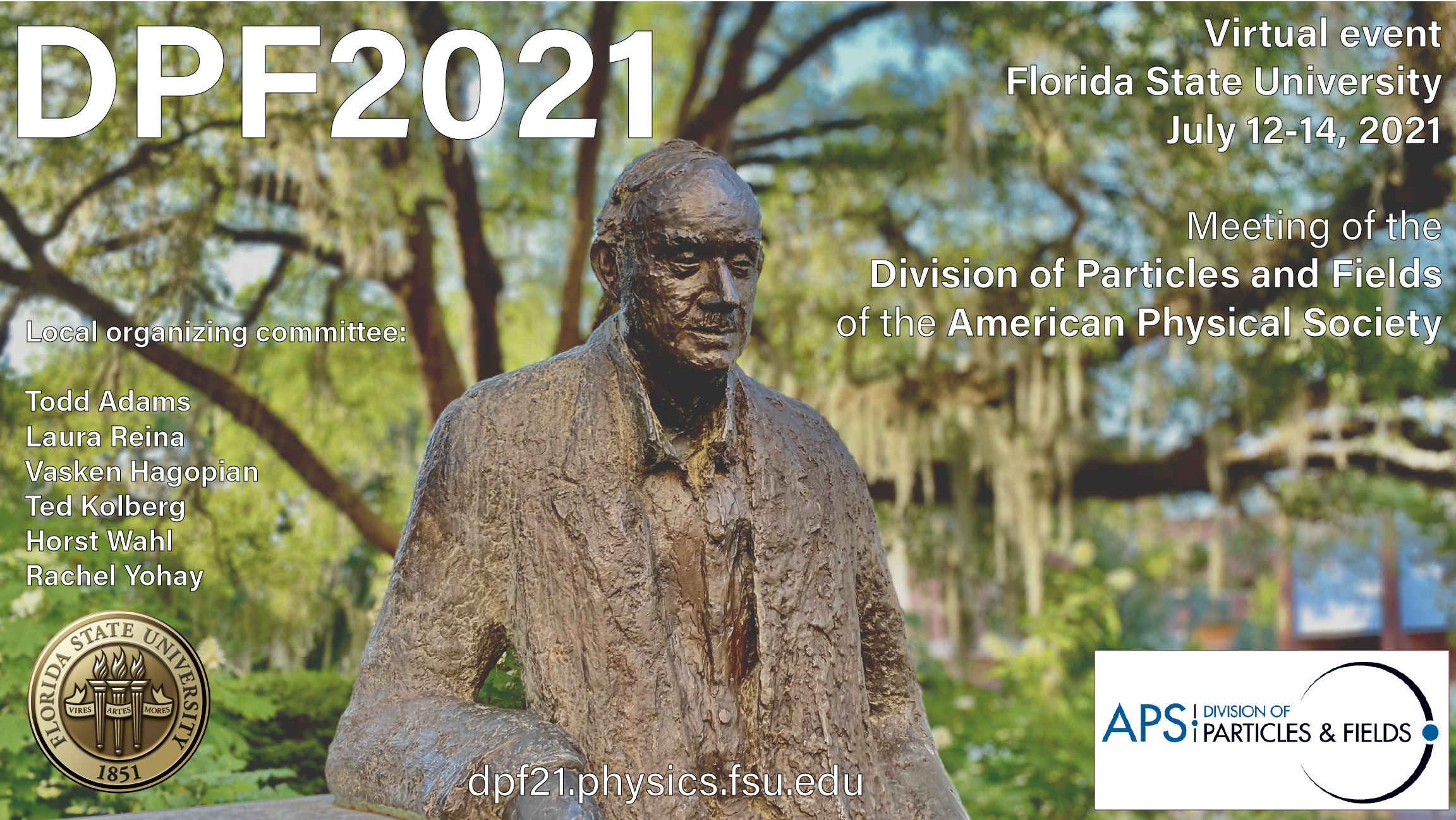

2021 Meeting of the Division of Particles and Fields of the American Physical Society (DPF21)

Zoom

Thank you for making this a successful meeting!

Recordings of presentations are now available for most talks. The timetable lists all talks; each contribution whose speaker gave permission should have a link labelled "Recording".

There will be no proceedings for DPF21.

Registration and abstract submission are now closed.

The APS Division of Particles & Fields (DPF) Meeting brings the members of the Division together to review results and discuss future plans and directions for our field. It is an opportunity for attendees, especially young researchers, to present their findings. The meeting opened each day with plenary sessions, followed by selected community sessions and then parallel sessions to complete the day.

Topics covered included: LHC Run 2 Results; LHC Run 3 and HL-LHC Projections; Accelerators & Detectors; Computing, Machine Learning, and AI; Quantum Computing and Sensing; Electroweak & Top Quark Physics; Higgs Physics; QCD & Heavy Ion Physics; Rare Processes and Precision Measurements; Neutrino Physics; Physics Beyond the Standard Model; Particle Astrophysics; Dark Matter; Cosmology & Dark Energy; Gravity & Gravitational Waves; Lattice Gauge Theory; Field & String Theory; Outreach & Education; Diversity, Equity, & Inclusion.

DPF2021 was held as a virtual-only event via Zoom. It was hosted by the Florida State University High Energy Physics group, with the scientific program determined by the DPF Program Committee.

There was no Registration Fee. You do not need to be a member of APS to register or to submit an abstract.

Support for this meeting was provided by the FSU Office of Research, the FSU College of Arts and Sciences, the FSU Physics Department, and the FSU High Energy Physics group.

Follow the meeting on Twitter @apsdpf2021 or #apdpf2021.

Plenary Sessions: Monday Plenary Session

Plenary Sessions

Zoom

Convener: R. Sekhar Chivukula (UC San Diego)

1

Welcome

Speakers: R. Sekhar Chivukula (UC San Diego), Todd Adams (Florida State University (US))

2

Hints of lepton flavor non-universality in b decays

Experimental hints of lepton flavor non-universality in the decays of b-hadrons are an exciting sign of possible new physics beyond the Standard Model. Results from multiple experiments indicate that electrons, muons, and tau leptons may differ in more than just their masses. I will review the experimental situation of these “b-anomalies”, including recent developments and prospects for the near future. Further results from LHCb, Belle II, and other experiments in the coming years should be able to confirm or rule out the presence of new physics in these decays.

Speaker: Matthew Scott Rudolph (Syracuse University (US))

3

Recent Progress in Measuring Mixing and CP Violation in B-meson Decays

This talk will review the role of the CKM matrix in governing meson-antimeson oscillations and CP violation in the Standard Model. Recent measurements of B_s oscillations and decays by LHCb, CMS, and ATLAS will be discussed in this context, as will measurements of CP violation in B_d and B_u decays. The direct measurements of the CKM angle gamma (from decays produced by tree-level amplitudes) are combined, and the resulting value is compared to that determined indirectly.

Speaker: Michael David Sokoloff (University of Cincinnati (US))

4

Hints for flavorful new physics

Flavor physics addresses two complementary questions. First, what is the origin of the hierarchical flavor structure of the Standard Model quarks and leptons? Second, are there sources of flavor and CP violation beyond the Standard Model? I will discuss recent theoretical developments in this area, focusing mainly on the so-called "B-anomalies": persistent hints for the violation of lepton flavor universality in decays of B mesons. I will review the status of the anomalies, discuss possible new physics explanations, and outline the prospects of resolving the anomalies with expected experimental data.

Speaker: Wolfgang Altmannshofer (UC Santa Cruz)

5

The value of improving HEP outreach and how to participate

HEP is funded almost entirely with public funds. Since many organizations wish to receive federal funds, it is imperative that the HEP community raise its visibility among the general public. However, the HEP community has long neglected outreach to the public. In this talk, I will discuss the importance of outreach, describe some of the methods that work, and mention some programs that the Snowmass process is developing to make it easier for individuals to communicate with the public.

Speaker: Don Lincoln (Fermilab)

12:10

Break

Community Session: Awards Ceremony and Diversity & Inclusion

Community Sessions

Zoom

Convener: Tao Han (University of Pittsburgh)

6

Welcome

Speaker: Tao Han (University of Pittsburgh)

7

Review of DPF APS Fellows 2019 and 2020

Speaker: Tao Han (University of Pittsburgh)

8

DPF Instrumentation Award

Speakers: Karsten Heeger (Yale University), Tao Han (University of Pittsburgh)

9

DPF Mentor Award 2019 - Tim Tait

Speaker: Tim M.P. Tait (University of California, Irvine)

10

DPF Mentor Award 2020 - Tesla Jeltema

Speaker: Tesla Jeltema (University of California, Santa Cruz)

11

What Is The "DELTA-PHY" initiative?

Many have observed that, for organizations, there is evidence to support the belief that culture is destiny. During the summer of 2020, the DELTA-PHY initiative was launched as an effort for the APS to deliberate upon, and if needed move to transform, its culture. It does this by asking three key questions: (a.) What are the values of the APS? (b.) Aside from producing world-class physics, what are the inputs, outputs, practices, and traditions of the APS? (c.) Do the answers to these questions align with one another, and are they in alignment with the APS 2019 strategic plan? DELTA-PHY activities are envisioned to be timely and to cut across the society's 'stove pipe' structures.

Speaker: S. James Gates (Brown University)

12

APS Advocacy on Research Security

Speaker: Mark Elsesser (American Physical Society)

14:00

Break

Beyond Standard Model: BSM 1A

Track A

Zoom

Conveners: Elodie Deborah Resseguie (Lawrence Berkeley National Lab. (US)), Sabyasachi Chakraborty (Florida State University), Tova Ray Holmes (University of Tennessee (US))

14:30

Break

13

Search for chargino pair-production and chargino-neutralino production with $R$-Parity Violating decays in pp collisions at $\sqrt{s}$= 13 TeV with ATLAS

A search is presented for chargino pair-production and chargino-neutralino production, where the almost mass-degenerate chargino and neutralino each decay via $R$-Parity-violating couplings to a boson ($W/Z/H$) and a charged lepton or neutrino. This analysis searches for a trilepton invariant mass resonance in data corresponding to an integrated luminosity of 139 fb$^{-1}$ recorded in proton-proton collisions at $\sqrt{s}$ = 13 TeV with the ATLAS detector at the Large Hadron Collider at CERN.

Speaker: Lucas Macrorie Flores (University of Pennsylvania (US))

14

Search for top squark production in fully-hadronic final states in proton-proton collisions at sqrt(s)=13 TeV

A search for production of the supersymmetric partners of the top quark, top squarks, is presented. The search is based on proton-proton collision events containing multiple jets, no leptons, and large transverse momentum imbalance. The data were collected with the CMS detector at the CERN LHC at a center-of-mass energy of 13 TeV, and correspond to an integrated luminosity of 137 fb-1. The targeted signal production scenarios are direct and gluino-mediated top squark production, including scenarios in which the top squark and neutralino masses are nearly degenerate. The search utilizes novel algorithms based on deep neural networks that identify hadronically decaying top quarks and W bosons, which are expected in many of the targeted signal models. No statistically significant excess of events is observed relative to the expectation from the standard model, and limits on the top squark production cross section are obtained in the context of simplified supersymmetric models for various production and decay modes.

Speaker: Anna Henckel Merritt (University of Illinois at Chicago (US))

15

Searching for Electroweak Supersymmetry with the ATLAS Detector

With several recent anomalies in tension with the Standard Model, and with no clear roadmap to the source of new physics, this is an exciting time to search for new particles at the LHC. Supersymmetry (SUSY) is an elegant solution to many of the Standard Model's mysteries, and SUSY models with electroweakly produced sparticles are particularly interesting as possible explanations of the muon g-2 anomaly, the observed dark-matter density, and more. ATLAS has a rich program of complementary electroweak SUSY searches. The latest Run 2 results, using 139 fb$^{-1}$ of 13 TeV proton-proton collision data, are discussed; they shed light on where new physics may be found, such as in the three-lepton final state.

Speaker: Jeff Dandoy (University of Pennsylvania (US))

16

Updated status of gluino searches in the pMSSM in light of the latest LHC data and Dark Matter constraints

The Minimal Supersymmetric Standard Model (MSSM) is one of the most well-motivated and well-studied scenarios beyond the Standard Model (SM). Apart from solving the hierarchy problem, one of its primary motivations is the presence of a suitable dark matter (DM) candidate, the lightest neutralino, in the SUSY particle spectrum. The measurement of the DM relic density of the universe by WMAP/Planck places the model under scrutiny. In addition, stringent constraints on the masses of strongly interacting sparticles have been placed by the Large Hadron Collider (LHC) experiments by analysing Run II data within specific simplified models. However, many assumptions made by the experimental collaborations cannot be realized in actual theoretically motivated models. In this study, we revisit the bound on the gluino mass placed by the ATLAS collaboration. We show that the exclusion region in the $M_{\widetilde{g}}-M_{\widetilde{\chi}^0_1}$ plane shrinks in the pMSSM scenario for different hierarchies of the left- and right-handed squark mass parameters. Importantly, for higgsino-like lighter electroweakinos, the bound on the gluino mass from the 1l + jets + MET search practically vanishes. We have also performed a detailed analysis of neutralino dark matter and find that, over most of the LSP mass range, the required relic density is achieved and both the direct and indirect detection constraints are satisfied.

Speaker: Abhi Mukherjee (University of Kalyani)

17

Search for Gluino-Mediated Stop Pair Production in Events with b-jets and Large Missing Transverse Momentum Collected with the ATLAS Detector

A search for supersymmetry involving the pair production of gluinos decaying via top squarks into the lightest neutralino $\tilde{\chi}^{0}_{1}$ is reported. It uses LHC $pp$ collision data at $\sqrt{s} = 13$ TeV with an integrated luminosity of 139 fb$^{-1}$, collected with the ATLAS detector in 2015-2018. The search is performed in events containing large missing transverse momentum and several energetic jets, at least three of which must be identified as originating from b-quarks. The analysis uses two final states: one requires at least one charged lepton (electron or muon), and the other requires a lepton veto. The expected exclusion limit on the gluino and neutralino masses is evaluated using a simplified signal model. At the $95\%$ confidence level, gluino masses below 2275 GeV are expected to be excluded for neutralino masses of 800 GeV and below.

Speaker: Egor Antipov (Oklahoma State University (US))

14:30

Beyond Standard Model: BSM 1G

Track G

Zoom

Conveners: Ben Carlson (University of Pittsburgh), Pavel Fileviez Perez (Case Western Reserve University), Ryan Sangwoo Kim (Florida State University (US))

18

New Contributions to Flavour Observables from Left-Right Symmetric Models with Universal Seesaw

A Left-Right Symmetric Model that uses vector-like fermions (VLFs) to generate fermion masses via a universal seesaw mechanism is studied. In this talk, I will present the latest results of our analysis of the flavor observables constraining the model. The Cabibbo anomaly can be readily resolved in this model, which in turn predicts the mass of the vector-like quarks. Further, I will discuss the possibility of explaining the neutral-current B-anomalies in this model.

Speaker: Ritu Dcruz (Oklahoma State University)

19

Search for Vector-Like-Quarks T/B in Same-Sign Dilepton and Multi-lepton Final States at $\sqrt{s}=13$ TeV

The discovery of a Higgs boson with mass near 125 GeV in 2012 marked one of the most important milestones in particle physics. The low mass of this Higgs boson, despite divergent loop corrections, adds motivation to look for new physics Beyond the Standard Model (BSM). Several BSM theories introduce new heavy quark partners, called vector-like quarks (VLQs), with masses at the TeV scale. In particular, the vector-like top quark (T) can cancel the largest correction, due to the top quark loop, which is one of the main contributions to the divergence, and thereby stabilize the scalar Higgs boson mass. This analysis searches for pair production of vector-like T or B quarks, with charge 2e/3 and -e/3 respectively, in proton-proton collisions at 13 TeV at the LHC. Theories predict three decay modes each: bW, tZ, and tH for T, and tW, bZ, and bH for B. The branching ratios vary across theoretical models. We focus on events in which the bosons decay leptonically, resulting in either a same-sign (SS) dilepton pair or multiple (three or more) leptons. We analyze data collected by the CMS detector at the LHC in 2017 and 2018, with integrated luminosities of 41.5 and 59.7 fb^{-1}, respectively. Besides Standard Model (SM) processes with the same final states, lepton misidentification contributes a significant part of the background in both the SS dilepton and multilepton channels and is estimated with a data-driven method. In addition, charge misidentification is another source of background in the SS dilepton channel, which is also estimated with a data-driven method. Comparing the estimated background with data, and considering uncertainties, we determine an upper limit on the TT or BB production cross section. We calculate limits at different T and B mass points and for different branching fraction combinations.

Speaker: Ms Jess Wong (Brown University (US))

20

Search for pair production of vector-like quarks in the Wb+X final state using the full Run 2 dataset of pp collisions at sqrt{s} = 13 TeV from the ATLAS detector

Vector-like quarks (VLQs) are predicted in many extensions of the Standard Model (SM), especially those aimed at solving the hierarchy problem. Their vector-like nature allows them to extend the SM while remaining compatible with electroweak sector measurements. In many models, VLQs decay to a SM boson and a third-generation quark. Because VLQs are pair-produced via quantum chromodynamics, pair production provides a model-independent way to search for them. This talk presents a search for pair production of vector-like top quarks that each decay into a SM W boson and a bottom quark, with one W boson decaying leptonically and the other hadronically. The analysis takes advantage of boosted boson identification and a data-driven correction of the dominant ttbar background prediction to improve sensitivity. Further, this analysis extends the sensitivity of the previous analysis by including the full 140 fb$^{-1}$ dataset of $pp$ collisions at $\sqrt{s}=$13 TeV collected with the ATLAS detector.

Speaker: Evan Richard Van De Wall (Oklahoma State University (US))

21

All-Hadronic search for Vector-Like Quarks using the BESTagger with 137 $fb^{-1}$ of $\sqrt{s}=13$ TeV proton-proton collisions collected by CMS

We present the status of our all-hadronic search for pair-produced Vector-Like Quarks (VLQs) using the Boosted Event Shape Tagger (BEST) with 137 fb$^{-1}$ of $\sqrt{s} = 13$ TeV proton-proton collisions collected by the CMS detector at the LHC. VLQs are motivated by models that predict compositeness of the scalar Higgs boson and that avoid increasing constraints from Higgs measurements. In the all-hadronic channel, this analysis is sensitive to all possible VLQ decay modes, T(B)->t(b)H/t(b)Z/b(t)W, capturing the highest branching fraction of each process. The high mass of the VLQs produces highly boosted objects in the final state, which can be reconstructed as anti-kt R=0.8 jets and identified as QCD/b/W/Z/H/t using the BESTagger. The tagger boosts jet constituents into various rest frames and uses neural networks to find correlations between event shape variables, such as Fox-Wolfram moments and sphericity, to determine the identification category. We define signal regions by the classification of the four highest-pT jets. We estimate the dominant QCD background with a data-driven 3-jet control region, then fit its normalization simultaneously with simulations of well-modeled subdominant backgrounds such as ttbar and W/Z+jets. The HT (scalar sum of $p_T$) of the event is scanned for an excess of signal in 120 of the 126 possible combinations, and the 6 least signal-rich combinations are used as validation regions for the QCD estimate. The analysis is in progress and is expected to be completed soon.
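One reading of the "126 possible combinations" (our assumption; the abstract does not spell it out) is that each of the four highest-$p_T$ jets receives one of the six BEST labels (QCD, b, W, Z, H, t) and the order of the jets is ignored. The number of such unordered assignments is the number of multisets of size 4 drawn from 6 labels:

$$
\binom{6+4-1}{4} = \binom{9}{4} = \frac{9\cdot 8\cdot 7\cdot 6}{4!} = \frac{3024}{24} = 126 ,
$$

which reproduces the quoted total; under this reading, 120 combinations are scanned for signal and 6 serve as validation regions.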

Speaker: Johan Sebastian Bonilla (University of California Davis (US))

22

Single Vector-Like quark production via chromo-magnetic moment at the LHC

In many models that address the naturalness problem, top-quark partners are postulated to cure the issue of quadratic corrections to the mass of the Higgs boson. In this work, we study alternative modes for the production of top- and bottom-quark partners ($T$ and $B$), $pp\rightarrow B$ and $pp\rightarrow T\bar{t}$, via a chromo-magnetic moment coupling. We adopt the simplest composite Higgs effective theory for the top-quark sector incorporating partial compositeness, and investigate the sensitivity of the 14 TeV LHC

Speaker: Xing Wang (UC San Diego)

23

Heavy leptons and the muon anomalous magnetic moment

The recently updated measurement of the muon anomalous magnetic moment strengthens the motivation for new particles beyond the Standard Model. We discuss two well-motivated 2HDM scenarios with vectorlike leptons, as well as the Standard Model extended with vectorlike lepton doublets and singlets, as possible explanations for the anomalous measurement. In these models we find that, with couplings of order 1, new leptons as heavy as 8 TeV can explain the anomaly, well out of reach of expectations for the LHC. We summarize the implications of future precision measurements of Higgs- and Z-boson couplings, which can provide indirect probes of these scenarios and of their viability as explanations of the anomalous magnetic moment of the muon.

Speaker: Dr Navin McGinnis (TRIUMF)

Computation, Machine Learning, and AI: COMP 1E

Track E

Zoom

Conveners: Aishik Ghosh (University of California Irvine (US)), Gordon Watts (University of Washington (US)), Horst Wahl (Florida State University)

24

Deep-Learned Event Variables for Collider Phenomenology

In this talk, we will introduce a technique to train neural networks to serve as good event variables, that is, variables that are useful to an analysis over a range of values of a model's unknown parameters.

We will use our technique to learn event variables for several common event topologies studied in colliders. We will demonstrate that the networks trained using our technique can mimic powerful, previously known event variables like invariant mass, transverse mass, and MT2.

We will describe how the machine learned event variables can go beyond the hand-derived event variables in terms of sensitivity, while retaining several attractive properties of event variables, including the robustness they offer against unknown modeling errors.
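For reference, the hand-derived variables mentioned above have simple closed forms. The following plain-Python sketch (function names are illustrative, not from the talk; units in GeV) computes the invariant mass $m^2 = (\sum E)^2 - |\sum \vec p|^2$ of a set of four-vectors and the transverse mass $m_T^2 = 2\,p_T\,E_T^{\rm miss}\,(1 - \cos\Delta\phi)$ for a massless visible particle plus missing transverse momentum. MT2 requires a numerical minimization over splittings of the missing momentum and is omitted here.

```python
import math
from typing import Iterable, Tuple

# (E, px, py, pz) in GeV; a massless particle has E = |p|.
FourVector = Tuple[float, float, float, float]


def invariant_mass(particles: Iterable[FourVector]) -> float:
    """Invariant mass of a system: m^2 = (sum E)^2 - |sum p|^2."""
    e = px = py = pz = 0.0
    for energy, x, y, z in particles:
        e += energy
        px += x
        py += y
        pz += z
    m2 = e * e - (px * px + py * py + pz * pz)
    return math.sqrt(max(m2, 0.0))  # clamp tiny negative values from rounding


def transverse_mass(pt_vis: float, phi_vis: float,
                    met: float, phi_met: float) -> float:
    """m_T for a massless visible particle plus missing transverse momentum."""
    m2 = 2.0 * pt_vis * met * (1.0 - math.cos(phi_vis - phi_met))
    return math.sqrt(max(m2, 0.0))
```

For example, two back-to-back massless 45.6 GeV particles give an invariant mass of 91.2 GeV, and a 40 GeV lepton recoiling back-to-back against 40 GeV of missing transverse momentum gives $m_T = 80$ GeV.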

Speaker: Prasanth Shyamsundar (Fermi National Accelerator Laboratory)

25

Unfolding ATLAS Collider Data with the Novel OmniFold Procedure

To compare theoretical calculations with collider data, the data must first be corrected for various detector effects, namely noise processes, detector acceptance, detector distortions, and detector efficiency; this process is called “unfolding” in high energy physics (or “deconvolution” elsewhere). While most unfolding procedures are carried out over only one or two binned observables at a time, OmniFold is a simulation-based maximum likelihood procedure that employs deep learning to perform unbinned, variable-dimensional, and high-dimensional unfolding. We apply OmniFold to a measurement of all charged-particle properties in $Z+$jets events using the full Run 2 $pp$ collision dataset recorded by the ATLAS detector, the first application of OmniFold to physical collider data.
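OmniFold is often described as an unbinned, multidimensional generalization of the classic binned Iterative Bayesian Unfolding (IBU) method. As a point of reference only, here is a minimal pure-Python sketch of binned IBU. It is not OmniFold (which replaces the histogram ratios with neural-network classifiers), and the function name `ibu` and the toy response matrix below are illustrative assumptions.

```python
from typing import List


def ibu(data: List[float], response: List[List[float]],
        prior: List[float], iterations: int = 4) -> List[float]:
    """Binned Iterative Bayesian Unfolding (D'Agostini-style).

    response[j][i] is P(reco bin j | truth bin i); a column may sum to
    less than 1 when events in truth bin i can be lost (inefficiency).
    """
    n_truth = len(prior)
    # Efficiency of each truth bin: probability of landing in any reco bin.
    eff = [sum(row[i] for row in response) for i in range(n_truth)]
    total = sum(prior)
    p = [x / total for x in prior]  # normalized prior over truth bins
    unfolded = [0.0] * n_truth
    for _ in range(iterations):
        unfolded = [0.0] * n_truth
        for j, d in enumerate(data):
            # Bayes' theorem: P(truth i | reco j) is proportional to
            # P(reco j | truth i) * prior_i.
            norm = sum(response[j][k] * p[k] for k in range(n_truth))
            if norm == 0.0:
                continue
            for i in range(n_truth):
                unfolded[i] += response[j][i] * p[i] / norm * d
        # Correct for inefficiency, then use the result as the next prior.
        unfolded = [u / e if e > 0 else 0.0 for u, e in zip(unfolded, eff)]
        s = sum(unfolded)
        if s == 0.0:
            break
        p = [u / s for u in unfolded]
    return unfolded
```

With the migration matrix `[[0.8, 0.2], [0.2, 0.8]]`, truth counts `[100, 50]` fold to `[90, 60]`; running `ibu([90, 60], R, [1, 1], iterations=500)` moves the estimate back to about `[100, 50]`. In practice, stopping after a few iterations acts as an implicit regularization of the unfolded result.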

Speaker: Adi Suresh (University of California, Berkeley)

26

Neural Empirical Bayes: Source Distribution Estimation and its Applications to Simulation-Based Inference

We examine the problem of unfolding in particle physics, or de-corrupting observed distributions to estimate underlying truth distributions, through the lens of Empirical Bayes and deep generative modeling. The resulting method, Neural Empirical Bayes (NEB), can unfold continuous multi-dimensional distributions, in contrast to traditional approaches that treat unfolding as a discrete linear inverse problem. We apply our method exclusively in settings without a tractable likelihood function, as is typical in scientific domains that rely on computer simulations. Moreover, combining NEB with suitable sampling methods allows posterior inference for individual samples, enabling reconstruction with uncertainty estimation.

Speaker: Maxime Noel Pierre Vandegar (SLAC National Accelerator Laboratory (US))

27

Progress towards a more sensitive CWoLa hunt with the ATLAS detector

As the search for physics beyond the Standard Model widens, 'model-agnostic' searches, which do not assume any particular model of new physics, are increasing in importance. One promising model-agnostic search strategy is Classification Without Labels (CWoLa), in which a classifier is trained to distinguish events in a signal region from similar events in a sideband region, thereby learning about the presence of signal in the signal region. The CWoLa strategy was recently used in a full search for new physics in dijet events from Run-2 ATLAS data; in this search, only the masses of the two jets were used as classifier inputs. It has since been observed that while CWoLa performs well in such low-dimensional use cases, difficulties arise when further jet features are added as classifier inputs. In this talk, we will describe ongoing work to address these problems and to extend the sensitivity of a CWoLa search by adding new observables to an ongoing analysis using $139$ $\text{fb}^{-1}$ of $pp$ collision data at $\sqrt{s}=$ 13 TeV recorded by the ATLAS detector. In particular, we will discuss the anticipated benefits of adding classifier features, as well as the implementation of a simulation-assisted version of CWoLa that makes the strategy more robust.
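The reason a classifier trained only on "signal region" versus "sideband" labels can find signal is a short likelihood-ratio argument from the original CWoLa proposal, as we understand it. Sketch, with notation introduced here for illustration: let the two regions be mixtures $p_{M_a} = f_a\,p_S + (1-f_a)\,p_B$ of signal and background densities with signal fractions $f_1 > f_2$, and assume the background density $p_B$ of the classifier inputs is the same in both regions. Dividing numerator and denominator by $p_B$,

$$
\frac{p_{M_1}(x)}{p_{M_2}(x)} = \frac{f_1\,L(x) + (1-f_1)}{f_2\,L(x) + (1-f_2)}, \qquad L(x) \equiv \frac{p_S(x)}{p_B(x)},
$$

$$
\frac{\partial}{\partial L}\,\frac{f_1 L + (1-f_1)}{f_2 L + (1-f_2)} = \frac{f_1 - f_2}{\left(f_2 L + 1 - f_2\right)^2} > 0 .
$$

Hence the optimal mixed-sample classifier is a monotonically increasing function of the signal-to-background likelihood ratio $L(x)$ and ranks events the same way a fully supervised classifier would; the argument relies on the equal-$p_B$ assumption stated above.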

Speaker: Kees Christian Benkendorfer (Lawrence Berkeley National Lab. (US))

28

Active Learning for Exclusion level set estimation with the ATLAS experiment

Excursion is a tool to efficiently estimate level sets of computationally expensive black-box functions using Active Learning. Excursion uses Gaussian Process Regression as a surrogate model for the black-box function. It queries the target function (black box) iteratively in order to increase the available information regarding the desired level sets. We implement Excursion using GPyTorch, which provides state-of-the-art fast posterior fitting techniques and takes advantage of GPUs to scale computations to higher dimensions.

In this talk, we demonstrate that Excursion significantly outperforms traditional grid search approaches, and we detail current work in progress on improving Exotics searches as an intermediate step towards the ATLAS Run 2 pMSSM scan on $pp$ collisions at $\sqrt{s}=$ 13 TeV with the ATLAS detector.

Speaker: Irina Espejo Morales (New York University (US))
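To make the active-learning loop concrete, here is a minimal, dependency-free Python sketch of Gaussian-process level-set estimation. It is not Excursion's code or API and does not use GPyTorch: the RBF kernel, its fixed length scale, the jitter, the candidate grid, and the "straddle" acquisition score $1.96\,\sigma(x) - |\mu(x) - t|$ (a standard level-set heuristic, swapped in here) are all illustrative assumptions. Each iteration refits the GP, queries the black box where the score is largest, and the level set is finally read off from where the posterior mean crosses the threshold $t$.

```python
import math
from typing import Callable, List, Sequence, Tuple

LENGTH = 0.5   # assumed RBF length scale (fixed, not fitted)
JITTER = 1e-6  # small diagonal term for numerical stability

Matrix = List[List[float]]


def rbf(a: float, b: float) -> float:
    """Squared-exponential (RBF) kernel with unit amplitude."""
    return math.exp(-0.5 * ((a - b) / LENGTH) ** 2)


def cholesky(K: Matrix) -> Matrix:
    """Lower-triangular L such that K = L L^T."""
    n = len(K)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = K[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def forward_solve(L: Matrix, b: Sequence[float]) -> List[float]:
    """Solve L y = b for lower-triangular L."""
    y: List[float] = []
    for i, bi in enumerate(b):
        y.append((bi - sum(L[i][k] * y[k] for k in range(i))) / L[i][i])
    return y


def fit(xs: Sequence[float], ys: Sequence[float]) -> Tuple[Matrix, List[float]]:
    """Factor the kernel matrix once; return L and w = L^{-1} y."""
    K = [[rbf(a, b) + (JITTER if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    L = cholesky(K)
    return L, forward_solve(L, ys)


def predict(L: Matrix, w: List[float], xs: Sequence[float],
            x: float) -> Tuple[float, float]:
    """GP posterior mean and standard deviation at x (zero prior mean)."""
    v = forward_solve(L, [rbf(x, a) for a in xs])  # v = L^{-1} k(x)
    mean = sum(vi * wi for vi, wi in zip(v, w))      # k^T K^{-1} y
    var = max(1.0 - sum(vi * vi for vi in v), 0.0)   # k(x,x) - k^T K^{-1} k
    return mean, math.sqrt(var)


def active_level_set(f: Callable[[float], float], threshold: float,
                     candidates: Sequence[float], init: Sequence[float],
                     n_iter: int) -> Tuple[List[float], List[float]]:
    """Iteratively query f where the straddle score is largest."""
    xs = list(init)
    ys = [f(x) for x in xs]
    for _ in range(n_iter):
        L, w = fit(xs, ys)
        sampled = set(xs)

        def straddle(x: float) -> float:
            mu, sigma = predict(L, w, xs, x)
            return 1.96 * sigma - abs(mu - threshold)

        best = max((x for x in candidates if x not in sampled), key=straddle)
        xs.append(best)
        ys.append(f(best))  # one expensive black-box evaluation per iteration
    return xs, ys


def crossings(xs: Sequence[float], ys: Sequence[float], threshold: float,
              grid: Sequence[float]) -> List[float]:
    """Where the posterior mean crosses the threshold (linear interpolation)."""
    L, w = fit(xs, ys)
    m = [predict(L, w, xs, x)[0] - threshold for x in grid]
    out = []
    for i in range(len(grid) - 1):
        if m[i] * m[i + 1] < 0:
            out.append(grid[i] + (grid[i + 1] - grid[i]) * m[i] / (m[i] - m[i + 1]))
    return out
```

In use, `active_level_set(math.sin, 0.5, grid, [0.0, 1.5, 3.0], n_iter=10)` on a grid over $[0, 3]$, followed by `crossings(...)`, should place the two boundary points near $\pi/6$ and $5\pi/6$, where $\sin x = 0.5$. A grid search would spend one expensive evaluation per grid point; the active loop spends its evaluations where the boundary is still uncertain.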

29

Recent Progress in ML for Tracker DQM

Data Quality Monitoring (DQM) is an important part of collecting high-quality data for physics analysis. Currently, the DQM workflow is manpower-intensive, requiring people to scrutinize and certify hundreds of histograms. Identifying good-quality, reliable data is necessary for accurate predictions and simulations, so detector anomalies must be identified promptly to minimize data loss. Machine Learning (ML) algorithms can automate alarms for anomalies or failures and make the data certification process more efficient. The Tracker DQM team at the CMS Experiment (at the LHC) has been working on designing and implementing ML features to monitor this complex detector. This contribution presents the recent progress in this direction.

Speaker: Guillermo Antonio Fidalgo Rodriguez (University of Puerto Rico at Mayagüez)

Cosmology and Dark Energy: COS 1J

Track J

Zoom

Conveners: Kevin Huffenberger (Florida State University), Rachel Yohay (Florida State University (US))

30

Dark Energy Spectroscopic Instrument: Science Overview

Some of the open questions in fundamental physics can be addressed by looking at the distribution of matter in the Universe as a function of scale and time (or redshift). We can study the nature of dark energy, which causes the accelerated expansion of the Universe. We can measure the sum of the neutrino masses, and potentially determine their hierarchy. We can test the standard model at energies higher than those accessible in the laboratory by studying the primordial density perturbations. The Dark Energy Spectroscopic Instrument (DESI) has just started a 5-year program to generate the largest and most accurate 3D map of the distribution of galaxies and quasars. By measuring the statistical properties of these catalogs, DESI will be able to reconstruct the expansion history of the Universe over the last 11 billion years, while making precise measurements of the growth of structure. In this presentation, I will review the forecasted performance of the DESI survey and show how it will dramatically improve our understanding of dark energy, inflation, and the mass of the neutrinos.

Speaker: Andreu Font-Ribera (Institut de Física d'Altes Energies (IFAE))

31

Dark Energy Spectroscopic Instrument: Instrumentation Overview

The Dark Energy Spectroscopic Instrument (DESI) has embarked on an ambitious survey to explore the nature of dark energy with spectroscopic measurements of 35 million galaxies and quasars in just five years. DESI will determine precise redshifts and employ the Baryon Acoustic Oscillation method to measure distances from the local universe to beyond 11 billion light years, as well as employ Redshift Space Distortions to measure the growth of structure and probe potential modifications to general relativity. In this presentation I will describe the instrumentation we developed to conduct the DESI survey, as well as the flowdown from the science requirements to the technical requirements on the instrumentation. The new instrumentation includes a wide-field, 3.2 degree diameter prime-focus corrector that focuses the light onto 5020 robotic fiber positioners on the 0.8-m diameter, aspheric focal surface. This high density is only possible because of the very compact positioner design, which allows a minimum separation of only 10.4 mm. The positioners and their fibers are evenly divided among ten wedge-shaped petals, and each bundle directs the light of 500 fibers into one of ten spectrographs via a contiguous, high-efficiency, nearly 50-m fiber cable bundle. The ten identical spectrographs each use a pair of dichroics to split the light into three wavelength channels; each channel is optimized for a distinct wavelength range and spectral resolution, and together they record light from 360 to 980 nm. I will conclude with some highlights from the on-sky validation of the instrument.

Speaker: Prof. Paul Martini (Ohio State University)

32

Dark Energy Spectroscopic Instrument: Data Overview

The Dark Energy Spectroscopic Instrument (DESI) has started its main survey. Over 5 years, it will measure the spectra and redshifts of about 35 million galaxies and quasars over 14,000 square degrees. This 3D map will be used to reconstruct the expansion history of the universe up to z=3.5, and to measure the growth rate of structure in the redshift range 0.7-1.6 with unequaled precision. The start of the survey marks the end of a successful survey validation period, during which more than one million cosmological redshifts were measured, already about as many as in any previous survey. This data set, along with many commissioning studies, has demonstrated that the project meets its science requirements, written many years ago. I will present how we have validated the target selection, the observation strategy, and the data processing, demonstrating that we can achieve our goals in terms of the density of galaxies and quasars with measured redshifts, with the required precision, for exposure times that allow us to cover one third of the sky in five years.
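As a quick consistency check of the "one third of the sky" figure (arithmetic added here, using only the 14,000 square-degree footprint quoted above), the full sky covers

$$
4\pi\ \mathrm{sr}\times\left(\frac{180^\circ}{\pi}\right)^2 = \frac{129\,600}{\pi}\ \mathrm{deg}^2 \approx 41\,253\ \mathrm{deg}^2, \qquad \frac{14\,000}{41\,253}\approx 0.34 .
$$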

Speaker: Julien Guy (Lawrence Berkeley National Lab)

33

Cosmological Measurements of Massive Light Relics

An intriguing and well-motivated possibility for the particle makeup of the dark sector is that a small fraction of the observed abundance is made up of light, feebly-interacting particle species. Because these species interact weakly but have a comparatively large number abundance, cosmological datasets are particularly powerful tools to probe them. In this talk I discuss the impact of these new particle species on observables, in particular the CMB and LSS, and present the strongest constraints to date on the existence of light relics in our universe.

Speaker: Linda Xu

15:30

Break

Dark Matter: DM 1D

Track D

Zoom

Conveners: Hooman Davoudiasl (BNL), Ivan Esteban (CCAPP, Ohio State University), Suho Kim (Florida State University (US))

34

Global Fits of Direct Dark Matter Effective Field Theories with GAMBIT

GAMBIT (the Global and Modular Beyond-the-standard-model Inference Tool) is a flexible and extensible framework that can be used to undertake global fits of essentially any BSM theory to relevant experimental data sets. The code currently includes results from collider searches for new physics, cosmology, neutrino experiments, astrophysical and terrestrial dark matter searches, and precision measurements. In this talk I will begin with a brief update on recent additions to the code and then present the results of a recent global fit that we have undertaken. In this study, we simultaneously varied the coefficients of 14 EFT operators describing the interactions between dark matter, quarks, gluons and the photon, in order to determine the most general current constraints on the allowed properties of WIMP dark matter.

Speaker: Jonathan Cornell (Weber State University)

35

Studying dark matter with MadDM: Lines and loops

Automated tools for the computation of amplitudes and cross sections have become the backbone of phenomenological studies beyond the standard model. We present the latest developments in MadDM, a calculator of dark matter observables based on MadGraph5_aMC@NLO. The new version enables the fully automated computation of loop-induced annihilation processes, relevant for indirect detection of dark matter. Of particular interest is the electroweak annihilation into $\gamma X$, where $X=\gamma$, $Z$, $h$ or any new unstable particle even under the dark symmetry. These processes lead to the sharp spectral feature of monochromatic gamma lines: a smoking-gun signature for dark matter annihilation in our Galaxy. MadDM provides the predictions for the respective fluxes near Earth and derives constraints from the $\gamma$-ray line searches by Fermi-LAT and HESS. As an application, we present the implications for the parameter space of the Inert Doublet model and a top-philic $t$-channel mediator model.
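For orientation, the energy of such a line follows from two-body kinematics. Assuming non-relativistic annihilation $\chi\chi \to \gamma X$ of dark matter particles of mass $m_\chi$, so that $\sqrt{s} \simeq 2m_\chi$ (notation introduced here, not taken from the talk):

$$
E_\gamma = \frac{s - m_X^2}{2\sqrt{s}} \simeq m_\chi\left(1 - \frac{m_X^2}{4m_\chi^2}\right).
$$

For $X = \gamma$ this gives $E_\gamma = m_\chi$; for example, with $m_\chi = 100$ GeV and $X = Z$ ($m_Z \approx 91.2$ GeV) the line sits near 79 GeV, so each final state $X$ produces a separate line.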

Speaker: Mr Daniele Massaro (Università di Bologna)

36

Experimental signatures of a new dark matter WIMP

The WIMP proposed here yields the observed abundance of dark matter, and is consistent with the current limits from direct detection, indirect detection, and collider experiments, if its mass is $\sim 72$ GeV/$c^2$. It is also consistent with analyses of the gamma rays observed by Fermi-LAT from the Galactic center (and other sources), and of the antiprotons observed by AMS-02, in which the excesses are attributed to dark matter annihilation. These successes are shared by the inert doublet model (IDM), but the phenomenology is very different: The dark matter candidate of the IDM has first-order gauge couplings to other new particles, whereas the present candidate does not. In addition to indirect detection through annihilation products, it appears that the present particle can be observed in the most sensitive direct-detection and collider experiments currently being planned.

Speaker: Roland Allen (Texas A&M University)

37

Testing freeze-in with $Z'$ bosons

If dark matter interacts too feebly with ordinary matter, it would not have been able to thermalize with the bath in the early universe. Such Feebly Interacting Massive Particles (FIMPs) would therefore be produced via the freeze-in mechanism. Testing FIMPs is a challenging task, given the smallness of their couplings. In this talk, I will discuss our recent proposal of a $Z'$ portal in which freeze-in can currently be tested by many experiments. In our model, $Z'$ bosons with masses in the MeV-PeV range have both vector and axial couplings to ordinary and dark fermions. We place constraints on our parameter space with bounds from direct detection, atomic parity violation, leptonic anomalous magnetic moments, neutrino-electron scattering, collider, and beam dump experiments.

Speaker: Taylor Gray (Carleton University)

38

Freezing In with Lepton Flavored Fermions

Dark, chiral fermions carrying lepton flavor quantum numbers are natural candidates for freeze-in. Small couplings to the Standard Model fermions, of the order of the lepton Yukawas, are ‘automatic’ in the limit of Minimal Flavor Violation. In the absence of interactions that violate total lepton number, particles in certain representations of the flavor group remain absolutely stable. For masses in the GeV-TeV range, the simplest model, with three flavors, leads to signals at future direct detection experiments such as DARWIN. Interestingly, freeze-in with a smaller flavor group such as SU(2) is already being probed by XENON1T.

Speaker: Shiuli Chatterjee (Indian Institute of Science)

15:45

Break


Dark Matter: DM 1K Track K

Track K

Zoom

Conveners: Muti Wulansatiti (Florida State University (US)), Robert Group (University of Virginia), Zhengkang Zhang (Caltech)

39

Dark Matter Search Results from DAMIC at SNOLAB

The DAMIC experiment at SNOLAB uses thick, fully depleted, scientific-grade charge-coupled devices (CCDs) to search for interactions between proposed dark matter particles in the galactic halo and the ordinary silicon atoms in the detector. DAMIC CCDs operate with extremely low instrumental noise and dark current, making them particularly sensitive to the ionization signals expected from low-mass dark matter particles. Throughout 2017-18, DAMIC collected data with an array of seven CCDs (40-gram target) installed in a low-radiation environment in the SNOLAB underground laboratory. This talk will focus on the recent dark matter search results from DAMIC. We will present the search methodology and results from an 11 kg-day exposure WIMP search, including the strictest limit on the WIMP-nucleon scattering cross section for a silicon target for $m_\chi < 9~\mathrm{GeV}\,c^{-2}$. Additionally, we will discuss recent limits on light dark matter that could interact with the electrons of the silicon atoms.

Speaker: Alexander Piers (University of Washington)

40

Backgrounds and Shielding for SuperCDMS SNOLAB

SuperCDMS deploys cryogenic germanium and silicon detectors which are sensitive in both the athermal phonon and ionization channels to search for dark matter. In order to observe such a small potential signal, all background sources need to be well understood and then mitigated.

Low-background shielding was designed such that the environmental background is negligible compared to the irreducible background due to cosmogenic activation in the detectors themselves. The overall background budget of the SuperCDMS experiment will be presented, along with the iterative process of design, assay, and fabrication of the now complete shielding system.

Speaker: Jack Nelson (University of Minnesota)

41

Improved Background Rate and Coincident Event Removal in SuperCDMS HVeV Run 3

The third science run of SuperCDMS HVeV detectors (single-charge-sensitive detectors with high Neganov-Trofimov-Luke phonon gain) took place at the NEXUS underground test facility in early 2021, incorporating two important changes to test background hypotheses and enhance sensitivity. First, this was the first HVeV dataset taken underground (300 m.w.e.) and in a shielded environment. Second, the run used three detectors operated simultaneously to identify sources of background events that produce two or more electron-hole pairs. We will present preliminary results and interpretation from these tests, as well as an estimate of the expected sensitivity of the dataset.

Speaker: Taylor Aralis (Caltech)

42

Diamond detector for dark matter direct detection

We present the theoretical case, along with some early measurements with diamond test chips, demonstrating the viability of transition-edge sensors (TES) on diamond as a potential platform for direct detection of sub-GeV dark matter.

Diamond targets can be sensitive to both nuclear and electron recoils from dark matter scattering for masses of order MeV and above, as well as to absorption of dark matter with masses from sub-eV to tens of eV.

Compared with other proposed semiconducting targets such as germanium and silicon, diamond detectors can probe lower dark matter masses via nuclear recoils because of the lightness of the carbon nucleus. The expected reach for electron recoils is comparable to that of germanium and silicon, with the advantage that dark counts are expected to be under better control. Via absorption processes, unconstrained QCD axion parameter space can be probed in diamond for masses of order 10 eV.

Speaker: To Chin Yu (SLAC/Stanford)

43

HeRALD: Dark Matter Direct Detection with Superfluid $^4$He

HeRALD, the Helium Roton Apparatus for Light Dark Matter, will use a superfluid $^4$He target to probe the sub-GeV dark matter parameter space. The HeRALD design is sensitive to all signal channels produced by nuclear recoils in superfluid helium: singlet and triplet excimers, as well as phonon-like excitations of the superfluid medium. Excimers are detected via calorimetry with transition-edge-sensor readout in and around the superfluid helium. Phonon-like vibrational excitations eject helium atoms from the superfluid-vacuum interface, which are detected by adsorption onto calorimeters suspended above the interface. I will discuss the design, sensitivity projections, and ongoing R&D for the HeRALD experiment. In particular, I will present an initial measurement of the light yield of superfluid helium down to energies of order 50 keV.

Speaker: Pratyush Patel (University of Massachusetts Amherst)

44

Dark Matter Absorption via Electronic Excitations Revisited

Absorption of dark matter (DM) allows direct detection experiments to probe a broad range of DM candidates with masses much smaller than those kinematically accessible via scattering. It has been known for some time that for vector and pseudoscalar DM the absorption rate can be related to the target's optical properties, i.e. its conductivity or dielectric function. However, this is not the case for scalar DM, whose absorption rate is determined by an operator that is formally next-to-leading order (NLO) and does not appear in the photon absorption process. The absorption rate must therefore be determined by other methods. We use a combination of first-principles numerical calculations and semi-analytic modeling to compute the absorption rate in silicon, germanium, and a superconducting aluminum target. We also find good agreement between these approaches and the data-driven approach for the vector and pseudoscalar DM models.

Speaker: Tanner Trickle (California Institute of Technology)


Field and String Theory: F&S 1H Track H

Track H

Zoom

Conveners: Jens Boos (William & Mary), Jingyu Zhang (Florida State University (US))

45

Wormholes and black hole microstates in AdS/CFT

It has long been known that the coarse-grained approximation to the black hole density of states can be computed using classical Euclidean gravity. In this talk I will present evidence for another entry in the dictionary between Euclidean gravity and black hole physics, namely that Euclidean wormholes describe a coarse-grained approximation to the energy level statistics of black hole microstates. Our main result is an integral representation for wormhole amplitudes in Einstein gravity and in full-fledged AdS/CFT. These amplitudes are non-perturbative corrections to the two-boundary problem in AdS quantum gravity. The full amplitude is UV sensitive, dominated by small wormholes, but it admits an integral transformation with a macroscopic, weakly curved saddle-point approximation. In the boundary description this saddle appears to dominate a smeared version of the connected two-point function of the black hole density of states, and suggests level repulsion in the spectrum of AdS black hole microstates.

Speaker: Kristan Jensen (University of Victoria)

46

UV-completion in Particle Theory with Infinite Derivatives: Conformal Invariance, Laboratory and Cosmological Implications

We will discuss constructions of string-inspired, higher-derivative, non-local extensions of particle theory that are explicitly ghost-free. Using quantum loop calculations in the weak perturbation limit, we explore the implications for the hierarchy problem and the vacuum instability problem in Higgs theory. We then discuss abelian and non-abelian model-building in infinite-derivative QFT in 4D, which naturally leads to the prediction of dynamical conformal invariance in the UV at the quantum level, due to the vanishing of the $\beta$-functions above the energy scale of non-locality $M$. The theory remains finite and perturbative up to infinite energy scales, resolving the issue of Landau poles. We move on to the implications of infinite derivatives for LHC, dark matter, astrophysical and inflationary observables, and comment on constraints on the scale $M$ and on its dimensional transmutation. Next we discuss the strong perturbation limit and show that the mass gap that arises from the interactions in the theory is diluted in the UV by the higher derivatives, again reaching a conformal limit in the asymptotic regions, for both the scalar field and Yang-Mills cases. For Yang-Mills, the gauge theory is confining without fermions, and we explore the exact beta function of the theory. We conclude by summarising non-locality as a framework for UV completion in particle theory and gravity, and the road ahead for model-building in BSM physics, particularly neutrinos, dark matter and axions.

Speaker: Anish Ghoshal (University of Warsaw, Poland)

47

One-loop determinant for massive vector field in large dimension limit

We derive an expression for the one-loop determinant of the massive vector field in the anti-de Sitter black brane geometry in the large dimension limit. We use the method of Denef, Hartnoll and Sachdev, which constructs the one-loop determinant from the quasinormal modes of the field. The large dimension limit decouples the equations of motion for different field components, and also selects a specific set of quasinormal modes that contribute to the non-polynomial part of the one-loop determinant. We hope this result can provide useful information even when the number of dimensions $D$ is finite, since it is the leading-order contribution when $D$ is treated as a parameter and one expands in $1/D$.

Speaker: Mr Yuchen Du (University of Virginia)

48

Reconciling a minimal length scale with lack of photon dispersion

Generic arguments lead to the idea of a minimal length scale in quantum gravity. An observational signal of such a minimal length scale is that photons would exhibit dispersion. In 2009, the observation of a short gamma-ray burst seemed to push the minimal length scale to distances smaller than the Planck length. This poses a challenge for minimal length models. Here we propose a modification of the position and momentum operators that leads to a minimal length scale but preserves the photon energy-momentum relationship $E=pc$. In this way there is no dispersion of photons with different energies. This can be accomplished without modifying the commutation relationship $[x,p]=i\hbar$.

Speaker: Douglas Singleton (California State University, Fresno)

49

Gravitational corrections to two-loop beta function in quantum electrodynamics

The quantization of Einstein's general relativity leads to a nonrenormalizable quantum field theory. However, the potential harm of nonrenormalizability can be overcome in the effective field theory (EFT) framework, where there is an unambiguous way to define a well-behaved and reliable quantum theory of gravitation, provided we restrict ourselves to energies low compared to the Planck scale. Although the effective field theory of gravitation is perfectly well defined as a quantum field theory, some subtleties arise from its nonrenormalizability, such as the use of the renormalization group equations, as illustrated by the controversy involving the gravitational corrections to the beta function of gauge theories. In 2005, Robinson and Wilczek concluded that gravity contributes a negative term to the beta function of the gauge coupling, meaning that quantum gravity could make gauge theories asymptotically free. This result was soon contested: the claimed gravitational correction was shown to be gauge dependent, and much subsequent research on the subject followed, with varying conclusions. In this work we use the framework of effective field theory to couple Einstein's gravity to quantum electrodynamics and determine the gravitational corrections to the two-loop beta function of the electric charge. Our results indicate that gravitational corrections do not change the qualitative running behavior of the electric charge; on the contrary, we find that they give a positive contribution to the beta function, making the electric charge grow faster.

Speaker: Mr Huan Souza (Federal University of Pará)

15:45

Break


Higgs & Electroweak Physics: HIG 1B Track B

Track B

Zoom

Conveners: Nan Lu (California Institute of Technology (US)), Nicholas Bower (Florida State University (US))

50

Measurement of the properties of Higgs boson production at $\sqrt{s}=13$ TeV in the diphoton decay channel using 139 fb$^{-1}$ of $pp$ collision data with the ATLAS experiment

Measurements of Higgs boson production cross sections are carried out in the diphoton decay channel using 139 fb$^{-1}$ of $pp$ collision data at $\sqrt{s}=13$ TeV collected by the ATLAS experiment. Cross sections for gluon fusion, weak vector boson fusion, associated production with a $W$ or $Z$ boson, and top-quark-associated production processes are reported. An upper limit of eight times the Standard Model prediction is set on the cross section for associated production of a Higgs boson with a single top quark. Higgs boson production is further characterized through measurements of Simplified Template Cross Sections (STXS) in 27 fiducial regions. All measurement results are compatible with the Standard Model predictions.

Speaker: Chen Zhou (University of Wisconsin Madison (US))

51

Analysis Optimization of the VBF HWW Measurement at the ATLAS Experiment

Precision measurements of the properties of the Higgs boson are among the principal goals of the LHC Run 2 program. This talk reports on measurements of the fiducial and differential Higgs boson production cross sections via vector boson fusion, in the final state with a muon, an electron, and two neutrinos from the decay of the $W$ bosons, together with two energetic jets. The analysis uses $pp$ collision data at a center-of-mass energy of 13 TeV collected with the ATLAS detector between 2015 and 2018, corresponding to an integrated luminosity of 139 fb$^{-1}$. The optimization of the selection criteria and the signal extraction methods will be discussed in detail, in particular the use of machine learning techniques for performing a multidimensional fit to extract the signal and normalize the simulated backgrounds to data.

Speaker: Sagar Addepalli (Brandeis University (US))

52

$t\bar{t}H$ and $t\bar{t}W$ analyses in multilepton channels

The Large Hadron Collider (LHC) is a “top quark factory”, allowing precise measurements of several top quark properties. In addition, for the first time ever it is now possible to measure rare processes involving top quarks. Associated production of top and anti-top quarks with a Higgs boson or with electroweak gauge bosons such as $W$ or $Z$ has been observed at the LHC. Precise measurements of these processes have implications for the Standard Model of particle physics and even for cosmology. Recent results from measurements of these rare top-quark processes in multileptonic final states, using 80 fb$^{-1}$ of $pp$ collision data at $\sqrt{s}=13$ TeV collected by the ATLAS experiment, will be discussed.

Speaker: Rohin Thampilali Narayan (Southern Methodist University (US))

53

Higgs to two b's or not two b's: The measurement of a Standard Model Higgs boson, produced in association with a W or Z boson, decaying into a pair of b-quarks in pp collisions at 13 TeV with the ATLAS detector

Following the discovery of the Higgs boson in 2012 by the ATLAS and CMS experiments, a wealth of papers have been published on measurements or observations of the Higgs boson decay modes. However, the dominant decay mode, $H \rightarrow b\bar{b}$, proved elusive and challenging to search for because of the low signal-to-background ratio and a diverse range of backgrounds arising from multiple Standard Model processes, including $W$+jets, $Z$+jets, and $t\bar{t}$ production, amongst others. Measurements of $WH$ and $ZH$ production, with the $W$ or $Z$ boson decaying into charged leptons (electrons or muons, including those produced in the leptonic decay of a tau lepton), in the $H\rightarrow b\bar{b}$ decay channel were performed with the ATLAS detector using $pp$ collisions at 13 TeV corresponding to an integrated luminosity of 139 fb$^{-1}$. The production of a Higgs boson in association with a $W$ or $Z$ boson has been established with observed (expected) significances of 4.0 (4.1) and 5.3 (5.1) standard deviations, respectively.

Speaker: Ms Amy Tee (University of Wisconsin Madison)

54

NLO Merging using HJets

In this talk I will present results of the simulation of electroweak Higgs boson production at the CERN LHC with the Herwig 7 general-purpose event generator, using one-loop matrix elements via the interface to HJets. The main result will be the simulation of next-to-leading order merging of Higgs boson plus 2 and 3 jets with a dipole parton shower. Additionally, I will comment on non-factorizable radiative corrections to this important Higgs boson production process. I will also provide a comparison of the full calculation with the well-known t-channel approximation (a.k.a. VBF) provided by the parton-level Monte Carlo program VBFNLO.

Speaker: Tinghua Chen (Wichita State University)

55

$W^+W^-H$ production through bottom-quark fusion at hadron colliders

With the Standard Model working well in describing collider data, the focus is now on determining its parameters precisely and searching for any hint of deviation. In particular, determining the couplings of the Higgs boson to itself and to the other particles of the model is important for better understanding the electroweak symmetry breaking sector. In this work, we look at the process $pp \to W^+W^-H$, in particular through the fusion of bottom quarks. Owing to the non-negligible coupling of the Higgs boson to bottom quarks, this process depends on the $WWHH$ coupling. This sub-process receives its largest contribution when the $W$ bosons are longitudinally polarized. We compute one-loop QCD corrections to various final states with polarized $W$ bosons, and find that the corrections to the final state with longitudinally polarized $W$ bosons are large. We show that measuring the polarization of the $W$ bosons can be used as a tool to probe the $WWHH$ coupling in this process. We also examine the effect of varying the $WWHH$ coupling in the $\kappa$-framework.

Speaker: Biswajit Das (Institute of Physics, Bhubaneswar, India)


Higgs & Electroweak Physics: HIG 1L Track L

Track L

Zoom

Conveners: Evelyn Jean Thomson (University of Pennsylvania (US)), Laura Reina (Florida State University (US))

56

Higgs to charm quarks in vector boson fusion plus a photon

Experimentally probing the charm-Yukawa coupling in the LHC experiments is important, but very challenging due to an enormous QCD background. We study a new channel that can be used to search for the Higgs decay $H\to c\bar c$, using the vector boson fusion (VBF) mechanism with an associated photon. In addition to suppressing the QCD background, the photon provides an effective trigger handle. We discuss the trigger implications of this final state for ATLAS and CMS. We propose a novel search strategy for $H\to c\bar c$ in association with VBF jets and a photon, and find a projected sensitivity of about 5 times the SM charm-Yukawa coupling at 95$\%$ C.L. at the High-Luminosity LHC (HL-LHC). Our result is comparable and complementary to existing projections at the HL-LHC. We also discuss the implications of increasing the center-of-mass collision energy to 30 TeV and 100 TeV.

Speaker: Sze Ching Iris Leung (University of Pittsburgh)

57

Search for the Decay of the Higgs Boson to Charm Quarks with the ATLAS Experiment

Measurements at the Large Hadron Collider (LHC) have so far established that the Higgs Yukawa couplings to fermions of the third generation are close to the Standard Model (SM) expectation. However, the rather ad hoc assumption of universal Yukawa couplings for the other fermion generations has little experimental constraint. These couplings are very challenging to probe because of small branching fractions, large quantum chromodynamics (QCD) backgrounds, and difficulties in jet flavor identification. A direct search by the ATLAS experiment for the SM Higgs boson decaying to a pair of charm quarks is presented. The dataset, delivered by the LHC in $pp$ collisions at $\sqrt{s}=13$ TeV and recorded by the ATLAS detector, corresponds to an integrated luminosity of 139 fb$^{-1}$. Charm-tagging algorithms are optimized to distinguish $c$-quark jets from both light-flavor jets and $b$-quark jets. The analysis method is validated with the study of diboson ($WW$, $WZ$, and $ZZ$) production, with observed (expected) significances of 2.6 (2.2) standard deviations above the background-only hypothesis for the $(W/Z)Z(\to c\bar{c})$ process and 3.8 (4.6) standard deviations for the $(W/Z)W(\to cq)$ process. The $(W/Z)H(\to c\bar{c})$ search yields an observed (expected) limit of 26 (31) times the predicted cross section times branching fraction for a Higgs boson with a mass of 125 GeV, corresponding to an observed (expected) constraint on the charm Yukawa coupling modifier of $|\kappa_c| < 8.5$ (12.4) at the 95% confidence level.

Speaker: Zijun Xu (SLAC National Accelerator Laboratory (US))

58

Search for the Higgs boson decaying to a pair of muons in pp collisions at 13 TeV with the ATLAS detector

The dimuon decay of the Higgs boson is the most promising process for probing the Yukawa couplings to the second-generation fermions at the Large Hadron Collider (LHC). We present a search for this important process using the data corresponding to an integrated luminosity of 139 fb$^{-1}$ collected with the ATLAS detector in $pp$ collisions at $\sqrt{s} = 13$ TeV at the LHC. Events are divided into several regions using boosted decision trees to target different production modes of the Higgs boson. The measured signal strength (defined as the ratio of the observed signal yield to the one expected in the Standard Model) is $\mu = 1.2 \pm 0.6$. The observed (expected) significance over the background-only hypothesis for a Higgs boson with a mass of 125.09 GeV is 2.0$\sigma$ (1.7$\sigma$).

Speaker: Jay Chan (University of Wisconsin Madison (US))

59

Search for Higgs boson decays to bottom quarks in the vector boson fusion production mode with the ATLAS detector

The Higgs boson is expected to decay to $b\bar{b}$ approximately 58% of the time. Despite this large branching fraction, the large background from Standard Model events with $b$-jets has made measurements of this decay less precise than those of other, less frequent, decays. Measuring $H\to b\bar{b}$ in the vector boson fusion production mode has historically lacked sensitivity, but developments in background estimation and discrimination, together with improvements in the signal extraction techniques, have resulted in an observed (expected) significance of 2.6 (2.8) standard deviations relative to the background-only hypothesis. This analysis uses a dataset with an integrated luminosity of 126 fb$^{-1}$, collected in $pp$ collisions at $\sqrt{s}=13$ TeV with the ATLAS detector at the Large Hadron Collider (LHC) during LHC Run 2, and considers only fully hadronic final states. This talk will focus on the background estimation and signal extraction techniques that are unique to this analysis, as well as the results.

Speaker: Matthew Henry Klein (University of Michigan (US))

60

Study of inclusive Higgs-boson production at high transverse momentum in the $H\to b\bar{b}$ decay mode with the ATLAS detector

The ever-growing interest in high-energy production of the Higgs boson, motivated by an enhanced sensitivity to New Physics scenarios, drives the development of experimental techniques for reconstructing boosted decay products from hadronic decays of the Higgs boson.

This talk will discuss recent studies of inclusive Higgs-boson production with sizable transverse momentum decaying to a $b\bar{b}$ quark pair (ATLAS-CONF-2021-010). The analyzed data were recorded with the ATLAS detector in proton-proton collisions with a center-of-mass energy of $\sqrt{s}=13\,$ TeV at the Large Hadron Collider between 2015 and 2018, corresponding to an integrated luminosity of $136\,\text{fb}^{-1}$.

Higgs bosons decaying to $b\bar{b}$ are reconstructed as single large-radius jets and identified by the experimental signature of two $b$-hadron decays. The analysis takes advantage of an analytical model for the description of the multi-jet background, and combines multiple regions rich in Higgs-boson signal and specific background signatures. The experimental techniques are validated in the same kinematic regime using the $Z\to b\bar{b}$ process.

For Higgs-boson production at transverse momenta above 450 GeV, the production cross section is found to be $13 \pm 57\,(\text{stat.}) \pm 22\,(\text{syst.}) \pm 3\,(\text{theo.})$ fb. The 95% confidence level upper limits on the differential cross section as a function of Higgs boson transverse momentum are $\sigma_H(300 < p_{\text{T}}^H < 450\,\text{GeV}) < 2.8$ pb, $\sigma_H(450 < p_{\text{T}}^H < 650\,\text{GeV}) < 91$ fb, $\sigma_H(p_{\text{T}}^H > 650\,\text{GeV}) < 40.5$ fb, and $\sigma_H(p_{\text{T}}^H > 1\,\text{TeV}) < 10.3$ fb. Evidence for the production of $Z\to b\bar{b}$ with $p_{\text{T}}^Z>650\,\text{GeV}$ is obtained. All results are consistent with the Standard Model predictions.

Speaker: Dr Andrea Sciandra (University of California, Santa Cruz (US))

61

Using the light: A search for vector boson fusion production of the $H(\rightarrow b \bar{b})\gamma$ final state at $\sqrt{s}=13$ TeV with the ATLAS detector

A search for the Standard Model Higgs boson produced in association with a high-energy photon is performed using 132 fb$^{-1}$ of $pp$ collision data at $\sqrt{s}=13$ TeV collected with the ATLAS detector at the Large Hadron Collider. The vector boson fusion production mode of the Higgs boson is particularly powerful for studying the $H(\rightarrow b \bar{b})\gamma$ final state, because the photon requirement greatly reduces the multijet background and because the Higgs boson decays primarily to bottom quark-antiquark pairs. Using Monte Carlo simulation, machine learning, and model-fitting techniques, the measured Higgs boson signal strength relative to the Standard Model prediction is $1.3 \pm 1.0$. This corresponds to an observed significance over the background-only hypothesis of 1.3 standard deviations, compared with 1.0 standard deviations expected.

Speaker: Carolyn Gee (University of California, Santa Cruz (US))


Neutrinos: NU 1F Track F

Track F

Zoom

Conveners: Christopher Mauger (University of Pennsylvania), Oleksandr Tomalak (University of Kentucky), Redwan Md Habibullah (Florida State University (US))

62

ProtoDUNE Physics and Results

ProtoDUNE-SP and ProtoDUNE-DP are DUNE's large-scale single-phase and dual-phase prototypes of the DUNE far detector modules, operated at the CERN Neutrino Platform. ProtoDUNE-SP finished its Phase-1 running in 2020, having successfully collected test beam and cosmic ray data. In this talk, I will discuss the first results on ProtoDUNE-SP Phase-1 physics performance and on ProtoDUNE-DP's design and progress.

Speaker: Wenjie Wu (University of California, Irvine)

63

Improved Neutrino Energy Estimation in Neutral Current Interactions with Liquid Argon Time Projection Chambers

Large liquid argon time projection chambers (LAr TPCs) at SBN and DUNE will provide an unprecedented amount of information about GeV-scale neutrino interactions. By taking advantage of the excellent tracking and calorimetric performance of LAr TPCs, we present a novel method for estimating the neutrino energy in neutral current interactions that significantly improves upon conventional methods in terms of energy resolution and bias. We present a toy study exploring the application of this new method to the sterile neutrino search at SBN under a 3+1 model.

Speaker: Dr Christopher Hilgenberg (University of Minnesota)

64

The DUNE Near Detector

The Deep Underground Neutrino Experiment (DUNE) is an upcoming long-baseline neutrino experiment that will study neutrino oscillations. Neutrino oscillations will be detected at the DUNE far detector, 1300 km from the start of the beam at Fermilab. The DUNE near detector (ND) will be located on-site at Fermilab and will be used to provide an initial characterization of the neutrino beam, as well as to constrain systematic uncertainties on neutrino oscillation measurements. The detector suite consists of a modular 50-ton LArTPC (ND-LAr); a magnetized 1-ton gaseous argon time projection chamber (ND-GAr) surrounded by an electromagnetic calorimeter; and the System for on-Axis Neutrino Detection (SAND), composed of a magnetized electromagnetic calorimeter and an inner tracker. In this talk, these detectors and their physics goals will be discussed.

Speaker: Lea Di Noto (INFN e Universita Genova (IT))

65

System for on-Axis Neutrino Detection (SAND) as part of the DUNE near detector

In order to achieve a precise measurement of the leptonic CP violation phase, Deep Underground Neutrino Experiment (DUNE) will employ four 10 kt scale far detector modules and a near detector complex.

In the near detector complex, the System for on-Axis Neutrino Detection (SAND) is located downstream of a liquid-argon TPC (LAr) and a high-pressure gaseous-argon TPC (GAr). SAND consists of an inner tracking system surrounded by an electromagnetic calorimeter, all inside the KLOE superconducting magnet. Thanks to its high event rate and accurate neutrino energy reconstruction, SAND can serve as an effective beam monitor. SAND also provides comprehensive measurements on non-argon targets, allowing constraints on the A-dependence of neutrino interaction models. In addition, its ability to measure neutron kinetic energy would make a full reconstruction of neutrino interactions possible, opening new ways to analyze the events. In this talk, a number of physics studies and the latest design of SAND will be presented.

Speaker: Guang Yang (Stony Brook University) -

66

Search for low-energy nuclear recoil in XENON1T

The XENON collaboration has recently published results from a search, with a lowered energy threshold, for nuclear recoils produced by solar $^8$B neutrinos, using a $0.6$ tonne-year exposure of the XENON1T detector. Because the lower threshold increases backgrounds, a number of novel background-reduction techniques were required. No significant $^8$B neutrino-like excess is found after unblinding. New upper limits are placed on the dark matter-nucleus cross section for dark matter masses as low as $3~\mathrm{GeV}/c^2$, as well as on a model of non-standard neutrino interactions. This talk will present the techniques used to lower backgrounds and to validate the signal and background models.

Speaker: Zihao Xu (Columbia University) -

15:45

Break

-

62

-

Particle Detectors: DET 1C Track C

Track C

Zoom

Conveners: Karol Krizka (Lawrence Berkeley National Lab. (US)), Ted Kolberg (Florida State University (US)), Ulrich Heintz (Brown University (US))-

67

The CMS Electromagnetic Calorimeter calibration and performance during LHC Run 2

The electromagnetic calorimeter (ECAL) of the Compact Muon Solenoid (CMS) experiment is a high-granularity lead tungstate crystal calorimeter operating at the CERN Large Hadron Collider. The ECAL is designed to achieve excellent energy resolution, which is crucial for studies of Higgs boson decays with electrons or photons in the final state, as well as for searches for new physics involving electrons and photons. Recently, the energy response of the calorimeter has been precisely calibrated using the full Run 2 data set, with the goal of achieving optimal performance. A dedicated calibration of each detector channel has been performed with physics events, using electrons from W and Z boson decays, photons from $\pi^0$/$\eta$ decays, and the azimuthally symmetric energy distribution of minimum bias events. We will describe the calibration strategies that have been implemented and the excellent performance achieved by the CMS ECAL with the ultimate calibration of Run 2 data, in terms of energy scale stability and energy resolution.
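
Of the calibration handles listed above, the $\pi^0$/$\eta \to \gamma\gamma$ method rests on a simple kinematic relation: for two massless photons, $m_{\gamma\gamma}^2 = 2E_1E_2(1-\cos\theta_{12})$, so a miscalibrated energy scale shifts the reconstructed peak away from the known meson mass. The sketch below is a simplified, single-scale illustration under the assumption that both photons share the same miscalibration factor; the function names are hypothetical, and the actual CMS procedure (per-crystal intercalibration, peak fitting, containment corrections) is considerably more involved.

```python
import math

M_PI0_GEV = 0.1349768  # neutral pion mass (PDG value) in GeV


def diphoton_mass(e1_gev: float, e2_gev: float, cos_theta: float) -> float:
    """Invariant mass of two massless photons: m^2 = 2 E1 E2 (1 - cos θ12)."""
    return math.sqrt(2.0 * e1_gev * e2_gev * (1.0 - cos_theta))


def scale_correction(fitted_peak_gev: float) -> float:
    """Multiplicative energy-scale factor that moves the fitted peak to m(π0).

    Assumes both photons carry the same miscalibration factor k, so the
    reconstructed mass scales linearly with k.
    """
    return M_PI0_GEV / fitted_peak_gev


if __name__ == "__main__":
    # Symmetric decay: two 1 GeV photons with the opening angle of a true π0.
    cos_open = 1.0 - M_PI0_GEV ** 2 / 2.0
    # Both photon energies reconstructed 2% too high (hypothetical).
    peak = diphoton_mass(1.02, 1.02, cos_open)
    print(f"reconstructed peak: {peak:.5f} GeV")
    print(f"scale correction:   {scale_correction(peak):.5f}")
```

Because the mass scales with the geometric mean of the two photon energies, a common 2% energy-scale error appears as a 2% shift of the peak, which the correction factor removes.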

Speaker: Jelena Mijuskovic (University of Montenegro (ME)) -

68

ECAL trigger primitives performance in Run 2 and improvements for Run 3

The CMS electromagnetic calorimeter (ECAL) is a high-resolution crystal calorimeter operating at the CERN LHC. The on-detector readout electronics digitizes the signals and provides information on the energy deposited in the ECAL to the hardware-based Level-1 (L1) trigger system. The L1 trigger system receives information from the different CMS subdetectors at 40 MHz, the proton bunch collision rate, and decides for each collision whether the full detector must be read out, reducing the rate of accepted events to about 100 kHz. The increased luminosity of LHC Run 2 with respect to Run 1 required frequent calibrations during data taking to account, at trigger level, for radiation-induced changes in crystal and photodetector response. For LHC Run 3 (2022-24), further improvements in the energy and time reconstruction of the CMS ECAL trigger primitives are being explored; these exploit additional features of the on-detector electronics. In this presentation we will review the ECAL trigger primitive performance during LHC Run 2 and present the improvements to the ECAL trigger system envisaged for LHC Run 3.
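
The two rates quoted above imply a large online rejection factor and a tight time budget per collision. The arithmetic is sketched below; only the 40 MHz and ~100 kHz figures come from the abstract, while the constant and function names are illustrative.

```python
# Back-of-the-envelope numbers for the Level-1 trigger quoted above.
BUNCH_CROSSING_RATE_HZ = 40e6  # LHC bunch-crossing rate (from the abstract)
L1_ACCEPT_RATE_HZ = 100e3      # approximate L1 accept rate (from the abstract)


def rejection_factor(input_hz: float, output_hz: float) -> float:
    """Number of crossings seen for each one that is accepted."""
    return input_hz / output_hz


def crossing_interval_ns(rate_hz: float) -> float:
    """Time between successive bunch crossings, in nanoseconds."""
    return 1e9 / rate_hz


if __name__ == "__main__":
    r = rejection_factor(BUNCH_CROSSING_RATE_HZ, L1_ACCEPT_RATE_HZ)
    print(f"rejection factor: {r:.0f}")                  # 400
    print(f"accepted fraction: {1 / r:.4f}")             # 0.0025
    interval = crossing_interval_ns(BUNCH_CROSSING_RATE_HZ)
    print(f"crossing interval: {interval:.0f} ns")       # 25 ns
```

In other words, the L1 system keeps roughly one collision in 400, and new trigger primitives arrive every 25 ns, so the trigger must be pipelined rather than process one event at a time.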

Speaker: Davide Valsecchi (Università degli Studi e INFN Milano-Bicocca (IT)) -

15:00

Break

-

69

RADiCAL - Innovative Ultracompact Radiation-hard, Fast-timing EM Calorimetry

To address the challenges of providing high performance calorimetry and other types of instrumentation in future experiments under high luminosity and difficult radiation and pileup conditions, R&D is being conducted on promising optical-based technologies that can inform the design of future detectors, with emphasis on ultra-compactness, excellent energy resolution and spatial resolution, and especially fast timing capability.

The strategy builds upon the following concepts: use of dense materials to minimize the cross-sectional size and length (depth) of detector elements; keeping the Molière radii of the structures as small as possible; use of radiation-hard materials; use of optical techniques that provide high efficiency and fast response while keeping optical paths as short as possible; and use of radiation-resistant, high-efficiency photosensors.

High material density is achieved by using thin layers of tungsten absorber interleaved with active layers of dense, highly efficient crystal or ceramic scintillator. Several scintillator approaches are currently being explored, including materials activated with the trivalent rare-earth ions Ce$^{3+}$ and Pr$^{3+}$ for brightness, and Ca co-doping for a faster fluorescence decay time.

Light collection and transfer from the scintillation layers to the photosensors is enabled by the development and refinement of new wavelength shifters (WLS) and the incorporation of these materials into radiation-hard quartz waveguide elements. WLS dye developments include fast organic dyes of the DSB1 type; ESIPT (excited-state intramolecular proton transfer) dyes, which have very large Stokes shifts and hence very low optical self-absorption; and inorganic fluorescent materials such as LuAG:Ce, which is noted for its radiation resistance.

Optical waveguide approaches include quartz capillaries containing WLS cores, used (1) to provide high-resolution EM energy measurement; (2) with WLS material strategically placed at the location of the EM shower maximum, to provide high-resolution timing of EM showers; and (3) with WLS elements placed at various depths, to provide depth segmentation and angular measurement of the EM shower development.

Light from the scintillators, either directly or via wavelength shifters, is detected by pixelated, Geiger-mode photosensors that have high quantum efficiency over a wide spectral range and are designed to avoid saturation. These efforts include the development of silicon photomultipliers (SiPMs) with very small (5-7 µm) pixels, operated at low gain and cooled (typically to -35°C or below), and longer-term R&D on photosensors based on wide-bandgap materials, including GaInP. Both efforts are directed toward improved device performance in high radiation fields.
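
Why very small pixels help avoid saturation can be seen from the standard first-order SiPM response model, in which the expected number of fired microcells is $N_\mathrm{fired} = N_\mathrm{pix}\,(1 - e^{-N_\mathrm{pe}/N_\mathrm{pix}})$. The sketch below is a toy model under stated assumptions: a hypothetical 3 mm × 3 mm device, a hypothetical 50,000-photoelectron signal, and no crosstalk, afterpulsing, dead area, or pixel recovery during the pulse. It is not the RADiCAL group's device model, and the function names are illustrative.

```python
import math


def pixel_count(side_mm: float, pitch_um: float) -> int:
    """Number of microcells on a square SiPM (dead area ignored)."""
    per_side = round(side_mm * 1000.0 / pitch_um)
    return per_side * per_side


def fired_pixels(n_photoelectrons: float, n_pixels: int) -> float:
    """Expected fired microcells in the simple saturation model
    N_fired = N_pix * (1 - exp(-N_pe / N_pix)); crosstalk, afterpulsing
    and pixel recovery during the pulse are neglected."""
    return n_pixels * (1.0 - math.exp(-n_photoelectrons / n_pixels))


def linearity(n_photoelectrons: float, n_pixels: int) -> float:
    """Ratio of fired microcells to incident photoelectrons (1.0 = linear)."""
    return fired_pixels(n_photoelectrons, n_pixels) / n_photoelectrons


if __name__ == "__main__":
    n_pe = 50_000  # hypothetical light signal, in photoelectrons
    for pitch in (5.0, 15.0):  # 5 µm from the abstract; 15 µm for comparison
        n_pix = pixel_count(3.0, pitch)  # assumed 3 mm x 3 mm device
        print(f"{pitch:>4.0f} um pitch: {n_pix} cells, "
              f"linearity {linearity(n_pe, n_pix):.2f}")
```

With these assumed numbers, the 5 µm device stays within about 7% of a linear response in this toy model, while the 15 µm device loses more than 40% of the signal to saturation.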

The main emphases of the RADiCAL R&D program are: (1) bench, beam and radiation testing of individual scintillator, wavelength shifter and photosensing elements; and (2) combining these into ultra-compact modular structures and characterizing their performance for measurement of energy, fast timing, and depth segmentation. Recent results and program plans will be presented.

Speaker: Randy Ruchti (University of Notre Dame (US)) -

70

Using Photosensitive Dopants to Enhance Large LArTPC Performance

A challenge in large LArTPCs is efficient photon collection for low-energy (MeV-scale) deposits. Past studies have demonstrated that augmenting traditional ionization-based calorimetry with information from the scintillation signals can greatly improve the precision of measurements of deposited energy. We propose the use of photosensitive dopants to efficiently convert the scintillation light of the liquid argon directly into ionization signals. This could enable the collection of more than 40% of all the scintillation information, a considerable improvement over conventional light collection solutions. We will discuss the implications this can have for LArTPC physics programs, what hints of performance improvements can be gathered from past studies, and what R&D we envision is needed to establish the use of these dopants in large LArTPCs.

Speaker: Joseph Zennamo (Fermi National Accelerator Laboratory) -

71

Progress on construction and qualification tests of the Mu2e electromagnetic calorimeter mechanical structures

The “muon-to-electron conversion” (Mu2e) experiment at Fermilab will search for the charged-lepton-flavour-violating, neutrinoless, coherent conversion of a muon into an electron in the field of an aluminum nucleus. The observation of this process would be unambiguous evidence of physics beyond the Standard Model. The Mu2e detectors comprise a straw tracker, an electromagnetic calorimeter, and an external veto for cosmic rays. The calorimeter provides excellent electron identification, complementary information to aid pattern recognition and track reconstruction, and a fast calorimetric online trigger. The detector has been designed as a state-of-the-art crystal calorimeter and employs 1340 pure cesium iodide (CsI) crystals read out by UV-extended silicon photosensors and fast front-end and digitization electronics. A design consisting of two identical annular matrices (named “disks”), separated by 70 cm and located downstream of the aluminum target along the muon beamline, satisfies the Mu2e physics requirements.

The hostile Mu2e operating conditions, in terms of radiation levels (a total ionizing dose of 12 krad and a neutron fluence of $5\times10^{10}$ n/cm$^2$ (1 MeV equivalent in Si) per year), magnetic field intensity (1 T), and vacuum level ($10^{-4}$ Torr), have posed tight constraints on the design of the detector mechanical structures and the choice of materials. The support structure of the two 670-crystal matrices employs two hollow aluminum rings and parts made of open-cell, vacuum-compatible carbon fiber. The photosensors and front-end electronics for each crystal are assembled in a single mechanical unit inserted in a machined copper holder. The 670 units are supported by a machined plate made of vacuum-compatible plastic. The plate also integrates the cooling system, a network of copper lines carrying a low-temperature, radiation-hard fluid, placed in thermal contact with the copper holders to form a low-resistance thermal bridge. The data acquisition electronics is hosted in custom aluminum crates positioned on the outer lateral surface of the two disks. The crates also integrate the electronics cooling system, with lines running in parallel to those of the front-end system.

In this talk we will review the constraints on the design of the calorimeter mechanical structures; the development from the conceptual design to the specifications of all structural components, including the mechanical and thermal simulations that determined the material and technology choices and the specifications of the cooling station; the status of component production; the component quality assurance tests; the detector assembly procedures; and the procedures for detector transportation and installation in the experimental area.

Speaker: Daniele Pasciuto (Istituto Nazionale di Fisica Nucleare - Pisa)

-

67

-

Top Quark: TOP 1I Track I

Track I

Zoom

Conveners: Doreen Wackeroth (SUNY Buffalo), Manfred Kraus (Florida State University)-

72

Toponium Phenomenology at the LHC

Measurements of dileptonic top-antitop events at the LHC have revealed several notable excesses. We examine the possibility that these excesses are a consequence of neglecting the non-perturbative enhancement of the production cross section near the $t\bar{t}$ threshold. While subdominant in terms of total rates, the so-far neglected toponium effects yield additional production of dileptonic systems with small invariant mass and small azimuthal angle separation, which could contribute to the above-mentioned deviations from the Standard Model. We propose a method to discover toponium in present and future data, and our results should pave the way for further experimental and phenomenological studies of toponium. A deeper understanding of the threshold behavior of top pair production is necessary to accurately determine the top quark mass, which is one of the most important parameters of the Standard Model.
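
The two observables singled out above, the dilepton invariant mass $m_{\ell\ell}$ and the azimuthal separation $\Delta\phi_{\ell\ell}$, can be computed from the lepton transverse momenta, pseudorapidities and azimuthal angles. The sketch below is standard massless-lepton kinematics, $m_{\ell\ell}^2 = 2p_{T1}p_{T2}(\cosh\Delta\eta - \cos\Delta\phi)$, offered for orientation only; it is not the selection or the toponium model used in the talk, and the function names are hypothetical.

```python
import math


def delta_phi(phi1: float, phi2: float) -> float:
    """Azimuthal separation folded into the range [0, π]."""
    dphi = abs(phi1 - phi2) % (2.0 * math.pi)
    return 2.0 * math.pi - dphi if dphi > math.pi else dphi


def dilepton_mass(pt1: float, eta1: float, phi1: float,
                  pt2: float, eta2: float, phi2: float) -> float:
    """Invariant mass of two massless leptons:
    m^2 = 2 pT1 pT2 (cosh Δη - cos Δφ)."""
    m2 = 2.0 * pt1 * pt2 * (math.cosh(eta1 - eta2) - math.cos(phi1 - phi2))
    return math.sqrt(max(m2, 0.0))  # guard against tiny negative rounding


if __name__ == "__main__":
    # Hypothetical lepton pair (pT in GeV), close together in η and φ.
    l1 = (60.0, 0.3, 0.20)
    l2 = (45.0, 0.1, -0.25)
    print(f"m_ll    = {dilepton_mass(*l1, *l2):.1f} GeV")
    print(f"dphi_ll = {delta_phi(l1[2], l2[2]):.2f} rad")
```

Leptons that are close in both η and φ give small $m_{\ell\ell}$ and small $\Delta\phi_{\ell\ell}$ simultaneously, which is the corner of phase space where the abstract locates the toponium contribution.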

Speaker: Ya-Juan Zheng -

73

Flavor-changing top decays to charm and Higgs with $\tau \tau$ at the LHC

We investigate the prospects of discovering the top quark decay into a charm quark and a Higgs boson ($t \to c h^0$) in top quark pair production at the CERN Large Hadron Collider (LHC).